Running OpenHands LM 32B with Featherless.ai: A Practical Guide

The landscape of AI-powered software development is evolving at breakneck speed, and the release of OpenHands LM 32B marks a significant leap forward. This powerful, open-source coding model, boasting an impressive 37.2% resolve rate on SWE-Bench Verified, brings enterprise-grade AI assistance directly to your local environment. By pairing it with Featherless.ai's efficient model hosting, you create a potent yet accessible development setup. Whether you're a solo developer aiming to accelerate your workflow or part of a team seeking freedom from proprietary solutions, this combination offers compelling advantages.

Why Choose Featherless.ai for Your OpenHands LM Deployment?

Running large models like OpenHands LM 32B locally can be resource-intensive. Featherless.ai provides an elegant solution, standing out in the crowded AI inference market with its unique approach to model hosting:

- Vast Model Catalog: Easily access an extensive library of models, including OpenHands LM 32B.

- Predictable Subscription Pricing: Enjoy straightforward costs with a subscription, avoiding volatile pay-per-token fees.

Ready to get started? The first step is installing the OpenHands application itself.

Installing OpenHands

To get started with OpenHands, you'll need to install the application first. The installation process varies depending on your operating system and device. I recommend following the official OpenHands Installation Guide provided by All Hands.

The documentation provides detailed instructions for:

- Installing on macOS, Windows, and Linux

- System requirements

- Configuration options

- Troubleshooting common installation issues

Ensure you have OpenHands installed and running correctly before proceeding to connect it with the powerful LM hosted on Featherless.ai.
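One quick way to confirm that OpenHands is up before moving on is to check whether anything is accepting connections on its port. The sketch below is a minimal check, assuming the usual localhost:3000 address mentioned later in this guide; the helper name is_listening is made up for this example, and a successful connection only shows that some process is listening there, not that it is OpenHands.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved host names.
        return False


if __name__ == "__main__":
    # Assumption: OpenHands is served on localhost:3000; adjust if yours differs.
    if is_listening("localhost", 3000):
        print("Something is listening on localhost:3000.")
    else:
        print("Nothing is listening on localhost:3000. Is OpenHands running?")
```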

Connecting OpenHands to Featherless.ai

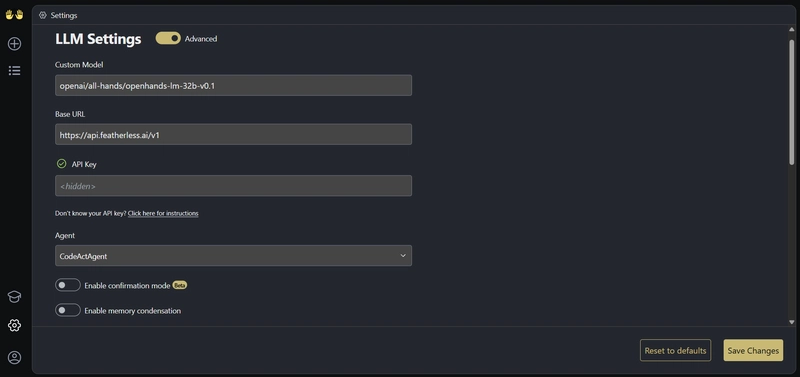

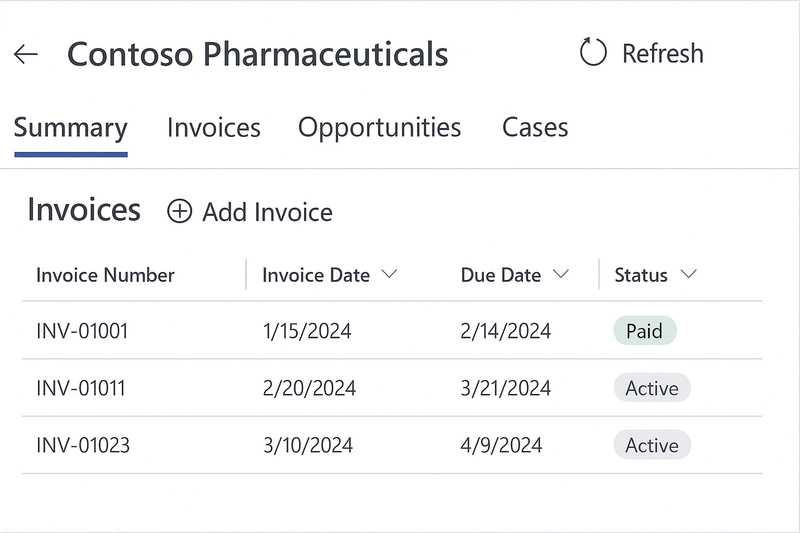

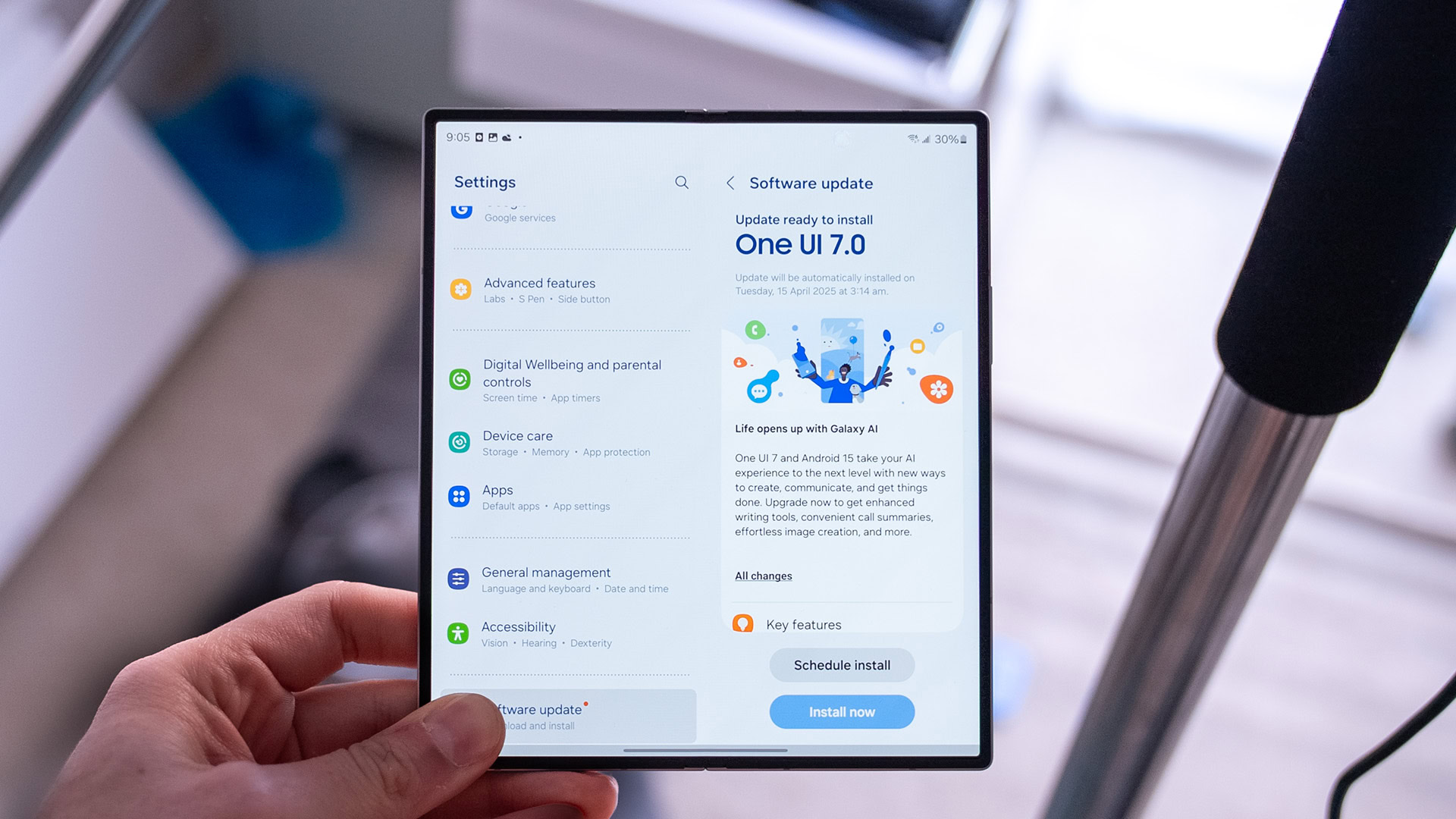

With the OpenHands application installed, it's time to connect it to the OpenHands LM 32B model running efficiently on Featherless.ai. This integration unlocks the model's power without requiring local GPU resources. Here's how to set up the integration:

- First, you'll need to create an account on Featherless.ai if you don't already have one.

- Once logged in, navigate to your account settings to obtain your API key. This key will authenticate your requests to the Featherless.ai API.

- Open the OpenHands application (usually available at localhost:3000).

- Go to the settings page (gear icon, typically at the bottom left).

- Since Featherless.ai provides an OpenAI-compatible API endpoint, we can configure OpenHands to use it by setting the following options within the application:

  - Enable Advanced options (toggle switch).

  - Set the following:

    - Custom Model to openai/all-hands/openhands-lm-32b-v0.1. The openai/ prefix tells OpenHands to use the OpenAI API format with the specified model available on Featherless.ai.

    - Base URL to https://api.featherless.ai/v1

    - API Key to your Featherless API Key

    - Disable memory condensation

  - Fill in your Git Provider Settings if necessary (e.g., GitHub token).

  - Save Changes!
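If OpenHands reports an authentication or model error after saving, it can help to test the same Base URL, API key, and model outside the application. The following is a rough sketch, not an official client: it assumes the endpoint accepts the standard OpenAI-style chat/completions request, that the model ID on Featherless is the Custom Model value without the openai/ prefix, and that your key is stored in an environment variable named FEATHERLESS_API_KEY (a name chosen for this example).

```python
import json
import os
import urllib.request

BASE_URL = "https://api.featherless.ai/v1"
# Assumption: the Featherless model ID is the Custom Model value minus "openai/".
MODEL_ID = "all-hands/openhands-lm-32b-v0.1"


def build_chat_request(api_key: str, prompt: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # FEATHERLESS_API_KEY is a hypothetical variable name for this sketch.
    key = os.environ.get("FEATHERLESS_API_KEY")
    if key is None:
        print("Set FEATHERLESS_API_KEY to send a test request.")
    else:
        request = build_chat_request(key, "Write a one-line Python hello world.")
        with urllib.request.urlopen(request, timeout=60) as response:
            body = json.load(response)
        # OpenAI-style responses carry the reply under choices[0].message.content.
        print(body["choices"][0]["message"]["content"])
```

If this request succeeds but OpenHands still fails, the problem is more likely in the application settings (for example, a missing openai/ prefix in Custom Model) than in the key or endpoint.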

That's all it takes! Your OpenHands application is now powered by OpenHands LM 32B via Featherless.ai. Start experimenting! Try feeding it complex coding challenges, asking it to refactor code, or even having it resolve GitHub issues directly. You might be surprised by its problem-solving prowess. Furthermore, this same setup process works with other models available in the Featherless.ai catalog, like the recent DeepSeek V3.
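Switching to another catalog model should only require changing the Custom Model value, which follows the same pattern as above: the openai/ prefix followed by the model's ID on Featherless. As a small illustration, the helpers below (hypothetical names, written for this guide) convert between the two forms.

```python
OPENAI_PREFIX = "openai/"


def to_custom_model(featherless_model_id: str) -> str:
    """Return the value for the OpenHands Custom Model field."""
    model_id = featherless_model_id.strip()
    if not model_id:
        raise ValueError("model ID must not be empty")
    # Always prepend the prefix so IDs that themselves start with "openai/" stay unambiguous.
    return OPENAI_PREFIX + model_id


def from_custom_model(custom_model: str) -> str:
    """Strip a single leading "openai/" prefix to recover the Featherless model ID."""
    return custom_model.removeprefix(OPENAI_PREFIX)  # requires Python 3.9+


if __name__ == "__main__":
    print(to_custom_model("all-hands/openhands-lm-32b-v0.1"))
    # prints "openai/all-hands/openhands-lm-32b-v0.1"
```

For any other model, copy its exact ID from the Featherless.ai catalog rather than guessing it.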

Ready to start building? Head over to https://featherless.ai/ to create an account. Our growing community of developers, enthusiasts, and AI practitioners is here to help you get the most out of Featherless:

- Join our Discord community to connect with other users

- Follow us on Twitter (@FeatherlessAI) for the latest updates

We look forward to seeing what you create and share with the community.
