Optimizing SQL queries for your AI Agents.

So, you're building the next generation of Agentic AI, a smart, autonomous system ready to understand, reason, and act. Fantastic! But where does this digital brain get its crucial "proprietary knowledge" or the specific context it needs to make intelligent decisions? Often, it's from your trusty SQL database, queried to pull in everything from user history and product catalogs to real-time sensor data that forms the bedrock of its understanding. You've designed your agent, given it goals, and it's ready to interact. But then... the pause. That moment your agent needs to "think" to fetch that vital piece of information from the database to build context and it feels like an eternity. Your sophisticated agent, designed for dynamic action, is suddenly hamstrung by a slow SQL query, turning its "real-time" reasoning into "just-a-minute" frustration. This, my friends, is where SQL query optimization becomes absolutely critical for potent Agentic AI. It's like tasking a brilliant detective with solving a case, but every time they need a clue (a piece of data), they have to manually sift through mountains of unsorted files in a dusty archive. The detective is smart, but the information retrieval is crippling their speed and effectiveness. Our agents are no different. How do we ensure our AI agents get their context fast and efficiently? Drawing from alrady existing solid optimization principles, let's look at the game plan: Become a Data Profiler with EXPLAIN: Before your agent can act intelligently, you need to understand how it's getting its intelligence. EXPLAIN (or its equivalent) unveils the database's strategy for fetching data. Red Flag for Agents: Is it scanning millions of rows for a small piece of context your agent needs right now? That's a bottleneck that directly impacts agent responsiveness. Costly SORT Operations: If the agent needs sorted data for its logic, these can be memory hogs, slowing down the entire context-building process. Full Table Scans: The arch-nemesis! Your agent shouldn't wait for the DB to read an entire table to understand a specific situation. Sharpen the Agent's Focus (Tune the Query Itself!): This is often where the biggest wins for agent performance lie. Filter with Precision (WHERE clause): Equip your agent's queries to be surgical. Only fetch the exact data needed for the current task or decision. The more targeted the WHERE clause, the faster the context is built. Efficient JOINs: If your agent needs to combine information from multiple tables (e.g., user profile + interaction history), ensure those JOINs are lean and mean. Concise IN Lists: If your agent is checking against a set of known items, keep that list tight. Create Fast-Access Lanes with Indexes: Think of indexes as creating a super-efficient filing system for the data your agent frequently needs. Identify columns your agent often uses for lookups in its WHERE clauses or to ORDER BY for its reasoning. Indexing these is like giving your agent a direct line to the information. Agent Benefit: Faster context retrieval = quicker decision-making = a more responsive and seemingly "smarter" agent. Caution: Don't just slap indexes everywhere. They speed up reads (good for agents fetching context) but can slow down writes. Use EXPLAIN to validate their impact. Major Overhauls for Complex Agent Needs: When agents deal with massive, evolving datasets: Table Partitioning: If your agent reasons over time-series data (e.g., daily user activity), partitioning by date can mean it only queries the relevant recent slice of data, dramatically speeding up context gathering. Data Structure Redesign: Sometimes, the way data is structured needs to align better with how your agent thinks and accesses information. This is a deep dive, but for complex agents, it can be transformative. If you dev with python like myself, here's how to how to use EXPLAIN to inspect potential performance bottlenecks like full table scans and costly sorts, which can directly affect AI agent responsiveness. Suppose you have postgres setup somewhere and your table schema looks like this: CREATE TABLE documents ( id SERIAL PRIMARY KEY, title TEXT, content TEXT, created_at TIMESTAMP DEFAULT NOW() ); Create a python file and enter this: import psycopg2 # Connect to your PostgreSQL database conn = psycopg2.connect( dbname="your_db", user="your_user", password="your_password", host="localhost", port="5432" ) cur = conn.cursor() # The actual query the AI agent might run query = """ SELECT * FROM documents WHERE content ILIKE '%recycling%' ORDER BY created_at DESC LIMIT 10; """ # Use EXPLAIN ANALYZE to see what PostgreSQL does under the hood cur.execute(f"EXPLAIN (ANALYZE, BUFFERS, VERBOSE) {query}") plan = cur.fetchall() print("Query Plan:\n") for row in plan: print(row[0]) cur.close() conn.close() Save and run the file, then observe the re

So, you're building the next generation of Agentic AI, a smart, autonomous system ready to understand, reason, and act. Fantastic! But where does this digital brain get its crucial "proprietary knowledge" or the specific context it needs to make intelligent decisions? Often, it's from your trusty SQL database, queried to pull in everything from user history and product catalogs to real-time sensor data that forms the bedrock of its understanding.

You've designed your agent, given it goals, and it's ready to interact. But then... the pause. That moment your agent needs to "think" to fetch that vital piece of information from the database to build context and it feels like an eternity. Your sophisticated agent, designed for dynamic action, is suddenly hamstrung by a slow SQL query, turning its "real-time" reasoning into "just-a-minute" frustration. This, my friends, is where SQL query optimization becomes absolutely critical for potent Agentic AI.

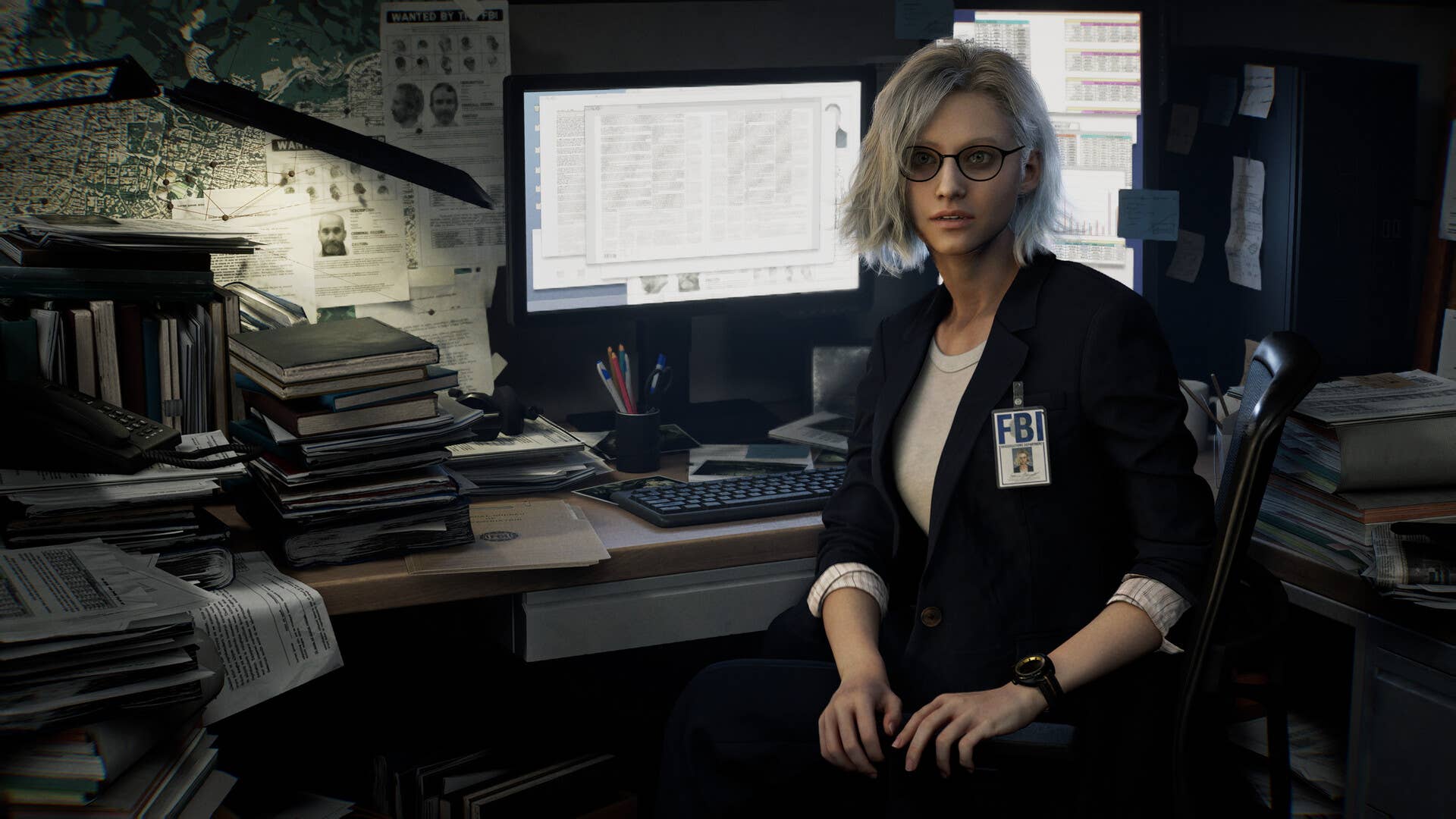

It's like tasking a brilliant detective with solving a case, but every time they need a clue (a piece of data), they have to manually sift through mountains of unsorted files in a dusty archive. The detective is smart, but the information retrieval is crippling their speed and effectiveness. Our agents are no different.

How do we ensure our AI agents get their context fast and efficiently? Drawing from alrady existing solid optimization principles, let's look at the game plan:

Become a Data Profiler with EXPLAIN:

Before your agent can act intelligently, you need to understand how it's getting its intelligence.

EXPLAIN(or its equivalent) unveils the database's strategy for fetching data.Red Flag for Agents: Is it scanning millions of rows for a small piece of context your agent needs right now? That's a bottleneck that directly impacts agent responsiveness.

Costly

SORTOperations: If the agent needs sorted data for its logic, these can be memory hogs, slowing down the entire context-building process.Full Table Scans: The arch-nemesis! Your agent shouldn't wait for the DB to read an entire table to understand a specific situation.

Sharpen the Agent's Focus (Tune the Query Itself!):

This is often where the biggest wins for agent performance lie.

Filter with Precision (WHERE clause): Equip your agent's queries to be surgical. Only fetch the exact data needed for the current task or decision. The more targeted the WHERE clause, the faster the context is built.

Efficient

JOINs: If your agent needs to combine information from multiple tables (e.g., user profile + interaction history), ensure those JOINs are lean and mean.Concise

INLists: If your agent is checking against a set of known items, keep that list tight.

Create Fast-Access Lanes with Indexes:

Think of indexes as creating a super-efficient filing system for the data your agent frequently needs.

Identify columns your agent often uses for lookups in its

WHEREclauses or toORDER BYfor its reasoning. Indexing these is like giving your agent a direct line to the information.Agent Benefit: Faster context retrieval = quicker decision-making = a more responsive and seemingly "smarter" agent.

Caution: Don't just slap indexes everywhere. They speed up reads (good for agents fetching context) but can slow down writes. Use

EXPLAINto validate their impact.

Major Overhauls for Complex Agent Needs:

When agents deal with massive, evolving datasets:

Table Partitioning: If your agent reasons over time-series data (e.g., daily user activity), partitioning by date can mean it only queries the relevant recent slice of data, dramatically speeding up context gathering.

Data Structure Redesign: Sometimes, the way data is structured needs to align better with how your agent thinks and accesses information. This is a deep dive, but for complex agents, it can be transformative.

If you dev with python like myself, here's how to how to use EXPLAIN to inspect potential performance bottlenecks like full table scans and costly sorts, which can directly affect AI agent responsiveness.

Suppose you have postgres setup somewhere and your table schema looks like this:

CREATE TABLE documents (

id SERIAL PRIMARY KEY,

title TEXT,

content TEXT,

created_at TIMESTAMP DEFAULT NOW()

);

Create a python file and enter this:

import psycopg2

# Connect to your PostgreSQL database

conn = psycopg2.connect(

dbname="your_db",

user="your_user",

password="your_password",

host="localhost",

port="5432"

)

cur = conn.cursor()

# The actual query the AI agent might run

query = """

SELECT * FROM documents

WHERE content ILIKE '%recycling%'

ORDER BY created_at DESC

LIMIT 10;

"""

# Use EXPLAIN ANALYZE to see what PostgreSQL does under the hood

cur.execute(f"EXPLAIN (ANALYZE, BUFFERS, VERBOSE) {query}")

plan = cur.fetchall()

print("Query Plan:\n")

for row in plan:

print(row[0])

cur.close()

conn.close()

Save and run the file, then observe the result. And if you are not understanding what you are seeing, you know what to do.

My Takeaway for AI Devs:

For Agentic AI, the speed and efficiency of data retrieval for context building are not just "database concerns", they are core to agent performance. A slow query means a slow-thinking agent, leading to poor user experience or ineffective automation. Regularly optimizing the SQL queries that feed your agents is like sharpening their cognitive tools. Use EXPLAIN religiously, prioritize query tuning and intelligent indexing.

How are you ensuring your agents get the data they need, when they need it, without the lag? Share your strategies for performant context-building!

![[The AI Show Episode 156]: AI Answers - Data Privacy, AI Roadmaps, Regulated Industries, Selling AI to the C-Suite & Change Management](https://www.marketingaiinstitute.com/hubfs/ep%20156%20cover.png)

![[The AI Show Episode 155]: The New Jobs AI Will Create, Amazon CEO: AI Will Cut Jobs, Your Brain on ChatGPT, Possible OpenAI-Microsoft Breakup & Veo 3 IP Issues](https://www.marketingaiinstitute.com/hubfs/ep%20155%20cover.png)

_incamerastock_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

_Brain_light_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Senators reintroduce App Store bill to rein in ‘gatekeeper power in the app economy’ [U]](https://i0.wp.com/9to5mac.com/wp-content/uploads/sites/6/2025/06/app-store-senate.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)