Mind the Gap: Bridging AI and Human Communication


AI agents are getting smarter by the day — solving tasks, querying APIs, summarizing data, and even collaborating with other agents. But something still feels… robotic. Cold. Sometimes even frustrating.

Why?

Because while our agents may process language, they don’t truly communicate like humans do.

In this post, I want to share an idea: what if we made AI protocols more human by design — not just functional, but semantically rich, emotionally aware, and socially intelligent?

What’s Missing in AI Communication?

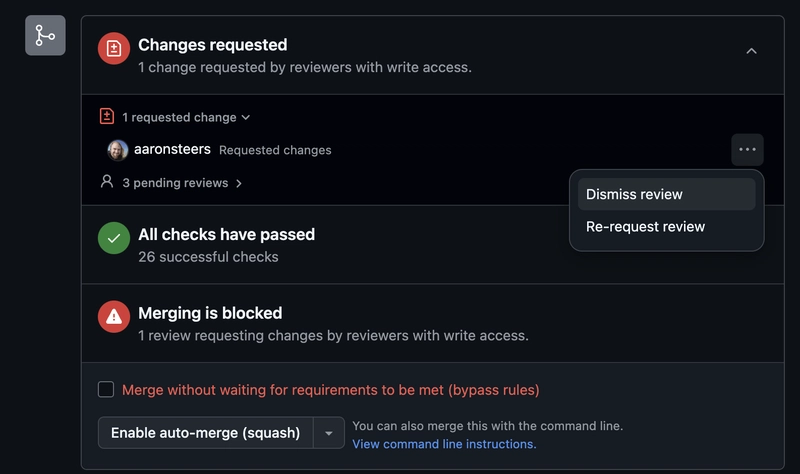

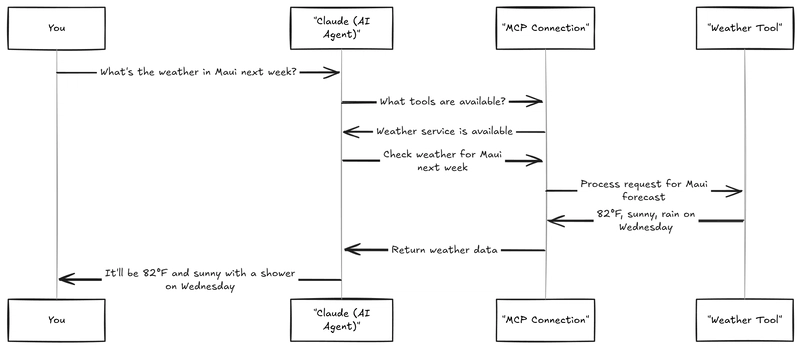

Traditional agent communication focuses on information, not meaning. Protocols like MCP (Model Context Protocol) and A2A (Agent2Agent) mostly lack the deeper layers of human communication, such as:

| Aspect | What Humans Do | What Most AI Agents Miss |
| --- | --- | --- |
| Intent | We say things to ask, inform, joke, suggest | AI rarely marks its purpose explicitly |
| Emotion | We signal frustration, joy, sarcasm | Most agents ignore emotional tone |
| Clarification | “Wait, what do you mean?” | Agents just move on or hallucinate answers |
| Confidence | “I think…” vs. “I’m sure” | No confidence levels in many agent messages |
| Tone & Politeness | “Hey, could you help me?” | Agents are often blunt or too formal |
| Roles | Friend? Boss? Intern? | Agents don’t adapt tone based on roles |
| Trust | We assess who’s credible or not | AI doesn’t share or track trustworthiness |
| Intuition | We often “just know” when something feels off, even without full data | AI requires explicit input or context; it can’t yet “sense” urgency, danger, or hidden intent unless directly told |

Real-Life Example - Meeting Scheduling

Imagine you’re using an AI agent to schedule a meeting for your team.

You: “Create a meeting with all teammates at the most suitable hour this week.”

Most agents might reply with:

“Meeting scheduled: Wednesday at 12:00 PM. All participants invited.”

It did the job — but it missed the human factors.

A more human-aware agent could respond:

“I’ve checked everyone’s calendars and found a time when no one is in deep work or back-to-back meetings. Wednesday at noon seems ideal. However, Sam needs to leave by 1:00 PM, and Amanda has had a tough week and hasn’t caught up on her tasks. Does this time still work for you, or should we consider another option?”

- It understands the intent — not just “find a free time,” but “make it convenient and considerate.”

- It recognizes team dynamics — avoiding context-switching and meeting fatigue.

- It reflects tone and flexibility.

- It invites clarification before locking in a decision.

That’s a smarter, more thoughtful interaction — one that shows emotional and social intelligence, not just scheduling.
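The difference between the two replies can be sketched in a few lines of Python. This is only an illustration under assumptions: the `Slot` class, its `hard_conflicts` and `concerns` fields, and the `propose` function are hypothetical names invented here, and the sample slots are made up. The idea is that the agent keeps soft, human factors alongside hard calendar conflicts, and asks for confirmation whenever the best slot still carries a concern.

```python
from dataclasses import dataclass, field


@dataclass
class Slot:
    label: str
    hard_conflicts: int = 0  # people who cannot attend at all
    concerns: list[str] = field(default_factory=list)  # soft, human factors


def propose(slots: list[Slot]) -> str:
    # Only consider slots that everyone can attend.
    feasible = [s for s in slots if s.hard_conflicts == 0]
    if not feasible:
        return "I couldn't find a time that works for everyone. Should I look at next week?"
    # Prefer the slot with the fewest soft concerns.
    best = min(feasible, key=lambda s: len(s.concerns))
    if not best.concerns:
        return f"{best.label} works for everyone. Shall I send the invite?"
    # Surface the remaining concerns and invite clarification instead of deciding silently.
    notes = "; ".join(best.concerns)
    return (
        f"{best.label} looks best, but note: {notes}. "
        "Does this still work, or should I look for another option?"
    )


reply = propose([
    Slot("Tuesday at 3:00 PM", hard_conflicts=1),
    Slot("Wednesday at noon", concerns=["Sam needs to leave by 1:00 PM"]),
    Slot("Friday at 5:00 PM", concerns=["end of the week", "two people are in back-to-back meetings"]),
])
print(reply)
```

A real agent would need a much richer model of people and calendars; the point of the sketch is only that the reply ends with a question whenever something might matter to the humans involved.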

Real-Life Example - Better Assistant for Psychologists

AI can also support psychologists by helping them be more accurate and attentive with their patients. For instance:

- Early signs of depression, anxiety, or PTSD can be flagged faster using tone recognition rather than text alone.

- Subtle changes in speech patterns, tone, or expression that might signal emotional distress can be analyzed.

By enhancing observation with more context and data, AI can become a more valuable partner in mental health: improving care, not replacing it.

How Do We Fix This?

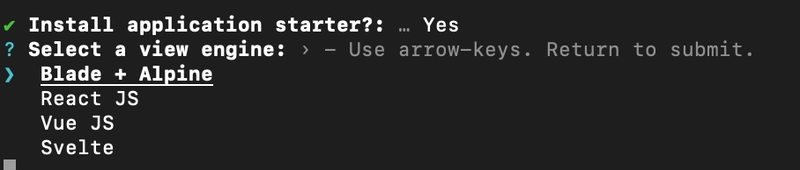

We might need to upgrade our protocols to include more human-like metadata in every message agents send to each other or to users.

A simple example:

    {
      "intent": "suggest",
      "tone": "collaborative",
      "confidence": 0.78,
      "role": "assistant",
      "emotion": "neutral-curious",
      "goal": "recommend_best_option",
      "rationale": "based_on_past_user_feedback_and_context",
      "message": "Would you like me to try an alternative approach?",
      "context": "{{members_data / any}}"
    }
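To make the message shape above a little more concrete, here is a minimal Python sketch of how such an envelope might be typed and validated before it is sent. Everything here is an assumption for illustration: the `AgentMessage` class and the `ALLOWED_INTENTS` vocabulary are hypothetical and are not part of MCP, A2A, or any existing protocol. The field names simply mirror the example.

```python
import json
from dataclasses import dataclass, asdict

# Hypothetical vocabulary; the post does not define an official list of intents.
ALLOWED_INTENTS = {"ask", "inform", "suggest", "clarify"}


@dataclass(frozen=True)
class AgentMessage:
    intent: str
    tone: str
    confidence: float
    role: str
    emotion: str
    goal: str
    rationale: str
    message: str
    context: str = ""

    def __post_init__(self):
        # Reject metadata that a receiving agent could not interpret.
        if self.intent not in ALLOWED_INTENTS:
            raise ValueError(f"unknown intent: {self.intent!r}")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "AgentMessage":
        return cls(**json.loads(raw))


example = AgentMessage(
    intent="suggest",
    tone="collaborative",
    confidence=0.78,
    role="assistant",
    emotion="neutral-curious",
    goal="recommend_best_option",
    rationale="based_on_past_user_feedback_and_context",
    message="Would you like me to try an alternative approach?",
)
print(example.to_json())
```

Validating at construction time means a malformed message fails on the sender's side, before another agent has to guess what a missing intent or an out-of-range confidence was supposed to mean.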

More data and context should help AI models give more accurate answers and act more appropriately while performing a task.
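One way a receiving agent might put this metadata to use is to let the `intent` and `confidence` fields decide what happens next. The sketch below is an assumption, not an established rule: the `next_action` function, its return values, and the 0.5 and 0.9 thresholds are hypothetical and would need tuning for any real system.

```python
def next_action(intent: str, confidence: float) -> str:
    # Thresholds are illustrative assumptions, not values from any protocol.
    if confidence < 0.5:
        # Too uncertain to act on: ask instead of guessing.
        return "ask_for_clarification"
    if intent == "suggest" or confidence < 0.9:
        # Suggestions and moderately confident messages get a human check.
        return "confirm_with_user"
    return "proceed"


print(next_action("suggest", 0.78))
print(next_action("inform", 0.95))
print(next_action("inform", 0.30))
```

With the example message above (intent `suggest`, confidence 0.78), this policy would confirm with the user rather than act on its own.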

The Benefits of Humanizing Agents

- Better multi-agent collaboration (agents understand goals, roles, trust)

- More natural human-AI interaction (especially in assistants, copilots, therapy bots, etc.)

- Improved AI safety and transparency (you know why an agent made a decision)

- A smaller gap between software behavior and human intentions

Final Thought

I truly believe that the future will be better with AI agents that communicate with meaning, not just text.

When agents become more like humans — not in personality, but in understanding — they’ll become not just useful assistants, but trusted collaborators.

And after all, human communication is one of our greatest strengths as a species.

It’s time our agents learned a bit of it too.
