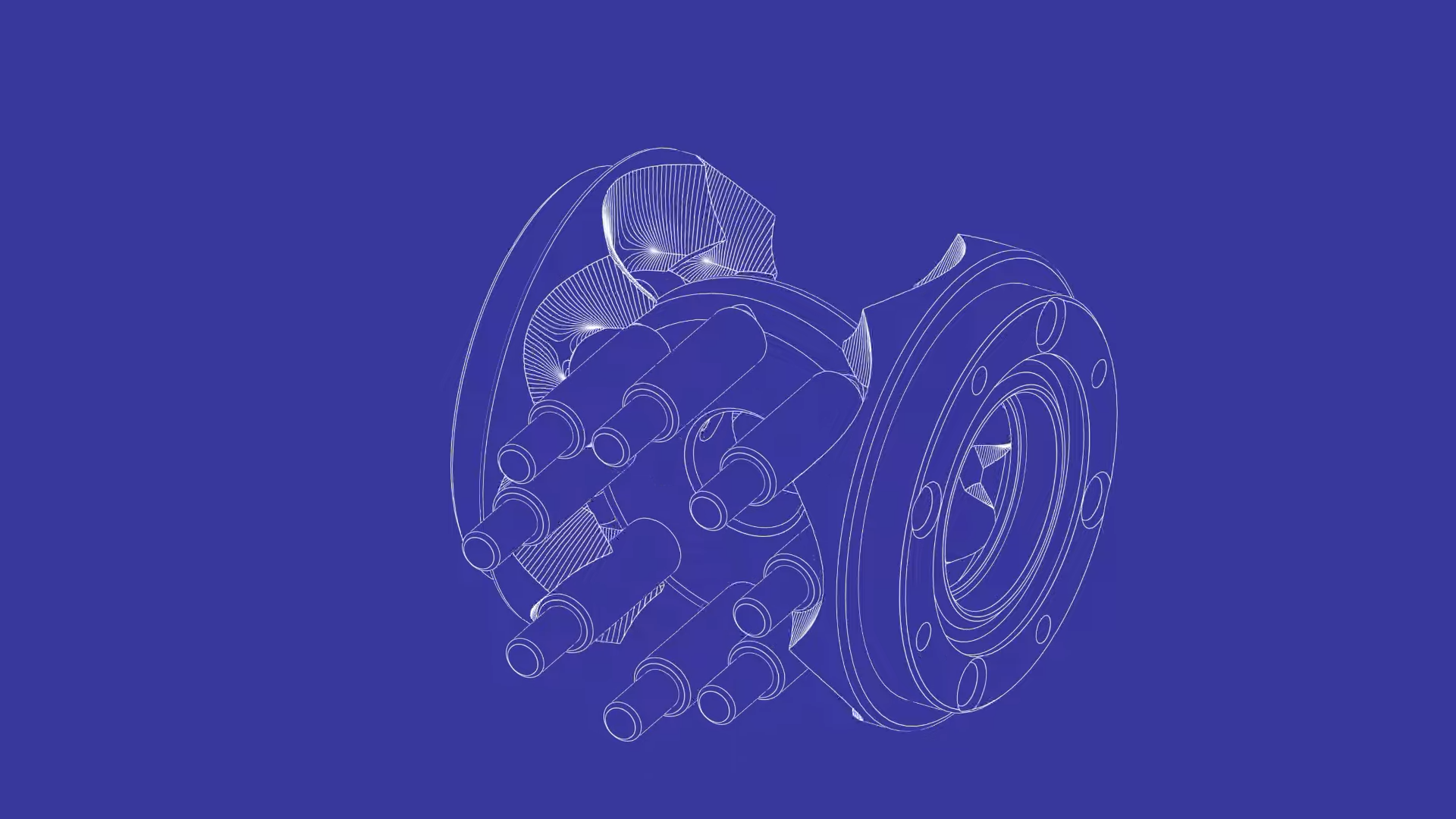

Choosing the Eyes of the Autonomous Vehicle: A Battle of Sensors, Strategies, and Trade-Offs

By 2030, the autonomous vehicle market is expected to surpass $2.2 trillion, with millions of cars navigating roads using AI and advanced sensor systems. Yet amid this rapid growth, a fundamental debate remains unresolved: which sensors are best suited for autonomous driving — lidars, cameras, radars, or something entirely new?

This question is far from academic. The choice of sensors affects everything from safety and performance to cost and energy efficiency. Some companies, like Waymo, bet on redundancy and variety, outfitting their vehicles with a full suite of lidars, cameras, and radars. Others, like Tesla, pursue a more minimalist and cost-effective approach, relying heavily on cameras and software innovation.

Let's explore these diverging strategies, the technical paradoxes they face, and the business logic driving their decisions.

Why Smarter Machines Demand Smarter Energy Solutions

This trade-off is familiar to me. I faced a similar dilemma when I launched a drone startup in 2013. We were trying to build drones capable of tracking human movement. The idea was ahead of its time, but it soon became clear that it ran into a technical paradox.

For a drone to track an object, it must analyze sensor data, which requires an onboard computer. The more powerful that computer is, the more energy it consumes, so the drone needs a higher-capacity battery. A larger battery, in turn, adds weight, and more weight requires even more energy to stay airborne. A vicious cycle arises: increasing power demands lead to higher energy consumption, weight, and ultimately, cost.
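The feedback loop can be made concrete with a toy model. The sketch below is illustrative only: the function name and every number in it (frame mass, hover power per kilogram, flight time, battery energy density) are hypothetical assumptions, not figures from the startup described above. It repeatedly re-sizes the battery until the battery can also carry its own weight.

```python
def battery_mass_kg(compute_w, frame_kg=1.0, hover_w_per_kg=150.0,
                    flight_h=0.5, density_wh_per_kg=200.0, iterations=200):
    """Toy fixed-point model of the compute -> battery -> weight loop.

    All default values are hypothetical, chosen only for illustration.
    """
    battery_kg = 0.0
    for _ in range(iterations):
        total_kg = frame_kg + battery_kg
        # Power = onboard computer + power needed to keep the mass airborne.
        power_w = compute_w + hover_w_per_kg * total_kg
        # Battery mass needed to supply that power for the whole flight.
        battery_kg = power_w * flight_h / density_wh_per_kg
    return battery_kg


light = battery_mass_kg(compute_w=10.0)   # modest onboard computer
heavy = battery_mass_kg(compute_w=60.0)   # 50 W more compute
naive_extra = 50.0 * 0.5 / 200.0          # battery for the extra 50 W alone

print(f"battery with 10 W compute: {light:.2f} kg")
print(f"battery with 60 W compute: {heavy:.2f} kg")
print(f"extra battery: {heavy - light:.3f} kg (naive estimate: {naive_extra:.3f} kg)")
```

Under these assumed numbers, the extra 50 W of compute costs about 0.20 kg of battery rather than the 0.125 kg a naive estimate would give, because the added battery mass must itself be lifted.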

The same problem applies to autonomous vehicles. On the one hand, you want to equip the vehicle with all possible sensors to collect as much data as possible, synchronize it, and make the most accurate decisions. On the other hand, this significantly increases the system's cost and energy consumption. It’s important to consider not only the cost of the sensors themselves but also the energy required to process their data.

The amount of data is increasing, and the computational load is growing. Of course, over time, computing systems have become more compact and energy-efficient, and software has become more optimized. In the 1980s, processing a 10×10 pixel image could take hours; today, systems analyze 4K video in real time and perform additional computations on the device without consuming excessive energy. However, the performance dilemma remains, and AV companies are improving not only sensors but also computational hardware and optimization algorithms.

Processing or Perception?

When a system has to decide which data to drop, the cause is usually a computational limitation rather than a problem with the LiDAR, camera, or radar sensors themselves. These sensors function as the vehicle's eyes and ears, continuously capturing vast amounts of environmental data. However, if the onboard computing “brain” lacks the processing power to handle all this information in real time, it becomes overwhelmed. As a result, the system must prioritize certain data streams over others, potentially ignoring some objects or scenes in specific situations to focus on higher-priority tasks.

This computational bottleneck means that even if the sensors are functioning perfectly, and often they have redundancies to ensure reliability, the vehicle may still struggle to process all the data effectively. Blaming the sensors isn't appropriate in this context because the issue lies in the data processing capacity. Enhancing computational hardware and optimizing algorithms are essential steps to mitigate these challenges. By improving the system's ability to handle large data volumes, autonomous vehicles can reduce the likelihood of missing critical information, leading to safer and more reliable operations.
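The prioritization described above can be sketched as a simple budgeted scheduler. This is a minimal illustration, not how any particular AV stack works: the task names, priority scale, per-frame costs, and the 50 ms budget are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class SensorTask:
    name: str
    priority: int    # higher = more safety-critical (hypothetical scale)
    cost_ms: float   # assumed processing time per frame


def schedule(tasks, budget_ms):
    """Greedily keep the highest-priority tasks that fit in the frame budget."""
    kept, dropped = [], []
    remaining = budget_ms
    for task in sorted(tasks, key=lambda t: t.priority, reverse=True):
        if task.cost_ms <= remaining:
            kept.append(task.name)
            remaining -= task.cost_ms
        else:
            dropped.append(task.name)
    return kept, dropped


tasks = [
    SensorTask("front_camera_detection", 10, 18.0),
    SensorTask("lidar_obstacle_map", 9, 25.0),
    SensorTask("radar_tracking", 8, 5.0),
    SensorTask("rear_camera_detection", 4, 18.0),
    SensorTask("roadside_sign_ocr", 2, 12.0),
]

kept, dropped = schedule(tasks, budget_ms=50.0)
print("processed:", kept)
print("dropped:  ", dropped)
```

In this example every sensor delivers its data correctly, yet the two lowest-priority streams are never processed because the compute budget runs out first. Raising `budget_ms` (faster hardware) or lowering `cost_ms` (better algorithms) is what brings them back.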

Lidar, Camera, and Radar Systems: Pros & Cons

It’s impossible to say that one type of sensor is better than another — each serves its own purpose. Problems are solved by selecting the appropriate sensor for a specific task.

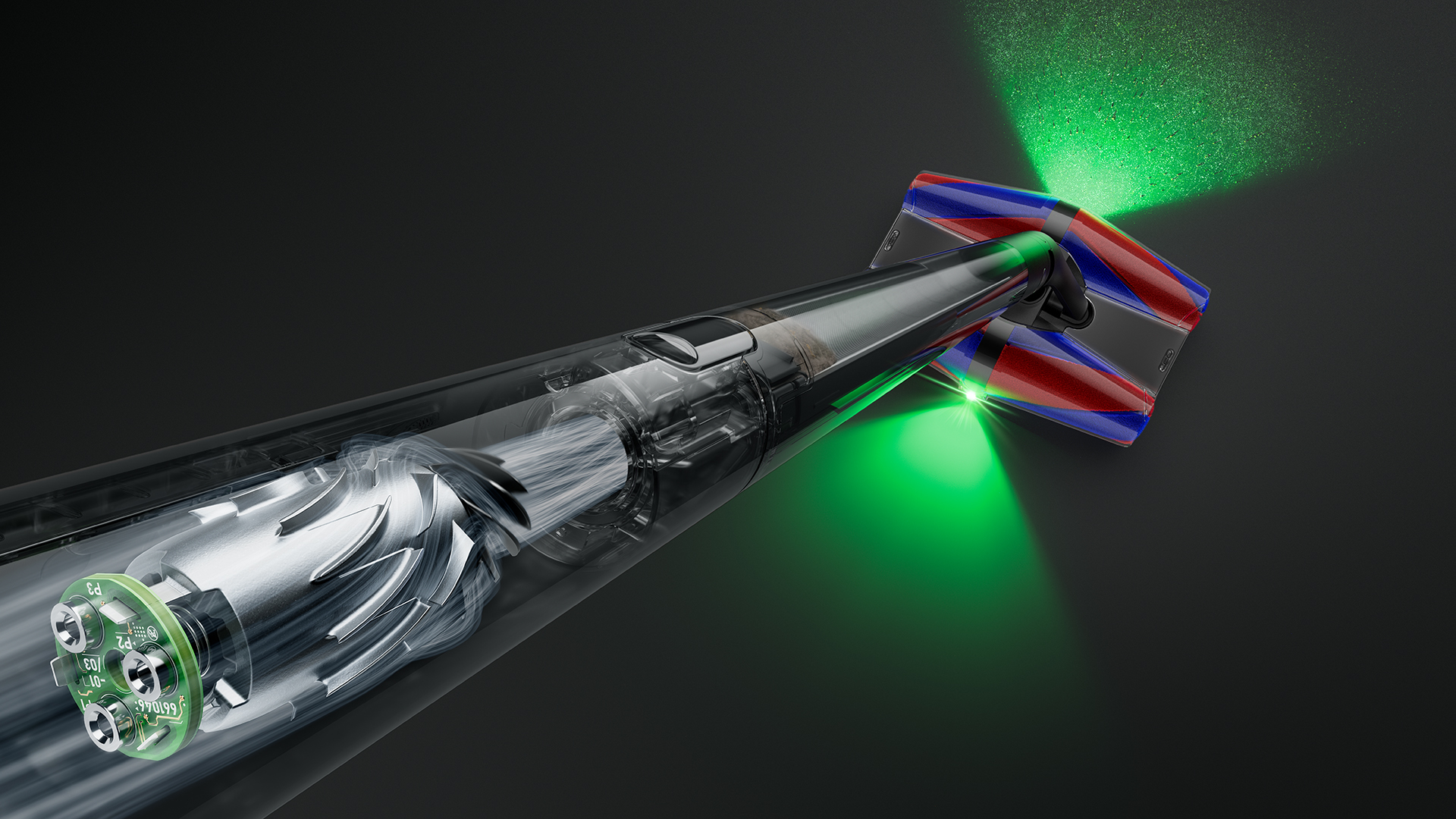

LiDAR, while offering precise 3D mapping, is expensive and struggles in adverse weather conditions like rain and fog, which can scatter its laser signals. It also requires significant computational resources to process its dense data.

Cameras, though cost-effective, are highly dependent on lighting conditions, performing poorly in low light, glare, or rapid lighting changes. They also lack inherent depth perception and struggle with obstructions like dirt, rain, or snow on the lens.

Radar is reliable in detecting objects in various weather conditions, but its low resolution makes it hard to distinguish between small or closely spaced objects. It often generates false positives, detecting irrelevant items that can trigger unnecessary responses. Additionally, unlike cameras, radar cannot interpret context or help identify objects visually.

By leveraging sensor fusion — combining data from LiDAR, radar, and cameras — these systems gain a more holistic and accurate understanding of their environment, which in turn enhances both safety and real-time decision-making. Keymakr's collaboration with leading ADAS developers has shown how critical this approach is to system reliability. We’ve consistently worked on diverse, high-quality datasets to support model training and refinement.
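One textbook way to combine measurements from several sensors is inverse-variance weighting, where more precise sensors get more say in the result. The sketch below applies it to a single distance estimate. It is a simplified illustration rather than the method used by any company mentioned here, and the readings and noise levels are hypothetical.

```python
def fuse(measurements):
    """Inverse-variance weighted fusion.

    measurements: list of (value, variance) pairs for the same quantity.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused_value, 1.0 / total


# Hypothetical distance-to-obstacle readings in meters: (value, variance).
readings = [
    (50.0, 0.01),   # lidar: assumed very precise range
    (50.6, 0.25),   # radar: assumed coarser range
    (48.0, 4.0),    # camera: assumed rough depth estimate
]

distance, variance = fuse(readings)
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f}")
```

The fused variance is always smaller than that of the best single sensor, which is the quantitative version of the claim that fusion yields a more accurate picture than any one sensor alone. Real systems fuse far richer data (object tracks, point clouds, images) and must also handle time synchronization and sensor failures.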

Waymo vs. Tesla: A Tale of Two Autonomous Visions

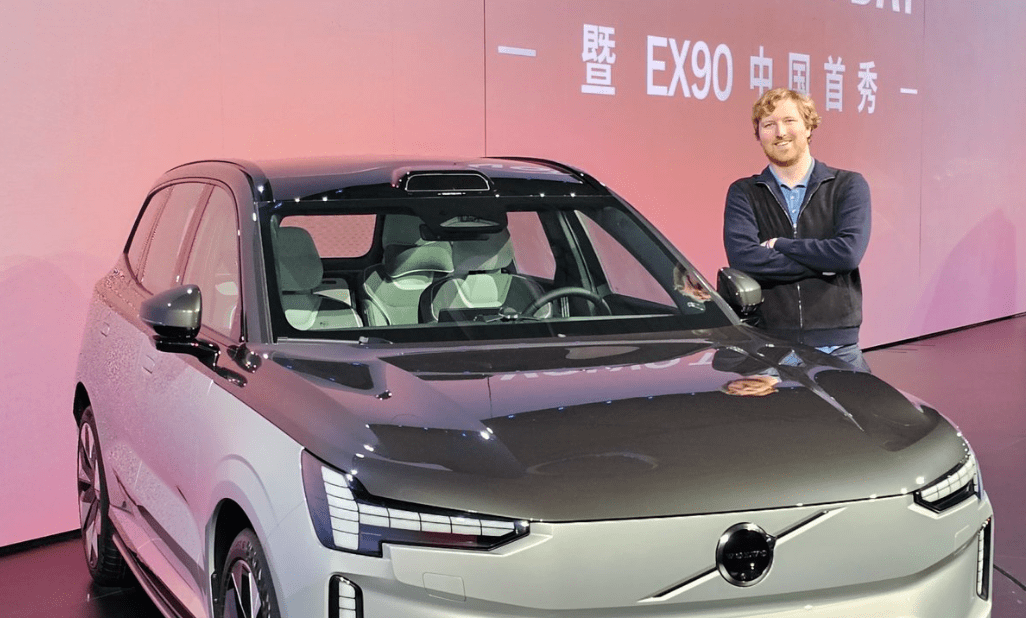

In the autonomous vehicle industry, few comparisons spark as much debate as Tesla and Waymo. Both are pioneering the future of mobility, but with radically different philosophies. So why does a Waymo car look like a sensor-packed spaceship, while a Tesla appears almost free of external sensors?

Let's take a look at the Waymo vehicle. It’s a base Jaguar modified for autonomous driving. On its roof are dozens of sensors: lidars, cameras, spinning laser systems (so-called “spinners”), and radars. There are truly many of them: cameras in the mirrors, sensors on the front and rear bumpers, long-range viewing systems — all of this is synchronized.

If such a vehicle gets into an accident, the engineering team adds new sensors to gather the missing information. Their approach is to use the maximum number of available technologies.

So why doesn’t Tesla follow the same path? One of the main reasons is that Tesla has not yet released its Robotaxi to the market. In addition, its approach focuses on cost minimization and innovation. Tesla considers lidars impractical because of their high cost: the manufacturing cost of an RGB camera is about $3, whereas a lidar can cost $400 or more. Furthermore, lidars contain mechanical parts such as rotating mirrors and motors, which makes them more prone to failure and more likely to need replacement.

Cameras, by contrast, are static. They have no moving parts, are much more reliable, and can function for decades until the casing degrades or the lens dims. Moreover, cameras are easier to integrate into a car’s design: they can be hidden inside the body, made nearly invisible.

Production approaches also differ significantly. Waymo uses an existing platform — a production Jaguar — onto which sensors are mounted. They don’t have a choice. Tesla, on the other hand, manufactures vehicles from scratch and can plan sensor integration into the body from the outset, concealing them from view. Formally, they will be listed in the specs, but visually, they’ll be almost unnoticeable.

Currently, Tesla uses eight cameras around the car — in the front, rear, side mirrors, and doors. Will they use additional sensors? I believe so.

Based on my experience as a Tesla driver who has also ridden in Waymo vehicles, I believe that incorporating lidar would improve Tesla's Full Self-Driving system. It feels to me that Tesla's FSD currently lacks some accuracy when driving. Adding lidar technology could enhance its ability to navigate challenging conditions like significant sun glare, airborne dust, or fog. This improvement would potentially make the system safer and more reliable compared to relying solely on cameras.

But from the business perspective, when a company develops its own technology, it aims for a competitive advantage — a technological edge. If it can create a solution that is dramatically more efficient and cheaper, it opens the door to market dominance.

Tesla follows this logic. Musk doesn’t want to take the path of other companies like Volkswagen or Baidu, which have also made considerable progress. Even systems like Mobileye and Subaru's EyeSight, installed in older cars, already demonstrate decent autonomy.

But Tesla aims to be unique — and that’s business logic. If you don’t offer something radically better, the market won’t choose you.

The post Choosing the Eyes of the Autonomous Vehicle: A Battle of Sensors, Strategies, and Trade-Offs appeared first on Unite.AI.
