Can GPT-image-1 make NSFW content?

OpenAI’s newly released GPT-image-1 model promises unparalleled fidelity in text-to-image and image-to-image transformations. Yet a pressing question persists: could this powerful tool be used to generate Not Safe For Work (NSFW) content, and if so, how effectively? In this article, we delve into GPT-image-1’s architecture, its built-in safety mechanisms, real-world attempts to bypass its filters, comparisons with competitor platforms, and the broader ethical landscape surrounding AI-generated adult content.

What Are the Official Capabilities and Restrictions of GPT-Image-1?

Model Overview

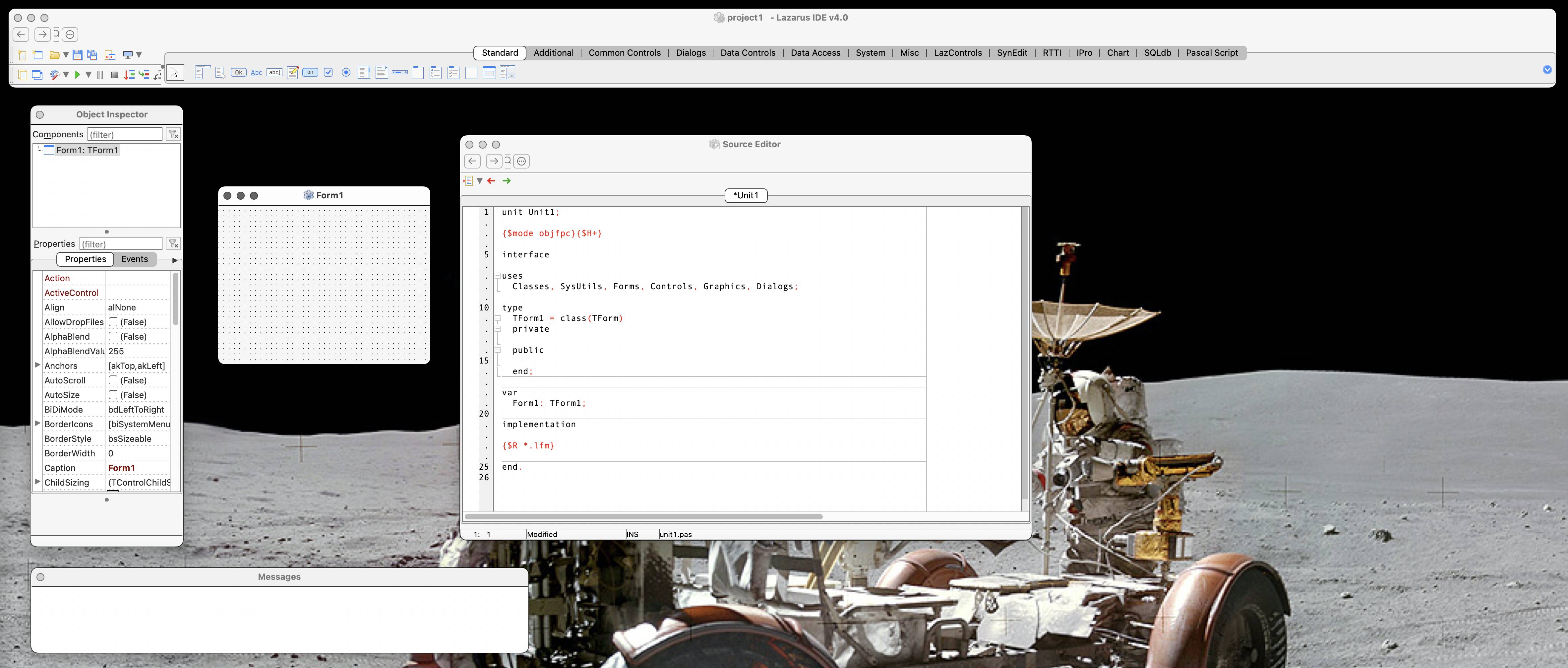

GPT-Image-1 was introduced in late April 2025 as part of OpenAI’s API offerings, enabling both image generation (the Images API “generations” endpoint) and image editing (the “edits” endpoint) via simple text prompts. Unlike diffusion-based systems such as DALL·E, GPT-Image-1 employs an autoregressive approach similar to language models, which gives it finer control over composition, style, and output format without relying on external pipelines.

Safety Guidelines

From day one, OpenAI has applied strict content policies to GPT-Image-1. User requests for erotic or otherwise NSFW content are explicitly prohibited: “The assistant should not generate erotica, depictions of illegal or non-consensual sexual activities, or extreme gore.” Moreover, any uploaded images containing watermarks, explicit nudity, or other disallowed content will be rejected at the API level. These safeguards reflect OpenAI’s broader commitment to “safe and beneficial” AI, but they also raise questions about enforcement and potential circumvention.

How Does GPT-image-1 Prevent NSFW Outputs?

Content Moderation Layers

OpenAI has implemented a two-stage safety stack to guard against the generation of disallowed imagery. First, an Initial Policy Validation (IPV) component analyzes incoming prompts for explicit trigger words or phrases commonly associated with NSFW content. Second, a Content Moderation (CM) endpoint reviews either text descriptions or the visual features of generated outputs, flagging or rejecting any content that fails to comply with OpenAI’s usage policies.
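Developers who want a comparable output-side check in their own applications can call OpenAI’s public Moderation endpoint, which accepts text and image inputs together. The minimal sketch below only builds the request with the standard library; it assumes the omni-moderation-latest model name and the mixed text/image input format from OpenAI’s moderation guide, and build_moderation_request is a hypothetical helper. It illustrates the idea of a second-stage review, not OpenAI’s internal IPV/CM components.

```python
import json
import urllib.request
from typing import Optional

# Public Moderation endpoint (assumed current at the time of writing).
MODERATION_URL = "https://api.openai.com/v1/moderations"


def build_moderation_request(api_key: str, prompt: str,
                             image_url: Optional[str] = None) -> urllib.request.Request:
    """Build, but do not send, a moderation request for a prompt and an optional image."""
    # One text part, plus an image part when a generated image URL is available.
    parts = [{"type": "text", "text": prompt}]
    if image_url is not None:
        parts.append({"type": "image_url", "image_url": {"url": image_url}})
    body = {"model": "omni-moderation-latest", "input": parts}
    return urllib.request.Request(
        MODERATION_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_moderation_request("YOUR_API_KEY", "a watercolor lighthouse at dusk",
                                   "https://example.com/generated.png")
    print(req.get_method(), req.full_url)
```

Passing the request to urllib.request.urlopen would send it; each entry in the response’s results list carries a flagged boolean plus per-category flags and scores.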

For images, the moderation pipeline leverages both algorithmic pattern recognition and metadata checks. If a prompt or output is flagged, the API may return a refusal response or replace the image with a lower-fidelity “safe” placeholder. Developers who require more permissive use cases can lower the filter sensitivity through the request’s “moderation” parameter, which accepts “auto” (the default) or “low”; OpenAI warns that this comes with increased risk and is intended only for trusted environments where human review is compulsory.
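The moderation level is chosen per request. A minimal sketch, assuming the JSON body documented for POST /v1/images/generations; build_image_request and ALLOWED_MODERATION are illustrative names, and only the request object is built, nothing is sent. Even with “low”, requests remain subject to OpenAI’s usage policies.

```python
import json
import urllib.request

IMAGES_URL = "https://api.openai.com/v1/images/generations"
# The two moderation levels documented for gpt-image-1.
ALLOWED_MODERATION = {"auto", "low"}


def build_image_request(api_key: str, prompt: str, moderation: str = "auto",
                        size: str = "1024x1024") -> urllib.request.Request:
    """Build, but do not send, a gpt-image-1 generation request."""
    if moderation not in ALLOWED_MODERATION:
        raise ValueError(f"moderation must be one of {sorted(ALLOWED_MODERATION)}")
    body = {
        "model": "gpt-image-1",
        "prompt": prompt,
        "size": size,
        "moderation": moderation,  # "auto" is the default; "low" filters less strictly
    }
    return urllib.request.Request(
        IMAGES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_image_request("YOUR_API_KEY", "a classical marble bust in soft light")
    print(json.loads(req.data)["moderation"])
```

Validating the value locally surfaces a typo before a request is spent on it; the server performs its own validation regardless.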

Policy Prohibitions on Explicit Content

OpenAI’s official policy categorically forbids the generation of pornography, deepfake sexual content, and non-consensual or underage nudity. This stance is consistent with the company’s broader commitment to preventing child sexual abuse material (CSAM) and non-consensual intimate imagery. All API customers must agree to these terms, and any violation can lead to immediate revocation of access and potential legal action.

In public discussions, OpenAI leadership—including CEO Sam Altman—has acknowledged the complexity of moderating adult content responsibly. Although internal documents hint at “exploratory” work on safe, age-verified erotica generation, the company has reaffirmed that AI-generated pornography will remain banned, with no immediate plans to reverse this policy.

Are Users Bypassing GPT-image-1’s Filters?

Community-Driven Workarounds

Despite robust safeguards, dedicated users on forums like Reddit have shared techniques to circumvent content filters. Strategies involve:

- Oblique Descriptions: Using indirect language or metaphors (e.g., “towel and foggy mirror” instead of “naked woman in shower”) to imply sexual scenarios without triggering explicit keywords.

- Artistic Context: Prefacing prompts with art-style instructions (“draw in the style of Renaissance nudes but in pastel colors”), which may slip past initial validation.

- Batch Generation and Selection: Submitting large batches of slightly varied prompts, then manually selecting any images that approximate the desired NSFW content.

However, these methods yield inconsistent and often low-quality results, as the moderation stack still flags many outputs as unsafe. Moreover, manual filtering places additional burdens on users, undermining the seamless creative workflow that GPT-image-1 is designed to provide.

False Positives and Quality Trade-Offs

On some community threads, users report encountering “false positives”, where benign or artistic prompts are erroneously blocked. Examples include:

- Artistic Study: Prompts for classical nude figure studies in an academic context flagged as adult content.

- Historic Artwork Reproductions: Attempts to recreate famous artworks containing nudity (e.g., Michelangelo’s David) rejected by the model.

Such incidents highlight the fragility of content filters, which may err on the side of over-moderation to avoid any risk of NSFW leakage. This conservative approach can obstruct legitimate use cases, prompting calls for more nuanced and context-aware moderation mechanisms.

PromptGuard and Soft Prompt Moderation

PromptGuard, a research technique for text-to-image models, represents a cutting-edge defense against NSFW generation: by inserting a learned “safety soft prompt” into the model’s text embedding space, it creates an implicit system-level directive that neutralizes malicious or erotic requests before they reach the decoder. Its authors report an unsafe generation rate as low as 5.8%, while benign image quality remains virtually unaffected.
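The core idea of a soft prompt fits in a few lines. This is a conceptual sketch, not PromptGuard’s implementation: it assumes the safety soft prompt is a small matrix of learned vectors prepended to the prompt’s token embeddings, much as a system prompt precedes user text, and it uses random vectors in place of a trained soft prompt. The training objective that makes those vectors suppress unsafe content is not shown.

```python
import numpy as np


def prepend_soft_prompt(token_embeddings: np.ndarray,
                        soft_prompt: np.ndarray) -> np.ndarray:
    """Prepend learned safety vectors to a prompt's token embeddings.

    token_embeddings: (seq_len, dim) embeddings of the user's prompt tokens.
    soft_prompt:      (n_soft, dim) vectors learned offline (training not shown).
    """
    if token_embeddings.shape[1] != soft_prompt.shape[1]:
        raise ValueError("embedding dimensions must match")
    # The soft prompt occupies the first n_soft positions of the sequence
    # that the downstream text encoder conditions on.
    return np.concatenate([soft_prompt, token_embeddings], axis=0)


rng = np.random.default_rng(0)
prompt_embeddings = rng.normal(size=(7, 16))   # stand-in for a 7-token prompt
safety_soft_prompt = rng.normal(size=(4, 16))  # stand-in for trained vectors
conditioned = prepend_soft_prompt(prompt_embeddings, safety_soft_prompt)
print(conditioned.shape)  # (11, 16)
```

Because the directive lives in embedding space rather than as words, there is no visible instruction in the prompt text for a user to remove, which is what makes it implicit.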

Jailbreaking Prompt Attack

Conversely, the Jailbreaking Prompt Attack uses antonym-based search in the text embedding space, followed by gradient-masked optimization of discrete tokens, to coax diffusion models into producing explicit content. Although it was originally demonstrated on open-source and competing closed-source services (e.g., Stable Diffusion v1.4, DALL·E 2, Midjourney), similar principles may carry over to autoregressive models like GPT-Image-1. This highlights the adversarial arms race between content filters and malicious actors.

How Does GPT-image-1 Compare to Other Platforms?

Grok-2 vs. GPT-image-1

Platforms like Grok-2 have taken a markedly different approach, offering minimal NSFW restrictions and no watermarking. While this grants users greater artistic freedom, it raises serious ethical and legal concerns, including potential misuse for deepfake pornography and copyright infringement. By contrast, GPT-image-1 pairs stringent guardrails with embedded C2PA metadata, which records provenance and deters illicit sharing.

| Feature | GPT-image-1 | Grok-2 |
|---|---|---|
| NSFW Filtering | Strict (auto/low modes) | Minimal |
| C2PA Metadata | Included | None |
| Deepfake Prevention | Enforced | None |
| Industry Compliance | High | Low |

DALL-E and Midjourney

DALL-E 3 and Midjourney both implement PG-13 style policies, allowing suggestive imagery but prohibiting explicit adult content. DALL-E adds watermarks to discourage misuse, while Midjourney relies on community reporting for moderation. GPT-image-1 aligns more closely with DALL-E in its enforcement rigor but surpasses both in integrated metadata standards and multimodal editing features.

What Are the Ethical and Legal Implications?

Deepfakes and Consent

One of the most alarming risks of NSFW image generation is the creation of non-consensual deepfakes, where a person’s likeness is used without permission. High-profile cases involving celebrities have already resulted in reputational harm and legal actions. OpenAI’s policy explicitly forbids any image that could facilitate such abuses, and its use of metadata seeks to deter bad actors by ensuring images can be traced back to their AI origin.
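Whether an image still carries such provenance metadata can be checked, at least superficially. A minimal sketch, assuming a PNG file and the C2PA specification’s PNG embedding, which stores the manifest store in a chunk of type caBX; the helper names are hypothetical. It only detects whether that chunk is present and does not validate the manifest or its signature (the official C2PA tooling is needed for that), and metadata can be stripped by re-encoding or screenshots.

```python
import struct
import zlib
from typing import List

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
C2PA_CHUNK_TYPE = "caBX"  # chunk type C2PA uses for PNG manifest stores


def png_chunk_types(data: bytes) -> List[str]:
    """Walk a PNG byte string and return its chunk types in order."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    types = []
    offset = len(PNG_SIGNATURE)
    while offset + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[offset:offset + 8])
        types.append(ctype.decode("latin-1"))
        offset += 12 + length
        if ctype == b"IEND":
            break
    return types


def has_c2pa_chunk(data: bytes) -> bool:
    """True if a C2PA manifest chunk is present (presence only, no validation)."""
    return C2PA_CHUNK_TYPE in png_chunk_types(data)


def make_chunk(ctype: bytes, payload: bytes) -> bytes:
    """Build one PNG chunk, used here only to create synthetic test files."""
    crc = zlib.crc32(ctype + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + ctype + payload + struct.pack(">I", crc)


ihdr = make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 2, 0, 0, 0))
iend = make_chunk(b"IEND", b"")
plain = PNG_SIGNATURE + ihdr + iend
tagged = PNG_SIGNATURE + ihdr + make_chunk(b"caBX", b"placeholder") + iend
print(has_c2pa_chunk(plain), has_c2pa_chunk(tagged))  # False True
```

For a real file, the same check would run on the bytes returned by open(path, "rb").read(); JPEG and WebP outputs embed C2PA differently and are not covered here.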

Child Protection

Any model capable of generating realistic images of people must rigorously guard against the potential for child sexual abuse material (CSAM). OpenAI emphasizes that GPT-image-1’s moderation stack is trained to identify and block any content depicting minors in sexual contexts. This includes both textual prompts and visual cues. Violation of this policy carries severe consequences, including referral to law enforcement when required by law.

Society and Creative Expression

Allowing any form of NSFW content through AI raises questions about societal norms, artistic freedom, and digital rights. Some argue that consensual erotic art has a legitimate place in digital media, provided there are robust safeguards and age verification. Others fear a slippery slope where any relaxation of filters could facilitate illegal or harmful content. OpenAI’s cautious stance—exploring possibilities for age-restricted, responsibly managed erotica while firmly banning pornography—reflects this tension.

What Are the Implications for Developers, Designers, and Users?

Best Practices for Responsible Use

Developers integrating GPT-Image-1 into products must implement layered safety controls:

- Client‐side Filtering: Pre‐screen user inputs for keywords or image metadata associated with NSFW content.

- Server‐side Enforcement: Rely on OpenAI’s moderation API to block disallowed requests, and log attempts for audit and investigation.

- Human Review: Flag ambiguous cases for manual inspection, particularly in high‐risk domains (e.g., adult content platforms).
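The three layers above can be combined into a single routing decision. A minimal sketch, assuming the server side passes in one entry from the Moderation API’s results list (with flagged, categories, and category_scores fields); the blocklist terms, the REVIEW_THRESHOLD value, and the function names are hypothetical placeholders to be tuned per deployment.

```python
import logging
from typing import Any, Dict

log = logging.getLogger("image_safety")

# Hypothetical placeholders: maintain the real blocklist and threshold per deployment.
BLOCKED_TERMS = {"blocked_term_a", "blocked_term_b"}
REVIEW_THRESHOLD = 0.3


def passes_prescreen(prompt: str) -> bool:
    """Client-side layer: reject prompts containing any blocklisted word."""
    return not (set(prompt.lower().split()) & BLOCKED_TERMS)


def route_request(prompt: str, moderation_result: Dict[str, Any]) -> str:
    """Return 'block', 'review', or 'allow', logging every non-allow outcome for audit."""
    if not passes_prescreen(prompt):
        log.warning("blocked by prescreen: %r", prompt)
        return "block"
    # Server-side layer: trust the moderation endpoint's own flag first.
    if moderation_result.get("flagged"):
        hits = [name for name, hit in moderation_result.get("categories", {}).items() if hit]
        log.warning("blocked by moderation: %s", hits)
        return "block"
    # Human-review layer: unflagged but borderline scores go to a person.
    scores = moderation_result.get("category_scores", {})
    if any(score >= REVIEW_THRESHOLD for score in scores.values()):
        log.warning("queued for human review: %s", scores)
        return "review"
    return "allow"


# Illustrative input shaped like a moderation result (not real API output).
print(route_request("a lighthouse at dusk",
                    {"flagged": False, "categories": {}, "category_scores": {"sexual": 0.01}}))
```

Keeping the review threshold below the endpoint’s own flagging point is the design choice that sends borderline cases to a person instead of silently allowing them.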

Designers and end‐users should also be aware of potential model “drift” and adversarial exploits. Regularly updating prompt guidelines and retraining custom moderation layers can mitigate emerging threats.

Future Directions in Safety Research

The dynamic nature of NSFW risks necessitates continuous innovation. Potential research avenues include:

Federated Safety Learning: Harnessing decentralized user feedback on edge devices to collectively improve moderation without compromising privacy.

Adaptive Soft Prompts: Extending PromptGuard to support real‐time adaptation based on user context (e.g., age verification, geopolitical region).

Multi‐modal Consistency Checks: Cross‐validating text prompts against generated image content to detect semantic incongruities indicative of jailbreak attempts.
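A consistency check of this kind can start very simply: moderate the prompt and the generated image separately, then look for categories that appear only on the image side, since an innocuous-looking prompt yielding flagged imagery is the pattern oblique-description workarounds produce. A minimal sketch, assuming both inputs are category-to-boolean maps such as the categories field of a moderation result; the function name is hypothetical, and a non-empty result is a signal for review rather than proof of an attack.

```python
from typing import Dict, List


def incongruent_categories(prompt_categories: Dict[str, bool],
                           image_categories: Dict[str, bool]) -> List[str]:
    """Categories flagged on the generated image but not on the prompt that produced it."""
    return sorted(
        name for name, hit in image_categories.items()
        if hit and not prompt_categories.get(name, False)
    )


print(incongruent_categories({"sexual": False, "violence": False},
                             {"sexual": True, "violence": False}))  # ['sexual']
```

A production system would likely compare richer signals (captions or embeddings of the output against the prompt), but category mismatches are a cheap first filter.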

Conclusion

GPT-image-1 stands at the forefront of multimodal AI, delivering unprecedented capabilities for image generation and editing. Yet with this power comes immense responsibility. While technical safeguards and policy bans firmly block the creation of explicit pornography and deepfakes, determined users continue to test the model’s limits. Comparisons with other platforms underscore the importance of metadata, rigorous moderation, and ethical stewardship.

As OpenAI and the broader AI community grapple with the complexities of NSFW content, the path forward will demand collaboration between developers, regulators, and civil society to ensure that creative innovation does not come at the cost of dignity, consent, and safety. By maintaining transparency, inviting public dialogue, and advancing moderation technology, we can harness the promise of GPT-image-1 while safeguarding against its misuse.

Getting Started

Developers can access the GPT-image-1 and Grok 3 APIs through CometAPI. To begin, explore the model’s capabilities in the Playground and consult the API guide (model name: gpt-image-1) for detailed instructions. Note that some developers may need to verify their organization before using the model.

GPT-Image-1 API pricing in CometAPI (20% off the official price):

Output Tokens: $32 / M tokens

Input Tokens: $8 / M tokens
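The per-token rates above translate directly into a per-request cost estimate. A minimal sketch using only the two listed rates; the token counts in the example are hypothetical, since actual counts depend on image size, quality setting, and any input images.

```python
# Per-million-token rates listed above for GPT-Image-1 via CometAPI (USD).
INPUT_RATE_PER_M = 8.0
OUTPUT_RATE_PER_M = 32.0


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request from its token counts."""
    return (input_tokens * INPUT_RATE_PER_M
            + output_tokens * OUTPUT_RATE_PER_M) / 1_000_000


# Hypothetical request: 1,000 input tokens and 4,000 output tokens.
print(round(estimate_cost(1_000, 4_000), 4))  # 0.136
```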
