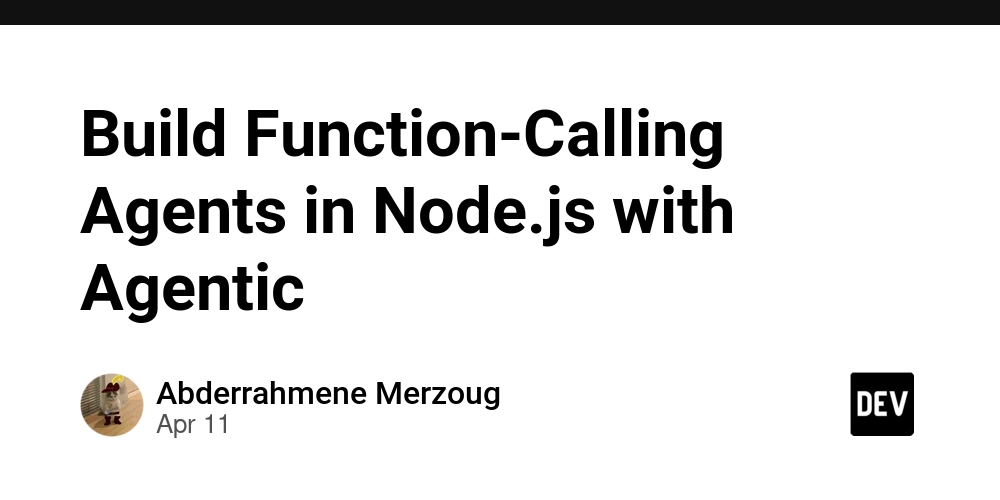

Build Function-Calling Agents in Node.js with Agentic

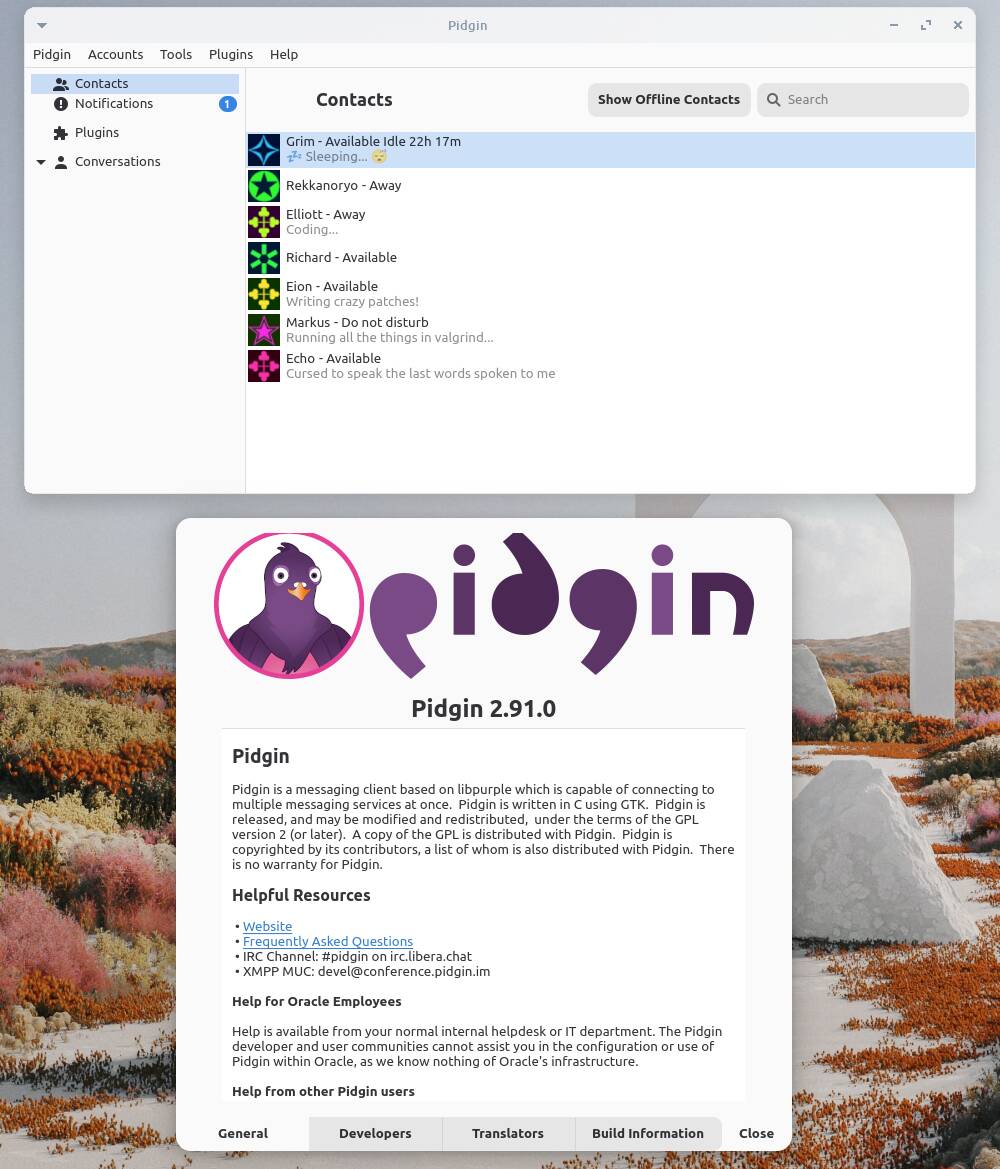


Building applications powered by Large Language Models (LLMs) is exciting, but making them truly useful often means giving them access to the real world – fetching live data, calling APIs, interacting with databases, or executing code. This is where "function calling" or "tool use" comes in, allowing the LLM to request actions from your application.

While powerful, implementing this interaction layer can quickly become complex. You need to:

- Clearly define available tools for the LLM.

- Format prompts correctly.

- Parse the LLM's response to detect tool call requests.

- Extract parameters accurately.

- Execute the corresponding function in your code.

- Handle potential errors during execution.

- Format the tool's result back for the LLM.

- Manage the conversation history across user messages, assistant responses, and tool interactions.

- Handle streaming responses for a better user experience.
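To make the list above concrete, here is a minimal hand-rolled sketch of that boilerplate in plain JavaScript, written without any framework. It is not @obayd/agentic's API or implementation. It rests on several assumptions. The model requests a tool by replying with a bare JSON object `{"tool": ..., "args": ...}`. `fakeModel` is a hypothetical stub standing in for a real LLM call, which in practice would be asynchronous. The message shapes (`role`, `content`, `name`) are only loosely modeled on common chat APIs. The `get_weather` tool and its data are made up. Streaming is left out.

```javascript
// Hand-rolled tool-calling loop (no framework). Everything here is illustrative:
// fakeModel, get_weather and the JSON reply convention are hypothetical.

// 1. Define the available tools: a description for the LLM plus a handler.
const tools = {
  get_weather: {
    description: "Get the current temperature for a city",
    params: { city: "string" },
    handler: ({ city }) => ({ city, tempC: city === "Paris" ? 18 : 25 }), // fake data
  },
};

// 2. Format the tool list into the system prompt.
function buildSystemPrompt() {
  const lines = Object.entries(tools).map(
    ([name, t]) => `- ${name}(${Object.keys(t.params).join(", ")}): ${t.description}`
  );
  return ['To call a tool, reply only with JSON: {"tool": name, "args": {...}}', ...lines].join("\n");
}

// Hypothetical stand-in for an LLM: asks for a tool first, then answers from its result.
function fakeModel(messages) {
  const last = messages[messages.length - 1];
  if (last.role === "user") {
    return '{"tool": "get_weather", "args": {"city": "Paris"}}';
  }
  const result = JSON.parse(last.content);
  return `It is ${result.tempC}°C in ${result.city}.`;
}

// 3-4. Detect a tool call in the reply and extract its arguments.
function parseToolCall(text) {
  try {
    const obj = JSON.parse(text);
    return obj && typeof obj.tool === "string" ? obj : null;
  } catch {
    return null; // Not JSON, so treat it as a plain-text answer.
  }
}

// 5-7. Execute the tool, catch errors, and serialize the result for the model.
function runTool(call) {
  const tool = tools[call.tool];
  if (!tool) return JSON.stringify({ error: `Unknown tool: ${call.tool}` });
  try {
    return JSON.stringify(tool.handler(call.args ?? {}));
  } catch (err) {
    return JSON.stringify({ error: String(err && err.message ? err.message : err) });
  }
}

// 8. Keep user, assistant and tool messages in one history array.
function runAgent(userText, maxSteps = 5) {
  const messages = [
    { role: "system", content: buildSystemPrompt() },
    { role: "user", content: userText },
  ];
  for (let step = 0; step < maxSteps; step++) {
    const reply = fakeModel(messages);
    messages.push({ role: "assistant", content: reply });
    const call = parseToolCall(reply);
    if (!call) return { answer: reply, messages }; // final answer reached
    messages.push({ role: "tool", name: call.tool, content: runTool(call) });
  }
  throw new Error("Agent did not finish within maxSteps");
}

const { answer, messages } = runAgent("What's the weather in Paris?");
console.log(answer); // prints: It is 18°C in Paris.
```

A production version would also have to cope with tool-call JSON embedded in ordinary text, multiple tool calls per turn, and streamed tokens, none of which this sketch handles.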

That's a lot of boilerplate! Wouldn't it be great if there were a lightweight, focused way to handle this in Node.js?

✨ Introducing @obayd/agentic

Meet @obayd/agentic – a simple yet powerful framework designed specifically to streamline the creation of function-calling LLM agents in Node.js. It focuses on providing the core building blocks you need without unnecessary complexity.

What can it do for you?

- ✅ Fluent Tool Definition: Define tools the LLM can use with a clean, chainable API (`Tool.make().description().param()...`).
