Unlocking LLM Potential: Enhancing Reasoning and Multilingual Mastery

In a world increasingly driven by artificial intelligence, the potential of Large Language Models (LLMs) stands at the forefront of innovation, yet many still grapple with unlocking their full capabilities. Have you ever wondered how these sophisticated systems can not only generate text but also reason and communicate across multiple languages? If so, you're not alone. As we navigate through this digital landscape filled with linguistic challenges and opportunities, understanding LLMs becomes essential for anyone looking to harness their power effectively. This blog post will delve into the intricacies of enhancing reasoning within language models while simultaneously mastering multilingual communication—a dual challenge that many face in today’s globalized environment. We’ll explore practical strategies to elevate your use of LLMs beyond mere text generation to impactful applications that resonate across cultures and contexts. From real-world applications transforming industries to emerging trends shaping future developments, join us on this journey as we unravel the complexities surrounding LLMs and equip you with insights that could redefine your approach to AI-driven language processing. Are you ready to unlock new possibilities? Let’s dive in!

Understanding LLMs: A Brief Overview

Large Language Models (LLMs) have reshaped natural language processing by generating fluent, human-like text. Generative models such as GPT-3 learn linguistic patterns and contextual relationships from very large text corpora; encoder models such as BERT learn similar patterns but are built for understanding tasks rather than open-ended generation. Model size strongly influences performance: larger models tend to show stronger reasoning, including the ability to navigate complex knowledge graphs. Retrieval-Augmented Generation (RAG) is also being explored for multilingual applications, letting a model retrieve relevant information across languages.

Key Insights into Model Performance

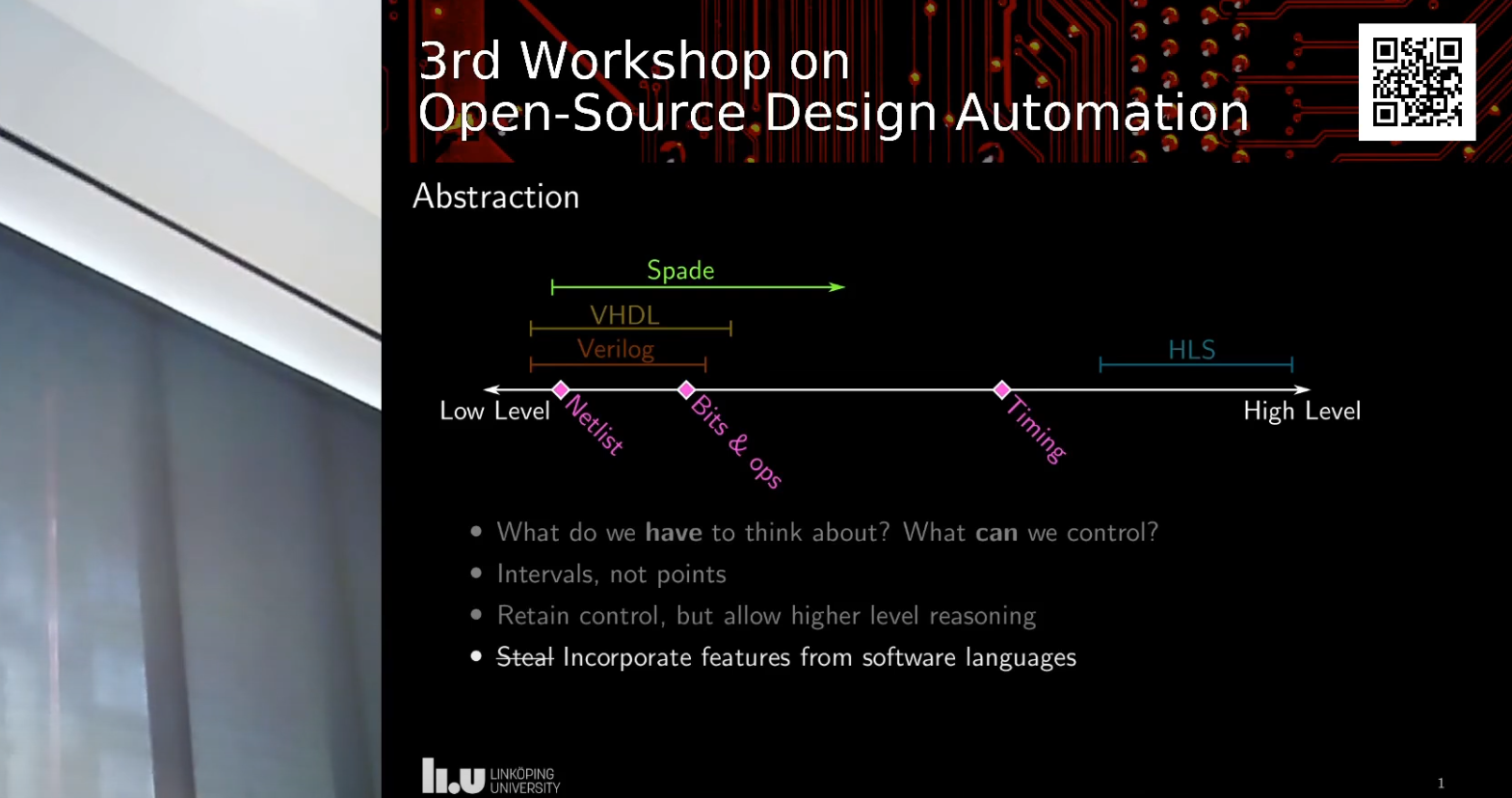

The relationship between graph complexity and reasoning performance is critical in understanding how LLMs operate within synthetic environments. Hyperparameters play a pivotal role in optimizing these interactions, influencing outcomes during training phases. Additionally, concepts such as graph search entropy help quantify the efficiency with which an LLM can traverse knowledge structures for accurate responses. By integrating methodologies like Structural Alignment and Rhetorical Structure Theory (RST), researchers aim to enhance discourse organization within generated texts—ultimately leading towards more coherent outputs that align closely with human communication styles.
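To make the idea of quantifying traversal difficulty more concrete, here is a minimal Python sketch. It does not reproduce graph search entropy as defined in the research, since this post does not give that formula. Instead it computes a simple stand-in: the average Shannon entropy of the next-hop choice for a uniform random walk over a toy knowledge graph. The graph contents, the function name `mean_step_entropy`, and the uniform-walk assumption are all illustrative.

```python
import math

# Toy knowledge graph: each entity maps to the entities reachable in one hop.
# The entities and edges are made up for illustration.
GRAPH = {
    "Paris": ["France"],
    "France": ["Europe", "Euro"],
    "Europe": ["Earth"],
    "Euro": ["France", "Europe", "Paris", "Earth"],
    "Earth": [],
}


def mean_step_entropy(graph):
    """Average entropy (in bits) of picking the next hop uniformly at random.

    A node with k outgoing edges contributes log2(k) bits; dead ends are
    skipped. This is a simple proxy, not the metric from any specific paper.
    """
    entropies = [math.log2(len(nbrs)) for nbrs in graph.values() if nbrs]
    return sum(entropies) / len(entropies) if entropies else 0.0


if __name__ == "__main__":
    print(f"{mean_step_entropy(GRAPH):.2f} bits per hop")  # 0.75 bits per hop
```

A higher value means more branching per hop, which is one rough way to see why a denser graph can be harder to search.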

The Importance of Reasoning in Language Models

Reasoning is a critical component of Large Language Model (LLM) performance. Recent research indicates that scaling up model size improves reasoning abilities, particularly within complex synthetic multihop reasoning environments. This makes knowledge graph complexity important to understand, because it directly influences how well models can navigate intricate information structures. Hyperparameters also shape reasoning outcomes: tuning settings such as learning rate and training duration carefully can substantially improve an LLM's ability to produce coherent responses based on logical deductions.

Key Findings on Reasoning Performance

This line of research uses synthetic knowledge graphs as a controlled way to train LLMs and measure their reasoning capabilities. By introducing concepts such as graph search entropy, researchers have identified ways to measure and optimize how models interact with these data structures. These insights help developers build language models with better logical consistency and coherence in text generation. Understanding these dynamics aids in refining existing models and informs future advances in natural language processing.
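As a rough illustration of what a synthetic multihop setup can look like, the sketch below builds a small random knowledge graph and derives a two-hop question from it. This is an assumption-laden toy, not the procedure used in the research: the entity naming scheme, the `(head, relation) -> tail` layout, and the helper names `make_synthetic_kg`, `answer_multihop`, and `make_question` are all hypothetical.

```python
import random


def make_synthetic_kg(num_entities, relations, seed=0):
    """Build a random knowledge graph as {(head, relation): tail}.

    Every entity gets exactly one tail per relation, so any chain of
    relations starting from any entity has a well-defined answer.
    """
    rng = random.Random(seed)
    entities = [f"e{i}" for i in range(num_entities)]
    return {(h, r): rng.choice(entities) for h in entities for r in relations}


def answer_multihop(kg, start, relation_path):
    """Follow a sequence of relations from `start` and return the final entity."""
    current = start
    for rel in relation_path:
        current = kg[(current, rel)]
    return current


def make_question(kg, start, relation_path):
    """Render a multihop query as a (prompt, answer) pair."""
    prompt = f"{start} " + " ".join(relation_path) + " ?"
    return prompt, answer_multihop(kg, start, relation_path)


if __name__ == "__main__":
    kg = make_synthetic_kg(num_entities=5, relations=["r0", "r1"], seed=42)
    prompt, answer = make_question(kg, "e0", ["r0", "r1"])
    print(prompt, "->", answer)
```

Because the graph is generated, its size and branching can be varied freely, which is what makes this kind of environment convenient for studying how reasoning changes with graph complexity.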

Strategies for Enhancing Multilingual Capabilities

To enhance multilingual capabilities in language models, leveraging Retrieval-Augmented Generation (RAG) techniques is essential. Implementing strategies such as tRAG, MultiRAG, and CrossRAG allows models to access diverse linguistic resources effectively. These methods improve retrieval accuracy by utilizing multilingual knowledge bases that facilitate better generative performance across languages. Additionally, integrating linguistically grounded discourse structures can significantly aid in maintaining coherence and fluency when generating text in multiple languages.
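The post names tRAG, MultiRAG, and CrossRAG without defining them. Under our own reading (an assumption, not taken from this post), a tRAG-style pipeline translates the query into a pivot language before retrieving, while a MultiRAG-style pipeline retrieves from documents in several languages. The toy below illustrates only those two ideas, using a dictionary as a stand-in translator and word overlap as a stand-in retriever. `TOY_TRANSLATIONS`, `translate_to_english`, and the retrieval helpers are hypothetical names; a real system would use a translation model and a dense retriever.

```python
# Hypothetical word-level lookup standing in for a real translation model.
TOY_TRANSLATIONS = {"capitale": "capital", "francia": "france", "la": "the"}

# Tiny mixed-language document store (contents made up for illustration).
DOCS = [
    {"lang": "en", "text": "paris is the capital of france"},
    {"lang": "it", "text": "la capitale della francia si chiama parigi"},
    {"lang": "en", "text": "berlin is the capital of germany"},
]


def translate_to_english(text):
    """Toy translator: maps known words, leaves the rest unchanged."""
    return " ".join(TOY_TRANSLATIONS.get(w, w) for w in text.lower().split())


def overlap(query, text):
    """Number of distinct words shared between query and text."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def translate_then_retrieve(query, docs, k=1):
    """tRAG-style (assumed): translate the query, search English documents only."""
    en_query = translate_to_english(query)
    english_docs = [d for d in docs if d["lang"] == "en"]
    return sorted(english_docs, key=lambda d: overlap(en_query, d["text"]),
                  reverse=True)[:k]


def multilingual_retrieve(query, docs, k=1):
    """MultiRAG-style (assumed): search every language, scoring each document
    with the better of the original query and its English translation."""
    en_query = translate_to_english(query)

    def score(doc):
        return max(overlap(query, doc["text"]), overlap(en_query, doc["text"]))

    return sorted(docs, key=score, reverse=True)[:k]


if __name__ == "__main__":
    print(translate_then_retrieve("capitale francia", DOCS))
    print(multilingual_retrieve("capitale francia", DOCS, k=2))
```

The retrieved passages would then be placed in the prompt of the generating model; that step is omitted here because it depends on the model API in use.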

Utilizing Translation Tools Effectively

The choice of translation tools plays a crucial role in the performance of multilingual models. By evaluating various translation methodologies, such as neural machine translation versus statistical approaches, developers can identify which tools yield the best results for specific tasks. Furthermore, incorporating user feedback into model training enhances adaptability and responsiveness to real-world language use cases, ultimately leading to improved communication across different linguistic contexts.

Real-World Applications of Advanced LLMs

Advanced Large Language Models (LLMs) have transformed various sectors by enhancing capabilities in natural language processing. One significant application is in customer service, where chatbots powered by LLMs can understand and respond to complex queries, providing users with accurate information efficiently. In the realm of education, these models facilitate personalized learning experiences through intelligent tutoring systems that adapt content based on individual student needs. Furthermore, advanced LLMs are instrumental in content creation across industries; they assist writers by generating ideas or drafting articles while maintaining coherence and relevance.

Multilingual Capabilities

The integration of Retrieval-Augmented Generation (RAG) techniques allows for improved multilingual applications. By leveraging strategies like tRAG and MultiRAG, organizations can enhance their retrieval capabilities across different languages, ensuring more accurate responses in diverse linguistic contexts. This advancement not only streamlines communication but also fosters inclusivity within global markets. Additionally, the use of knowledge graphs enables better contextual understanding and reasoning abilities within multilingual frameworks—essential for developing robust question-answering systems capable of handling cross-lingual inquiries effectively.

In summary, the real-world applications of advanced LLMs span multiple domains including customer support automation, educational tools tailored to learner preferences, and enhanced multilingual interactions that cater to a global audience's needs.

Challenges and Solutions in LLM Development

Large Language Models (LLMs) face significant challenges, particularly in generating coherent long-form text and enhancing reasoning capabilities. One primary challenge is the complexity of knowledge graphs, which can hinder model performance if not properly understood or integrated. To address this, researchers have introduced methods like Structural Alignment that leverage linguistically grounded discourse structures to improve coherence. By employing Rhetorical Structure Theory (RST), models can define discourse segments more effectively, leading to better organization of ideas.
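For readers unfamiliar with RST, it describes a text as a tree whose leaves are short segments (elementary discourse units) and whose internal nodes are relations that join a more central span, the nucleus, with a supporting span, the satellite. The sketch below shows one way to represent such a tree in Python. It is a simplified illustration only: it ignores multinuclear relations, does not track the original text order, and says nothing about how Structural Alignment uses these trees during training, which this post does not describe. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DiscourseNode:
    """A node in a simplified RST-style tree.

    Leaves hold a text segment (an elementary discourse unit); internal
    nodes hold a relation label linking a nucleus and a satellite.
    """
    text: Optional[str] = None
    relation: Optional[str] = None
    nucleus: Optional["DiscourseNode"] = None
    satellite: Optional["DiscourseNode"] = None

    def is_leaf(self):
        return self.text is not None


def segments(node):
    """Collect leaf segments, visiting the nucleus before the satellite."""
    if node.is_leaf():
        return [node.text]
    return segments(node.nucleus) + segments(node.satellite)


def relations(node):
    """List the relation labels used in the tree, top-down."""
    if node.is_leaf():
        return []
    return [node.relation] + relations(node.nucleus) + relations(node.satellite)


# Example tree with made-up sentences and two standard RST relation labels.
EXAMPLE = DiscourseNode(
    relation="Evidence",
    nucleus=DiscourseNode(text="Larger models often reason better."),
    satellite=DiscourseNode(
        relation="Elaboration",
        nucleus=DiscourseNode(text="Benchmarks on multihop tasks show gains."),
        satellite=DiscourseNode(text="The gains depend on careful tuning."),
    ),
)

if __name__ == "__main__":
    print(relations(EXAMPLE))
    print(segments(EXAMPLE))
```

A structure like this makes it possible to check, for example, whether a generated passage supports each main claim with at least one satellite.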

Enhancing Reasoning Performance

Another critical area involves scaling models while maintaining their reasoning abilities. The relationship between model size and graph complexity plays a vital role: larger models often reason better but require careful hyperparameter tuning to perform well. Synthetic multihop reasoning environments help assess these dynamics by simulating scenarios in which LLMs must navigate intricate knowledge graphs efficiently. Retrieval-Augmented Generation (RAG), in turn, supports multilingual use by supplying the model with retrieved documents from diverse linguistic resources at generation time, which can improve accuracy across languages.

By addressing these challenges through innovative methodologies and frameworks, developers can significantly enhance the efficacy of LLMs in real-world applications while paving the way for future advancements in natural language processing.

Future Trends in Language Model Evolution

The evolution of language models is poised to be significantly influenced by advancements in reasoning capabilities and multilingual processing. As research indicates, scaling model size directly correlates with improved reasoning performance, particularly within complex knowledge graphs. The introduction of synthetic multihop reasoning environments will likely enhance the ability of large language models (LLMs) to navigate intricate relationships and generate coherent outputs. Furthermore, innovative approaches like Structural Alignment are set to redefine how LLMs produce long-form text by integrating linguistically grounded discourse structures that adhere to Rhetorical Structure Theory (RST). This alignment not only fosters better organization but also enhances logical consistency.

Advancements in Retrieval-Augmented Generation

Future trends will also see a rise in Retrieval-Augmented Generation (RAG), especially tailored for multilingual applications. Techniques such as tRAG and MultiRAG promise to optimize retrieval processes across languages, thereby improving accuracy and user experience. By leveraging diverse linguistic resources and enhancing generative abilities through sophisticated translation tools, these strategies aim to overcome existing challenges in cross-lingual NLP tasks. As researchers continue exploring these avenues, we can expect significant strides toward more effective question-answering systems that cater seamlessly to global audiences while maintaining high standards of performance metrics and reliability.

In conclusion, unlocking the potential of large language models (LLMs) hinges on a multifaceted approach that emphasizes both reasoning and multilingual capabilities. Understanding LLMs is essential as they serve as the backbone for numerous applications across various sectors. Enhancing reasoning within these models not only improves their accuracy but also enriches user interactions by enabling more sophisticated responses. Moreover, implementing effective strategies to bolster multilingual mastery ensures that LLMs can cater to diverse linguistic audiences, thereby expanding their usability globally. While challenges in development persist—such as biases and computational limitations—innovative solutions are emerging to address these issues effectively. As we look toward future trends in language model evolution, it becomes clear that continuous advancements will further refine their abilities, making them indispensable tools for communication and information processing in an increasingly interconnected world.

FAQs on Unlocking LLM Potential: Enhancing Reasoning and Multilingual Mastery

1. What are Large Language Models (LLMs)?

Large Language Models (LLMs) are advanced artificial intelligence systems designed to understand, generate, and manipulate human language. They utilize deep learning techniques to process vast amounts of text data, enabling them to perform tasks such as translation, summarization, question-answering, and more.

2. Why is reasoning important in language models?

Reasoning is crucial for language models because it allows them to comprehend context, make inferences, and provide coherent responses that go beyond mere pattern recognition. Enhanced reasoning capabilities enable LLMs to tackle complex queries effectively and produce more accurate outputs that reflect a deeper understanding of the subject matter.

3. What strategies can enhance multilingual capabilities in LLMs?

To enhance multilingual capabilities in LLMs, several strategies can be employed:

- Diverse Training Data: Incorporating a wide range of languages during training helps improve fluency across different linguistic contexts.
- Transfer Learning: Utilizing knowledge from high-resource languages can assist in improving performance on low-resource languages.
- Fine-tuning Techniques: Tailoring models specifically for certain languages or dialects through fine-tuning enhances their accuracy and relevance.

4. What are some real-world applications of advanced LLMs?

Advanced LLMs have numerous real-world applications, including:

- Customer Support Automation: Providing instant responses to customer inquiries across multiple languages.
- Content Creation: Assisting writers by generating ideas or drafting articles based on prompts.
- Language Translation Services: Offering improved translations with better contextual understanding between various languages.

5. What challenges do developers face when creating advanced LLMs?

Developers encounter several challenges while developing advanced LLMs, such as:

- Data Biases: Ensuring fairness by addressing biases present in training datasets.
- Resource Intensity: The computational power required for training large models can be significant.
- Interpretability Issues: Understanding how decisions are made within these complex models remains a challenge for transparency and trustworthiness.
