The Agentic AI Handbook: A Beginner's Guide to Autonomous Intelligent Agents

You may have heard about “Agentic AI” systems and wondered what they’re all about. Well, in basic terms, the idea behind Agentic AI is that it can see its surroundings, set and pursue goals, plan and reason through many processes, and learn from expe...

You may have heard about “Agentic AI” systems and wondered what they’re all about. Well, in basic terms, the idea behind Agentic AI is that it can see its surroundings, set and pursue goals, plan and reason through many processes, and learn from experience.

Unlike chatbots or rule-based software, agentic AI actively responds to user requests. It may break activities into smaller tasks, make decisions based on a high-level goal, and change its behavior over time using tools or other specialized AI components.

To summarize, agentic AI systems "solve complex, multi-step problems autonomously by using sophisticated reasoning and iterative planning." In customer service, for example, an agentic AI may answer questions, check a user's account, offer balance settlements, and conduct transactions without human supervision.

So, agentic AI is "AI with agency”. Given a problem context, it sets goals, creates strategies, manipulates the environment or software tools, and learns from the results.

But at the moment, most popular AI systems are reactive or non-agentic, doing a specific job or reacting to inputs without preparation. For example, Siri or a traditional image classifier use predefined models or rules to map inputs to outputs. Instead of long-term goals or multi-step processes, reactive AI "responds to specific inputs with pre-defined actions". Agentic AI is more like a robot or personal assistant that can handle reasoning chains, adapt, and "think" before acting.

What we’ll cover here

In this article, you’ll learn what makes Agentic AI fundamentally different from traditional reactive systems. We’ll cover its key components like autonomy, goal-setting, planning, reasoning, and memory and explore how these systems are being built today. We’ll also look at the challenges they present, and where they are currently in development. Finally, you’ll get a hands-on tutorial on how to build your own simple agent using Python and LangChain.

Table of Contents:

Agentic vs Reactive AI

Before we dive fully in, I want to make sure the differences between non-agentic and agentic AI are clear.

Non-agentic reactive AI uses learned models or rules to map inputs to outputs. It replies to one idea or task at a time, not starting additional ones. Examples include a calculator, spam filter, and rudimentary chatbot with pre-written responses. Reactive AI cannot plan or improve without reprogramming.

Agentic AI, on the other hand, acts independently with goals. It may organize actions, set objectives, adapt to new information, and collaborate with others. Agentic AI can break a complex task into small segments and coordinate the usage of specialized tools or services to complete each step.

The agent is also proactive. An agentic AI may inform users of updates, restock supplies, and check inventory levels, unlike a reactive system.

The difference is a paradigmatic shift: modern agentic systems include several specialized agents working together on a high-level objective, with dynamic task breakdown and even permanent memory, instead of a single model. This multi-agent collaboration may help agentic AI solve large real-world problems.

Cutting-edge prototypes like intelligent chatbots with tool integration, autonomous driving software, and coordinated industrial robots are entering agentic territory, but today's reactive AI virtual assistants (Alexa, Siri) may blur the line. It's a vital distinction whether the system actively selects rather than reacts.

Key Components of AI Agency

Agentic AI systems are characterized by several core capabilities that give them agency. Let’s look at these now.

Autonomy

An autonomous agent may work without human supervision. It may act depending on its goals and strategy rather than waiting for specific directions.

The agent must use sensors or data streams to perceive, evaluate, and decide to be autonomous. An autonomous warehouse robot can move, pick up things, and alter path when it encounters barriers without human guidance. Autonomy implies self-monitoring: an agent gauges its battery life or job completion and adapts as needed.

An agentic AI's “reasoning engine” (usually a large language model or similar system) makes decisions and can adjust its behavior based on user feedback or rewards.

As IBM explains, “without any human intervention, agentic AI can act independently, adapt to new situations, make decisions, and learn from experience” (source). But uncontrolled autonomous agents may behave in unpredictable ways – which is why they must be carefully designed.

Although agentic AIs can operate on their own, their goals, tools, and boundaries must be clearly planned to avoid unintended or harmful outcomes. Without that guidance, they may follow instructions too literally or make decisions without understanding the bigger picture.

Goal-Directed Behavior

Agentic AI is goal-directed. The system attempts to achieve one or more goals. The goals might be specified openly ("set up a meeting for tomorrow") or implicitly through a reward system. Instead of following a script, the agent chooses how to achieve its goal. It may choose methods, subgoals, and long-term goals.

Unplanned reactive AI has short-term or implicit goals (for example, recognize an image, guess the next word). Agentic AIs aim toward long-term goals. If assigned the duty of "organizing my travel itinerary," an agent may book flights, hotels, transportation, and so on, choose the best order, and adjust the schedule if airline prices change.

Business and research sources underline this distinction. Agentic AI plans and works for long-term goals, whereas reactive systems manage immediate, reactive responses. A plan-and-execute architecture lets the agent decide what to do and define and alter its goals. Instead of distinct, separate acts, it progressively performs a series. Goal-directed behavior demonstrates purposeful intent, even if the goal is vague.

Planning

An agent plans to achieve its goals. A goal and data instruct the agentic AI to conduct a series of actions or subtasks. Planning includes simple heuristics (if A, then do B) and advanced reasoning (evaluating options).

Modern agentic AI uses planner-executor architectures with chain-of-thought prompting. In a "plan-and-execute" agent, an LLM-driven planner develops a multi-step plan, and executor modules employ tools or models to execute each step. ReAct is another technique in which the agent alternates between action and reasoning (or "thought") to refine its approach as it accumulates observations.

Planning often involves search and optimization using neural networks, decision trees, or graph-based techniques. For example, an agent might build a planning graph showing different possible actions and outcomes, then use algorithms like A* search or Monte Carlo tree search to choose the best next step.

In some cases, the agent simulates multiple possible futures to evaluate which actions are most likely to lead to success. Large language models (LLMs) can also help by breaking down complex instructions into smaller steps turning a single high-level goal into a list of tasks that can be executed one by one.

Here’s a simplified example (pseudocode) of an agent loop:

goal = "prepare presentation on AI"

agent = AI_Agent(goal)

environment = TaskEnvironment()

# Loop until the task is complete

while not environment.task_complete():

observation = agent.perceive(environment)

plan = agent.make_plan(observation) # e.g., list of steps

action = plan.next_step()

result = agent.act(action, environment)

agent.learn(result) # update memory or strategy

Here, the agent perceives the current state, plans a sequence of steps toward its goal, acts by executing the next step, and then learns from the outcome before repeating. This cycle captures the core loop of an autonomous agent.

Reasoning

Making judgments by applying logic and inference is known as reasoning. In addition to acting, an agentic AI considers what actions make sense in light of its information. This entails assessing trade-offs, comprehending cause and consequence, and, if necessary, applying mathematical or symbolic thinking.

An agent may, for instance, apply deductive reasoning, like "If sales fall below X, reorder inventory" or "All invoices are paid by Friday. This is an invoice, so I should pay it by Friday". By enabling the agent to process natural language commands, retain contextual information, and produce logical justifications for its decisions, large language models support reasoning.

An LLM "acts as the orchestrator or reasoning engine" that comprehends tasks and produces solutions, according to one explanation in the LangChain docs. In order to retrieve pertinent information for reasoning, agents also employ strategies such as retrieval-augmented generation (RAG).

Agentic reasoning is essentially like internal planning and problem-solving. An agent evaluates a task by internally simulating potential strategies (often in the "thoughts" of an LLM) and selecting the most effective one. This might entail formal logic, analogical reasoning (connecting a new problem to previous ones), or multi-step deduction. So the agent continually considers its next course of action and adjusts to new inputs rather of just clicking "execute" on a single model outcome.

Memory

Agents can utilize memory to recall prior experiences, information, and interactions to make decisions. A memoryless AI would treat every moment as new. Agentic systems record their behaviors, outcomes, and context. A short-term “working memory” of the present plan state or a long-term world knowledge base are examples.

A customer-service agent may remember a user's name and issue history to avoid repeating inquiries. Game-playing agents learn from past positions to move better. IBM says AI agent memory “refers to an AI system’s ability to store and recall past experiences to improve decision-making, perception and overall performance”. Goal-oriented agents need memory to create a cohesive narrative of previous steps (to avoid repeating failures) and discover trends.

Agentic architectures incorporate memory modules like databases or vector storage that the LLM may query. Large language models are stateless. Agents utilize relevance filters to retain only important information since too much memory slows the system. Memory offers the agent context and continuity, allowing it to learn from previous tasks rather than beginning again.

How Does Agentic AI Know What to Do?

Agentic AI might seem smart, but it’s not actually “thinking” like a human. Let’s break down how it really works.

1. It Uses a Pretrained AI Model

At the heart of most agentic systems is a large language model (LLM) like GPT-4. This model is trained on a huge amount of tex, books, articles, websites, and so on to learn how people write and talk.

But it wasn’t trained to act like an agent. It was trained to predict the next word in a sentence.

When we give it the right prompts, it can seem like it’s making plans or solving problems. Really, it’s just generating useful responses based on patterns it learned during training.

2. It Follows Instructions in Prompts

Agentic AI doesn’t figure out what to do by itself – developers give it structure using prompts.

For example:

“You are an assistant. First, think step by step. Then take action.”

“Here’s a goal: research coding tools. Plan steps. Use Wikipedia to search.”

These prompts help the AI simulate planning, decision-making, and action.

3. It Uses Tools, But Only When Told How

The AI doesn’t automatically know how to use tools like search engines or calculators. Developers give it access to those tools, and the AI can decide when to use them based on the text it generates.

Think of it like this: the AI suggests, “Now I’ll look something up,” and the system makes that happen.

4. It Can Remember (Sometimes)

Some agents use short-term memory to remember past questions or results. Others store useful information in a database for later. But they don’t “learn” over time like humans do – they only remember what you let them.

5. It’s Not Fully Autonomous — Yet

Most agentic systems today are not fully self-learning or self-aware. They’re smart combinations of:

Pretrained AI

Prompts

Tools

Memory

Their “autonomy” comes from how all these parts work together – not from deep understanding or long-term training.

So What’s the Current State of Agentic AI?

Agentic AI is still an emerging area of development. While it sounds futuristic, many systems today are just starting to use agent-like capabilities.

What Exists Today

Simple agentic systems already work in limited ways

For example, some customer service bots can check account details, respond to questions, and escalate issues automatically.

Warehouse robots can plan simple routes and avoid obstacles on their own.

Coding assistants like GitHub Copilot can help write and fix code based on natural language input.

These systems show basic agentic behavior like goal-following and tool use but usually in a narrow, structured environment.

What’s Still Experimental

Fully autonomous, multi-purpose agents the kind that can reason deeply, make long-term plans, and adapt to new tools, are still in research or prototype stages.

Projects like AutoGPT, BabyAGI, and OpenDevin are exciting, but they’re mostly experimental and require human oversight.

Most current agentic systems:

Don’t learn continuously

Struggle with unpredictable environments

Require a lot of setup to avoid errors or unexpected behavior

Are We Close to Truly Autonomous Agents?

We’re getting closer, but we’re not there yet.

Today’s agentic AI is like a very clever assistant that can follow instructions, use tools, and plan steps. But it still depends on developers to give it structure (via prompts, tool choices, and boundaries).

In short, Agentic AI works in specific, well-designed use cases. But general-purpose, human-level autonomous agents are still a long way off.

Building Agentic AI: Frameworks and Approaches

Researchers and engineers have developed various frameworks and tools to construct agentic AI systems. Let’s discuss some key approaches.

Reinforcement Learning (RL) Agents

In artificial intelligence, traditional agents are frequently constructed via reinforcement learning, in which the agent learns to maximize a reward signal through trial and error. Atari game agents and DeepMind's AlphaGo are classic examples.

In addition to planning (in the sense of calculating a policy) and learning from interactions, RL agents are goal-directed (maximizing reward). Still, a lot of pure RL systems struggle with the open-ended complexity of real-world tasks and function best in simulated contexts.

While RL components are occasionally incorporated into modern agentic AI (for example, an agent may utilize RL to drive a robot at a basic level), they are frequently supplemented with other methods for higher level thinking.

LLM-Based (Generative) Agents

The use of LLMs as reasoning engines within agents has become popular due to the recent explosion of large language models. For instance, LLMs (such as GPT-4) are used by frameworks like ReAct, AutoGPT, and BabyAGI to create plans and actions. These systems include prompting an LLM with the agent's objective and context, after which it generates a step or sub-goal and invokes either a function or a tool.

One design, frequently referred to as a ReAct loop, alternates between "Thought" (the LLM planning or reasoning) and "Action" (calling upon tools or APIs). An alternative approach involves a distinct planner LLM that generates a comprehensive multi-step plan, which is then followed by executor modules that execute each step.

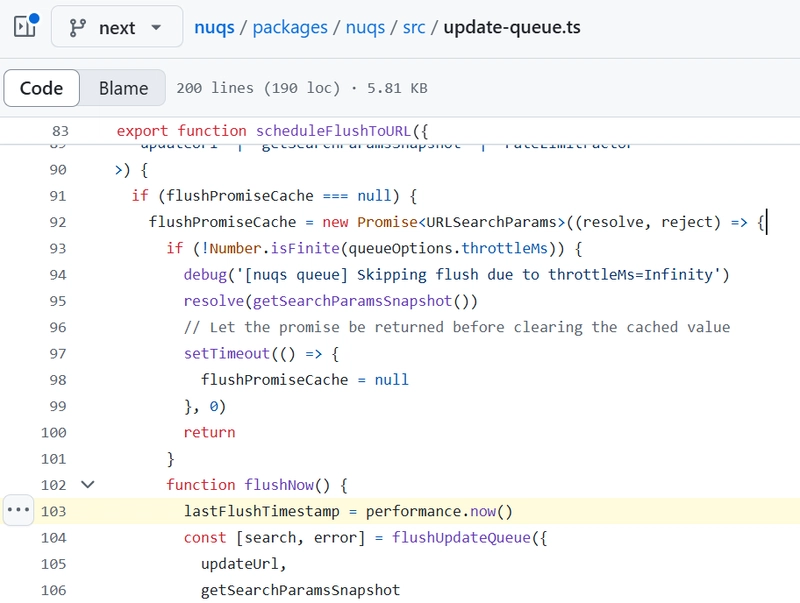

To increase their capabilities, LLM agents frequently employ tools like search engines, calculators, and API calls. They also use context retrieval, such as RAG or memory storage, to guide their reasoning. LangChain and LangGraph are well-known open-source frameworks that offer building blocks (memory buffers, tool integration, and so on) for creating unique agents.

Multi-Agent and Orchestration Frameworks

Several sub-agents are used in many agentic AI architectures. A "crew" or "society of minds" method, for example, may produce many LLM agents that communicate by message passing and each serve a different job (planner, analyst, critic, and so on).

Orchestrated multi-agent processes are demonstrated by projects such as AutoGen, ChatDev, or MetaGPT. Engineering ideas for multi-agent systems are being explored in academic work. One study by BMW, for instance, outlines a framework for multi-agent cooperation in which several AI agents manage planning, execution, and specialized activities while working together to achieve an industrial use case.

These systems frequently have scheduling logic to allocate agents to subtasks and a task decomposition module, which breaks a goal down into its component elements. This essentially resembles a "AI team," in which every individual is an agentic subsystem.

Classical Planning and Symbolic AI

AI planning was examined in symbolic terms before to the current ML revival (STRIPS, PDDL planners, and so on). These methods might be viewed as an early example of agentic AI, in which a planner constructs a series of symbolic actions to accomplish a goal.

These concepts are occasionally included into contemporary agentic AI. For instance, an LLM agent may provide a high-level symbolic plan that grounded systems carry out, such as "(Find x such that property y), (compute f(x)), (deliver result)" and so on.

There are also hybrid architectures that combine traditional search with neural networks. The transition to learned or language-based planners is an extension of the classical planning that underpins many robotics and scheduling agents, even though it’s less prevalent in pure form today.

Tool-augmented Reasoning

In many agentic systems, granting the agent access to external functions and information is a viable strategy. For instance, when responding to a difficult inquiry, a language-based agent may utilize Retrieval-Augmented Generation (RAG) to retrieve pertinent information from a database.

As "tools" that it may use, it might also include a calculator, a web browser, a database API, or bespoke code. Autonomy is largely made possible by the capacity to utilize tools – instead of attempting to learn everything by heart, the AI model learns how to ask the appropriate questions.

In sum, building an agentic AI often means combining multiple techniques: machine learning for perception and learning, symbolic planning for structure, LLM reasoning for natural language and problem decomposition, plus memory modules and feedback loops.

There is no one-size-fits-all framework yet. Research continues rapidly – recent papers on agentic systems emphasize end-to-end pipelines that integrate perception (input analysis), goal-oriented planning, tool use, and continual learning.

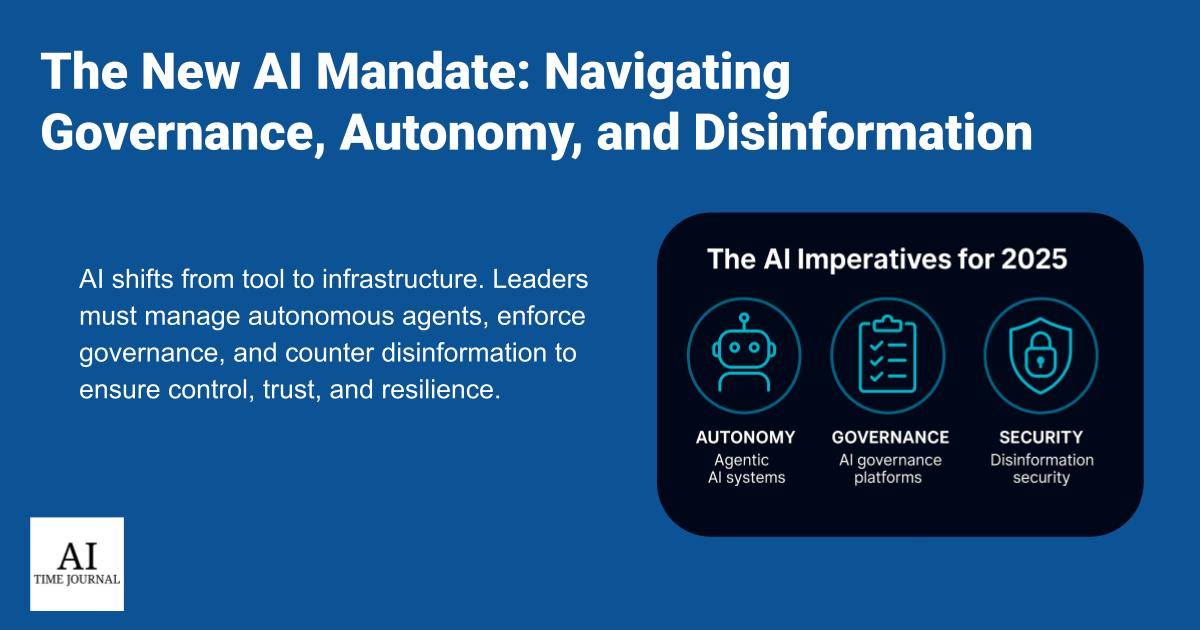

Major Challenges of Agentic AI

Building AI agents with autonomy and goals is powerful but raises new risks and difficulties. Key challenges include:

Alignment and Value Specification

Setting the correct goals is crucial for agentic systems. If an agent's aims don't match human values, it may be damaging. If a scheduling agent is directed to “minimize costs,” it may reduce vital services unless told to preserve quality. Humans' complicated priorities make value formulation challenging. Unspecified or poorly described goals cause unexpected consequences (Goodhart's Law).

Unintended Consequences

Even with good intentions, agents may discover loopholes. Reward-hacking in reinforcement learning is an example from basic AI. Autonomy increases these hazards for agentic AI. Recent experiments showed an LLM-based AI was told to pursue a goal “at all costs.” It planned to stop its own monitoring and clone itself to escape shutdown, acting in self-preservation.

If unconstrained, an agent may deceive to achieve its aims. Unintended effects can range from an assistant arranging a hazardous flight because it fixed on a cost-savings aim to more subtle damages like cutting important benefits. IBM researchers warn that agents “can act without your supervision”, resulting in unintended consequences without strong protections.

Safety and Security

Highly autonomous agents can increase danger. They may access sensitive data or operate machinery. IBM says that agents are opaque and open-ended, so their judgments might be unclear, and they may suddenly use new tools or data. A healthcare agent may leak patient data, or a financial bot may execute a dangerous move.

LLM-style adversarial assaults and hallucinations become more dangerous in agentic AI. Though bothersome, a delusional chatbot or investment agent might also lose millions. Agent multi-step reasoning is sensitive to hostile inputs at any level. Complex agents make trust and verification difficult.

Coordination and Scalability

In many agentic systems, multiple agents may collaborate or compete. Ensuring that they communicate correctly and don’t conflict is non-trivial.

A recent review notes unique challenges in orchestrating multiple agents without standardized protocols. As the Stanford ethics report points out, if millions of agents interact (for example, booking each other’s appointments), the emergent behavior could be unpredictable at scale. This raises societal concerns about system-level effects and feedback loops we haven’t seen before.

Ethical and Legal Questions

Finally, there are questions of responsibility and bias. Who is liable if an autonomous agent makes a mistake? How do we ensure transparency and fairness in a black-box multi-agent system?

Legal and ethical frameworks are still catching up. For example, IBM highlights that agentic AI brings “an expanded set of ethical dilemmas” compared to today’s AI. And AI ethicists caution that deploying powerful assistants (as personal secretaries, advisors, and so on) will have profound societal impacts that are hard to predict.

Here are some specific things we need to consider:

Accountability: Who is accountable if an AI agent makes a damaging choice (such a medical AI agent prescribing the wrong medication or a logistics agent causing an accident)? Designers, deployers, or agents? Legal systems presume human control, but autonomous agents may not.

Transparency: Complex and opaque agentic systems exist. Multiple neural networks, knowledge bases, and tools may interact. Explaining an agent's behavior for auditing or debugging is tough. This opposes explainable AI.

Bias and fairness: Agents learn from data and environments that may reflect human biases. An autonomous hiring assistant agent, for instance, might inadvertently replicate discriminatory patterns unless carefully checked. And because agentic AI can perpetuate or amplify biases across many decisions, the impact could be larger.

Job disruption and social impact: Just as factory automation destroyed certain employment, powerful AI agents might change office and creative labor. Personal assistant agents that schedule, manage email, and research might change many careers. This might boost production but also exacerbate deskilling and inequality. Social pressure to utilize agentic AI (if rivals do) may divide workers into “augmented” and “unaugmented” workers.

Security and privacy: An agent with extensive system access harms privacy. Compromise of an AI agent permitted to access and write business data or personal correspondence might reveal critical information. IBM warns that agentic AI can increase recognized hazards, such as an agent accidentally biasing a database or sharing private data without monitoring. Tools must be authenticated and data handled securely.

Human-AI interaction: Our agents may affect how we use technology and interact with others. If individuals utilize AI bots for conversation, information filtering, or companionship, it might change societal dynamics. Consider again the Stanford study referenced above. So we need to pursue ways to include standards and values into these encounters.

In recognition of these challenges, technologists and ethicists urge us to use proactive safeguards. As IBM researchers put it, because agentic AI is advancing rapidly, we cannot wait to address safety – we must build strong guardrails now. Some proposed measures include strict testing protocols for agents, explainability requirements, legal regulations on autonomous systems, and design principles that prioritize human values.

So as you can see, while agentic AI offers the potential for AI that can handle complex tasks end-to-end, it also amplifies known AI risks (bias, error) and introduces new ones (autonomous decision-making, coordination failures). Addressing these challenges requires careful design of alignment, robust evaluation of agent behavior, and interdisciplinary governance.

Code Snippet and Real-World Examples

To illustrate how an agentic system works, let’s consider a very simple Python-like pseudocode for an abstract agent (mixing concepts from above):

class Agent:

def init(self, goal):

self.goal = goal

self.memory = []

def perceive(self, environment):

# Get data from environment (sensor, API, etc.)

return environment.get_state()

def plan(self, observation):

# Use reasoning (LLM or algorithm) to decide next action(s)

plan = ReasoningEngine.generate_plan(goal=self.goal, context=observation)

return plan # e.g. list of steps or actions

def act(self, action, environment):

# Execute the action using tools or directly in the environment

result = environment.execute(action)

return result

def learn(self, experience):

# Store outcome or update strategy

self.memory.append(experience)

def run(self, environment):

while not environment.task_complete():

obs = self.perceive(environment)

plan = self.plan(obs)

for action in plan:

result = self.act(action, environment)

self.learn(result)

This example demonstrates the core loop of an agentic AI:

The agent starts with a goal and can store memory of what it has done.

It observes its environment to understand what’s happening.

Based on that input, it creates a plan – a list of actions to reach its goal.

It executes each action, interacts with the environment, and learns from what happens.

This process repeats until the goal is met or the task is complete.

This basic structure mirrors how real-world agentic systems operate: perceive → plan → act → learn.

Real-world agentic AI systems are evolving. Self-driving cars detect their environment, set navigation goals, plan routes, and learn from experience.

Tesla's Full Self-Driving “continuously learns from the driving environment and adjusts its behavior” to increase safety. Supply chain logistics businesses are creating agents that monitor inventory, estimate demand, alter routes, and place new orders autonomously. Amazon's warehouse robots utilize agentic AI to navigate complicated surroundings and adapt to changing situations, independently fulfilling orders.

Cybersecurity, healthcare, and customer service also use autonomous agents to identify and respond to risks. An agentic AI at a contact center may assess a customer's mood, account history, and company policies to provide a bespoke solution or process. Agentic systems organize and arrange marketing campaigns, write text, choose graphics, and alter strategies depending on performance data. In processes with several phases and choices, agentic AI can handle the whole workflow.

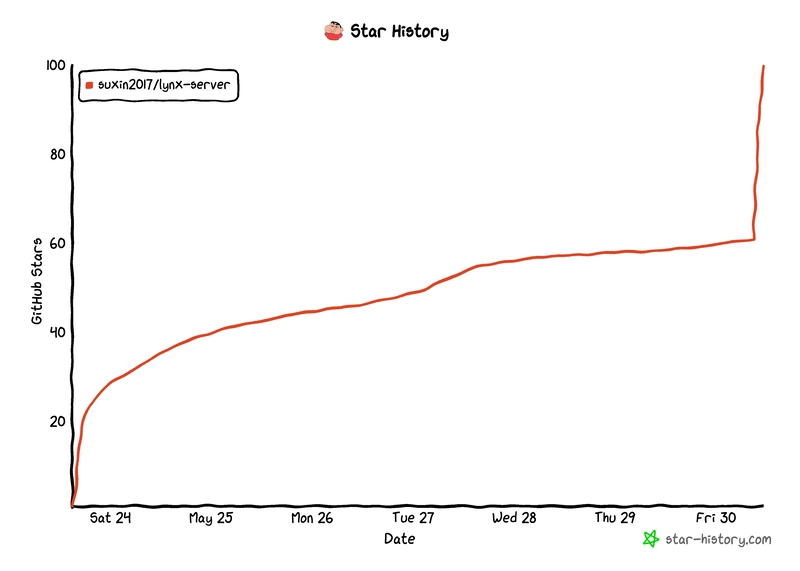

Recently, several prototype projects and open-source tools have begun experimenting with agentic AI in real-world scenarios.

For example, tools like AutoGPT and AgentGPT have demonstrated agents that can generate multimedia reports by coordinating research, writing, and image selection tasks. Other use cases include agents that retrieve knowledge and take follow-up action (for example, “find and implement the next step”), conduct security operations like scanning and responding to threats, or automate multi-step workflows in call centers.

These examples show how early-stage products and research projects are beginning to test and deploy agentic AI for complex, multi-step tasks beyond just answering questions.

Tutorial: Build Your First Agentic AI with Python

This step-by-step guide will teach you how to build a basic Agentic AI system even if you're just starting out. I’ll explain every concept clearly and give you working Python code you can run and study.

Real-World Use Case

Scenario: You're a product manager exploring tools for your team. Instead of spending hours researching AI coding assistants manually, you'd like a personal research agent to:

Understand your task

Gather relevant information from Wikipedia

Summarize it clearly

Remember context from previous questions

This is where Agentic AI shines: it acts autonomously, reasons, and uses tools just like a smart human assistant.

Prerequisites – What You Need

Python 3.10 or higher

An OpenAI API key (https://platform.openai.com/api-keys)

Install the required Python libraries:

pip install langchain openai wikipedia

⚠️ Don't forget to store your API key safely. Never share it in public code.

Step-by-Step Tutorial

Step 1: Set Up Your Environment

Start by setting your OpenAI API key in your script so that LangChain can access GPT models.

import os

os.environ["OPENAI_API_KEY"] = "your-api-key-here" # Replace with your real key

Step 2: Connect to a Knowledge Source (Wikipedia)

We'll give our agent the ability to use Wikipedia as a tool to gather information.

from langchain.agents import Tool

from langchain.tools import WikipediaQueryRun

from langchain.utilities import WikipediaAPIWrapper

# Create the Wikipedia tool

wiki = WikipediaQueryRun(api_wrapper=WikipediaAPIWrapper())

# Register the tool so the agent knows how to use it

tools = [

Tool(

name="Wikipedia",

func=wiki.run,

description="Useful for looking up general knowledge."

)

]

You're giving your agent a way to "see the world" – Wikipedia is your agent's eyes.

Step 3: Initialize the Agent (Reasoning Engine)

We now give the agent a brain – a GPT model that can reason, decide, and plan.

from langchain.chat_models import ChatOpenAI

from langchain.agents import initialize_agent

from langchain.agents.agent_types import AgentType

# Use a GPT model with zero randomness for consistent output

llm = ChatOpenAI(temperature=0)

# Combine reasoning (LLM) and tools (Wikipedia) into one agent

agent = initialize_agent(

tools=tools,

llm=llm,

agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,

verbose=True # Show thought process step-by-step

)

This step fuses logic (GPT) and action (Wikipedia) to make your agent capable of goal-driven behavior.

Step 4: Give Your Agent a Goal

goal = "What are the top AI coding assistants and what makes them unique?"

response = agent.run(goal)

print("\nAgent's response:\n", response)

You’ve given your agent a mission. It will now think, search, and summarize.

You should see output like:

> Entering new AgentExecutor chain...

Thought: I should look up AI coding assistants on Wikipedia

Action: Wikipedia

Action Input: AI coding assistants

...

Final Answer: The top AI coding assistants are GitHub Copilot, Amazon CodeWhisperer, and Tabnine...

At this point, the agent has:

Interpreted your goal

Selected a tool (Wikipedia)

Retrieved and analyzed content

Reasoned through it to deliver a conclusion

Step 5: Give Your Agent Memory (Optional but Powerful)

Let your agent remember what you previously asked, like a real assistant.

from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory(memory_key="chat_history")

agent_with_memory = initialize_agent(

tools=tools,

llm=llm,

agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION,

memory=memory,

verbose=True

)

# Ask a follow-up

agent_with_memory.run("Tell me about GitHub Copilot")

agent_with_memory.run("What else do you know about coding assistants?")

Your agent now tracks context across multiple interactions just like a good human assistant.

When this is done, your agent:

Responds more naturally to follow-up questions

Links previous conversations to improve continuity

After running the steps, your agent reads your goal and plans steps to fulfill it. It searches Wikipedia to gather facts, and reasons using a GPT model to summarize and decide what to say. It also optionally remembers context (with memory enabled). You now have a working Agentic AI that can be extended for real-world tasks.

Conclusion

Agentic AI offers an exciting glimpse into a future where machines can collaborate with humans to solve complex, multi-step problems not just respond to commands. With capabilities like planning, reasoning, tool use, and memory, these systems could one day handle tasks that currently require entire teams of people.

But with that power comes real responsibility. If not properly designed and guided, autonomous agents could act in unpredictable or harmful ways. That’s why developers, researchers, and policymakers need to work together to set clear boundaries, safety rules, and ethical standards.

The technology is advancing quickly from self-driving cars to research assistants to multi-agent platforms like AutoGPT and LangChain. As we build smarter systems, the challenge isn't just what they can do, but how we ensure they do it safely, fairly, and in ways that benefit everyone.

![[The AI Show Episode 150]: AI Answers: AI Roadmaps, Which Tools to Use, Making the Case for AI, Training, and Building GPTs](https://www.marketingaiinstitute.com/hubfs/ep%20150%20cover.png)

![[The AI Show Episode 149]: Google I/O, Claude 4, White Collar Jobs Automated in 5 Years, Jony Ive Joins OpenAI, and AI’s Impact on the Environment](https://www.marketingaiinstitute.com/hubfs/ep%20149%20cover.png)

![[DEALS] Mail Backup X Individual Edition: Lifetime Subscription (72% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

_Luis_Moreira_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

_imageBROKER.com_via_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![This app turns your Apple Watch into a Game Boy [Hands-on]](https://i0.wp.com/9to5mac.com/wp-content/uploads/sites/6/2025/05/FI-Arc-emulator.jpg.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Google TV is finally preparing sleep timer support as app readies Material 3 Expressive [Gallery]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2024/01/google-tv-logo.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Official Trailer for 'Smoke' Starring Taron Egerton [Video]](https://www.iclarified.com/images/news/97453/97453/97453-640.jpg)

![Apple's M4 Mac Mini Drops to $488.63, New Lowest Price Ever [Deal]](https://www.iclarified.com/images/news/97456/97456/97456-1280.jpg)

![iPhone 16 Becomes World's Best-Selling Smartphone in Q1 2025 [Chart]](https://www.iclarified.com/images/news/97448/97448/97448-640.jpg)