Teachers Using AI to Grade Their Students' Work Sends a Clear Message: They Don't Matter, and Will Soon Be Obsolete

Talk to a teacher lately, and you'll probably get an earful about AI's effects on student attention spans, reading comprehension, and cheating.

As AI becomes ubiquitous in everyday life — thanks to tech companies forcing it down our throats — it's probably no shocker that students are using software like ChatGPT at a nearly unprecedented scale. One study by the Digital Education Council found that nearly 86 percent of university students use some type of AI in their work.

That's causing some fed-up teachers to fight fire with fire, using AI chatbots to score their students' work. As one teacher mused on Reddit: "You are welcome to use AI. Just let me know. If you do, the AI will also grade you. You don't write it, I don't read it."

Others are embracing AI with a smile, using it to "tailor math problems to each student," in one example listed by Vice. Some go so far as to require students to use AI. One professor in Ithaca, NY, shares both ChatGPT's comments on student essays and her own, and asks her students to run their essays through AI themselves.

AI might save educators some time and precious brainpower, which arguably make up the bulk of the gig, but the tech isn't even close to being cut out for the job, according to researchers at the University of Georgia. We should probably all know by now that grading papers with AI is a bad idea, but a new study by UG's School of Computing gathered data on just how bad it is.

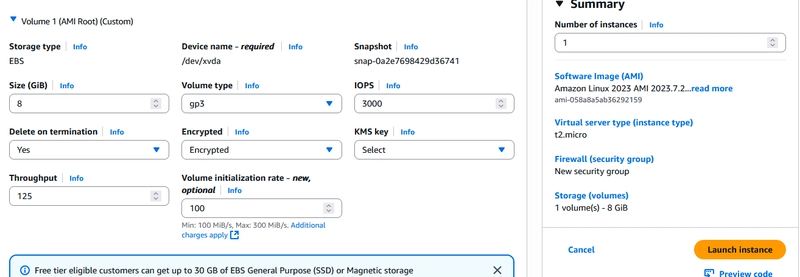

The researchers tasked the large language model (LLM) Mixtral with grading written responses to middle school homework. Rather than feeding the LLM a human-created rubric, as is usually done in these studies, the UG team had Mixtral come up with its own grading system. The results were abysmal.

Compared to a human grader, the LLM accurately graded student work just 33.5 percent of the time. Even when supplied with a human rubric, the model had an accuracy rate of just over 50 percent.

Though the LLM "graded" quickly, its scores were frequently based on flawed logic inherent to LLMs.

"While LLMs can adapt quickly to scoring tasks, they often resort to shortcuts, bypassing deeper logical reasoning expected in human grading," wrote the researchers.

"Students could mention a temperature increase, and the large language model interprets that all students understand the particles are moving faster when temperatures rise," said Xiaoming Zhai, one of the UG researchers. "But based upon the student writing, as a human, we’re not able to infer whether the students know whether the particles will move faster or not."

Though the UG researchers wrote that "incorporating high-quality analytical rubrics designed to reflect human grading logic can mitigate [the] gap and enhance LLMs’ scoring accuracy," a boost from 33.5 to 50 percent accuracy is laughable. Remember, this is the technology that's supposed to bring about a "new epoch," a technology we've poured more seed money into than any other in human history.

If there were a 50 percent chance your car would fail catastrophically on the highway, none of us would be driving. So why is it okay for teachers to take the same gamble with students?

It's just further confirmation that AI is no substitute for a living, breathing teacher, and that isn't likely to change anytime soon. In fact, there's mounting evidence that AI's comprehension abilities are getting worse as time goes on and original data becomes scarce. Recent reporting by the New York Times found that the latest generation of AI models hallucinate as much as 79 percent of the time — way up from past numbers.

When teachers choose to embrace AI, this is the technology they're foisting on their kids: notoriously inaccurate, overly eager to please, and prone to spewing outright lies. And that's before we even get into the cognitive decline that comes with regular AI use. If this is the answer to the AI cheating crisis, then maybe it'd make more sense to cut out the middleman: close the schools and let the kids go one-on-one with their artificial buddies.

The post Teachers Using AI to Grade Their Students' Work Sends a Clear Message: They Don't Matter, and Will Soon Be Obsolete appeared first on Futurism.