Meta's Llama 4 Scandal

A heartfelt apology: It feels great to be back after such a long while. With newly ignited ambition, I hope to resume my regular blogging, and I hope you guys will receive me with open arms like before and forgive me for suddenly disappearing.

With that being said, let's get into it.

(PS: I tried my best not to mix in any personal hatred towards Meta :p)

Table of Contents:

- Context

- LLaMA 4: Impressive on Paper

- The LM Arena Controversy

- The Bigger Picture: AI in the Workplace

- Conclusion

Context

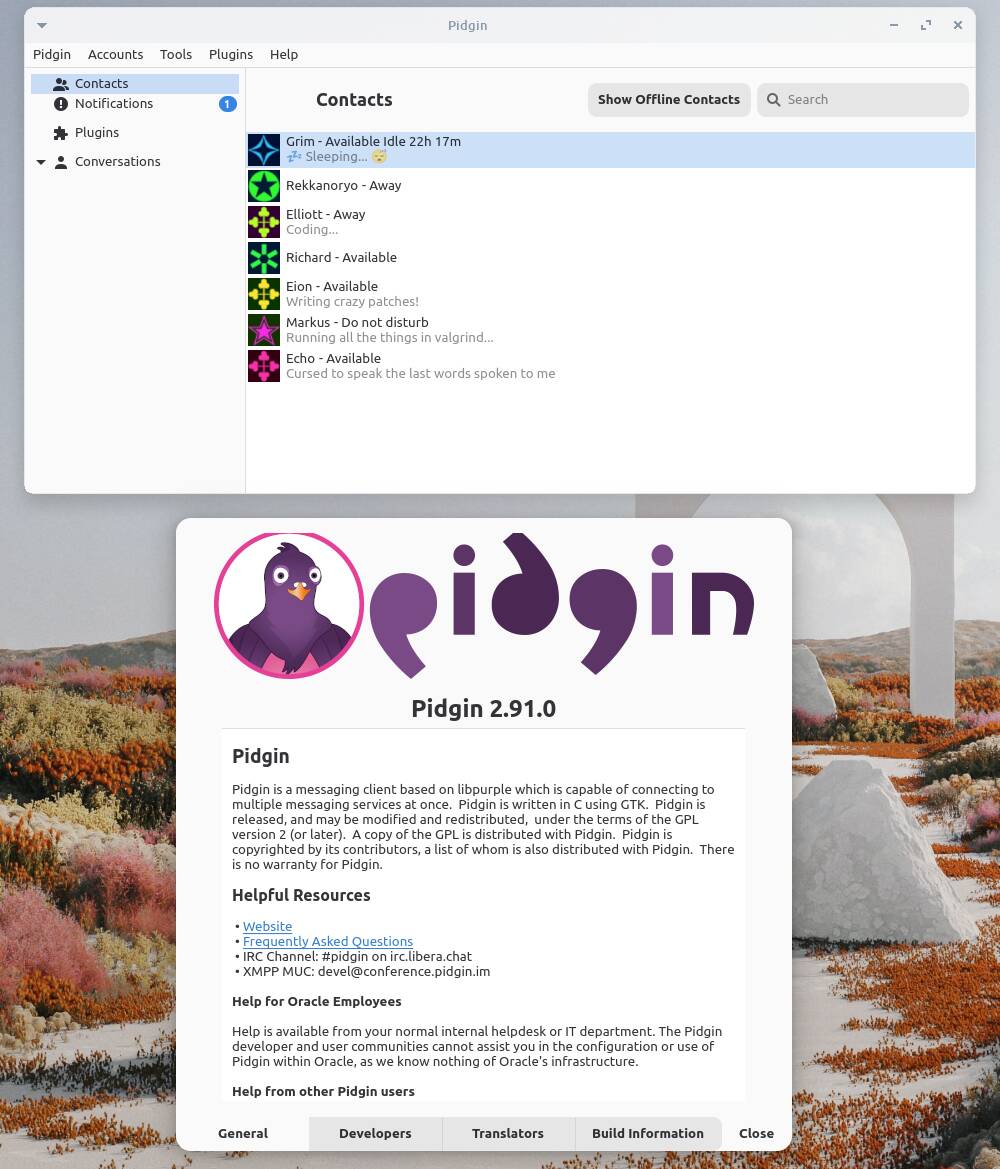

Over the weekend, Meta unveiled its latest innovation in open-weight AI: the LLaMA 4 family. Marketed as a natively multimodal, mixture-of-experts model with an astonishing 10 million-token context window (for the Scout variant), LLaMA 4 has made waves—not just for its technical specs, but for the controversy that followed.

LLaMA 4: Impressive on Paper

LLaMA 4 comes in three versions: Scout, Maverick, and Behemoth. The standout here is Scout, with a record-breaking 10 million-token context window, far ahead of competitors like Gemini 2.5 Pro, which supports 1 million. These capabilities promise exciting possibilities, especially for handling large-scale inputs like video, images, and extensive codebases. On paper, LLaMA 4 appears to be a significant step forward for open AI.

In practice, though, early user feedback paints a more mixed picture. Despite ranking near the top of the LM Arena leaderboard (second only to Gemini 2.5 Pro), many users have reported underwhelming real-world performance. The model struggled with memory requirements and large codebases, prompting questions about how useful those 10 million tokens really are outside of benchmarking.
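To get a feel for why memory becomes the bottleneck at that context length, here is a rough back-of-the-envelope sketch of how a transformer's key/value cache grows with the number of tokens. The layer count, KV-head count, and head dimension below are hypothetical placeholders I picked for illustration, not LLaMA 4's published configuration, and the `kv_cache_bytes` helper is my own.

```python
def kv_cache_bytes(num_tokens, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Estimate KV-cache size for one sequence.

    Each token stores one key vector and one value vector (hence the factor 2)
    per layer and per KV head; bytes_per_value=2 assumes 16-bit storage.
    """
    return 2 * num_tokens * num_layers * num_kv_heads * head_dim * bytes_per_value


# Hypothetical architecture values, chosen only to show the scale of the problem.
cache = kv_cache_bytes(
    num_tokens=10_000_000,
    num_layers=48,
    num_kv_heads=8,
    head_dim=128,
)
print(f"{cache / 1e12:.2f} TB")  # 1.97 TB under these assumed values
```

Even if the real numbers differ, the cache grows linearly with the token count, so filling a 10 million-token window is out of reach for most setups without heavy optimization.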

The LM Arena Controversy

LM Arena, known for crowdsourced model comparisons where real users vote on quality in head-to-head chats, became the center of controversy when it was revealed that the model Meta submitted wasn't a pure open-weight release. Instead, it was a fine-tuned version of LLaMA 4, specifically trained to perform well in human preference battles—a move that some saw as undermining the spirit of the leaderboard.

LM Arena responded with a pointed statement, saying:

"Meta's interpretation of our policy did not match what we expect from model providers."

The wider community reaction was blunter: LLaMA 4 looks amazing on paper, but for some reason it's not passing the vibe check.

This raised concerns about fairness, transparency, and whether benchmark gaming is becoming a systemic issue in AI evaluation.
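For anyone wondering how head-to-head votes turn into a leaderboard position in the first place, here is a minimal sketch using a plain Elo-style update. This is not LM Arena's actual scoring code; the Elo formula, the starting rating of 1000, the `k=32` step size, and the model names are all assumptions made for illustration.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update(rating_a, rating_b, a_won, k=32):
    """Return both ratings after a single head-to-head vote."""
    delta = k * ((1.0 if a_won else 0.0) - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


# Hypothetical models and votes: (model A, model B, did A win?)
ratings = {"model_x": 1000.0, "model_y": 1000.0}
votes = [
    ("model_x", "model_y", True),
    ("model_x", "model_y", True),
    ("model_x", "model_y", False),
    ("model_x", "model_y", True),
]

for a, b, a_won in votes:
    ratings[a], ratings[b] = update(ratings[a], ratings[b], a_won)

print(ratings)
```

The takeaway: the ranking only measures which answer voters prefer. A variant tuned specifically to win those votes can climb the board without being the same model the public actually downloads.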

The Bigger Picture: AI in the Workplace

While the LLaMA 4 situation unfolded, another major tech story emerged: a leaked memo from Shopify’s CEO revealed an “AI-first” directive. Before requesting more staff, teams must now justify why tasks can’t be completed with AI. The memo underscored a growing sentiment across industries: AI is not just a tool—it's a strategic necessity.

This shift reflects a broader truth: regardless of benchmark drama, companies are increasingly exploring AI not as a novelty, but as a workforce multiplier. As AI continues to mature, transparent deployment, performance reliability, and ethical considerations will matter just as much as flashy specs.

Conclusion

Despite the leaderboard bump and some valid criticisms, LLaMA 4 remains a meaningful contribution to the open-weight AI ecosystem. It brings powerful capabilities closer to public access, even if it stumbles in real-world applications. The recent events serve as a reminder: as AI models grow more complex, maintaining trust in how they’re evaluated and presented is just as important as the models themselves.

Open doesn't always mean perfect, but it does mean accountable. And in an AI landscape shaped increasingly by closed models and corporate secrecy, that accountability still matters.
