How to Set Up AWS EFS Static Provisioning Across Multiple Kubernetes Namespaces

Bitnami PostgreSQL is a widely used container image that runs as a non-root user by default. That is good for security, but it complicates persistent storage, especially storage shared between environments such as dev and test. In this post, I'll walk through how I used AWS EFS static provisioning to share storage between two namespaces running Bitnami PostgreSQL on Kubernetes.

Why Static Provisioning?

Dynamic provisioning is convenient, but static provisioning gives you direct control: you create each PersistentVolume (PV) by hand and point it at a specific AWS EFS file system or access point. That suits setups where several environments (e.g., dev and test) need the same storage. Static provisioning offers:

- Full control over PersistentVolume (PV) setup.

- A way to reuse the same EFS volume across different namespaces.

- Simpler debugging for permission or access issues.

- No need to define a StorageClass for EFS.

What We’re Building

A PostgreSQL setup running in two separate namespaces, dev and test:

- Both environments mount the same EFS volume.

- PostgreSQL data is shared between them.

Prerequisites

Before you begin:

- A running Kubernetes cluster (K3s, EKS, etc.)

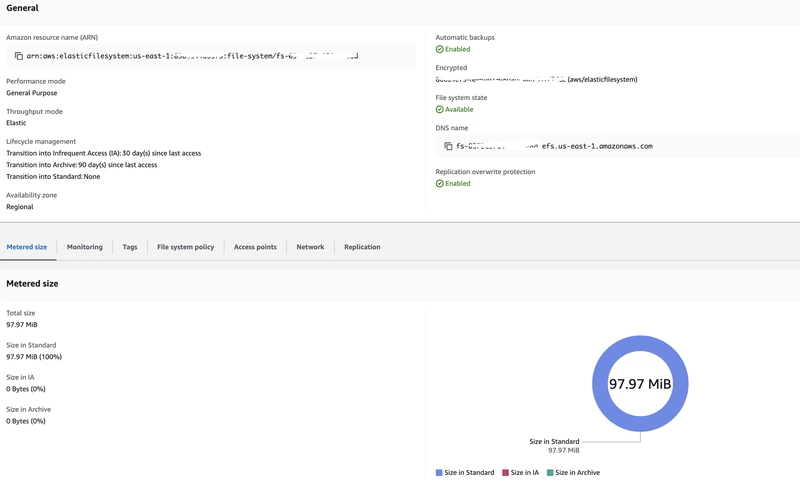

- An AWS EFS file system already created

Project Structure

Your repo should look like this:

    deployment-files/
    ├── deployment-dev/
    │   └── pv-dev.yml, pvc-dev.yml, postgres.yml
    └── deployment-test/
        └── pv-test.yml, pvc-test.yml, postgres*.yml

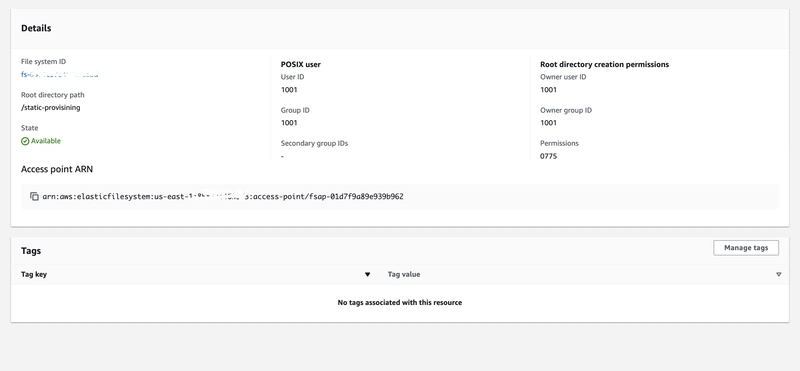

Step 1: Create EFS Access Point

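The Bitnami PostgreSQL container runs as UID 1001 by default, so the files on EFS must be owned by that user. To prevent permission issues when mounting EFS across namespaces, create an Access Point from the AWS Console with: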

- User ID: 1001

- Group ID: 1001

- Permissions: 0775
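If you prefer to keep the access point in code rather than create it in the Console, the same settings can be expressed as a CloudFormation template. This is only a sketch under assumptions: the root directory path /postgres, the logical resource name, and the FileSystemId parameter are hypothetical and not part of the original setup.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Parameters:
  FileSystemId:
    Type: String
    Description: ID of the existing EFS file system
Resources:
  PostgresAccessPoint:              # hypothetical logical name
    Type: AWS::EFS::AccessPoint
    Properties:
      FileSystemId: !Ref FileSystemId
      PosixUser:                    # identity enforced for every client of this access point
        Uid: "1001"
        Gid: "1001"
      RootDirectory:
        Path: /postgres             # assumed path; created on first use if missing
        CreationInfo:
          OwnerUid: "1001"
          OwnerGid: "1001"
          Permissions: "0775"
Outputs:
  AccessPointId:
    Value: !Ref PostgresAccessPoint # the access point ID used later in volumeHandle
```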

Next, install the EFS CSI driver in your cluster:

kubectl apply -k "github.com/kubernetes-sigs/aws-efs-csi-driver/deploy/kubernetes/overlays/stable/?ref=release-1.7"

Alternatively, you can install the EFS CSI driver with Helm.

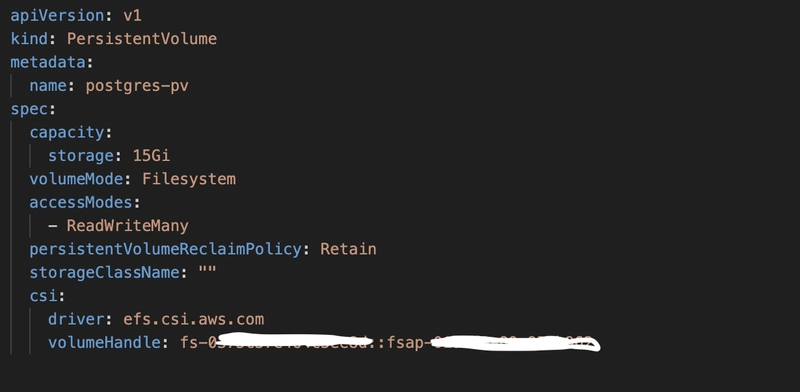

Step 2: Define the PV and PVC

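Set the PV's volumeHandle to the file system ID and the access point ID, separated by a double colon (the empty field between the two colons is the optional subpath):

    volumeHandle: fs-<file-system-id>::fsap-<access-point-id>

Set storageClassName to an empty string ("") so that no StorageClass is involved.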

pv-dev.yml:
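A minimal sketch of what pv-dev.yml could look like, assuming the EFS CSI driver is installed. The PV name, the 5Gi capacity, and the Retain reclaim policy are illustrative assumptions, and the volumeHandle placeholders must be replaced with your own IDs.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: postgres-pv-dev            # hypothetical name
spec:
  capacity:
    storage: 5Gi                   # required by Kubernetes; EFS itself does not enforce it
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain   # keep the data when the claim is deleted
  storageClassName: ""             # empty string: static provisioning, no StorageClass
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-<file-system-id>::fsap-<access-point-id>
```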

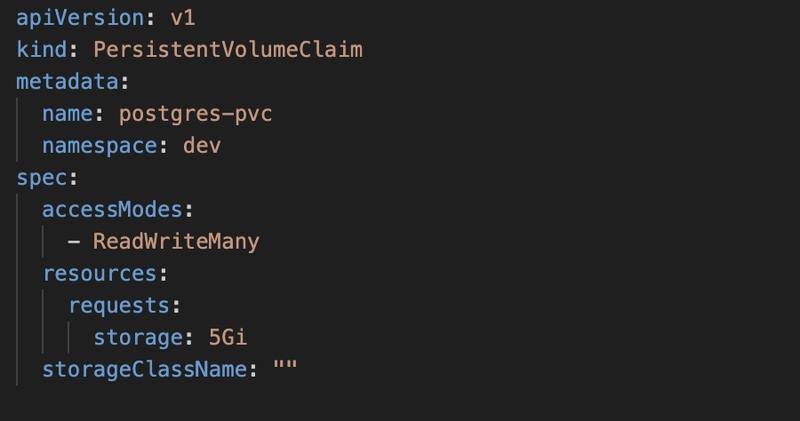

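In the PVC, also set storageClassName to an empty string (""). If the field is omitted and the cluster has a default StorageClass, that class is assigned to the claim and it will not bind to the static PV.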

pvc-dev.yml:
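A matching claim might look like the sketch below. The claim name is hypothetical, and volumeName is assumed to be the name you gave the dev PV (postgres-pv-dev here); setting it pins the claim to that specific PV instead of letting Kubernetes pick any compatible one.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-pvc-dev           # hypothetical name
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""             # must match the PV: empty string, not omitted
  volumeName: postgres-pv-dev      # assumed PV name; binds this claim to that PV only
  resources:
    requests:
      storage: 5Gi                 # must not exceed the PV's declared capacity
```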

Create a matching PV and PVC for the test namespace. A PV is cluster-scoped and binds to exactly one PVC, so the test environment needs its own PV (pv-test.yml) even though it points to the same EFS access point.
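For the test namespace, the pair could be sketched as follows, shown here as one multi-document listing although the project keeps them in pv-test.yml and pvc-test.yml. All names and the 5Gi size are assumptions; the only part that must stay identical to the dev PV is the volumeHandle.

```yaml
# pv-test.yml: a second PV pointing at the same access point
apiVersion: v1
kind: PersistentVolume
metadata:
  name: postgres-pv-test           # hypothetical name; must differ from the dev PV
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-<file-system-id>::fsap-<access-point-id>   # same IDs as in the dev PV
---
# pvc-test.yml: the claim in the test namespace
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-pvc-test          # hypothetical name
  namespace: test
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  volumeName: postgres-pv-test     # pins the claim to the test PV
  resources:
    requests:
      storage: 5Gi
```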

Step 3: Configure PostgreSQL Deployment

Make sure the deployment sets fsGroup: 1001 in its pod-level securityContext so that the pod's group matches the EFS Access Point permissions:

    securityContext:
      fsGroup: 1001
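To show where that setting sits, here is a rough sketch of a postgres.yml Deployment for the dev namespace. The resource names, labels, image tag, and the Secret holding the password are all hypothetical; the claimName is assumed to be whatever you named the dev PVC. /bitnami/postgresql is the data path used by the Bitnami image.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres                   # hypothetical name
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      securityContext:
        fsGroup: 1001              # pod-level: matches the access point's group ID
      containers:
        - name: postgres
          image: bitnami/postgresql:latest   # pin a specific tag in practice
          env:
            - name: POSTGRESQL_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres-secret      # hypothetical Secret, created separately
                  key: password
          ports:
            - containerPort: 5432
          volumeMounts:
            - name: data
              mountPath: /bitnami/postgresql # Bitnami's persistence directory
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: postgres-pvc-dev      # assumed name of the dev PVC
```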

Step 4: Deploy Namespaces

Deploy to dev:

kubectl create namespace dev

kubectl apply -f deployment-files/deployment-dev/ -n dev

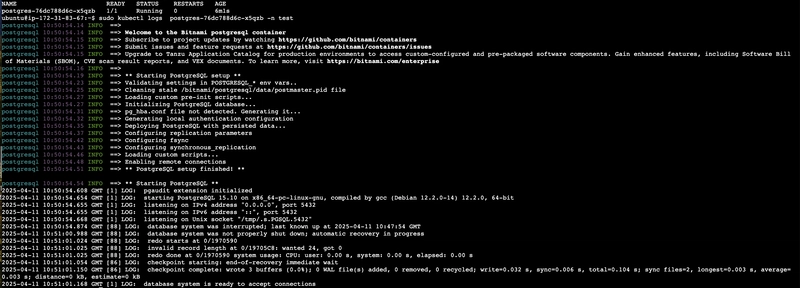

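Run kubectl get pv and kubectl get pvc -n dev to verify that the PostgreSQL PV and PVC show a status of Bound, then run kubectl get pods -n dev and check the pod logs to confirm that the postgres pod is running.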

Deploy to test:

kubectl create namespace test

kubectl apply -f deployment-files/deployment-test/ -n test

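As before, run kubectl get pv and kubectl get pvc -n test to verify that the PV and PVC are Bound, then run kubectl get pods -n test and check the pod logs to confirm that the postgres pod is running.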

Outcome

You now have a shared EFS volume accessed by PostgreSQL pods running in different namespaces.
