How I Built an AI-Powered Medical Diagnostic Tool


My Story: From Patient to Builder

Before I dive into the technology, let me share a bit of my own journey, because this project is deeply personal.

For as long as I can remember, I’ve been on the patient side of healthcare. During my four years of college, I battled typhoid not once, but four separate times. As if that wasn’t enough, I also endured a grueling 1.5-year fight with tuberculosis. My medical journey didn’t stop there: I’ve had inguinal hernia surgery twice, once on one side when I was in 9th class, and again on the other side in 2024.

These experiences gave me a unique perspective. I know what it’s like to wait anxiously for test results, to feel confused by medical jargon, and to wish for clear, fast, and reliable answers. That’s why I set out to build a tool that could help patients and doctors alike by making diagnostics more accessible, understandable, and empowering for everyone.

Introduction

Imagine a world where anyone (doctors, researchers, or even patients) can upload a medical image or report and instantly receive a detailed, AI-powered analysis. That's the vision behind my Medical Diagnostic Tool, a project that brings together deep learning, natural language processing, and a user-friendly web interface to make advanced diagnostics accessible to everyone.

In this post, I'll walk you through how I built this tool, the technology behind it, and how you can use or extend it for your own needs. Whether you're a developer, a healthcare professional, or just curious about AI in medicine, you'll find practical insights, code snippets, and visual diagrams to make everything clear, even if you're a complete beginner.

Why Build a Medical Diagnostic Tool?

Healthcare is full of data: X-rays, CT scans, lab reports, and more. But interpreting this data quickly and accurately is a huge challenge, especially in busy clinics or underserved areas. AI can help bridge this gap by:

- Speeding up diagnosis

- Reducing human error

- Providing a "second opinion" for clinicians

- Empowering patients with understandable results

My goal was to create a tool that's not just powerful, but also easy to use and open for anyone to improve.

System Overview: How Does It All Work?

Here's a high-level look at the system:

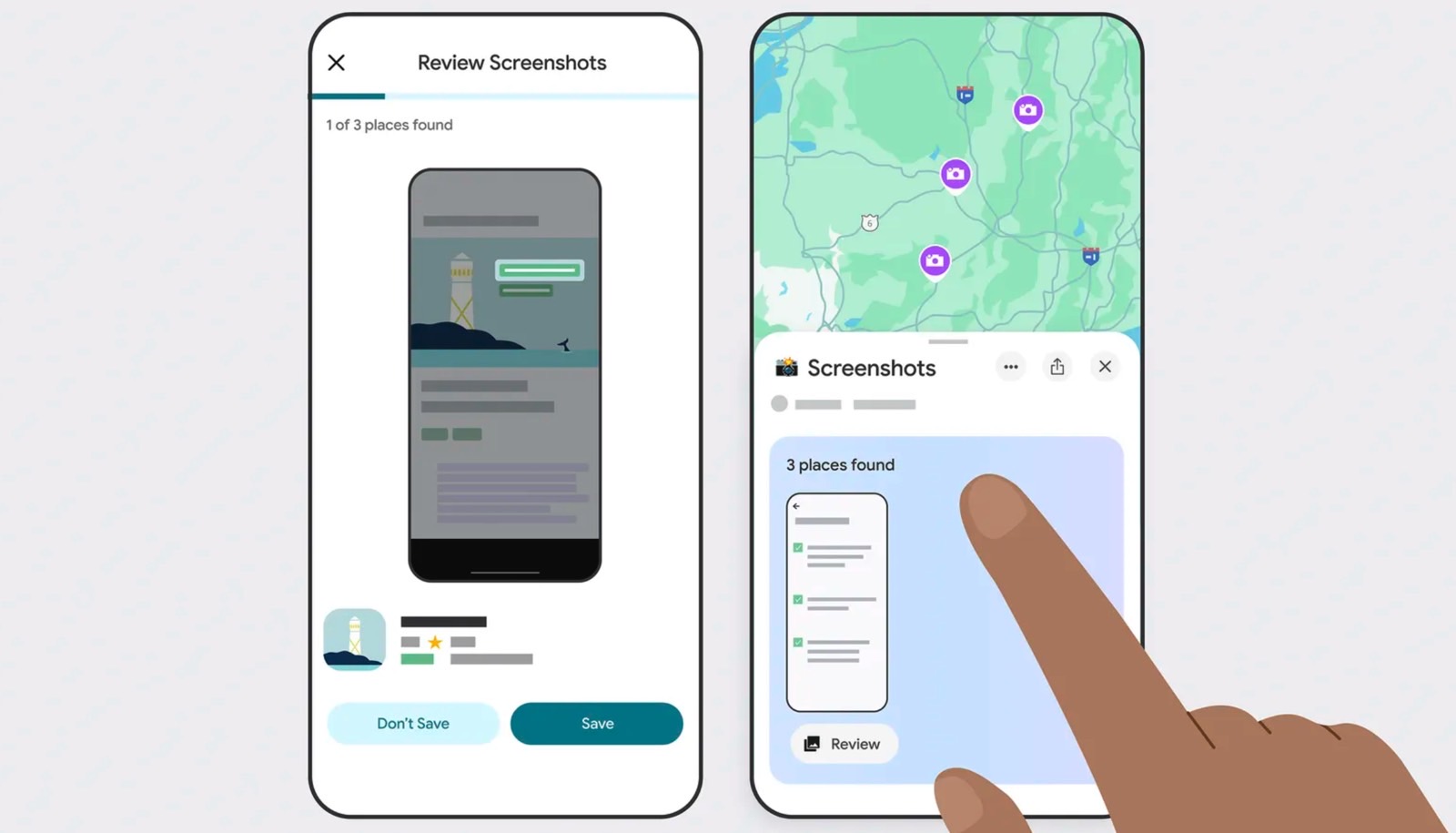

- Frontend: Clean, mobile-first React app for uploading files and viewing results.

- Backend: FastAPI server that routes requests to the right analysis pipeline.

- Imaging Pipeline: Deep learning models for X-rays, CT, MRI, and ultrasound.

- Text Pipeline: NLP models for extracting info from medical reports and lab results.

- Results: Structured findings, confidence scores, and visual explanations.
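To make the routing step concrete, here is a minimal sketch of how a backend could hand each upload to a pipeline. It assumes routing by file extension, and the names `run_imaging_pipeline`, `run_text_pipeline`, `PIPELINES`, and `route_request` are hypothetical stand-ins rather than the tool's actual functions.

```python
from pathlib import Path
from typing import Callable, Dict


# Hypothetical stand-ins for the real imaging and text pipelines.
def run_imaging_pipeline(path: str) -> dict:
    return {"pipeline": "imaging", "file": path}


def run_text_pipeline(path: str) -> dict:
    return {"pipeline": "text", "file": path}


# Assumed mapping from file extension to pipeline. A real service may
# inspect the file contents instead of trusting the extension.
PIPELINES: Dict[str, Callable[[str], dict]] = {
    ".png": run_imaging_pipeline,
    ".jpg": run_imaging_pipeline,
    ".jpeg": run_imaging_pipeline,
    ".dcm": run_imaging_pipeline,
    ".pdf": run_text_pipeline,
    ".txt": run_text_pipeline,
}


def route_request(path: str) -> dict:
    """Send an uploaded file to the pipeline registered for its extension."""
    suffix = Path(path).suffix.lower()
    handler = PIPELINES.get(suffix)
    if handler is None:
        raise ValueError(f"Unsupported file type: {suffix or 'none'}")
    return handler(path)
```

Keeping the mapping in one dictionary means a new pipeline can be added by registering one more entry, without touching the routing logic.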

Step-by-Step: From Upload to Diagnosis

1. Uploading Your Data

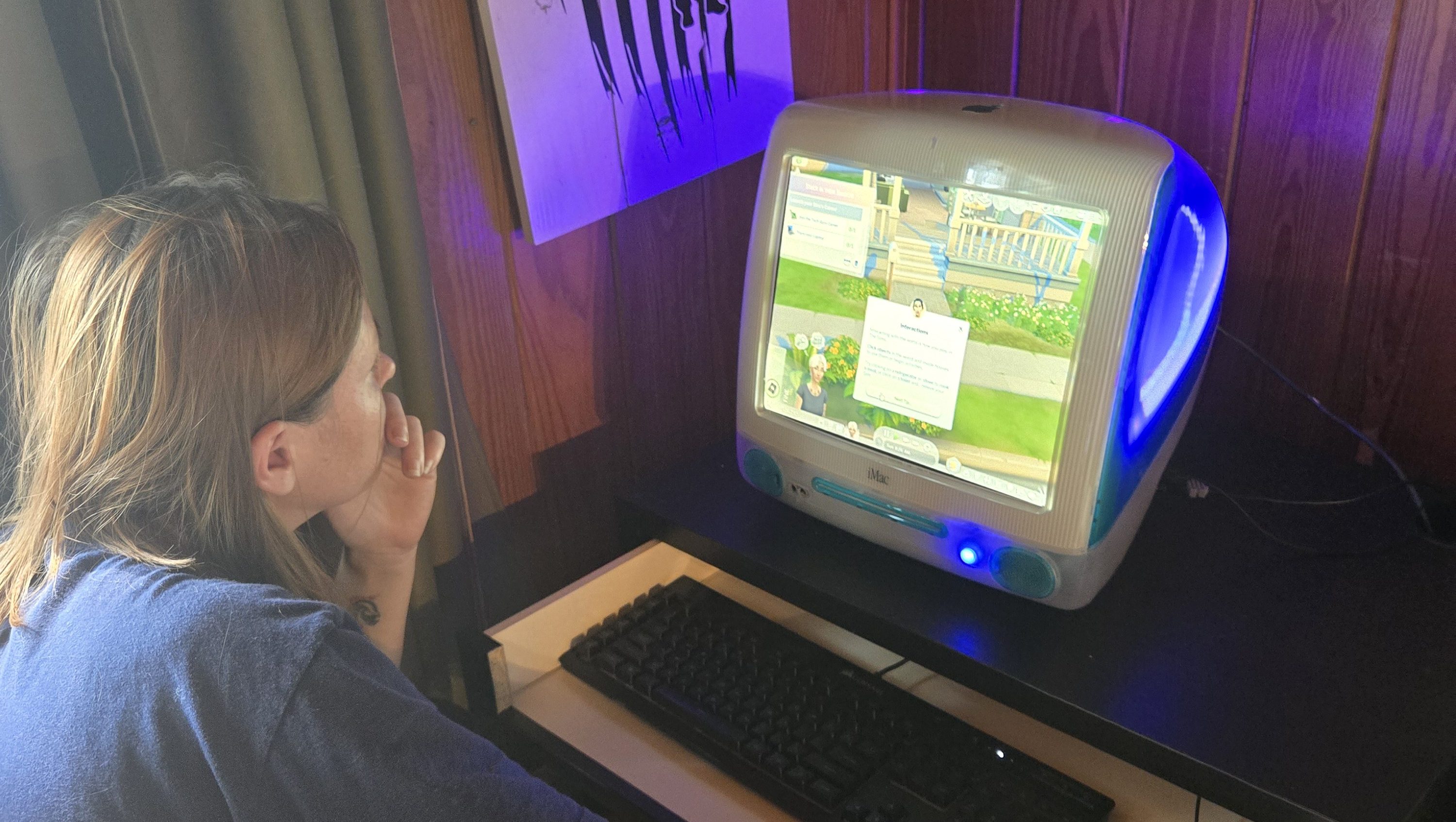

Users can upload images (like X-rays or MRIs) or PDF reports. The interface is designed to be as simple as possible: just drag, drop, or snap a photo with your phone.

Mobile-first design ensures it works great on any device.

2. Smart Preprocessing

The backend automatically detects the type of file and its medical modality (e.g., X-ray vs. MRI). Images are resized, normalized, and prepared for the AI models. PDFs are scanned for text using OCR.
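One common way to detect the file type is to look at the first few bytes of the upload instead of trusting the filename. The sketch below shows that idea using well-known file signatures; the function name `detect_file_kind` is hypothetical, and it does not attempt the harder step of telling modalities apart (for DICOM files, the modality is normally read from the file's metadata).

```python
def detect_file_kind(data: bytes) -> str:
    """Classify raw upload bytes by their leading signature."""
    if data.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    if data.startswith(b"\xff\xd8\xff"):
        return "jpeg"
    if data.startswith(b"%PDF-"):
        return "pdf"
    # DICOM files carry a 128-byte preamble followed by the ASCII tag "DICM".
    if len(data) >= 132 and data[128:132] == b"DICM":
        return "dicom"
    return "unknown"
```

In practice only the first 132 bytes of an upload need to be read for this check, so it can run before the full file is stored.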

3. AI Analysis

- Imaging: The tool uses pre-trained deep learning models (DenseNet, EfficientNet, ResNet) fine-tuned on medical datasets. It can spot abnormalities, highlight them with heatmaps, and estimate confidence.

- Text: For reports, the system uses advanced NLP (like BioClinicalBERT) to extract diagnoses, lab values, and recommendations. It even flags abnormal lab results based on reference ranges.
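To give a feel for where confidence scores can come from, here is a small sketch. It assumes the imaging model emits one raw score (logit) per condition and that conditions are scored independently with a sigmoid, which is a common setup for multi-label chest X-ray models but not necessarily what this tool does. The function names and the 0.5 threshold are illustrative assumptions.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def logits_to_confidences(logits: List[float], labels: List[str]) -> Dict[str, float]:
    """Map each raw score to an independent probability in [0, 1]."""
    if len(logits) != len(labels):
        raise ValueError("Need exactly one logit per label")
    return {label: sigmoid(z) for label, z in zip(labels, logits)}


def flag_findings(confidences: Dict[str, float], threshold: float = 0.5) -> List[Tuple[str, float]]:
    """Return (label, confidence) pairs at or above the threshold, highest first."""
    hits = [(label, p) for label, p in confidences.items() if p >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)
```

A threshold chosen per condition on a validation set would usually be a better fit than a single global cutoff.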

4. Results & Visualization

Results are shown in a clear, structured format:

- Primary Findings: Most likely diagnoses, with confidence scores.

- Secondary Findings: Other possible issues or notes.

- Differential Diagnosis: Ranked list of possible conditions.

- Heatmaps: Visual overlays on images to show areas of concern.

- Lab Value Flags: Highlights abnormal results in red.

All results are explained in plain language, so anyone can understand them.
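A structured result like this could be modeled roughly as follows. This sketch uses standard-library dataclasses to stay self-contained (the backend itself uses Pydantic), and the class names, field names, and the 0.7 cutoff between primary and secondary findings are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Finding:
    label: str
    confidence: float  # expected to lie between 0.0 and 1.0


@dataclass
class DiagnosticResult:
    primary: List[Finding] = field(default_factory=list)
    secondary: List[Finding] = field(default_factory=list)
    differential: List[Finding] = field(default_factory=list)


def build_result(findings: List[Finding], primary_cutoff: float = 0.7) -> DiagnosticResult:
    """Rank findings by confidence and split them at an assumed cutoff."""
    ranked = sorted(findings, key=lambda f: f.confidence, reverse=True)
    return DiagnosticResult(
        primary=[f for f in ranked if f.confidence >= primary_cutoff],
        secondary=[f for f in ranked if f.confidence < primary_cutoff],
        differential=ranked,  # full ranked list of candidate conditions
    )
```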

Under the Hood: Key Technologies

Backend (FastAPI + Python)

- FastAPI for blazing-fast, async APIs.

- Pydantic for data validation.

- Torch, torchvision, timm for deep learning.

- Transformers for NLP.

- PIL, OpenCV, PyMuPDF for image and PDF processing.

Example: Loading an X-ray Model

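```python
import timm
import torch

# Start from ImageNet weights, then swap the head for 14 chest conditions
model = timm.create_model('densenet121', pretrained=True)
model.classifier = torch.nn.Linear(model.classifier.in_features, 14)

# Load the fine-tuned weights; map_location keeps this working on CPU-only machines
state_dict = torch.load('models/weights/chexpert_densenet121.pt', map_location='cpu')
model.load_state_dict(state_dict)

model.eval()  # inference mode: disables dropout and batch-norm updates
```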

Imaging Pipeline

- Handles X-ray, CT, MRI, and ultrasound.

- Uses transfer learning and custom fine-tuning.

- Generates heatmaps for explainability.

- Estimates uncertainty with Monte Carlo dropout.
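The idea behind Monte Carlo dropout is to keep dropout active at inference time, run the same input through the model several times, and treat the spread of the predictions as an uncertainty estimate. Here is a framework-agnostic sketch of that loop. The names `mc_dropout_estimate` and `make_toy_forward` are hypothetical, and the toy forward pass is a stand-in for a real network; in PyTorch the usual equivalent is to leave the model in eval mode while switching only its dropout layers back to training mode.

```python
import random
import statistics
from typing import Callable, List, Tuple


def mc_dropout_estimate(stochastic_forward: Callable[[], float],
                        n_passes: int = 30) -> Tuple[float, float]:
    """Run repeated stochastic forward passes; return (mean, std) of the outputs."""
    if n_passes < 2:
        raise ValueError("Need at least two passes to estimate spread")
    samples = [stochastic_forward() for _ in range(n_passes)]
    return statistics.mean(samples), statistics.stdev(samples)


def make_toy_forward(inputs: List[float], weights: List[float],
                     drop_prob: float = 0.5, seed: int = 0) -> Callable[[], float]:
    """Toy one-layer 'network' whose dropout stays on at inference time."""
    rng = random.Random(seed)
    keep = 1.0 - drop_prob

    def forward() -> float:
        total = 0.0
        for x, w in zip(inputs, weights):
            if rng.random() < keep:          # unit survives this pass
                total += (x * w) / keep      # inverted-dropout scaling
        return total

    return forward
```

A large standard deviation relative to the mean suggests the prediction is unstable and may deserve a closer look from a clinician.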

Example: Generating a Heatmap

```python
import torch

def generate_heatmap(self, image, class_idx, original_image):
    """Grad-CAM heatmap for one class (a method of the imaging pipeline class)."""
    # Forward pass; hooks on the last conv layer record its activations
    output = model(image)

    # Backpropagate the score of the target class only
    model.zero_grad()
    output[0, class_idx].backward(retain_graph=True)

    # Gradients and activations captured at the last convolutional layer
    gradients = self.get_activation_gradients()
    activations = self.get_activations().detach()

    # Average gradients over batch and spatial dims: one weight per channel
    weights = torch.mean(gradients, dim=[0, 2, 3])

    # Weighted sum of the activation maps across channels
    cam = (weights[:, None, None] * activations[0]).sum(dim=0)

    # Apply ReLU and scale to [0, 1]; the epsilon avoids division by zero
    cam = torch.relu(cam)
    cam = cam / (cam.max() + 1e-8)

    # Resize and overlay on the original (unnormalized) image
    return self.overlay_heatmap(cam.cpu().numpy(), original_image)
```

Text Pipeline

- Extracts text from PDFs with OCR.

- Named Entity Recognition (NER) for diagnoses, labs, and more.

- Classifies report types (radiology, pathology, labs).

- Flags abnormal lab values.
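To illustrate what classifying report types means in practice, here is a deliberately simple keyword-count baseline. It is not the model-based classifier the tool uses; the keyword lists and the name `classify_report` are assumptions made up for this sketch.

```python
import re
from typing import Dict, List

# Assumed keyword lists; a trained classifier would learn these signals instead.
REPORT_KEYWORDS: Dict[str, List[str]] = {
    "radiology": ["x-ray", "radiograph", "ct", "mri", "ultrasound", "impression"],
    "pathology": ["biopsy", "specimen", "histology", "margins", "microscopic"],
    "labs": ["hemoglobin", "wbc", "platelets", "glucose", "creatinine"],
}


def classify_report(text: str) -> str:
    """Pick the report type whose keywords appear most often in the text."""
    words = re.findall(r"[a-z][a-z\-]*", text.lower())
    counts = {
        kind: sum(words.count(keyword) for keyword in keywords)
        for kind, keywords in REPORT_KEYWORDS.items()
    }
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else "unknown"
```

A baseline like this is mainly useful as a sanity check against which a learned classifier can be compared.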

Example: Lab Value Extraction

```python
# Illustrative adult reference ranges; real ranges vary by lab, age, and sex
reference_ranges = {
    "hemoglobin": {"unit": "g/dL", "low": 12.0, "high": 16.0},
    "wbc": {"unit": "K/µL", "low": 4.5, "high": 11.0},
    "platelets": {"unit": "K/µL", "low": 150, "high": 450},
    "glucose": {"unit": "mg/dL", "low": 70, "high": 100},
    "creatinine": {"unit": "mg/dL", "low": 0.6, "high": 1.2}
}

def is_abnormal(lab_name, value):
    """Return True when value falls outside the reference range for lab_name."""
    range_info = reference_ranges.get(lab_name.lower())
    if range_info is None:
        return False  # unknown lab: nothing to compare against
    return value < range_info["low"] or value > range_info["high"]
```
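Before a value can be checked against a range, it has to be pulled out of the report text. The sketch below shows one way to do that with a regular expression, assuming each lab result sits on its own line in a form like `Hemoglobin: 10.2 g/dL`. The names `RANGES`, `LAB_LINE`, `parse_lab_line`, and `flag_labs` are hypothetical, and the two ranges are a small illustrative subset.

```python
import re
from typing import List, Optional, Tuple

# Small illustrative subset; real ranges vary by lab, age, and sex.
RANGES = {
    "hemoglobin": (12.0, 16.0),
    "glucose": (70.0, 100.0),
}

# A lab name, an optional ":" or "=", then a number such as 10.2 or 130.
LAB_LINE = re.compile(r"(?P<name>[A-Za-z]+)\s*[:=]?\s*(?P<value>\d+(?:\.\d+)?)")


def parse_lab_line(line: str) -> Optional[Tuple[str, float]]:
    """Extract (lab name, numeric value) from one line, or None if absent."""
    match = LAB_LINE.search(line)
    if match is None:
        return None
    return match.group("name").lower(), float(match.group("value"))


def flag_labs(report_text: str) -> List[Tuple[str, float, str]]:
    """Return (name, value, status) for every known lab found in the text."""
    flags = []
    for line in report_text.splitlines():
        parsed = parse_lab_line(line)
        if parsed is None or parsed[0] not in RANGES:
            continue  # skip free text and labs without a known range
        name, value = parsed
        low, high = RANGES[name]
        status = "low" if value < low else "high" if value > high else "normal"
        flags.append((name, value, status))
    return flags
```

Reporting the direction (low or high) rather than a bare abnormal flag gives the results view enough information to explain the finding in plain language.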

Frontend (React + Tailwind CSS)

- Drag-and-drop file upload

- Progress bars and loading states

- Responsive, touch-friendly UI

- Results displayed with clear headings, tables, and color-coded highlights

Real-World Use Cases

For Clinicians

- Get a quick "second opinion" on tricky cases

- Screen large patient populations efficiently

- Standardize interpretation across different providers

- Prioritize urgent cases through automated triage

For Researchers

- Analyze large imaging datasets quickly

- Create annotated training materials

- Benchmark against expert diagnoses

- Generate standardized case presentations

For Patients

- Better understand your medical images and reports

- Get context for interpreting test results

- Access educational content related to your condition

- Have documentation for seeking second opinions

How to Get Started

1. Install

```bash
cd medical-diagnostic-tool
pip install -r requirements.txt
npm install
```

2. Run the Backend

```bash
cd medical_service
uvicorn main:app --reload
```

3. Run the Frontend

```bash
cd ..
npm start
```

4. Try the API

You can also use the REST API directly:

```javascript
// Example JavaScript integration
async function analyzeMedicalImage(imageFile) {
  const formData = new FormData();
  formData.append('file', imageFile);
  formData.append('file_type', 'image');
  formData.append('analysis_type', 'comprehensive');

  const response = await fetch('https://your-api-endpoint/analyze', {
    method: 'POST',
    body: formData
  });

  // fetch resolves even on HTTP errors, so check the status explicitly
  if (!response.ok) {
    throw new Error(`Analysis request failed: ${response.status}`);
  }
  return await response.json();
}
```

Customization & Extensibility

- Add new models: Drop your own PyTorch weights in the `models/weights` folder.

- Change reference ranges: Edit the `reference_ranges` dictionary in the text pipeline.

- Integrate with hospital systems: The API is ready for integration with EMR/EHR platforms.

- Translate the UI: The frontend supports multiple languages.

What's Next?

Model Improvements

- Integration of Vision Transformer architectures

- Support for 3D volumes (CT/MRI series)

- Fine-tuning on specialized datasets

Better Explainability

- More advanced visualization techniques

- Natural language explanations of findings

- Links to relevant clinical guidelines

Integration Capabilities

- DICOM compatibility for medical imaging

- FHIR-compliant data exchange

- EMR/EHR system plugins

Final Thoughts

AI won't replace doctors—but it can make their work faster, more accurate, and more accessible. My hope is that this tool inspires others to build, share, and improve open medical AI for everyone.

With the right approach, AI can democratize access to medical diagnostics, helping clinicians make better decisions and patients understand their health better.

Disclaimer: This tool is for research and educational purposes only. It should not be used as the sole basis for clinical decisions without professional oversight.

If you found this helpful, please share or leave a comment!
