From Beginner to Pro: Docker + Terraform for Scalable AI Agents

Introduction

As AI and machine learning workloads grow more complex, developers and DevOps engineers are looking for reliable, reproducible, and scalable ways to deploy them. While tools like Docker and Terraform are widely known, many developers haven’t yet fully unlocked their combined potential, especially when it comes to deploying AI agents or LLMs across cloud or hybrid environments.

This guide walks you through the journey from Docker and Terraform basics to building scalable infrastructure for modern AI/ML systems.

Whether you’re a beginner trying to get your first container up and running or an expert deploying multi-agent LLM setups with GPU-backed infrastructure, this article is for you.

Docker 101: Containerizing Your First AI Model

Let’s start with Docker. Containers make it easier to package and ship your applications. Here’s a quick example of containerizing a PyTorch-based inference model.

Dockerfile:

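    # Slim Python base image keeps the container small
    FROM python:3.9-slim
    WORKDIR /app
    # Copy requirements first so the dependency layer is cached between builds
    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt
    COPY . .
    # Documents the port published by the run command; inference.py is assumed to listen on it
    EXPOSE 5000
    CMD ["python", "inference.py"]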

Build & Run:

    docker build -t ai-agent .
    docker run -p 5000:5000 ai-agent

You now have a reproducible and portable AI model running in a container!
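The Dockerfile runs inference.py and the run command publishes port 5000, but the script itself is not shown. The following is a minimal sketch of what it might look like, using only the Python standard library. The `predict` placeholder, the `{"features": [...]}` request shape, and the function names are all assumptions for illustration; a real agent would load and call a PyTorch model here.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Placeholder for a real model call (e.g. a PyTorch forward pass)."""
    # Assumption: average the inputs so the sketch runs without PyTorch installed.
    return sum(features) / len(features) if features else 0.0


def handle_payload(raw_body):
    """Parse a JSON request body and return (status_code, response_dict)."""
    try:
        payload = json.loads(raw_body)
        features = [float(x) for x in payload["features"]]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": 'expected JSON like {"features": [1.0, 2.0]}'}
    return 200, {"prediction": predict(features)}


class InferenceHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly the number of bytes the client declared.
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_payload(self.rfile.read(length))
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=5000):
    # Bind to 0.0.0.0 so the port published by `docker run -p 5000:5000` is reachable.
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()

# In a real inference.py, end the file with a call to serve().
```

Keeping the request parsing in `handle_payload`, separate from the HTTP handler, makes the logic easy to check without starting a server.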

Terraform 101: Your Infrastructure as Code

Now let’s set up the infrastructure to run this container in the cloud using Terraform.

Basic Terraform Script:

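    provider "aws" {
      region = "us-east-1"
    }

    resource "aws_instance" "agent" {
      ami           = "ami-0abcdef1234567890" # Placeholder: choose a GPU-compatible AMI with Docker installed
      instance_type = "g4dn.xlarge"
      key_name      = "my-key" # Assumption: an existing EC2 key pair

      # remote-exec needs SSH access; the user and key path below are assumptions
      connection {
        type        = "ssh"
        host        = self.public_ip
        user        = "ubuntu"
        private_key = file(pathexpand("~/.ssh/my-key.pem"))
      }

      # The image must be pullable from a registry; a locally built ai-agent is not on the instance
      provisioner "remote-exec" {
        inline = [
          "sudo docker run -d -p 5000:5000 ai-agent"
        ]
      }
    }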

Deploy:

    terraform init
    terraform apply

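Boom, your container is live on an EC2 instance! This assumes the AMI ships with Docker and the ai-agent image has been pushed to a registry the instance can pull from.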
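After an apply finishes, the instance can still take a while to boot and start the container, so it may help to poll the agent before sending traffic. The sketch below is one way to do that with the standard library. The function names, the retry defaults, and the idea of treating any HTTP response as "live" are assumptions, not part of the original setup.

```python
import time
import urllib.error
import urllib.request


def http_probe(url, timeout=3.0):
    """Return True if the URL answers with any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered (e.g. 404 or 501), so the container is up.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, or timeout: not live yet.
        return False


def wait_until_live(url, attempts=30, delay=10.0, probe=http_probe, sleep=time.sleep):
    """Poll `url` until `probe` succeeds; return the attempt number, or None on timeout."""
    for attempt in range(1, attempts + 1):
        if probe(url):
            return attempt
        if attempt < attempts:
            sleep(delay)
    return None
```

A call such as `wait_until_live("http://<instance-public-ip>:5000")` would then block until the agent answers or the attempts run out. The `probe` and `sleep` parameters exist so the loop can be exercised without a network.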

Integrating Docker + Terraform: Scalable AI Agent Setup

Now, we combine both tools to:

- Auto-provision compute with Terraform

- Pull and run your Docker images automatically

- Scale agents dynamically by changing Terraform variables

Example:

    variable "agent_count" {
      type    = number
      default = 3
    }

    resource "aws_instance" "agent" {
      # count creates one instance per agent
      count         = var.agent_count
      ami           = "ami-0abc123456"
      instance_type = "g4dn.xlarge"
      ...
    }

This lets you spin up multiple Dockerized AI agents across your cloud fleet by changing a single variable, which suits inference APIs or retrieval-augmented generation (RAG) systems.
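Once several agents are running, a client needs their addresses. One possible approach, sketched below, is to read them from the JSON printed by `terraform output -json` and cycle through them. It assumes a hypothetical output named `agent_public_ips` that lists the instances' public IPs (the article's configuration does not define one), and the sample addresses come from a reserved documentation range.

```python
import itertools
import json


def agent_urls(terraform_output_json, output_name="agent_public_ips", port=5000):
    """Build base URLs from the JSON printed by `terraform output -json`."""
    outputs = json.loads(terraform_output_json)
    # Each output is an object whose "value" key holds the actual data.
    ips = outputs[output_name]["value"]
    return [f"http://{ip}:{port}" for ip in ips]


def round_robin(urls):
    """Return an endless iterator that cycles through the agent URLs in order."""
    if not urls:
        raise ValueError("no agent URLs to dispatch to")
    return itertools.cycle(urls)


# Sample data shaped like real `terraform output -json` output (illustrative values).
sample = json.dumps({
    "agent_public_ips": {
        "sensitive": False,
        "type": ["tuple", ["string", "string", "string"]],
        "value": ["203.0.113.10", "203.0.113.11", "203.0.113.12"],
    }
})
urls = agent_urls(sample)
targets = round_robin(urls)
first_four = [next(targets) for _ in range(4)]
print(first_four)
```

With three agents, the fourth request wraps back to the first URL. Plain round-robin ignores load and health, so a production setup would more likely put a load balancer in front of the fleet.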

Advanced Use Case: AI Agents with Multi-GPU, CI/CD & Terraform

Imagine this setup:

- Each agent runs an LLM locally behind an OpenAI-compatible API (e.g., a Mistral model served with Ollama or llama.cpp)

- Terraform provisions GPU instances and networking

- Docker builds include prompt routers and memory systems

- GitHub Actions auto-triggers Terraform for deployments

Benefits:

- Reproducibility across dev, staging, and prod

- Cost savings via spot instances

- Rollback by re-applying an earlier version of the Terraform configuration, with state tracking what must change

This is modern MLOps, containerized.
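For the CI/CD piece of this setup, a pipeline job typically runs Terraform non-interactively. The sketch below shows the kind of command sequence such a job might execute, wrapped in Python so it can be called from any runner. The function names and the `tfplan` file name are assumptions; the `agent_count` variable is the one from the scaling example.

```python
import subprocess


def terraform_commands(agent_count, plan_file="tfplan"):
    """Return the non-interactive Terraform commands a CI job would run, in order."""
    if agent_count < 0:
        raise ValueError("agent_count must be non-negative")
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-out={plan_file}",
         "-var", f"agent_count={agent_count}"],
        # Applying a saved plan file does not prompt for approval.
        ["terraform", "apply", "-input=false", plan_file],
    ]


def deploy(agent_count, workdir=".", run=subprocess.run):
    """Run each command in `workdir`, stopping at the first failure."""
    for command in terraform_commands(agent_count):
        # check=True raises CalledProcessError if Terraform exits non-zero.
        run(command, cwd=workdir, check=True)
```

Saving the plan and applying that exact file keeps the applied changes identical to the ones that were reviewed in the plan step.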

☁️ Hybrid Multi-Cloud AI with Docker + Terraform

You can even expand this setup to support:

- Azure or GCP compute targets

- Multi-region failover

- Local LLM agents in Docker Swarm clusters (home lab, edge)

Pro Tip: Use Terraform Cloud or Atlantis for remote state and team workflows.
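Multi-region failover can also be handled on the client side. As a rough sketch, the function below walks a priority-ordered list of regional endpoints and returns the first one that passes a health check. The region names, hostnames, and the `is_healthy` callback are hypothetical; in practice DNS-based or load-balancer failover is a common alternative.

```python
def pick_endpoint(endpoints, is_healthy):
    """Return (region, url) for the first healthy endpoint, in priority order."""
    for region, url in endpoints:
        if is_healthy(url):
            return region, url
    raise RuntimeError("no healthy endpoint in any region")


# Hypothetical endpoints, listed from most to least preferred.
endpoints = [
    ("us-east-1", "http://agent.us-east-1.example.com:5000"),
    ("eu-west-1", "http://agent.eu-west-1.example.com:5000"),
]

# Stub health check for illustration: pretend the primary region is down.
chosen = pick_endpoint(endpoints, lambda url: "eu-west-1" in url)
print(chosen)
```

The health check is passed in as a function so the same selection logic works with a real HTTP probe or with a stub.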

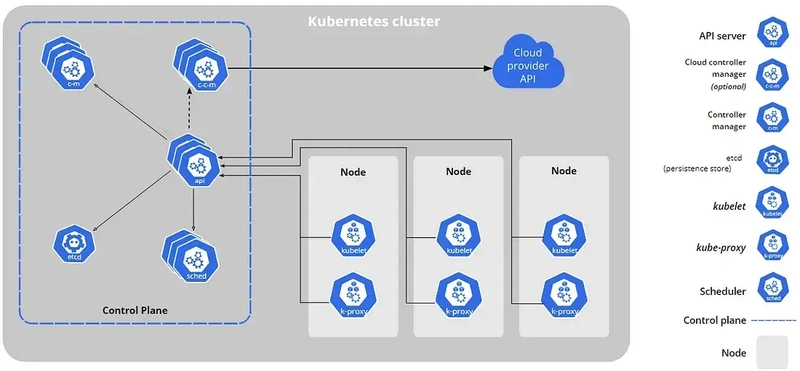

Visual Overview: How Docker and Terraform Work Together to Deploy AI Agents

This diagram maps the full lifecycle: writing infrastructure as code, containerizing models, and deploying everything automatically.

Simulated Real-World Project: Structure, README & CLI

This structure outlines a setup for deploying and testing Docker + Terraform AI agents in hybrid cloud environments. It can serve as a starting framework for more complex AI deployments.
