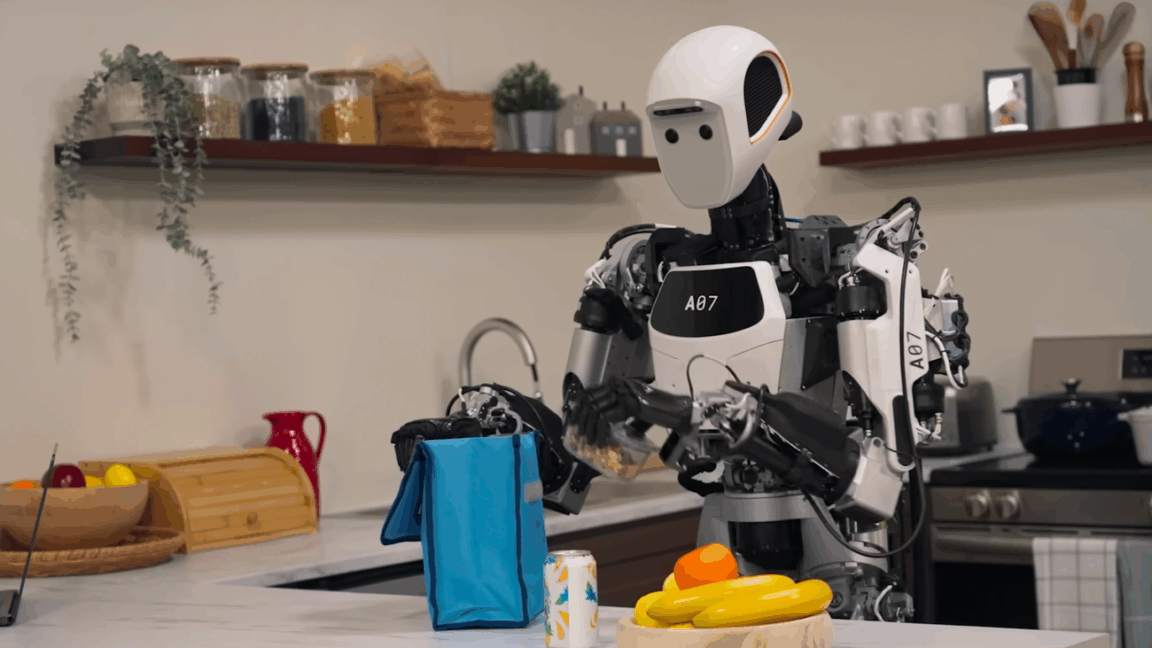

Google releases a new Gemini Robotics On-Device model with an SDK and says the vision-language-action model can adapt to new tasks in 50 to 100 demonstrations (Ryan Whitwam/Ars Technica)

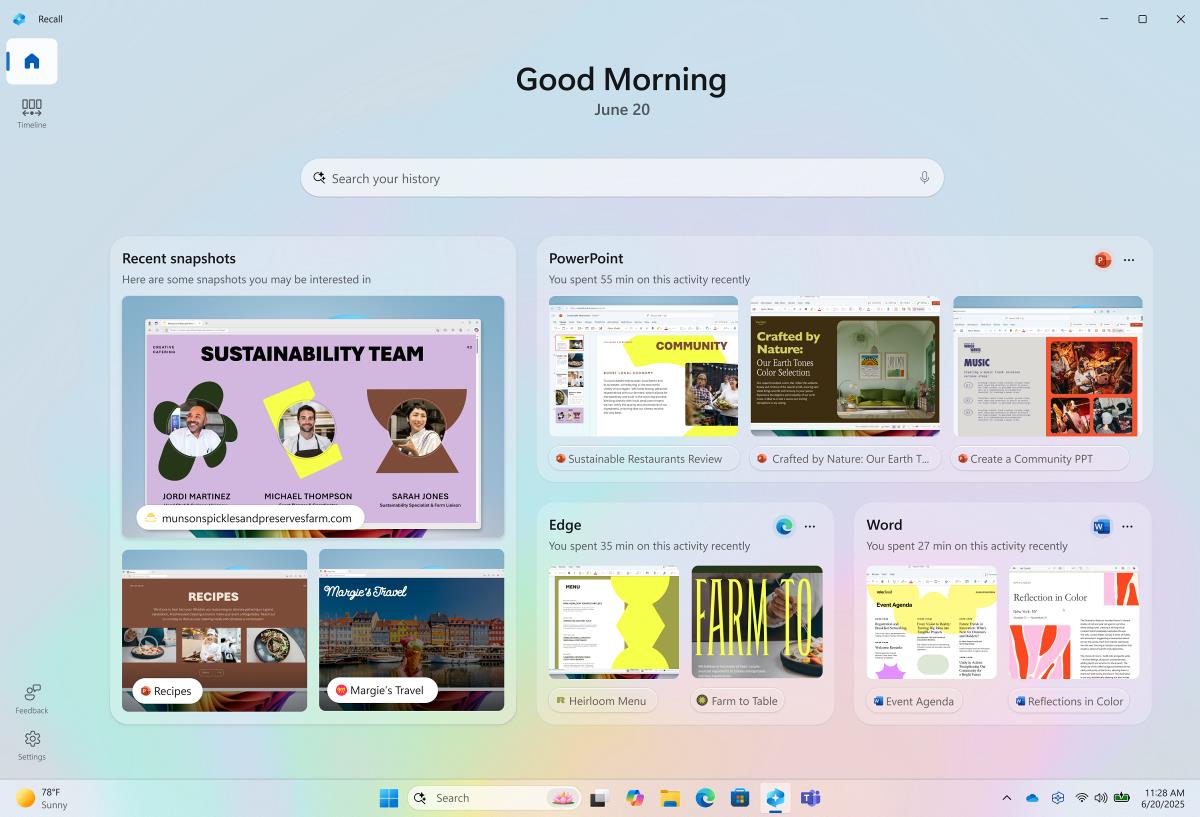

Ryan Whitwam / Ars Technica: We sometimes call chatbots like Gemini and ChatGPT “robots,” but generative AI is also playing a growing role in real, physical robots.
