Future of Dev Work

Meta is building AI agents to replace mid-level software engineers.

Not to support them.

Not to assist them.

To replace them.

Let that settle in your mind for a second. Not because it’s shocking—because it’s not—but because deep down, many of us saw this coming.

This isn’t science fiction. This is product strategy.

And for tech executives making high-stakes decisions, it’s a tempting one.

If someone walks into a boardroom and says:

“We can cut 40% of engineering costs with AI systems that never get tired, never negotiate salary, never take parental leave, and never push back...”

You’d expect hesitation. You’d hope for it. But what you usually get is a raised eyebrow, a spreadsheet, and a green light.

Because from a cold economic standpoint, it makes sense.

But what follows isn’t just a shift in tools. It’s a shift in truth.

A Glimpse into the Darker Timeline

The job market tightens.

Companies downsize and restructure.

Developers, many of whom once felt safe in their mid-level roles, are thrown into a race that has no finish line, only faster lanes.

It starts subtly.

A few tools get introduced.

Code review times drop.

AI pair programming becomes the norm.

Then slowly, decision-makers ask:

Do we really need a full team anymore?

Can a few leads and an AI system handle most of it?

What if the AI can not only code but think?

And here’s where it starts to hit home:

We’re not just automating routine tasks.

We’re outsourcing thinking.

This Isn’t About Code. It’s About Identity

Let’s strip this down.

For years, developers held onto a sense of worth that came from building, solving, improving.

Code wasn’t just work; it was an extension of thought, of creativity, of contribution.

But what happens when a machine writes cleaner, faster, safer code than you?

What happens when AI not only fixes bugs but anticipates them?

What happens when it does this at scale, with no complaints and no burnout?

Suddenly, “being good” isn't enough.

Suddenly, “10 years of experience” doesn’t carry the weight it used to.

Suddenly, your value isn’t about what you can do; it’s about what only you can do.

And that list? It’s shrinking.

Heavy Questions We Can’t Keep Avoiding

We’re at a crossroads, and most people are still walking as if it’s a straight path.

We need to ask:

What does human creativity really mean when AI can now architect, design, test, and deploy?

Will we regulate this growth, or let the free market optimize everything it touches, including our own sense of relevance?

Are we building a future we want to live in, or just reacting to one we weren’t prepared for?

Because this is no longer just about the future of work.

It’s about how we define value.

How we define purpose.

How we define us.

Are We Still in the Room?

This is the turning point.

Not 10 years from now.

Now.

The idea of AI replacing jobs isn’t theory anymore; it’s pilot-tested and roadmap-approved.

And it’s not just about engineers.

Designers, writers, analysts, PMs: we’re all on this path.

We’re all passengers on a train that’s speeding up, and many still haven’t looked out the window.

So here’s the uncomfortable part:

Are we shaping this moment, or just surviving it?

Are we leaning into the change with intention—or being quietly displaced while we upskill into irrelevance?

This isn’t fearmongering.

It’s reality-checking.

Because we don’t need more hype or doomscrolling.

We need honest conversations. Real strategy. And a willingness to ask the questions that make us squirm.

Not because we want to be right.

Because we still want to matter.

What’s your take?

Are we co-creating the next era of tech—or simply watching it unfold from the sidelines, hoping we’ll still have a seat?

The Countermove

But here’s the twist they didn’t see coming.

While tech giants race to centralize intelligence, many of us are quietly decentralizing it.

We’re building our own local AI agents.

Not to chase profit.

Not to serve ads.

But to solve real problems.

We train them to debug the buggy enterprise software we're forced to use.

We teach them to automate the boring parts of our workday.

We shape them around our needs—not corporate roadmaps.

And maybe, just maybe, we’re inching toward a future where we don’t rely on corporations for every tool, every fix, every spark of innovation.

Because when open source communities start baking in intelligent agents—tuned to real human needs, shared across borders, and built with transparency—we start taking power back.

Maybe we won’t care about the next update from a big tech company.

Maybe we won’t even notice when their platforms break.

Because we’ll already have something better: collaboration, ownership, and tools that evolve with us—not at our expense.

We’ve always known that the best ideas don’t come from the top.

They come from people on the ground, solving what matters.

So while some optimize for control, others will build for freedom.

And the question won’t be “Who owns the future?”

It’ll be “Who’s still building one worth living in?”

Long live collaboration.

![[The AI Show Episode 149]: Google I/O, Claude 4, White Collar Jobs Automated in 5 Years, Jony Ive Joins OpenAI, and AI’s Impact on the Environment](https://www.marketingaiinstitute.com/hubfs/ep%20149%20cover.png)

_Illia_Uriadnikov_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![PSA: Spotify facing widespread outage [U: Fixed]](https://i0.wp.com/9to5mac.com/wp-content/uploads/sites/6/2023/06/spotify-logo-2.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

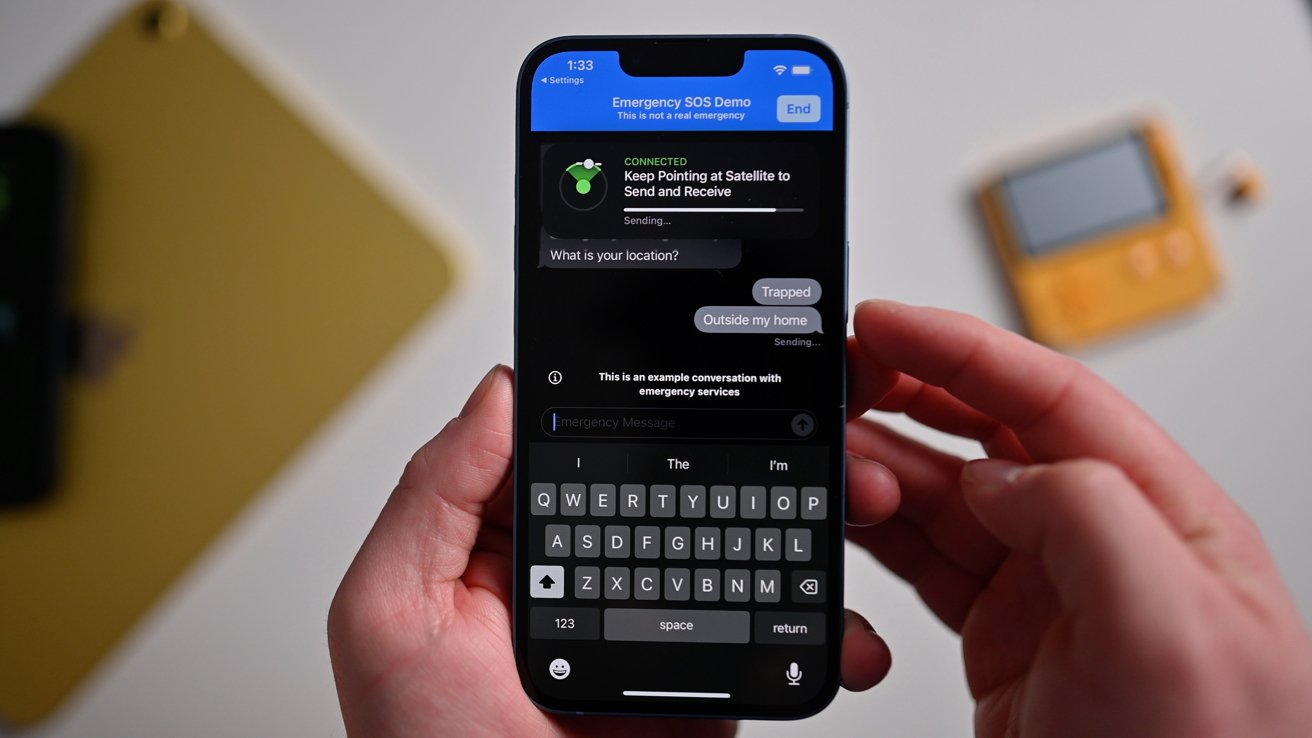

![Apple Turned Down Musk's $5B Starlink Deal — Now the Consequences Are Mounting [Report]](https://www.iclarified.com/images/news/97432/97432/97432-640.jpg)

![WhatsApp Finally Launches iPad App [Download]](https://www.iclarified.com/images/news/97435/97435/97435-640.jpg)

![T-Mobile, Verizon and AT&T under fire for lack of transparency on surveillance [UPDATED]](https://m-cdn.phonearena.com/images/article/170786-two/T-Mobile-Verizon-and-AT-T-under-fire-for-lack-of-transparency-on-surveillance-UPDATED.jpg?#)