From Zero to Local LLM: A Developer's Guide to Docker Model Runner

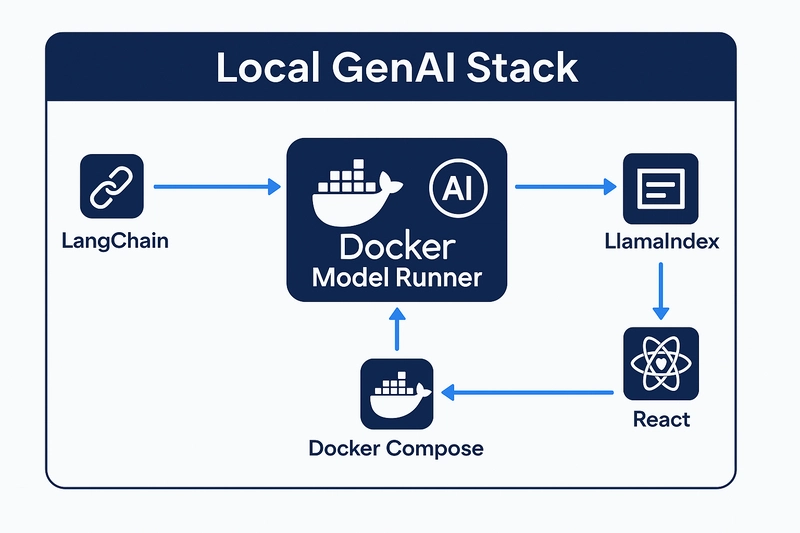

Build your own local-first GenAI stack with Docker, LangChain, and no GPU.

Why Local LLMs Matter

The rise of large language models (LLMs) has revolutionized how we build applications. But deploying them locally? That’s still a pain for most developers. Between model formats, dependency hell, hardware constraints, and weird CLI tools, running even a small LLM on your laptop can feel like navigating a minefield.

Docker Model Runner changes that. It brings the power of container-native development to local AI workflows so you can focus on building, not battling toolchains.

The Developer Pain Points:

- Too many formats: GGUF, PyTorch, ONNX, TensorFlow, and more

- Dependency issues and messy build scripts

- Need for GPUs or arcane CUDA configs

- No consistent local APIs for experimentation

Docker Model Runner solves these by:

- Standardizing model access via Docker images

- Running fast with llama.cpp under the hood

- Providing OpenAI-compatible APIs out of the box

- Integrating directly with Docker Desktop
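To make the "OpenAI-compatible API" point concrete, here is a minimal sketch of a chat request using only the Python standard library. The base URL, port, and model name are assumptions for illustration (a host-side TCP endpoint and a small model that has already been pulled); check your own Docker Desktop settings and swap in your values. `build_chat_request` and `ask` are hypothetical helper names, not part of any Docker tooling.

```python
import json
import urllib.request

# Assumed values for illustration only; adjust to match your local setup.
BASE_URL = "http://localhost:12434/engines/v1"  # assumption: host TCP access is enabled
MODEL = "ai/smollm2"  # assumption: a model you have already pulled locally


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL):
    """Build a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a model running locally, calling `ask("Say hello in one sentence.")` should return the reply as a string. Because the request follows the OpenAI chat-completions shape, any client that lets you override its base URL should be able to talk to the same endpoint.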
