Developers, Stop Guessing: Real Users Are Telling You What Works

As developers, we think we know how users interact with our apps. We make educated guesses, run emulator tests, and roll out A/B experiments, hoping something sticks.

But here’s the truth: real users behave differently from our test assumptions. And unless we’re testing on actual devices in real-world conditions, we’re missing critical insights.

Emulators ≠ Reality

Emulators are fast and accessible, but they’re also idealized environments. They don’t capture:

- Real touch interactions

- Network fluctuations

- Device-specific rendering quirks

- Battery/power impacts on performance

In production, these things matter a lot. That floating button that worked perfectly on your dev machine might be half-clipped on a mid-range Android device.

A/B Testing Is Only As Good As the Data Behind It

A/B testing works, but only if it’s based on how users actually experience your app. If you’re running tests without accounting for real device behavior, your insights are limited. You might conclude that layout B outperforms layout A when, in reality, layout B simply loaded faster on most users’ devices.
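One way to check whether load time is driving an apparent win is to compare conversion within load-time buckets as well as overall. The sketch below is a minimal illustration under stated assumptions: the session tuples, the 1500 ms threshold, and the numbers are all hypothetical, not data from any real test or from NativeBridge.

```python
from collections import defaultdict

# Hypothetical session records: (variant, load_time_ms, converted).
# Counts are invented so that variant B has mostly fast sessions.
sessions = (
    [("A", 2500, True)] * 2 + [("A", 2500, False)] * 4    # A: six slow sessions
    + [("A", 900, True)] * 2 + [("A", 900, False)] * 1    # A: three fast sessions
    + [("B", 2500, True)] * 1 + [("B", 2500, False)] * 2  # B: three slow sessions
    + [("B", 900, True)] * 4 + [("B", 900, False)] * 2    # B: six fast sessions
)


def overall_conversion(records):
    """Return {variant: conversion_rate}, ignoring load time."""
    counts = defaultdict(lambda: [0, 0])  # variant -> [conversions, sessions]
    for variant, _load_ms, converted in records:
        counts[variant][0] += int(converted)
        counts[variant][1] += 1
    return {v: conv / total for v, (conv, total) in counts.items()}


def conversion_by_bucket(records, slow_threshold_ms=1500):
    """Return {(variant, bucket): conversion_rate}, bucketing by load time."""
    counts = defaultdict(lambda: [0, 0])
    for variant, load_ms, converted in records:
        bucket = "slow" if load_ms >= slow_threshold_ms else "fast"
        counts[(variant, bucket)][0] += int(converted)
        counts[(variant, bucket)][1] += 1
    return {key: conv / total for key, (conv, total) in counts.items()}


overall = overall_conversion(sessions)
by_bucket = conversion_by_bucket(sessions)

for variant in sorted(overall):
    print(f"{variant} overall: {overall[variant]:.2f}")
for key in sorted(by_bucket):
    print(f"{key[0]} {key[1]}: {by_bucket[key]:.2f}")
```

In this invented data set, one variant looks better overall, yet both variants convert at the same rate once sessions are grouped by load time. That pattern would suggest the difference comes from load speed rather than from the layout itself.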

Why Real Device Testing Wins

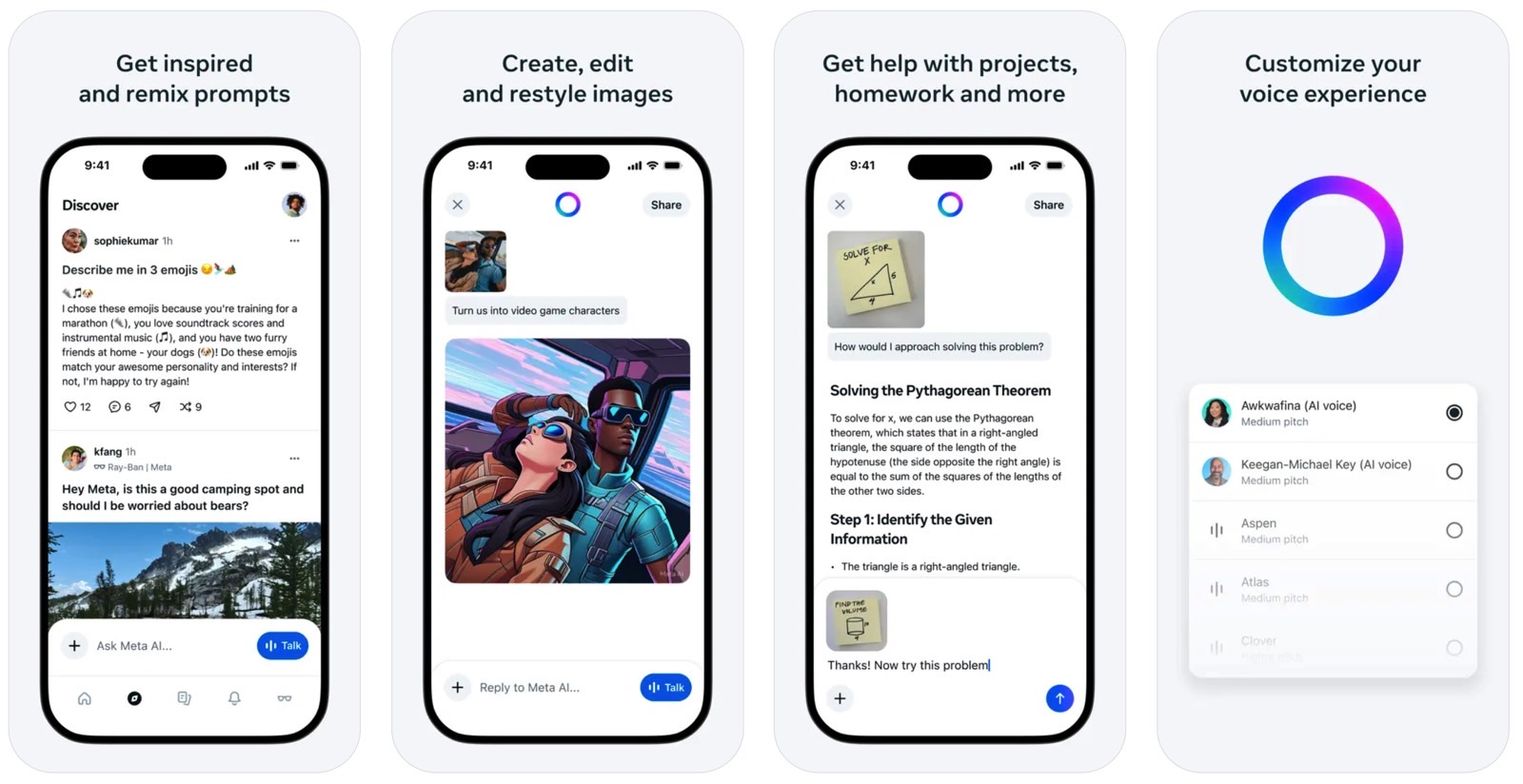

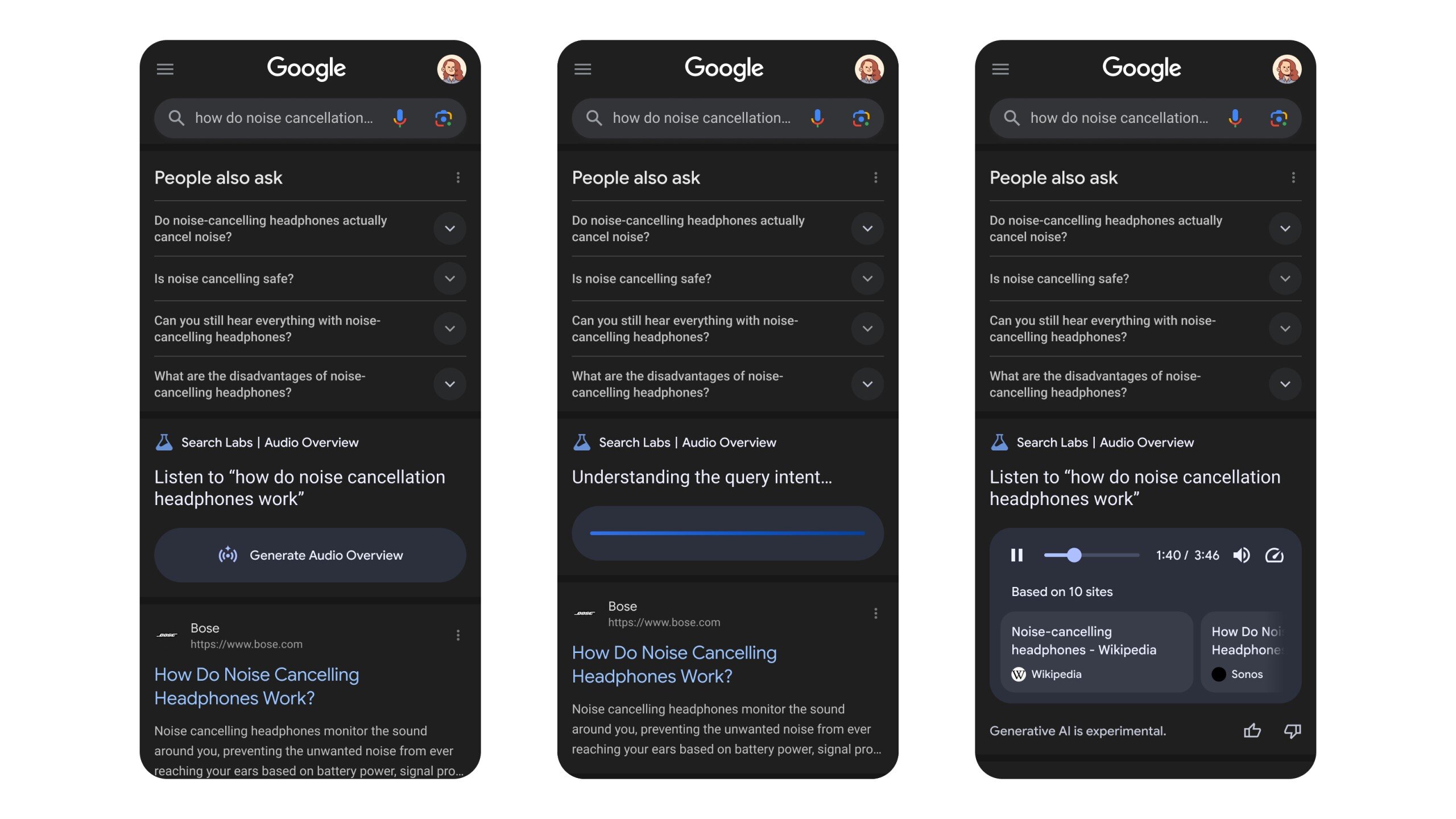

Here’s where platforms like NativeBridge come in. They help developers test UI changes directly on real devices as well as emulators — and collect deep interaction metrics like:

- Tap heatmaps

- Scroll behavior

- UI responsiveness on specific models

- Performance differences across OS versions

These are things you can’t simulate reliably.
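Once per-device measurements are collected, even a small script can surface model-specific responsiveness problems. The following is a rough sketch, assuming frame-time samples have already been exported per device model; the model names, sample values, and the 60 Hz frame budget are assumptions for illustration, and nothing here reflects NativeBridge's actual export format.

```python
from statistics import median

# Assumes a 60 Hz display; adjust for 90 Hz or 120 Hz panels.
FRAME_BUDGET_MS = 1000 / 60

# Hypothetical frame-time samples in milliseconds, keyed by device model.
frame_times = {
    "flagship-phone": [8.1, 9.4, 10.2, 12.0, 9.9, 11.3],
    "midrange-phone": [14.8, 17.2, 22.5, 16.1, 19.7, 15.3],
}


def summarize(samples_by_model, budget_ms=FRAME_BUDGET_MS):
    """Return {model: (median_ms, janky_share)}; janky means over the frame budget."""
    summary = {}
    for model, samples in samples_by_model.items():
        janky = sum(1 for t in samples if t > budget_ms)
        summary[model] = (median(samples), janky / len(samples))
    return summary


report = summarize(frame_times)
for model, (med, janky_share) in sorted(report.items()):
    print(f"{model}: median {med:.1f} ms, {janky_share:.0%} of frames over budget")
```

Reporting the share of over-budget frames alongside the median is a deliberate choice here: a device can have an acceptable median and still feel sluggish if a sizable fraction of frames miss the budget.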

What This Looks Like in Practice

Imagine you're testing a new onboarding screen. With NativeBridge:

- You deploy both versions (A/B) across a fleet of real devices.

- You track how users actually interact — where they drop off, where they get stuck, how long animations take to render.

- You optimize not just for clicks, but for fluid, device-native experiences.

The feedback loop becomes faster, smarter, and grounded in reality.
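To make "where they drop off" concrete, the steps above can be reduced to a per-variant funnel. This is only a sketch under assumptions: the step names, the session data, and the idea that each session is summarized by the furthest step it reached are all hypothetical.

```python
from collections import Counter

# Hypothetical onboarding steps, in order.
STEPS = ["welcome", "permissions", "profile", "done"]

# Hypothetical data: the furthest step each session reached, per variant.
furthest_step = {
    "A": ["welcome", "permissions", "permissions", "profile", "done", "done"],
    "B": ["welcome", "profile", "done", "done", "done", "done"],
}


def funnel(reached, steps=STEPS):
    """Return [(step, sessions_reaching_step, drop_off_rate_at_step)]."""
    last = Counter(reached)
    remaining = len(reached)
    rows = []
    for step in steps:
        # Sessions that finish the final step completed onboarding; they did not drop off.
        stopped_here = last[step] if step != steps[-1] else 0
        rate = stopped_here / remaining if remaining else 0.0
        rows.append((step, remaining, rate))
        remaining -= stopped_here
    return rows


for variant in sorted(furthest_step):
    print(f"Variant {variant}")
    for step, reached_count, drop_rate in funnel(furthest_step[variant]):
        print(f"  {step:<12} reached by {reached_count}, drop-off {drop_rate:.0%}")
```

Comparing the drop-off rate at each step, rather than only the final completion rate, shows which screen in a variant is losing users.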

TL;DR

Stop guessing how your users behave; they’re already telling you. You just need the right tools to listen.

These tools make it possible to run scalable, UI-first A/B tests on actual devices. And once you do that, your app gets better — not just in theory, but where it counts: on your users’ screens.
