Can AI Be Smart and Private? A Practical Look at Federated Learning


AI needs data. Privacy needs protection. Can we have both?

Artificial Intelligence is powerful—but it comes with a catch. Every breakthrough in prediction, personalization, or automation is powered by massive amounts of data. But that data often includes personal information: health records, financial transactions, or user behavior.

This raises a question we can no longer ignore: Can we build intelligent systems without compromising privacy?

The Problem with Centralized AI

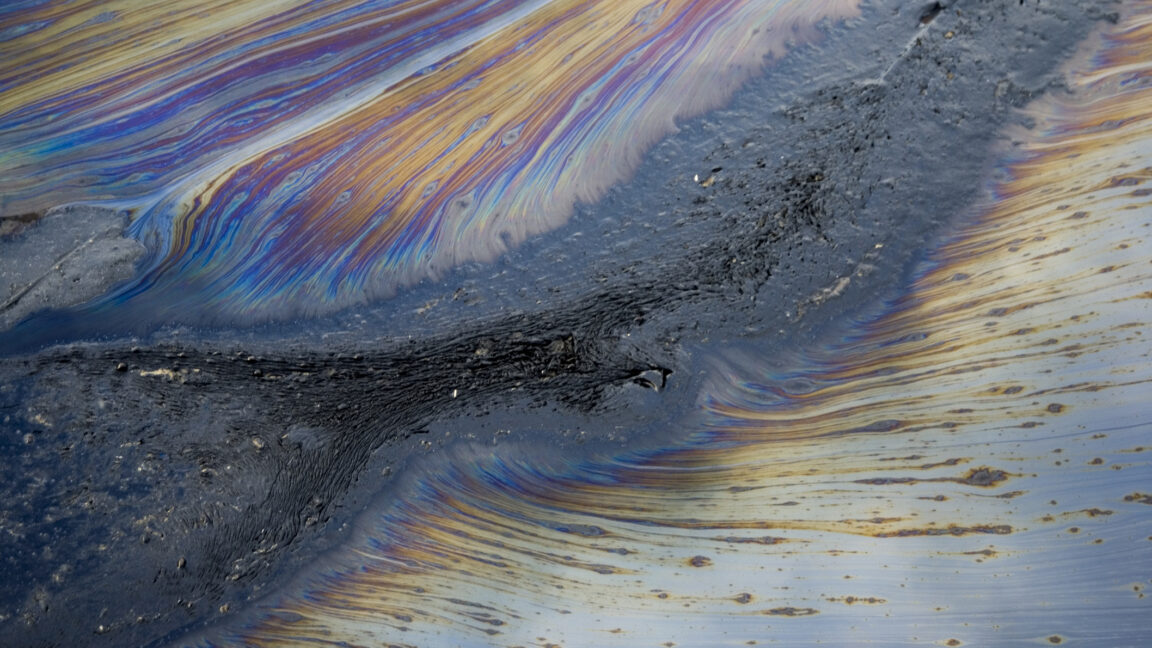

Most machine learning workflows follow a centralized model: collect data from users, store it on a central server, and train a model on the aggregated data.

This approach, while effective for learning, creates vulnerabilities:

Security breaches from a single point of failure

Privacy risks, especially in finance and healthcare

Risk of non-compliance with laws like HIPAA, GDPR, and CCPA

With rising user awareness and stricter data laws, this method is increasingly unsustainable.

Enter Federated Learning

Federated Learning (FL) changes the paradigm: the data stays where it is—on devices or local servers—and the model comes to it.

Each node (a smartphone, hospital server, bank, etc.) trains a local copy of the model on its own private data. Only the resulting model updates (such as gradients or weights) are sent to a central server. The server aggregates these updates, typically by averaging them, and sends an updated global model back to the nodes. This cycle repeats over many rounds.

This way, sensitive raw data never leaves the device or local server where it was collected.
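To make the train-locally, aggregate-centrally loop concrete, here is a minimal sketch in plain Python. It assumes a toy linear model (y = w * x + b), synthetic data, and three simulated clients running in one process. The function names (`local_train`, `federated_average`, `make_client_data`, `run_federated_training`) are illustrative and do not come from any framework. The aggregation step is a sample-count-weighted average of client weights, in the style of federated averaging (FedAvg); real deployments add networking, client sampling, and further privacy protections that are left out here.

```python
import random


def local_train(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of gradient descent on one client's private data.

    weights is (w, b) for the model y = w * x + b; data is a list of (x, y).
    Only the updated weights and the sample count are returned, never the data.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(client_results):
    """Average client weights, weighting each client by its sample count."""
    total = sum(n for _, n in client_results)
    w = sum(weights[0] * n for weights, n in client_results) / total
    b = sum(weights[1] * n for weights, n in client_results) / total
    return (w, b)


def make_client_data(rng, n):
    """Synthetic 'private' data drawn from y = 3x + 1 plus small noise."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1, 1)
        data.append((x, 3 * x + 1 + rng.gauss(0, 0.05)))
    return data


def run_federated_training(rounds=200, seed=0):
    """Simulate the server loop: broadcast, train locally, aggregate."""
    rng = random.Random(seed)
    # Each client holds its own dataset; the server never reads these lists.
    clients = [make_client_data(rng, n) for n in (20, 50, 30)]
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        results = [local_train(global_weights, data) for data in clients]
        global_weights = federated_average(results)
    return global_weights


if __name__ == "__main__":
    w, b = run_federated_training()
    print(f"learned w={w:.2f}, b={b:.2f}")
```

Notice that `federated_average` only ever receives weights and sample counts. In this simulation the learned parameters should land close to the w = 3 and b = 1 used to generate the data, even though no client's raw `(x, y)` pairs are passed to the aggregation step.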

Why Developers Should Care

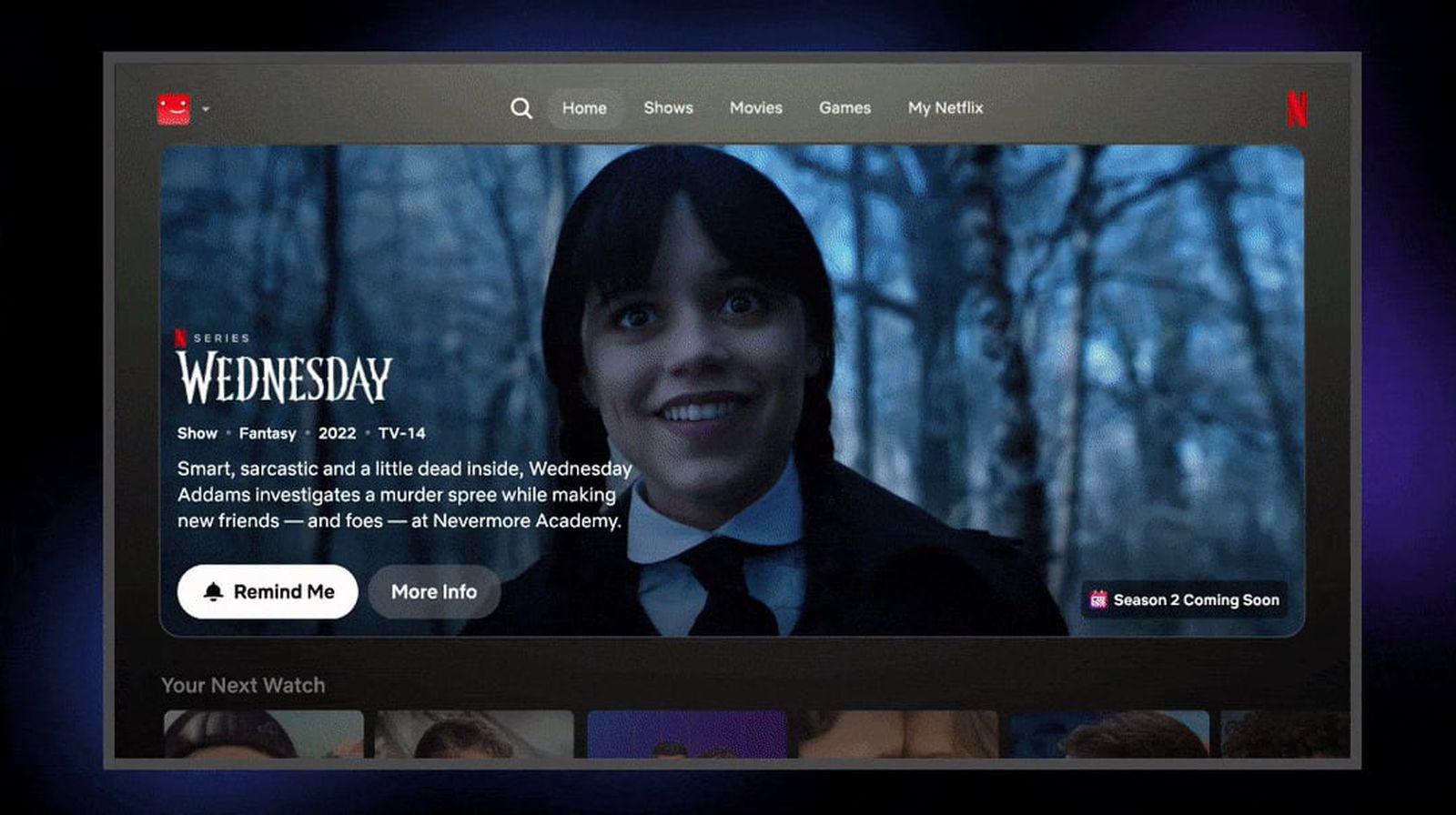

Federated learning isn’t just theoretical—it’s being used today in:

Healthcare: for disease prediction without sharing patient data

Finance: for fraud detection without pooling transactions

Smartphones: Gboard, for example, improves keyboard predictions without uploading what users type

It’s a natural fit for edge computing, privacy-first apps, and decentralized systems.

Tools and Frameworks to Explore

If you're ready to explore production-level FL, check out:

TensorFlow Federated – An open-source framework for machine learning on decentralized data

Flower – A flexible framework for federated learning

OpenMined PySyft – For secure multi-party computation and FL

PySEAL – Homomorphic encryption in Python

Final Thoughts

Federated learning won’t replace all centralized models—but it’s a powerful tool in the privacy-first AI toolbox. It helps developers comply with data laws, preserve user trust, and deploy smarter models across decentralized systems.

The real question is no longer “Can we do it?”, but “Why aren’t we already?”

What Do You Think?

Have you tried building federated or privacy-aware models?

What tools or libraries have you used?

Would you like to see a tutorial with real-world datasets?

Drop a comment or tag me—let’s build privacy-preserving AI together!
