Build robust and observable MCP servers to supercharge your LLMs with Go

The Model Context Protocol (MCP) opens up new capabilities for LLMs by letting them trigger backend code. Go is a solid choice for building MCP servers, thanks to its resilience and scalability.

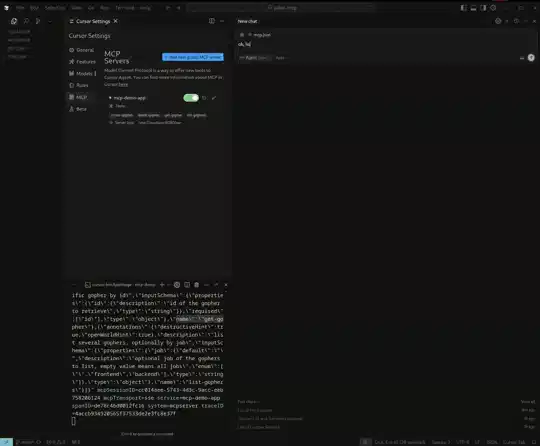

Yokai has released a new MCP server module: you can easily create MCP tools, resources and prompts, while the framework handles exposing them over SSE or stdio, with built-in observability (automatic logs, traces and metrics).
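To make the notion of a "tool" concrete at the protocol level: MCP messages are JSON-RPC 2.0, and a client invokes a tool with the `tools/call` method. The sketch below uses only Go's standard library and is not Yokai's API. The `echo` tool, the type names and the `handle` function are hypothetical, chosen for illustration. It shows roughly the kind of dispatching a framework takes care of, without any transport, logging, tracing or metrics.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// request is a JSON-RPC 2.0 request, the envelope MCP uses for its messages.
type request struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      json.RawMessage `json:"id"`
	Method  string          `json:"method"`
	Params  json.RawMessage `json:"params"`
}

// toolCallParams is the params object of a "tools/call" request.
type toolCallParams struct {
	Name      string          `json:"name"`
	Arguments json.RawMessage `json:"arguments"`
}

// content is one text item in a tool result.
type content struct {
	Type string `json:"type"`
	Text string `json:"text"`
}

// toolResult is the result object of a "tools/call" response.
type toolResult struct {
	Content []content `json:"content"`
	IsError bool      `json:"isError,omitempty"`
}

type rpcError struct {
	Code    int    `json:"code"`
	Message string `json:"message"`
}

type response struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      json.RawMessage `json:"id"`
	Result  *toolResult     `json:"result,omitempty"`
	Error   *rpcError       `json:"error,omitempty"`
}

// toolFunc is a hypothetical handler signature: raw JSON arguments in, text out.
type toolFunc func(args json.RawMessage) (string, error)

// tools is a hypothetical registry holding a single illustrative "echo" tool.
var tools = map[string]toolFunc{
	"echo": func(args json.RawMessage) (string, error) {
		var in struct {
			Text string `json:"text"`
		}
		if err := json.Unmarshal(args, &in); err != nil {
			return "", err
		}
		return in.Text, nil
	},
}

// handle decodes one request and dispatches "tools/call" to the registry.
func handle(raw []byte) response {
	resp := response{JSONRPC: "2.0"}
	var req request
	if err := json.Unmarshal(raw, &req); err != nil {
		resp.Error = &rpcError{Code: -32700, Message: "parse error"}
		return resp
	}
	resp.ID = req.ID
	if req.Method != "tools/call" {
		resp.Error = &rpcError{Code: -32601, Message: "method not found: " + req.Method}
		return resp
	}
	var p toolCallParams
	if err := json.Unmarshal(req.Params, &p); err != nil {
		resp.Error = &rpcError{Code: -32602, Message: "invalid params"}
		return resp
	}
	tool, found := tools[p.Name]
	if !found {
		resp.Error = &rpcError{Code: -32602, Message: "unknown tool: " + p.Name}
		return resp
	}
	text, err := tool(p.Arguments)
	if err != nil {
		// Tool execution failures are reported inside the result, not as a protocol error.
		resp.Result = &toolResult{Content: []content{{Type: "text", Text: err.Error()}}, IsError: true}
		return resp
	}
	resp.Result = &toolResult{Content: []content{{Type: "text", Text: text}}}
	return resp
}

func main() {
	raw := []byte(`{"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"echo","arguments":{"text":"hi"}}}`)
	out, err := json.Marshal(handle(raw))
	if err != nil {
		fmt.Println("marshal error:", err)
		return
	}
	fmt.Println(string(out))
}
```

A real server also has to handle the rest of the protocol (initialization, `tools/list`, resources, prompts) and the SSE or stdio transport, which is the part the Yokai module is described as handling for you.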

Yokai comes with an MCP server demo application so you can see it in action. You can try it with your favorite MCP-compatible AI application (Cursor, Claude Desktop, ...) or simply via the provided MCP inspector.

To learn more, see the Yokai MCP server module documentation, where you'll find getting-started instructions, technical documentation and the demo.
