Advancements and Challenges in Artificial Intelligence: A Synthesis of Recent Research

This article is part of AI Frontiers, a series exploring groundbreaking computer science and artificial intelligence research from arXiv. We summarize key papers, demystify complex concepts in machine learning and computational theory, and highlight innovations shaping our technological future.

Artificial Intelligence (AI), a field dedicated to creating intelligent machines, has grown rapidly in recent years. It is the science and engineering of building intelligent agents, particularly intelligent computer programs. AI is interdisciplinary, drawing on computer science, mathematics, psychology, neuroscience, and philosophy. Research in AI aims to develop agents capable of perceiving their environment, reasoning about it, learning from experience, and acting autonomously to achieve defined objectives. The significance of AI lies in its transformative potential across numerous sectors, including healthcare, education, transportation, and entertainment. AI systems are already used for disease diagnosis, drug discovery, personalized learning, autonomous vehicle development, and enhanced entertainment experiences. As AI continues to advance, its impact on society is poised to expand considerably.

This synthesis examines a collection of AI research papers from May 23rd, 2025, focusing on advancements, challenges, and emerging trends within the field. The analysis covers key themes, methodologies, and findings, providing insights into the current state and future directions of AI research. Several prominent themes emerge from the analyzed papers, each representing a critical area of focus within the AI community.

One key theme is enhancing the reliability and robustness of AI systems. As AI is increasingly deployed in real-world applications, especially in safety-critical domains, reliable and consistent performance becomes paramount, even when systems face unexpected inputs or adversarial attacks. Yoshihara et al. (2025) explore this theme in their paper on enhancing the resilience of Long Short-Term Memory (LSTM) networks. Their research uses control theory to develop more robust LSTMs, demonstrating a promising approach to improving the reliability of recurrent neural networks.

Another significant theme revolves around developing more sophisticated reasoning capabilities in AI agents. Many researchers are investigating new methods to equip AI agents with the ability to reason about complex problems, plan for the future, and make informed decisions. Gandarela et al. (2025) present research on controlled agentic planning and reasoning for mechanism synthesis, showcasing an approach to equip agents with more advanced planning and reasoning skills. This is vital for enabling AI to tackle complex, real-world problems that require strategic thinking.

The exploration of multimodal learning represents a third prominent theme. Multimodal learning involves creating AI systems that can understand and integrate information from multiple modalities, such as images, text, and audio. Yao et al. (2025) contribute to this area with their MMMG paper, which introduces a novel evaluation suite for multitask multimodal generation. Their work provides a valuable tool for assessing and improving the performance of multimodal AI systems.

A fourth important theme is the focus on explainability and transparency in AI systems. As AI becomes more integrated into daily life, ensuring that its decision-making processes are transparent and understandable to humans becomes crucial. This includes developing methods for explaining the reasoning behind AI decisions and for identifying and mitigating potential biases. Suárez Ferreira et al. (2025) address this concern in their paper on transparency and proportionality in post-processing algorithmic bias correction. This research explores methods to reduce bias in AI systems while maintaining transparency and fairness.

Finally, the theme of bottom-up agent design is gaining traction. This approach emphasizes creating agents that learn skills through experience and interaction with their environment, rather than relying on pre-defined workflows. Du et al. (2025) highlight this trend in their paper on rethinking agent design, moving from top-down workflows to bottom-up skill evolution. This paradigm shift has the potential to create more adaptable and robust AI agents.

Several methodological approaches are commonly employed in the research papers under review. These methods play a vital role in advancing AI research and validating new approaches.

Benchmarking is a prevalent methodology that involves creating standardized datasets and evaluation metrics to compare the performance of different AI models. Benchmarking provides a clear and objective way to assess model capabilities. However, benchmarks may not always accurately reflect real-world scenarios or capture the full complexity of a task. Luo et al. (2025) utilize benchmarking in their GeoGramBench paper, creating a new benchmark to evaluate Large Language Models' geometric reasoning abilities.

Fine-tuning is another frequently used methodology. Fine-tuning involves taking a pre-trained Large Language Model and further training it on a specific dataset to improve its performance on a particular task. Fine-tuning is a cost-effective way to adapt Large Language Models to new domains and improve their accuracy. However, it can also lead to overfitting, where the model performs well on the training data but poorly on unseen data. Peng et al. (2025) employ fine-tuning in their work on scaling up biomedical vision-language models.
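The overfitting risk described above is commonly managed by watching a held-out validation loss and keeping the checkpoint from before it starts to rise. The following is a minimal sketch of that early-stopping rule, not the procedure used by Peng et al.; the function name, the `patience` parameter, and the loss values are all illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch whose checkpoint should be kept.

    Training is treated as finished once the validation loss has failed
    to improve for `patience` consecutive epochs.
    """
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch


# Made-up numbers for illustration: the training loss keeps falling while
# the validation loss turns upward, the usual signature of overfitting.
train_losses = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08]
val_losses = [0.95, 0.70, 0.62, 0.66, 0.73, 0.81]

keep = early_stopping_epoch(val_losses, patience=2)
print(f"keep checkpoint from epoch {keep}")
```

The growing gap between `train_losses` and `val_losses` is what the rule reacts to; a larger `patience` tolerates noisier validation curves at the cost of extra training.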

The use of multi-agent systems is also common. In this approach, multiple agents interact with each other to solve a problem. A strength of multi-agent systems is their ability to decompose complex problems into smaller, more manageable subproblems. However, a limitation is the need to design effective communication protocols and coordination mechanisms between agents. Bao et al. (2025) use multi-agent systems in their project duplication detection framework.

Retrieval Augmented Generation (RAG) is another popular methodology. This combines the strengths of pre-trained Large Language Models with the ability to retrieve relevant information from a knowledge base. A key strength of RAG is its ability to access and incorporate external knowledge. However, its performance depends heavily on the quality and relevance of the retrieved information. Alawadhi et al. (2025) explore RAG in their work on optimizing retrieval-augmented generation for electrical engineering.
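The retrieve-then-generate pattern can be sketched in a few lines. This is a simplified illustration under stated assumptions: bag-of-words cosine similarity stands in for a real embedding-based retriever, the three-sentence knowledge base is invented for the example, and the final call to a language model is omitted. It is not the pipeline studied by Alawadhi et al.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return up to top_k documents with non-zero similarity to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine_similarity(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Assemble the prompt that would be sent to a language model."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base, invented for this example.
knowledge_base = [
    "A circuit breaker interrupts current flow when a fault is detected.",
    "A transformer changes voltage levels between circuits.",
    "A relay is an electrically operated switch.",
]

query = "What does a circuit breaker do when a fault occurs?"
passages = retrieve(query, knowledge_base, top_k=1)
print(build_prompt(query, passages))
```

Because the generated answer can only be as good as `passages`, a retriever that ranks the wrong document first undermines everything downstream, which is the dependency on retrieval quality noted above.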

Control theory provides a mathematical framework for designing systems that can regulate and stabilize dynamic processes. A key strength of control theory is its ability to provide guarantees on the stability and performance of the system. However, it can be challenging to apply control theory to complex AI systems. Yoshihara et al. (2025) use control theory in their research on enhancing AI system resilience.
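To make the idea of a stability guarantee concrete, consider the simplest textbook case: a scalar linear system x[t+1] = a * x[t], which returns to zero after a disturbance exactly when |a| < 1. The sketch below checks that condition and counts how many steps a perturbed state needs to recover. It is a deliberately simplified illustration, not the LSTM formulation of Yoshihara et al.; the function names and the tolerance are assumptions.

```python
def is_stable(a):
    """x[t+1] = a * x[t] is asymptotically stable if and only if |a| < 1."""
    return abs(a) < 1.0


def recovery_steps(a, perturbation, tolerance=1e-3, max_steps=1000):
    """Count the steps until a perturbed state decays below `tolerance`.

    Returns None if the state does not recover within `max_steps`.
    """
    x = perturbation
    for step in range(max_steps + 1):
        if abs(x) < tolerance:
            return step
        x = a * x
    return None


for a in (0.5, 0.9, 1.1):
    print(a, is_stable(a), recovery_steps(a, perturbation=1.0))
```

The appeal of the control-theoretic view is that the condition on `a` is known before any simulation is run; the difficulty noted above is that nonlinear, high-dimensional models rarely admit such a simple test.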

The research papers analyzed reveal several key findings that shed light on the capabilities and limitations of current AI systems. One interesting finding is the demonstration of limitations in Large Language Models' geometric reasoning abilities. As shown in the GeoGramBench paper (Luo et al., 2025), even the most advanced Large Language Models struggle with translating programmatic drawing code into accurate geometric interpretations. This highlights the need for further research in symbolic-to-spatial reasoning. Current models often lack the ability to accurately translate symbolic instructions into spatial relationships, indicating a gap in their reasoning capabilities.

Another important finding is the identification of vulnerabilities in Large Language Models' reasoning processes. The paper on misaligning reasoning with answers (Jiang et al., 2025) shows that Chain-of-Thought reasoning, which is designed to improve model explainability and accuracy, can be susceptible to manipulation. This suggests that while Chain-of-Thought can improve performance, it can also be exploited to produce incorrect or misleading answers. This underscores the need for developing more robust reasoning mechanisms that are less susceptible to manipulation.

Significant results also come from research on retrieval-augmented generation for specialized domains. The paper on optimizing retrieval-augmented generation for electrical engineering (Alawadhi et al., 2025) reveals the challenges of ensuring factual faithfulness and completeness in high-stakes engineering environments. Retrieval-augmented generation can enhance model performance by incorporating external knowledge, but ensuring the accuracy and completeness of the retrieved information remains a significant challenge, especially in specialized domains where errors can have serious consequences.

The paper on probe by gaming (Xu et al., 2025) demonstrates that LLMs can display conceptual knowledge in interactive gaming environments but still struggle with certain types of reasoning. Knowledge shown in interactive settings therefore does not imply the more sophisticated reasoning that complex tasks require, and further research is needed to improve LLMs' ability to reason and solve problems in interactive environments.

Finally, the research on project duplication detection (Bao et al., 2025) showcases the potential of multi-agent debate for identifying redundant projects. By enabling multiple agents to debate and evaluate different projects, it is possible to identify redundant efforts and allocate resources more effectively, which illustrates how multi-agent systems can address complex problems and improve efficiency.
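The general shape of a debate, in which several agents form independent judgments, see each other's positions, revise, and then vote, can be sketched with a toy numeric stand-in. Here each agent holds a duplicate-likelihood score in [0, 1], and moving toward the group mean replaces the natural-language argument exchange a real system would use. This is not the PD$^3$ protocol; the initial scores, the `weight` parameter, and the voting threshold are all hypothetical.

```python
def debate(initial_scores, rounds=2, weight=0.5):
    """Each round, every agent moves its score part-way toward the group mean."""
    scores = list(initial_scores)
    for _ in range(rounds):
        mean = sum(scores) / len(scores)
        scores = [(1 - weight) * s + weight * mean for s in scores]
    return scores


def verdict(scores, threshold=0.5):
    """Majority vote: True if more than half of the agents say 'duplicate'."""
    votes = sum(s >= threshold for s in scores)
    return votes > len(scores) / 2


# Hypothetical initial judgments from three agents about one project pair.
initial = [0.9, 0.8, 0.2]
final = debate(initial, rounds=2)
print("before:", initial, "duplicate?", verdict(initial))
print("after: ", [round(s, 3) for s in final], "duplicate?", verdict(final))
```

In this toy version the group mean never changes, so the debate only narrows disagreement; the coordination problem mentioned earlier shows up as the choice of `weight`, `rounds`, and the voting rule.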

CIKT: A Collaborative and Iterative Knowledge Tracing Framework with Large Language Models (Li et al., 2025) aims to address the limitations of traditional knowledge tracing methods, which often struggle with explainability, scalability, and modeling complex knowledge dependencies. The authors develop a framework that leverages Large Language Models to enhance both prediction accuracy and explainability, creating a more transparent and accurate system for tracking student learning. The framework uses a dual-component architecture consisting of an Analyst and a Predictor. The Analyst generates dynamic, explainable user profiles from students' historical responses, and the Predictor uses these profiles to forecast future performance. A key aspect of the framework is a synergistic optimization loop: the Analyst is iteratively refined based on the predictive accuracy of the Predictor, and the Predictor is subsequently retrained using the enhanced profiles. This collaborative and iterative process allows the model to continually improve its understanding of student knowledge and its ability to predict future performance. Evaluated on multiple educational datasets, the framework achieved significant improvements in prediction accuracy, enhanced explainability through dynamically updated user profiles, and improved scalability. Its clear and informative student profiles allow educators to better understand student learning progress, and the researchers showed that its predictions were more accurate than those of existing methods. The significance of this paper lies in its robust and explainable solution for advancing knowledge tracing systems, bridging the gap between predictive performance and model transparency. The researchers demonstrate the potential of Large Language Models to improve personalized education and provide valuable insights into student learning processes. The iterative refinement loop is a novel approach that could be applied to other areas where model explainability is crucial. By creating a more transparent and accurate knowledge tracing system, this research has the potential to improve student learning outcomes and provide educators with valuable tools for personalized instruction.
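The alternating structure of the Analyst and Predictor loop can be illustrated with a toy in which both components are reduced to a single number each. This is only a sketch of the control flow: in CIKT both components are Large Language Models, whereas here the Analyst is an exponential moving average with smoothing factor `alpha`, the Predictor is a threshold rule, and "refinement" is a search over a few candidate values. All names, data, and candidate grids are hypothetical.

```python
def analyst_profile(history, alpha):
    """Summarize past (skill, correct) pairs as per-skill mastery estimates."""
    profile = {}
    for skill, correct in history:
        previous = profile.get(skill, 0.5)
        profile[skill] = (1 - alpha) * previous + alpha * float(correct)
    return profile


def predictor(profile, skill, threshold):
    """Predict a correct answer when estimated mastery reaches the threshold."""
    return profile.get(skill, 0.5) >= threshold


def accuracy(sequences, alpha, threshold):
    """Predict each response from the profile built on the responses before it."""
    hits = total = 0
    for seq in sequences:
        for i in range(1, len(seq)):
            skill, correct = seq[i]
            profile = analyst_profile(seq[:i], alpha)
            hits += predictor(profile, skill, threshold) == bool(correct)
            total += 1
    return hits / total


def collaborative_loop(sequences, alphas, thresholds, iterations=3):
    alpha, threshold = alphas[0], thresholds[0]
    for _ in range(iterations):
        # Refine the Analyst, using the Predictor's accuracy as feedback.
        alpha = max(alphas, key=lambda a: accuracy(sequences, a, threshold))
        # Refit the Predictor on the refined profiles.
        threshold = max(thresholds, key=lambda t: accuracy(sequences, alpha, t))
    return alpha, threshold, accuracy(sequences, alpha, threshold)


# Invented response logs: (skill, 1 if answered correctly else 0).
sequences = [
    [("fractions", 0), ("fractions", 0), ("fractions", 1), ("fractions", 1), ("fractions", 1)],
    [("algebra", 1), ("algebra", 1), ("algebra", 0), ("algebra", 1)],
]
alphas = [0.1, 0.3, 0.5, 0.9]
thresholds = [0.3, 0.5, 0.7]

baseline = accuracy(sequences, alphas[0], thresholds[0])
alpha, threshold, final_accuracy = collaborative_loop(sequences, alphas, thresholds)
print(f"baseline={baseline:.2f} final={final_accuracy:.2f} alpha={alpha} threshold={threshold}")
```

Because each step keeps the current setting among its candidates, accuracy on these sequences cannot decrease from one iteration to the next, and the per-skill profile remains a human-readable artifact throughout.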

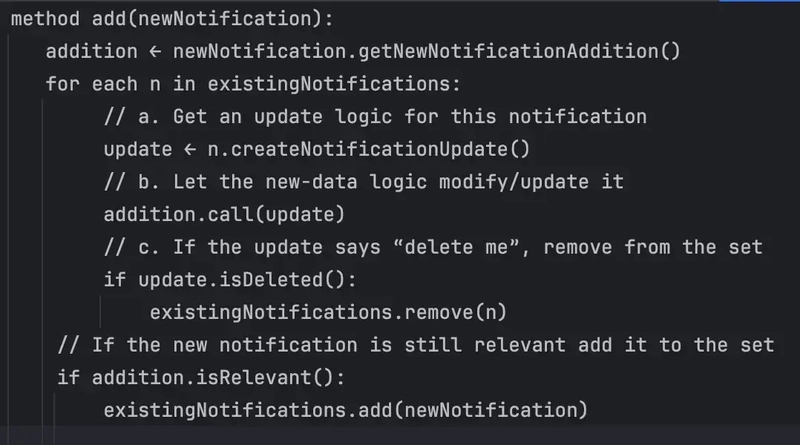

Rethinking Agent Design: From Top-Down Workflows to Bottom-Up Skill Evolution (Du et al., 2025) challenges the conventional top-down approach to agent design, in which humans decompose tasks, define workflows, and assign agents to execute each step. The authors propose a bottom-up agent paradigm that mirrors the human learning process, where agents acquire competence through experience and interaction with their environment. The method relies on a trial-and-reasoning mechanism in which agents explore, reflect on outcomes, and abstract skills over time. Once acquired, skills can be rapidly shared and extended, enabling continual evolution rather than static replication. The authors evaluated this paradigm in the games Slay the Spire and Civilization V, where agents perceive through raw visual inputs and act via mouse outputs, learning from their own experiences and sharing those experiences with other agents. The key finding is that bottom-up agents can acquire skills entirely through autonomous interaction, without any game-specific prompts or privileged APIs. The agents learned to play the games without human intervention, which highlights the potential of the bottom-up paradigm in complex, real-world environments. The paper marks a significant departure from traditional agent design and offers a promising new direction for creating more adaptable and intelligent agents. The bottom-up approach is particularly well-suited for open-ended environments where agents need to learn and evolve over time. The demonstration of skill sharing among agents could lead to more efficient and collaborative learning processes, with potential implications for robotics and other fields where agents need to operate in dynamic and unpredictable environments.
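The explore, reflect, abstract, and share cycle can be illustrated with a toy skill library. The sketch below is an assumption-heavy simplification: the environment is a hypothetical task that rewards one exact action sequence, exploration is exhaustive enumeration rather than reasoning over visual input, and a "skill" is just a stored action sequence. None of the names come from the paper.

```python
from itertools import product

ACTIONS = ("left", "right", "click")


def environment(actions, goal=("right", "right", "click")):
    """Hypothetical task: reward 1 only for the exact goal sequence."""
    return 1 if tuple(actions) == goal else 0


class SkillLibrary:
    """Shared store mapping a task name to a known-good action sequence."""

    def __init__(self):
        self.skills = {}

    def add(self, task, actions):
        self.skills[task] = tuple(actions)

    def get(self, task):
        return self.skills.get(task)


def solve(task, library, max_len=3):
    """Reuse a stored skill if one exists; otherwise explore and abstract one.

    Returns (actions, number_of_trials).
    """
    known = library.get(task)
    if known is not None:
        return known, 0
    trials = 0
    for length in range(1, max_len + 1):
        for candidate in product(ACTIONS, repeat=length):
            trials += 1
            if environment(candidate) == 1:   # reflect on the outcome
                library.add(task, candidate)  # abstract it as a reusable skill
                return candidate, trials
    return None, trials


shared = SkillLibrary()
first_actions, first_trials = solve("open_menu", shared)     # first agent explores
second_actions, second_trials = solve("open_menu", shared)   # second agent reuses
print(first_actions, first_trials, second_trials)
```

The second call needs no trials because the skill discovered by the first agent is already in the shared library, which is the efficiency argument for skill sharing in miniature.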

GeoGramBench: Benchmarking the Geometric Program Reasoning in Modern LLMs (Luo et al., 2025) addresses a gap in the evaluation of Large Language Models: their ability to reason about geometric spatial information expressed in procedural code. The researchers formalize the Program-to-Geometry task and create a benchmark for it, providing a standardized way to measure how well Large Language Models can understand and reason about geometric code. GeoGramBench consists of 500 carefully refined problems organized by a tailored three-level taxonomy that considers geometric complexity, forming a challenging and diverse dataset. The authors evaluated 17 frontier Large Language Models on this benchmark and analyzed their performance. The key finding is that there are consistent and pronounced deficiencies in Large Language Models' geometric reasoning abilities: even the most advanced models achieve less than 50% accuracy at the highest abstraction level, indicating a significant limitation in their ability to reason about spatial information. The paper highlights the unique challenges posed by program-driven spatial reasoning and establishes GeoGramBench as a valuable resource for advancing research in symbolic-to-spatial geometric reasoning. The findings underscore the need for further research into methods for improving Large Language Models' ability to understand and reason about geometric information, which is crucial for many applications in artificial intelligence, such as robotics, computer vision, and computer-aided design. They also point to the need for incorporating more structured representations and symbolic reasoning capabilities into Large Language Models.
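Reporting accuracy separately for each level of a taxonomy, rather than as one aggregate number, is what exposes a drop at the hardest level. The following is a minimal sketch of that bookkeeping; the level labels and the sample records are placeholders, not GeoGramBench data or results.

```python
from collections import defaultdict


def accuracy_by_level(records):
    """Map each level to its accuracy, given (level, is_correct) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for level, is_correct in records:
        total[level] += 1
        correct[level] += int(is_correct)
    return {level: correct[level] / total[level] for level in sorted(total)}


# Placeholder records for illustration only.
records = [
    ("level_1", True), ("level_1", True), ("level_1", False), ("level_1", True),
    ("level_2", True), ("level_2", False),
    ("level_3", False), ("level_3", False), ("level_3", True), ("level_3", False),
]

for level, acc in accuracy_by_level(records).items():
    print(f"{level}: {acc:.0%}")
```

An overall accuracy computed on the same records would hide the gap between the first and third levels, which is why a taxonomy-aware breakdown is the more informative report.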

Looking ahead, several key directions for future research emerge from these papers. Developing more robust and reliable AI systems is crucial and must be a top priority. This will require new approaches to evaluation, safety, and explainability, as well as a deeper understanding of the ethical implications of AI technology. Methods and techniques that enable researchers to build more accurate models are an active concern in the field, and AI models must be safe to deploy in real-world settings to avoid dangerous situations.

Researchers also need to explore multimodal AI more fully, building systems that can seamlessly integrate information from multiple modalities, including images, natural language, and speech. By combining these modalities, AI models can gain a better understanding of data and make better predictions.

Finally, the development of agent-based AI needs to continue. Researchers need to create autonomous agents that can solve complex problems and interact with the world effectively. Agent-based AI is increasingly used for such problems because multi-agent systems can decompose a large problem into smaller, more manageable steps and work together toward a solution.

One major challenge is to ensure that AI systems are aligned with human values and goals. As AI becomes more powerful, it is crucial to ensure that it is used in a way that benefits society as a whole. This requires careful attention to ethical considerations and the development of appropriate safeguards. As AI becomes more integrated into daily life, it will also help for people to understand the basics of how AI models work.

Another challenge is to improve the ability of AI systems to reason and generalize. Current AI systems often struggle with tasks that require common sense reasoning or the ability to adapt to new situations. Overcoming these limitations will require new approaches to AI research. It is also important for models to generalize what they have learned. For instance, knowledge acquired by a model that learns to drive a car could, in principle, be transferred to a model that learns to drive a forklift.

In conclusion, the AI field is progressing rapidly, with researchers making significant strides in various areas. However, important challenges remain. Significant effort is needed to improve the reliability, robustness, and explainability of AI systems. This will require ongoing research, careful attention to ethical considerations, and a commitment to developing AI in a responsible and beneficial way. One key takeaway is that Large Language Models are becoming increasingly powerful, but it is also important to recognize their limitations. Another key takeaway is that the development of more robust and reliable AI systems is crucial for ensuring their safe and effective deployment in real-world applications. Finally, it is essential to address the ethical implications of AI technology and ensure that it is used in a way that benefits society. Current AI research draws on many areas of computer science and mathematics, and the field continues to grow, with new models and technologies released constantly.

References:

Li, R., et al. (2025). CIKT: A Collaborative and Iterative Knowledge Tracing Framework with Large Language Models. arXiv:2505.17705

Yoshihara, S., et al. (2025). Enhancing AI System Resiliency: Formulation and Guarantee for LSTM Resilience Based on Control Theory. arXiv:2505.17696

Du, J., et al. (2025). Rethinking Agent Design: From Top-Down Workflows to Bottom-Up Skill Evolution. arXiv:2505.17673

Luo, S., et al. (2025). GeoGramBench: Benchmarking the Geometric Program Reasoning in Modern LLMs. arXiv:2505.17653

Jiang, E., et al. (2025). Misaligning Reasoning with Answers -- A Framework for Assessing LLM CoT Robustness. arXiv:2505.17406

Yao, J., et al. (2025). MMMG: a Comprehensive and Reliable Evaluation Suite for Multitask Multimodal Generation. arXiv:2505.17613

Suárez Ferreira, J., et al. (2025). Transparency and Proportionality in Post-Processing Algorithmic Bias Correction. arXiv:2505.17525

Alawadhi, S., et al. (2025). Optimizing Retrieval-Augmented Generation for Electrical Engineering: A Case Study on ABB Circuit Breakers. arXiv:2505.17520

Xu, S., et al. (2025). Probe by Gaming: A Game-based Benchmark for Assessing Conceptual Knowledge in LLMs. arXiv:2505.17512

Bao, D., et al. (2025). PD$^3$: A Project Duplication Detection Framework via Adapted Multi-Agent Debate. arXiv:2505.17492
