What is a Transformer (deep learning architecture)?


The transformer is a deep learning architecture that was developed by researchers at Google and is based on the multi-head attention mechanism, which was proposed in the 2017 paper "Attention Is All You Need".[1] Text is converted to numerical representations called tokens, and each token is converted into a vector via lookup from a word embedding table.[1] At each layer, each token is then contextualized within the scope of the context window with other (unmasked) tokens via a parallel multi-head attention mechanism, allowing the signal for key tokens to be amplified and less important tokens to be diminished. Transformers have the advantage of having no recurrent units, therefore requiring less training time than earlier recurrent neural architectures (RNNs) such as long short-term memory (LSTM).[2] Later variations have been widely adopted for training large language models (LLM) on large (language) datasets.[3]

Transformers were first developed as an improvement over previous architectures for machine translation,[4][5] but have found many applications since. They are used in large-scale natural language processing, computer vision (vision transformers), reinforcement learning,[6][7] audio,[8] multimodal learning, robotics,[9] and even playing chess.[10] They have also led to the development of pre-trained systems, such as generative pre-trained transformers (GPTs)[11] and BERT.[12]

All transformers have the same primary components:

Tokenizers, which convert text into tokens.

Embedding layer, which converts tokens and positions of the tokens into vector representations.

Transformer layers, which carry out repeated transformations on the vector representations, extracting more and more linguistic information. These consist of alternating attention and feedforward layers. There are two major types of transformer layers: encoder layers and decoder layers, with further variants.

Un-embedding layer, which converts the final vector representations back to a probability distribution over the tokens.

The following description follows exactly the Transformer as described in the original paper. There are variants, described in the following section.

By convention, we write all vectors as row vectors. This means, for example, that pushing a vector through a linear layer means multiplying it by a weight matrix on the right, as $xW$.

Tokenization

Main article: Lexical analysis

As the Transformer architecture natively processes numerical data, not text, there must be a translation between text and tokens. A token is an integer that represents a character, or a short segment of characters. On the input side, the input text is parsed into a token sequence. Similarly, on the output side, the output tokens are parsed back to text. The module doing the conversion between texts and token sequences is a tokenizer.

The set of all tokens is the vocabulary of the tokenizer, and its size is the vocabulary size $n_{\text{vocabulary}}$. When faced with tokens outside the vocabulary, a special token is typically used, written as "[UNK]" for "unknown".

Some commonly used tokenizers are byte pair encoding, WordPiece, and SentencePiece.
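The round trip between text and token sequences, including the "[UNK]" fallback, can be sketched with a toy word-level tokenizer. This is only an illustration under simplifying assumptions: real tokenizers such as byte pair encoding work on subword units, and the helper names `build_vocab`, `encode`, and `decode` are hypothetical.

```python
# Toy word-level tokenizer (illustrative only; BPE, WordPiece and
# SentencePiece split text into subword units instead of whole words).
UNK = "[UNK]"

def build_vocab(corpus):
    """Assign an integer id to each distinct word; id 0 is reserved for [UNK]."""
    vocab = {UNK: 0}
    for word in corpus.split():
        vocab.setdefault(word, len(vocab))
    return vocab

def encode(text, vocab):
    """Text -> token sequence; out-of-vocabulary words map to the [UNK] id."""
    return [vocab.get(word, vocab[UNK]) for word in text.split()]

def decode(ids, vocab):
    """Token sequence -> text."""
    id_to_word = {i: w for w, i in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)

vocab = build_vocab("the cat sat on the mat")
ids = encode("the dog sat", vocab)

print(len(vocab))          # vocabulary size: 6
print(ids)                 # [1, 0, 3]
print(decode(ids, vocab))  # the [UNK] sat
```

Note that decoding is lossy here: once "dog" has been mapped to "[UNK]", the original word cannot be recovered.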

Embedding

Further information: Word embedding

Each token is converted into an embedding vector via a lookup table. Equivalently, the lookup multiplies a one-hot representation of the token by an embedding matrix $M$. For example, if the input token is $3$, then the one-hot representation is $[0,0,0,1,0,0,\dots]$, and its embedding vector is

$$\mathrm{Embed}(3)=[0,0,0,1,0,0,\dots]M$$

The token embedding vectors are added to their respective positional encoding vectors (see below), producing the sequence of input vectors.

The number of dimensions in an embedding vector is called hidden size or embedding size and written as $d_{\text{emb}}$.[35] This size is written as $d_{\text{model}}$ in the original Transformer paper.[1]
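The equivalence between a table lookup and a one-hot multiplication can be checked with a small sketch. The matrix values below are made up for illustration (a vocabulary of 5 tokens and an embedding size of 2), and the helper names are hypothetical; vectors are row vectors multiplied on the left of the matrix, following the convention above.

```python
def one_hot(token, n_vocabulary):
    """Row vector with a single 1.0 at index `token`."""
    return [1.0 if i == token else 0.0 for i in range(n_vocabulary)]

def vec_mat(x, M):
    """Row vector times matrix: x has len(M) entries, result has len(M[0])."""
    return [sum(x[i] * M[i][j] for i in range(len(x))) for j in range(len(M[0]))]

# Hypothetical embedding matrix M: n_vocabulary = 5 rows, d_emb = 2 columns.
M = [
    [0.0, 0.1],
    [1.0, 1.1],
    [2.0, 2.1],
    [3.0, 3.1],
    [4.0, 4.1],
]

def embed(token, M):
    """Embed(token) computed as one_hot(token) M."""
    return vec_mat(one_hot(token, len(M)), M)

print(one_hot(3, len(M)))  # [0.0, 0.0, 0.0, 1.0, 0.0]
print(embed(3, M))         # [3.0, 3.1]
print(M[3])                # [3.0, 3.1], the same row obtained by direct lookup
```

In practice the lookup form is preferred, since it avoids materializing a one-hot vector of length $n_{\text{vocabulary}}$ for every token.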

Un-embedding

An un-embedding layer is almost the reverse of an embedding layer. Whereas an embedding layer converts a token into a vector, an un-embedding layer converts a vector into a probability distribution over tokens.

The un-embedding layer is a linear-softmax layer:

$$\mathrm{UnEmbed}(x)=\mathrm{softmax}(xW+b)$$

The matrix $W$ has shape $(d_{\text{emb}},n_{\text{vocabulary}})$. The embedding matrix $M$ and the un-embedding matrix $W$ are sometimes required to be transposes of each other, a practice called weight tying.[52]
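A minimal sketch of the linear-softmax un-embedding with weight tying follows. The matrix values, the zero bias, and the helper names are assumptions chosen for illustration; the only structure taken from the text is that the un-embedding matrix is the transpose of the embedding matrix and that the output is a probability distribution over tokens.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def transpose(M):
    return [list(col) for col in zip(*M)]

def unembed(x, W, b):
    """softmax(xW + b) with x a row vector of length d_emb."""
    logits = [
        sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
        for j in range(len(W[0]))
    ]
    return softmax(logits)

# Hypothetical embedding matrix: n_vocabulary = 3, d_emb = 2.
M = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]

W = transpose(M)     # weight tying: W has shape (d_emb, n_vocabulary) = (2, 3)
b = [0.0, 0.0, 0.0]  # assumed zero bias

probs = unembed([2.0, 0.0], W, b)
print(probs)  # one probability per token; tokens 0 and 2 tie for the highest
```

With tied weights, each logit is the dot product between the final vector and a token's embedding, so tokens whose embeddings align with the vector receive more probability mass.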

Train Model

Methods for stabilizing training

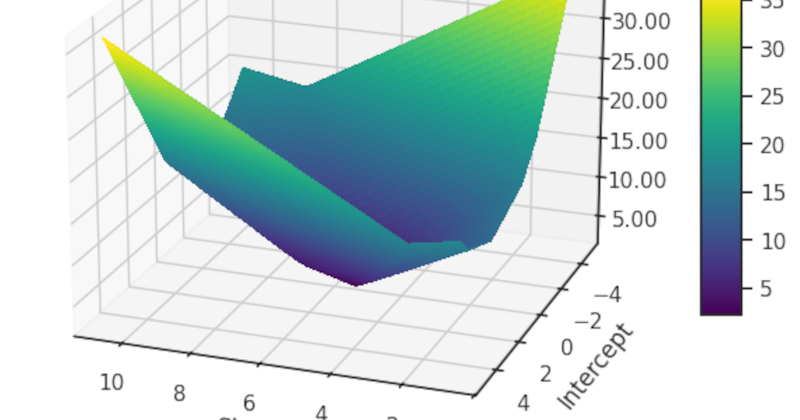

The plain transformer architecture had difficulty converging. In the original paper,[1] the authors recommended using learning rate warmup: the learning rate scales up linearly from 0 to its maximal value over the first part of training (usually recommended to be 2% of the total number of training steps), before decaying again.
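One schedule with this shape is the inverse-square-root rule, sketched below: linear growth up to `warmup_steps`, then decay proportional to the inverse square root of the step number. The function name and the default values (`d_model=512`, `warmup_steps=4000`) are assumptions for illustration, and other decay shapes are also used in practice.

```python
def warmup_lr(step, d_model=512, warmup_steps=4000):
    """Learning rate at a given training step.

    Grows linearly from 0 for step <= warmup_steps, then decays as step ** -0.5.
    """
    if step <= 0:
        return 0.0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

for step in (0, 1000, 2000, 4000, 8000, 16000):
    print(step, warmup_lr(step))
```

The two branches of `min` meet at `step == warmup_steps`, which is where the learning rate peaks.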

A 2020 paper found that using layer normalization before (instead of after) multiheaded attention and feedforward layers stabilizes training, not requiring learning rate warmup.[46]
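The difference between the two placements can be sketched as follows. This is a simplified illustration: the layer normalization omits the learned gain and bias, and `toy_sublayer` is a hypothetical stand-in for an attention or feedforward sublayer.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def post_ln_block(x, sublayer):
    """Post-LN (original arrangement): normalize after the residual addition."""
    return layer_norm([a + b for a, b in zip(x, sublayer(x))])

def pre_ln_block(x, sublayer):
    """Pre-LN: normalize only the sublayer input; the residual path is untouched."""
    return [a + b for a, b in zip(x, sublayer(layer_norm(x)))]

def toy_sublayer(x):
    # Hypothetical stand-in for attention or a feedforward network.
    return [2.0 * v for v in x]

x = [1.0, 2.0, 3.0]
post = post_ln_block(x, toy_sublayer)
pre = pre_ln_block(x, toy_sublayer)
print(post)  # always re-normalized, so its mean is (approximately) zero
print(pre)   # x plus a correction; the input passes through unchanged
```

In the pre-LN arrangement the input reaches the output through an identity path, which is one commonly cited intuition for why gradients behave better early in training.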

Pretrain-finetune

Transformers typically are first pretrained by self-supervised learning on a large generic dataset, followed by supervised fine-tuning on a small task-specific dataset. The pretrain dataset is typically an unlabeled large corpus, such as The Pile. Tasks for pretraining and fine-tuning commonly include:

language modeling[12]

next-sentence prediction[12]

question answering[3]

reading comprehension

sentiment analysis[1]

paraphrasing[1]

The T5 transformer report[47] documents a large number of natural language pretraining tasks. Some examples are:

restoring or repairing incomplete or corrupted text. For example, the input, "Thank you ~~ me to your party ~~ week", might generate the output, "Thank you for inviting me to your party last week".

translation between natural languages (machine translation)

judging the pragmatic acceptability of natural language. For example, the following sentence might be judged "not acceptable",[48] because even though it is syntactically well-formed, it is improbable in ordinary human usage: The course is jumping well.

Note that while each of these tasks is trivial or obvious for human native speakers of the language (or languages), they have typically proved challenging for previous generations of machine learning architecture.
