Explore the essentials of ETCD, a powerful distributed database


1. Introduction

With the increasing adoption of containerized applications and microservice-based architectures, the demand for scalable, consistent, and fault-tolerant storage systems has grown. ETCD has emerged as the de facto standard for distributed key-value storage in Kubernetes. This paper aims to offer an in-depth understanding of ETCD's internal mechanisms, its integration into cloud-native platforms, and its broader implications in the realm of distributed computing.

ETCD enables critical capabilities such as dynamic configuration, service registration, leader election, and state synchronization in distributed systems. Understanding how ETCD works not only provides insight into Kubernetes but also offers valuable lessons in distributed consensus, replication, and system design.

2. Background and Theoretical Foundations

2.1 Origin and Development

ETCD was created by CoreOS as part of its vision to simplify the deployment and management of container-based infrastructure. Its core principles include simplicity, speed, and strong consistency. The project gained rapid adoption, was donated to the CNCF in 2018, and reached the foundation's graduated maturity level in 2020.

Since then, the ETCD community has grown substantially. Contributions span performance tuning, ecosystem tooling, security enhancements, and better integration with orchestration systems.

2.2 Raft Consensus Algorithm

The Raft consensus algorithm underpins ETCD’s reliability. Raft maintains consensus among nodes by electing a leader who handles all client write requests. This leader replicates log entries to follower nodes. If the leader fails, a new one is automatically elected.

Raft ensures consistency through a series of rules regarding log matching, commit policies, and election timeouts. By abstracting away complex failure scenarios, Raft offers engineers a more intuitive alternative to Paxos.
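As a rough illustration of the commit rule, the sketch below computes the highest log index that is stored on a majority of nodes. The function name `majority_commit_index` and the list-of-integers input are assumptions made for this example, not part of ETCD. Real Raft also requires that the entry belong to the leader's current term, which this simplified version leaves out.

```python
def majority_commit_index(match_indexes):
    """Return the highest log index replicated on a majority of nodes.

    match_indexes holds, for every node including the leader, the index of
    the last log entry known to be stored on that node.
    """
    if not match_indexes:
        raise ValueError("cluster must have at least one node")
    quorum = len(match_indexes) // 2 + 1
    # Sorted descending, the value at position quorum-1 is present on at
    # least `quorum` nodes, and no higher index has that property.
    ranked = sorted(match_indexes, reverse=True)
    return ranked[quorum - 1]
```

With five nodes whose last stored indexes are 7, 5, 5, 3, and 2, the entry at index 5 is on three nodes and can be committed, while index 7 exists only on the leader and cannot.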

Recommended Reading: Ongaro, D., & Ousterhout, J. (2014). In Search of an Understandable Consensus Algorithm (Raft). USENIX ATC.

3. System Architecture and Operational Model

3.1 Cluster Composition

An ETCD cluster should have an odd number of members. Quorum is a majority of the members, so an even-sized cluster still works but tolerates no more failures than the next smaller odd size. At any time, only one node acts as the leader, while the others are followers. Write requests are routed through the leader and then replicated across the cluster.
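The quorum arithmetic behind the odd-size recommendation can be sketched in a few lines. The helper names `quorum` and `fault_tolerance` are chosen for this example only.

```python
def quorum(members):
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def fault_tolerance(members):
    """How many members can fail while a majority still remains."""
    return members - quorum(members)


if __name__ == "__main__":
    # Print cluster size, quorum, and tolerated failures side by side.
    for n in range(1, 8):
        print(n, quorum(n), fault_tolerance(n))
```

Going from 3 to 4 members raises the quorum from 2 to 3 but leaves the tolerated failures at 1, which is why the extra member adds cost without adding resilience.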

To reduce write latency and increase reliability, ETCD uses batching, log compaction, and snapshotting. These mechanisms allow the cluster to manage large volumes of state changes while maintaining performance.

3.2 Data Storage and WAL

ETCD uses a Write-Ahead Log (WAL) and snapshots to manage persistence. The WAL logs each write before committing it to the BoltDB storage backend. Snapshots reduce disk usage and recovery time by capturing periodic full states.
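The write-ahead idea can be shown with a toy store that appends each write to a log file and syncs it to disk before updating its in-memory state. This is a minimal sketch, not ETCD's actual WAL format: the class name `TinyWAL`, the JSON-lines encoding, and the plain dictionary standing in for the storage backend are all assumptions for illustration.

```python
import json
import os


class TinyWAL:
    """Toy key-value store that logs every write before applying it."""

    def __init__(self, path):
        self.path = path
        self.state = {}
        self._replay()  # rebuild state from any existing log
        self._log = open(path, "a", encoding="utf-8")

    def _replay(self):
        if not os.path.exists(self.path):
            return
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                self.state[record["key"]] = record["value"]

    def put(self, key, value):
        # 1. Append the record and force it to disk.
        self._log.write(json.dumps({"key": key, "value": value}) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        # 2. Only then apply it to the in-memory state.
        self.state[key] = value

    def close(self):
        self._log.close()
```

Opening a second `TinyWAL` on the same path after a crash replays the log and recovers every acknowledged write. A snapshot would let such a store skip replaying the log from the very beginning.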

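Compaction and defragmentation limit storage bloat, but they are not equally automatic. Compaction of old key revisions can be automated through ETCD's auto-compaction settings, and the Kubernetes API server also requests compactions periodically. Defragmentation, which returns the freed space to the filesystem, has to be triggered by an operator, for example with etcdctl defrag.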

3.3 API and Access

ETCD provides a powerful gRPC-based API (v3), supporting a wide array of functions beyond basic CRUD:

- Atomic Compare-And-Swap (CAS) operations

- Range queries

- Lease-based keys with TTL

- Event-based watchers for reactive designs

CLI tools like etcdctl and libraries in Go, Python, and Rust allow integration across diverse platforms.
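The compare-and-swap and range operations listed above can be illustrated with an in-memory toy. This is a sketch of the idea only and does not use a real ETCD client: the class `TinyKV` and its method names are hypothetical. It follows the v3 convention that a key which does not exist compares as modification revision 0.

```python
class TinyKV:
    """In-memory toy that mimics revision-based compare-and-swap."""

    def __init__(self):
        self.revision = 0   # store-wide revision counter
        self._data = {}     # key -> (value, mod_revision)

    def put(self, key, value):
        self.revision += 1
        self._data[key] = (value, self.revision)
        return self.revision

    def get(self, key):
        """Return (value, mod_revision), or None if the key is absent."""
        return self._data.get(key)

    def compare_and_swap(self, key, expected_mod_revision, new_value):
        """Write only if the key was last modified at the expected revision.

        An expected revision of 0 means "the key must not exist yet".
        """
        current = self._data.get(key)
        current_revision = current[1] if current else 0
        if current_revision != expected_mod_revision:
            return False
        self.put(key, new_value)
        return True

    def range(self, prefix):
        """Return sorted (key, value) pairs whose key starts with prefix."""
        return sorted(
            (k, v) for k, (v, _) in self._data.items() if k.startswith(prefix)
        )
```

A writer that read a key at revision 1 and tries to swap it after someone else has already updated it gets `False` back and must re-read before retrying, which is what makes the operation safe under concurrency.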

4. Integration with Kubernetes

Kubernetes uses ETCD as its sole source of cluster state. Every resource created or modified within Kubernetes is stored in ETCD:

- Cluster topology (Nodes, API Servers)

- Workloads (Pods, ReplicaSets, Deployments)

- Policies (RBAC, NetworkPolicies)

- Secrets and ConfigMaps

4.1 Controller Loop Dependency

Controllers in Kubernetes read cluster state through the kube-apiserver, which in turn reads from and writes to ETCD, and work to reconcile actual and desired states. The kube-apiserver is the only component that communicates directly with ETCD, ensuring a clear separation of responsibilities.

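Example: If a pod is lost, for instance because its node fails or the pod is deleted, the ReplicaSet controller observes through the API server that fewer replicas exist than desired and creates a replacement Pod object. The scheduler then assigns the new pod to a node, and the kubelet on that node starts it.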
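The core of such a reconciliation step can be sketched as a pure function that compares the desired replica count with the pods currently observed. The function name `reconcile` and the tuple-based action format are assumptions for this example. A real controller would issue the corresponding requests to the API server rather than return a list.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move from the observed to the desired state.

    running_pods is a list of pod names currently observed.
    """
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: request one creation per missing replica.
        return [("create", None)] * diff
    if diff < 0:
        # Too many pods: remove the surplus (here simply the last ones listed).
        return [("delete", name) for name in running_pods[diff:]]
    return []
```

Because the function depends only on desired and observed state, running it repeatedly is harmless: once the two match, it returns no actions.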

5. Key Features

5.1 Scalability

ETCD supports high concurrency and low latency through distributed replication and request pipelining. It can handle tens of thousands of keys and thousands of operations per second.

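Because every write goes through the leader and must be replicated to a majority, adding members improves fault tolerance rather than write throughput. Large Kubernetes clusters therefore depend on a well-provisioned ETCD cluster, with fast disks and a low-latency network, for rapid reconciliation and failover.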

5.2 High Availability

High availability is achieved via quorum-based consensus. ETCD preserves consistency during network partitions: the side that still holds a majority of members continues to accept writes, while a minority side cannot commit new writes until it rejoins.
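The effect of a partition can be worked out directly from the group sizes. The sketch below is illustrative only, and the function name `writable_side` is made up for this example.

```python
def writable_side(partition_sizes):
    """Return the index of the partition that holds a majority, or None.

    partition_sizes lists how many cluster members ended up in each
    isolated group after a network partition.
    """
    total = sum(partition_sizes)
    quorum = total // 2 + 1
    for index, size in enumerate(partition_sizes):
        if size >= quorum:
            return index
    return None
```

A five-member cluster split 3/2 keeps accepting writes on the three-member side. A four-member cluster split 2/2 has no majority anywhere, so neither side can commit writes until connectivity returns.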

Leader re-elections are quick, minimizing downtime. Multi-AZ deployments are recommended to improve fault tolerance.

5.3 Security

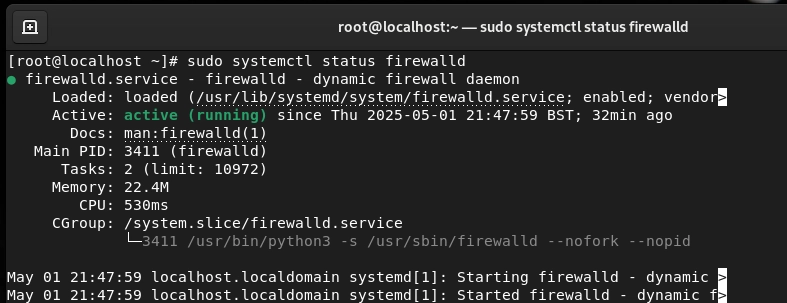

ETCD supports mutual TLS (mTLS) for both client-server and peer-to-peer communication. It also enables client certificate authentication and role-based access policies.

Tip: Use automated certificate rotation and enforce RBAC to minimize risk.

5.4 Real-Time Configuration with Watches

ETCD watches allow services to react immediately to state changes. Watches are often used for:

- Dynamic configuration reloads

- Service status monitoring

- Custom controller logic

Example: A CI/CD system watching /deployments/pending in ETCD can trigger deployment workflows when new keys appear.
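The watch pattern can be mimicked in memory with prefix-based callbacks. This is a toy sketch and not an ETCD client: `WatchHub` and its methods are hypothetical names, and unlike real watches it delivers events synchronously and keeps no revision history.

```python
from collections import defaultdict


class WatchHub:
    """Toy key-value store that notifies callbacks registered on a key prefix."""

    def __init__(self):
        self._data = {}
        self._watchers = defaultdict(list)  # prefix -> list of callbacks

    def watch(self, prefix, callback):
        """Register callback(event_type, key, value) for keys under prefix."""
        self._watchers[prefix].append(callback)

    def put(self, key, value):
        self._data[key] = value
        self._notify("PUT", key, value)

    def delete(self, key):
        if key in self._data:
            del self._data[key]
            self._notify("DELETE", key, None)

    def _notify(self, event_type, key, value):
        for prefix, callbacks in self._watchers.items():
            if key.startswith(prefix):
                for callback in callbacks:
                    callback(event_type, key, value)
```

A deployment trigger would register a callback on the `/deployments/pending/` prefix and start a workflow whenever a `PUT` event arrives for a new key.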

6. Use Cases and Application Domains

6.1 Configuration Management

Centralizing config in ETCD promotes consistency and traceability. Configurations can be versioned, dynamically updated, and scoped per namespace or service.

6.2 Service Discovery

In smaller architectures or when outside Kubernetes, ETCD provides lightweight service discovery. Combined with TTL and leases, ephemeral service registration becomes feasible.
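Ephemeral registration can be pictured as keys that are only visible while their lease is alive. The following sketch simulates that behavior in memory. `LeaseStore`, its method names, and the injectable `clock` callable are assumptions made so the example runs without an ETCD server.

```python
class LeaseStore:
    """Toy store in which keys disappear once their lease expires."""

    def __init__(self, clock):
        self._clock = clock   # callable returning the current time in seconds
        self._leases = {}     # lease_id -> expiry time
        self._keys = {}       # key -> (value, lease_id)
        self._next_id = 1

    def grant(self, ttl):
        """Create a lease that expires ttl seconds from now."""
        lease_id = self._next_id
        self._next_id += 1
        self._leases[lease_id] = self._clock() + ttl
        return lease_id

    def keep_alive(self, lease_id, ttl):
        """Extend a live lease by ttl seconds from now."""
        if not self._alive(lease_id):
            raise KeyError("lease expired or unknown")
        self._leases[lease_id] = self._clock() + ttl

    def put(self, key, value, lease_id):
        if not self._alive(lease_id):
            raise KeyError("lease expired or unknown")
        self._keys[key] = (value, lease_id)

    def get(self, key):
        entry = self._keys.get(key)
        if entry is None:
            return None
        value, lease_id = entry
        if not self._alive(lease_id):
            del self._keys[key]  # lease gone, so the registration is gone
            return None
        return value

    def _alive(self, lease_id):
        expiry = self._leases.get(lease_id)
        return expiry is not None and self._clock() < expiry
```

A service instance registers its address under a lease and refreshes the lease while healthy. If the instance dies, the refreshes stop and the registration vanishes without any explicit cleanup.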

6.3 Distributed Coordination

ETCD can be used to build:

- Leader elections

- Distributed locks

- Session management

Example (123456 is a placeholder; use the lease ID printed by the grant command):

etcdctl lease grant 60

etcdctl put /election/db-primary instance1 --lease=123456
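Note that a plain put overwrites whatever value is already stored, so an election also needs a create-if-absent check so that only the first candidate wins. The toy below sketches that rule in memory. `ElectionKey` and its methods are hypothetical names, and a real client would typically express the check as a transaction against the store.

```python
class ElectionKey:
    """Toy stand-in for a single election key guarded by create-if-absent."""

    def __init__(self):
        self.leader = None

    def campaign(self, candidate):
        """Claim leadership only if nobody holds the key.

        Returns the name of whoever is leader after the attempt.
        """
        if self.leader is None:
            self.leader = candidate
        return self.leader

    def resign(self, candidate):
        """Release the key, as lease expiry or revocation would."""
        if self.leader == candidate:
            self.leader = None
```

Tying the key to a lease, as in the commands above, is what lets leadership lapse automatically when the current leader stops renewing it.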

6.4 Backup and Disaster Recovery

Snapshots and WAL backups form the basis for disaster recovery. Administrators should:

- Schedule periodic snapshots

- Store them offsite

- Test restores in isolated environments

Tools like etcdutl handle offline tasks such as checking a snapshot's status, restoring from a snapshot, and defragmenting a data directory.
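One inexpensive safeguard when storing snapshots offsite is to confirm that the copy is byte-identical to the original before relying on it. The sketch below does this with a SHA-256 checksum. The function names and the idea of comparing two local paths are assumptions for illustration, and a checksum match does not replace an actual restore test.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copies_match(local_path, offsite_path):
    """True if both snapshot files have identical contents."""
    return sha256_of(local_path) == sha256_of(offsite_path)
```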

7. Comparative Analysis with Other Systems

| Feature | ETCD | Consul | ZooKeeper | Redis |
|---|---|---|---|---|
| Strong Consistency | ✅ | ❌ | ✅ | ❌ |
| Native Kubernetes Integration | ✅ | ❌ | ❌ | ❌ |
| Watch/Notification | ✅ | ✅ | ✅ | ❌ |
| GUI Dashboard | ❌ | ✅ | ❌ | ✅ |
| TTL Support | ✅ | ✅ | ❌ | ✅ |
| In-Memory | ❌ | ❌ | ❌ | ✅ |

While Consul and ZooKeeper offer rich service discovery and coordination, ETCD excels in simplicity, integration, and strong consistency guarantees.

8. Implementation Guidelines

8.1 Best Practices

- Maintain odd-numbered node counts (e.g., 3, 5, 7)

- Separate ETCD from user workloads

- Use SSDs for low latency and better WAL throughput

- Regularly defragment data stores

- Set resource limits and alerts on memory/disk usage

8.2 Deployment Tools

- kubeadm initializes secure clusters with ETCD

- Helm charts provide quick testing environments

- Operators (e.g., etcd-operator) automate scaling, backup, and restoration

8.3 Common Pitfalls

- Ignoring disk I/O leads to slow WAL writes

- Misconfigured peer discovery or bootstrap settings can leave members unable to join, or cause them to form separate clusters whose data diverges

- Not monitoring leader churn can obscure larger network issues

9. Future Directions and Research Opportunities

Future innovation in ETCD may include:

- Integration with CRDTs for better partition handling

- Extended observability and visual cluster health tools

- Native support for edge deployments and hybrid clouds

- LSM-tree-based storage engine alternatives

Academically, there is ongoing research into alternative consensus models, such as EPaxos and HotStuff, which may eventually inspire new implementations or ETCD forks.

10. Conclusion

ETCD exemplifies the principles of reliability, simplicity, and consistency in distributed system design. Its foundational role in Kubernetes has made it one of the most widely used consensus-based key-value stores in production. Mastering ETCD equips system administrators, DevOps teams, and cloud engineers with essential knowledge for designing robust, scalable systems.

As the demand for high-availability services grows, ETCD will remain a critical infrastructure component for orchestrating modern distributed applications.

References

- Ongaro, D., & Ousterhout, J. (2014). In Search of an Understandable Consensus Algorithm (Raft). USENIX ATC.

- Cloud Native Computing Foundation. ETCD Project Page

- Kubernetes Documentation. ETCD in Kubernetes

- GitHub Repository. etcd-io/etcd

- The Secret Lives of Data. Raft Visualization

- Red Hat. (2022). Best Practices for Running etcd in Production
