Voices at the Threshold

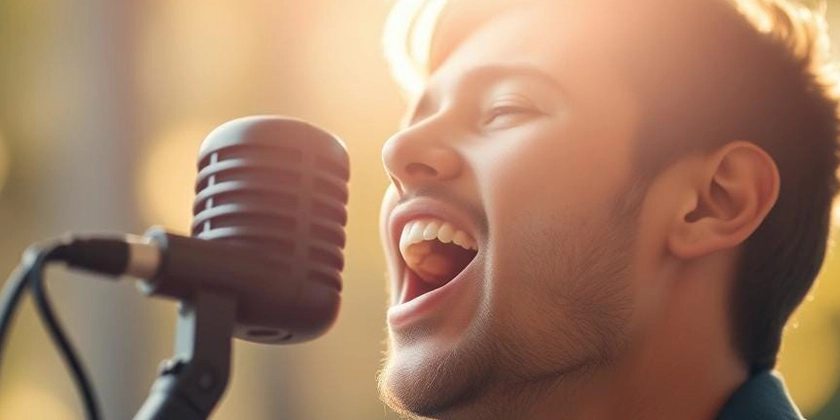

In the quiet corners of recording studios, a technological storm is brewing. Artificial intelligence, capable of replicating the unique timbres and resonant qualities of human speech, promises innovation—but brings with it profound ethical challenges. Where voice actors once saw stable creative livelihoods, they now confront serious questions about consent, identity, ownership, and even the meaning of artistic integrity itself.

Together, let’s step carefully into this nuanced landscape—where the human voice and machine capability meet, mix, and inevitably conflict.

The Human Voice as Personal Identity

For voice performers, their instrument isn’t merely technical or professional. It's deeply personal: a distinctive signature inseparable from their identity. Unlike written words or musical notes, a voice embodies a person's emotions, intentions, identity—capturing nuances as subtle as personality itself.

This personal dimension transforms voice replication from a mere technical advance into an ethical minefield. At stake isn’t just property, but personhood itself.

The Meaning of True Consent

Consent is our first ethical foundation stone, yet it isn't always straightforward. Truly ethical practice requires informing performers not just that their voices might be used, but precisely how they're captured, stored, and reproduced, and, crucially, how well they're safeguarded.

The risks aren't hypothetical. Unprotected voice data could lead to identity theft or malicious replication. Ethical responsibility means performers must possess clear awareness and granular control over voice reproduction at every stage.

Protecting Ephemeral Identity

A voice is fleeting yet recognisable—a puzzling tension that our current legal frameworks struggle to capture. Traditional intellectual property protections revolve around tangible, fixed works. But how do we protect something ephemeral like vocal nuance that AI can reproduce precisely and indefinitely?

Proposals such as the US "NO FAKES Act" offer some hope of protection against unauthorised replicas. Yet fundamental questions remain unanswered: who, precisely, owns AI-generated voices? Performers? Developers? Producers?

These aren't abstract legal theories—they shape livelihoods, creative possibilities, and ethical responsibilities.

Navigating Job Security

Beyond existential questions of identity lie pressing concerns about professional viability. AI voices offer tempting advantages: scalability, availability, cost-efficiency. Still, artificial voices can't yet fully replicate human emotional nuance or improvisational artistry, the qualities that create moving, meaningful performances.

Yet this reassurance provides little comfort when markets value efficiency above experience. Many voice actors worry about gradual displacement, wondering if they'll be shifted aside by technology’s relentless march forward.

Could collaboration provide a solution? Rather than complete AI substitution, perhaps a union of human and machine capabilities could elevate creative outputs while honouring human contributions—in essence, preserving art’s essential humanity.

Controversies That Define the Moment

Four pivotal developments illuminate these ethical dilemmas:

OpenAI's "Sky" Controversy

OpenAI’s "Sky", one of the voices offered in ChatGPT, was accused of closely resembling the voice of actor Scarlett Johansson without her permission. OpenAI denied imitating her but paused the voice. The controversy thrust AI ethics into sharp public view, reinforcing the necessity of obtaining explicit, documented consent before building synthetic voices that evoke distinctive human ones.

LOVO's Legal Battle

The lawsuit against LOVO, brought by voice actors who alleged that their recorded voices had been used to build AI clones without proper authorisation, highlighted a clear need for transparency in data collection and active compliance with privacy regulations. It underscored that ambitious technology carries significant ethical responsibilities.

ELVIS Act

Tennessee’s ELVIS Act (the Ensuring Likeness, Voice, and Image Security Act), signed into law in 2024, extended the state's protections against unauthorised commercial use of a person's likeness to cover their voice, including AI-generated imitations. Though clearly well-intentioned, and aimed in part at protecting legacies, it raised difficult questions about posthumous consent, resurrected identities, and the commercialisation of those no longer able to speak for themselves.

SAG-AFTRA’s AI Agreement

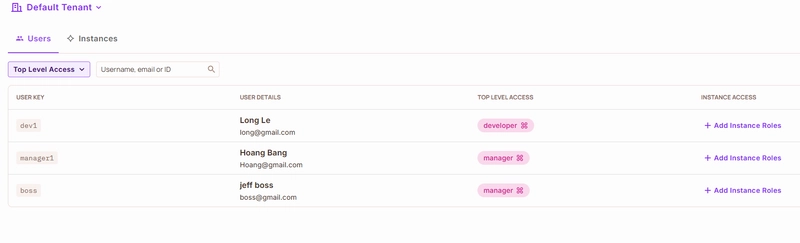

Amid the uncertainty came an important milestone: SAG-AFTRA’s landmark AI voice agreement with Replica Studios, announced in January 2024. It set out informed-consent procedures and compensation terms for licensing performers' digital voice replicas for use in video games.

For supporters, this represented significant progress: a robust framework protecting voice artists. Critics, however, called the agreement inadequate. They argued that, far from securing the profession's future, these concessions could further erode traditional employment, sacrificing long-term stability for short-term compensation.

The agreement thus sparked heated debate, a vivid reflection of the tensions inherent in balancing innovation and professional protection.

Practical Pathways Forward

Ethical AI implementation cannot afford to be purely theoretical. Concrete actions are imperative if innovation is genuinely to harmonise with responsibility. The voice-technology ecosystem must commit itself fully:

Establishing Complete Transparency

Transparency forms the ethical bedrock for trustworthy integration. Clear labelling for synthetic vocal performances ensures performers, producers, and audiences fully understand AI’s involvement—building an environment of trust and informed choice.

Consent Must Evolve

Europe’s General Data Protection Regulation (GDPR) offers a powerful model for truly informed, continuous consent: consent must be specific and informed, and it can be withdrawn at any time. Applied to voice work, that principle would give performers autonomy over their "digital vocal identities", with ongoing mechanisms to manage, update, or withdraw consent at any stage of a project.

Rewarding Human Contribution

As voice technologies develop, robust compensation models become increasingly urgent—royalties, licensing structures, or residual payments that value human input fairly within the ongoing processes of AI training, creation, and marketing.

Protecting Security and Integrity

Secure technologies aren’t luxuries—they’re fundamental prerequisites. State-of-the-art encryption measures, robust security protocols, and vigilant oversight are all necessary to defend vocal data from misuse, malfeasance, and exploitation.

Collaborative Industry Standards

Finally, comprehensive standards must emerge democratically, through collaborative dialogue encompassing unions, regulators, creators, and technology developers. Such genuine collaboration fosters responsiveness and responsible ethical practice—protecting humans without stifling innovation.

Towards Harmonious Integration

AI's impact on voice acting needn't be adversarial. Done right, collaboration can unlock extraordinary creative possibilities, uniquely augmenting human artistry rather than replacing it.

Yet genuine harmony demands continuous vigilance. Ethical safeguards ensure technological advancements serve human expression—not distort, suppress, or diminish it. To achieve this delicate balance, we must remain steadfast in our commitment to transparent practices and equitable solutions.

In the delicate interplay between human creativity and digital prowess—artistic authenticity and technological ingenuity—the pathway is challenging, but ultimately hopeful. The answer isn't to retreat from AI’s possibilities but to embrace them responsibly and ethically, ensuring human ideas, emotions, and voices remain valued and protected.

The future stands waiting—let’s meet it together, unified in vision, committed in intention, and guided at every step by integrity.

Publishing History

- URL: https://rawveg.substack.com/p/voices-at-the-threshold

- Date: 14th April 2025
