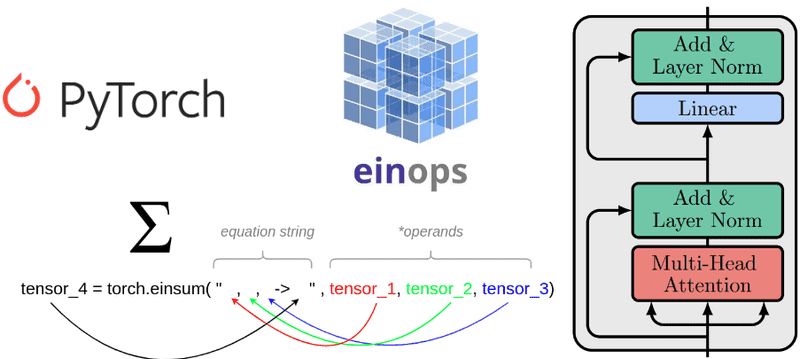

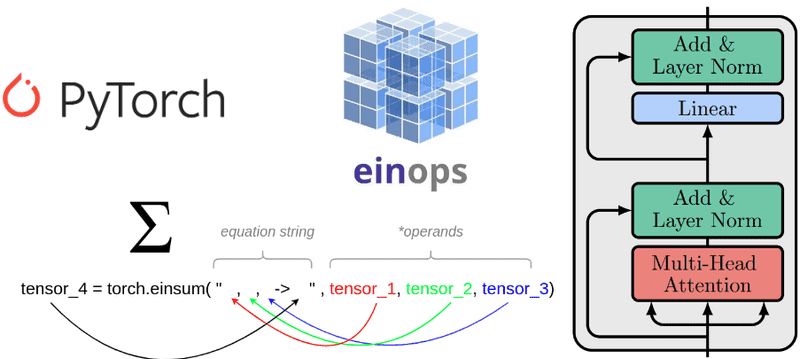

Understanding einsum for Deep learning: implement a transformer with multi-head self-attention from scratch

Learn about the einsum notation and einops by coding a custom multi-head self-attention unit and a transformer block

May 31, 2025
