Thought 20250507 What is MCP

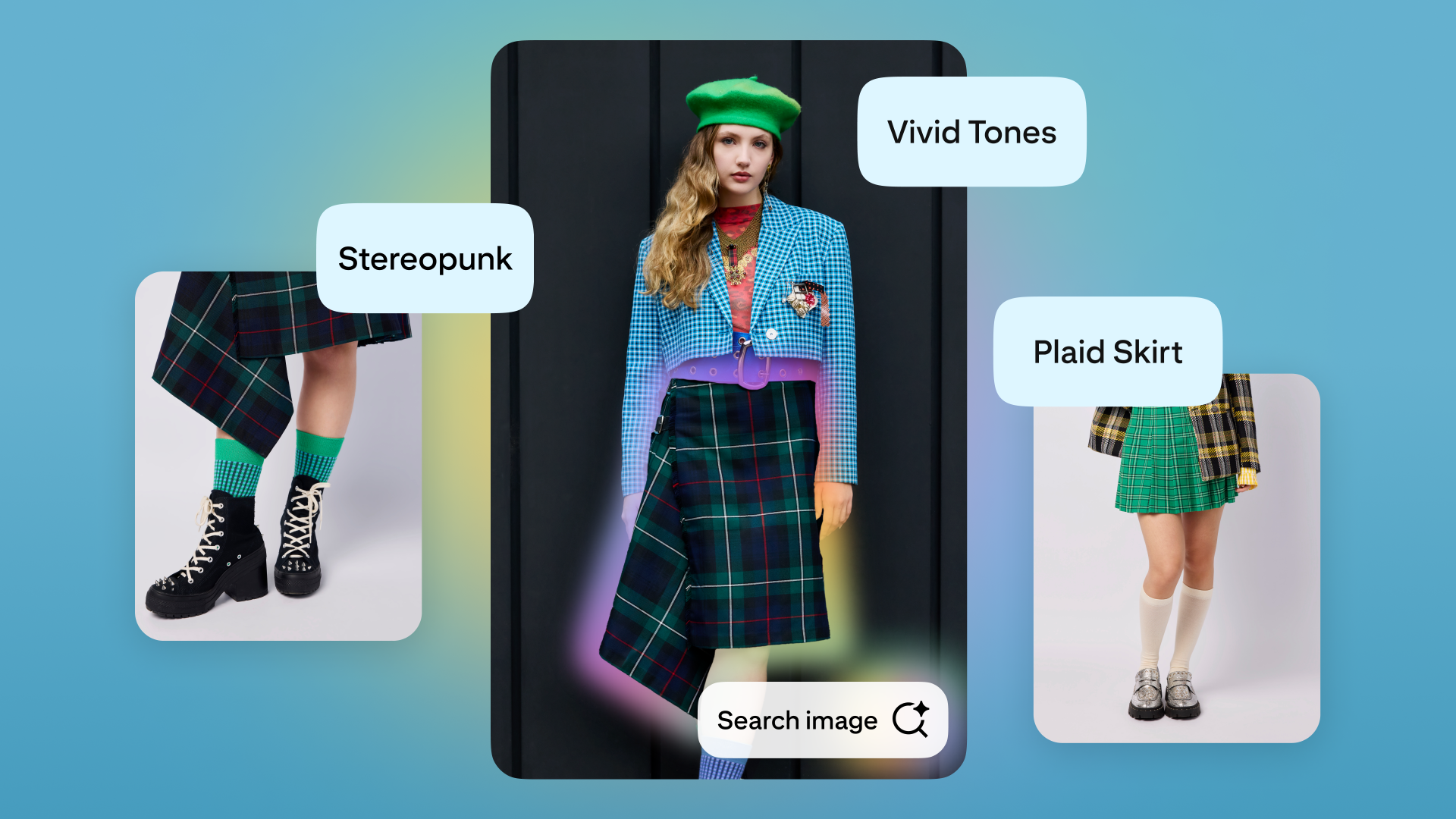

There is a lot of noise about "MCP (Model Context Protocol)", and as people at the forefront of technology we have no choice but to follow it. Although I am wary at first and the AI hype drains my energy, careful study often turns out to generate useful insights.

MCP is a protocol, but most of the protocol work was already done when OpenAI announced tool use for ChatGPT. What matters now is the standardization effort led by MCP's publisher, Anthropic (creator of the Claude models and tools). In addition to the published specification, MCP offers server/client implementations for C#, Python, and JavaScript, which can speed up and streamline development.

Whenever experienced developers see a tool use API (e.g. OpenAI's), they immediately think of automatic code generation to streamline the tool definition process, and they build custom tooling to define and serve those tools. MCP offers a ready-to-use implementation, in the language of your choice, of a stateful server.
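To make the "automatic code generation" idea concrete, here is a minimal sketch that derives a tool description from an ordinary Python function by reflection. It uses only the standard library. The helper `tool_definition`, the type mapping, and the example function `add` are hypothetical, and the field names (`name`, `description`, `inputSchema`) are only loosely modeled on how tool listings tend to look, so treat the shape as an assumption rather than the official schema.

```python
import inspect
import json
from typing import get_type_hints

# Hypothetical mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_definition(func):
    """Build a tool description (name, docstring, input schema) from a function."""
    hints = get_type_hints(func)
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Fall back to "string" when the annotation is missing or unknown.
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def add(a: int, b: int = 0) -> int:
    """Add two integers."""
    return a + b


if __name__ == "__main__":
    print(json.dumps(tool_definition(add), indent=2))
```

The point of the sketch is that the tool description is derived from the code itself, which is the "reflective" property discussed in this post; an SDK mostly saves you from writing this plumbing by hand.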

But here is a critical observation: does MCP have anything to do with AI/LLMs at all? It reinforces what should have been done already: making systems reflective and accessible from outside systems. Here is a pun: it requires existing systems to be more reasonable (also see the Forbes article on GEO).

Traditionally, when a piece of software is developed and the original developer does not expose useful APIs, power users have no obvious way of automating it except through the hackier GUI automation route. Now, under pressure from AI, vendors have to expose a useful interface. An LLM, with its natural language nature, is an easier target for them to pick than a more specific scripting language (or REST API), especially when the MCP server/client framework is stateful and uses JSON-RPC.
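As a rough illustration of what "uses JSON-RPC" means in practice, the sketch below handles JSON-RPC 2.0 requests for listing and calling tools. The method names `tools/list` and `tools/call` and the result shape follow my reading of the MCP specification and should be checked against it; the `TOOLS` registry and the `handle` function are hypothetical, and a real server would also deal with initialization, sessions, and transport.

```python
import json

# Hypothetical tool registry: tool name -> plain Python callable.
TOOLS = {"add": lambda a, b: a + b}


def handle(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    resp = {"jsonrpc": "2.0", "id": req.get("id")}
    method = req.get("method")
    params = req.get("params", {})
    if method == "tools/list":
        resp["result"] = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # -32602 is the JSON-RPC 2.0 "Invalid params" error code.
            resp["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            value = tool(**params.get("arguments", {}))
            resp["result"] = {"content": [{"type": "text", "text": str(value)}]}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        resp["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(resp)


if __name__ == "__main__":
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
    }
    print(handle(json.dumps(request)))
```

Nothing in this exchange is specific to LLMs: any client that speaks JSON-RPC could drive the same interface, which is the observation made above.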

Also see: Medium post on "Some Reflections on MCP".
