The Future of GitOps: Integrating AI, FinOps, and GreenOps for Intelligent Operations

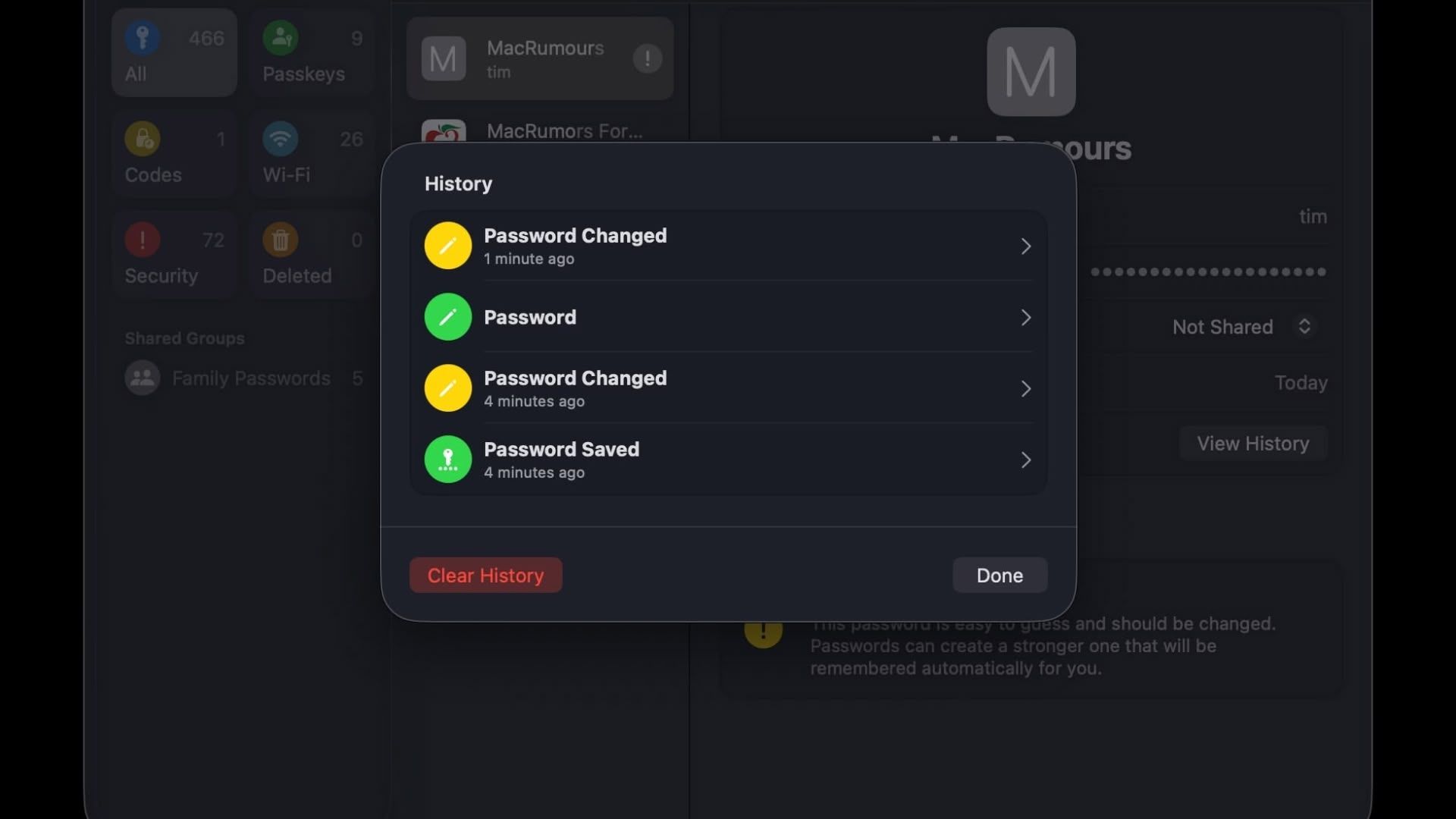

Introduction: The Evolution of GitOps

GitOps has changed how many organizations manage their infrastructure and applications, making operations declarative, automated, and auditable. At its core, GitOps rests on four principles: the desired state of the system is declared rather than scripted; that desired state is versioned in Git, which serves as the single source of truth; software agents automatically synchronize the actual state with the desired state in Git; and those agents continuously reconcile the two, detecting and correcting any drift. This approach has brought greater transparency, stability, and speed to software delivery. As cloud-native environments grow in complexity and cost, and as sustainability becomes a pressing concern, GitOps is a natural foundation for more intelligent, cost-aware, and environmentally conscious practices. This article explores the convergence of GitOps with Artificial Intelligence (AI), Financial Operations (FinOps), and Green Operations (GreenOps), and shows how these practices build on, rather than replace, the automated, declarative, and auditable nature of GitOps.
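To make the reconciliation principle concrete, here is a minimal Python sketch of a single reconciliation pass. It assumes a deliberately simplified model in which state is a mapping from a resource name to a replica count; the names `diff` and `reconcile` are hypothetical, and real controllers such as Argo CD or Flux compare complete Kubernetes objects. Running this pass repeatedly, on a timer or on change events, is what makes reconciliation continuous.

```python
from typing import Dict, Optional, Tuple

# Simplified, hypothetical model: resource name -> replica count.
State = Dict[str, int]
# For each drifted resource: (actual value, desired value); None means "absent".
Drift = Dict[str, Tuple[Optional[int], Optional[int]]]


def diff(desired: State, actual: State) -> Drift:
    """Return every resource whose actual value differs from the desired value."""
    keys = set(desired) | set(actual)
    return {
        k: (actual.get(k), desired.get(k))
        for k in sorted(keys)
        if desired.get(k) != actual.get(k)
    }


def reconcile(desired: State, actual: State) -> State:
    """One reconciliation pass: converge the actual state onto the desired state."""
    new_actual = dict(actual)
    for name, (_, want) in diff(desired, actual).items():
        if want is None:
            new_actual.pop(name)      # not declared in Git: prune it (assumes pruning is enabled)
        else:
            new_actual[name] = want   # create the resource or correct its drifted value
    return new_actual
```

For example, with `desired = {"api": 3, "worker": 2}` and `actual = {"api": 5, "cache": 1}`, `diff` reports drift on all three resources and `reconcile` returns exactly the desired mapping. Pruning undeclared resources (`cache` here) mirrors controllers that have pruning enabled; whether pruning happens is a per-tool setting, not a universal default.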

AI-Powered GitOps: Intelligent Automation and Self-Healing

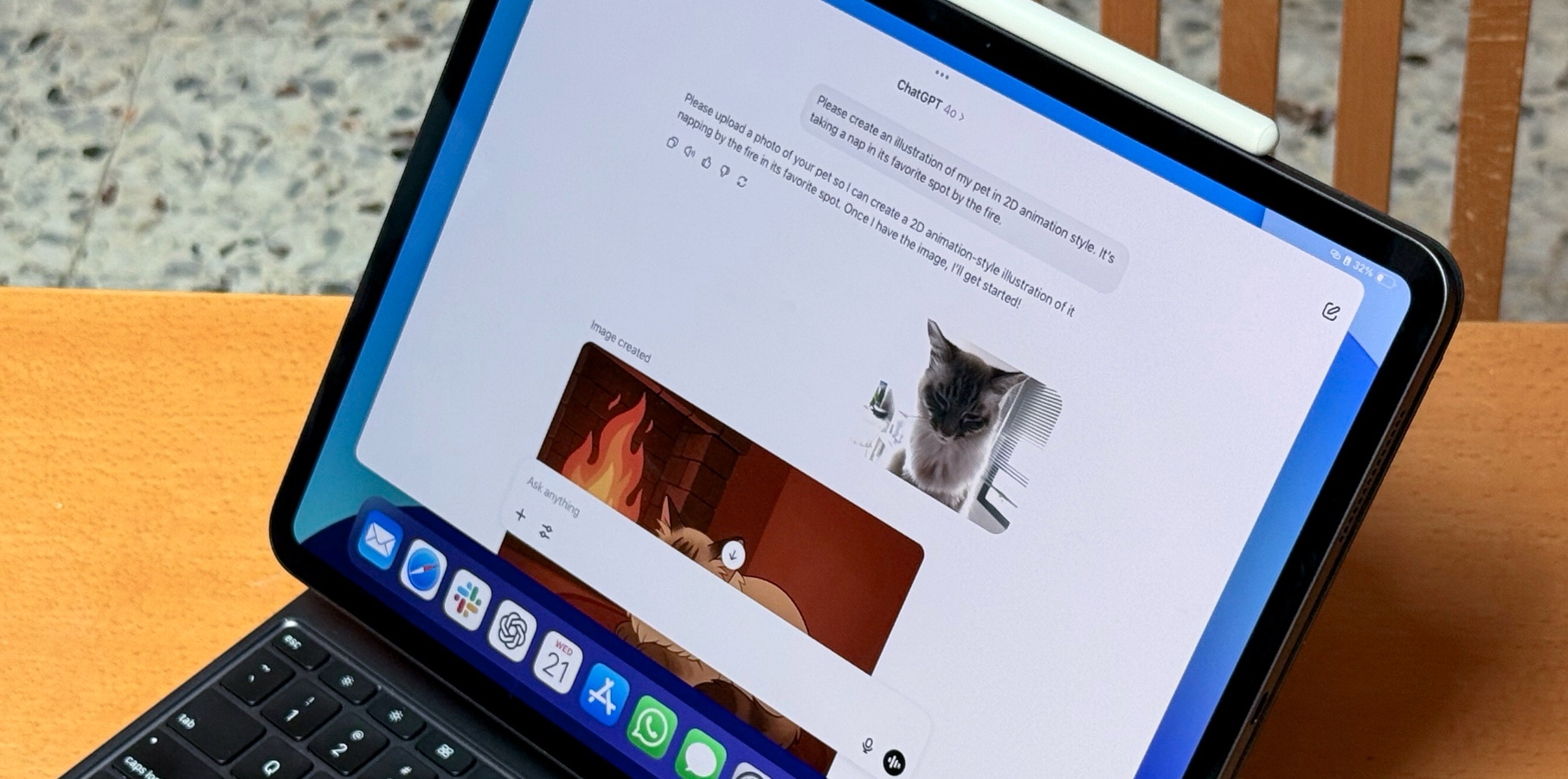

Integrating AI and Machine Learning (ML) into GitOps workflows extends its automation. AI/ML models, including Large Language Models (LLMs) and agents built on them, can augment GitOps by detecting anomalies early, predicting potential issues, and proposing or even implementing changes. Because those changes flow through Git, they remain reviewable and reversible, which moves GitOps beyond reconciling a fixed desired state toward self-healing and continuous optimization.

Use Cases:

- Proactive Anomaly Detection & Self-Healing: AI models can continuously observe system state, performance metrics, and logs. By learning normal operational patterns, they can identify deviations (anomalies) that might indicate misconfigurations, performance bottlenecks, or security threats. Upon detection, an AI agent could open a Git pull request (PR) that proposes an updated desired state, for instance scaling down unused resources to save costs or correcting a misconfiguration that degrades performance. Once the PR is reviewed and merged, normal GitOps reconciliation applies the fix.

- Intelligent Manifest Generation & Optimization: LLMs can assist in generating Kubernetes manifests or infrastructure-as-code (IaC) templates. Drawing on published best practices, security guidelines, and, where it is supplied, an organization's own performance data, these models can suggest or draft configurations tailored to specific performance goals, cost constraints, or compliance requirements.

- Automated Code Review & Compliance: AI agents can be integrated into the GitOps pipeline to review infrastructure code in Git before it is merged. They can flag security vulnerabilities, violations of organizational policies, or architectural anti-patterns, giving immediate feedback and keeping problematic changes out of production.

- AI for MLOps: GitOps principles are well suited to managing the lifecycle of AI/ML models themselves. By versioning training configurations and references to datasets and model artifacts in Git (large binaries usually live in a separate artifact store), and by declaring the model-serving infrastructure, GitOps helps make the entire MLOps pipeline declarative, auditable, and repeatable.
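The anomaly-detection use case can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: it swaps in a simple z-score test rather than a learned model, `ProposedChange` is a hypothetical stand-in for a pull request, and halving the replica count is an arbitrary illustrative rule. The key design point is that the agent only proposes a change to the desired state; nothing is applied until the proposal is merged into Git.

```python
import statistics
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ProposedChange:
    """Hypothetical stand-in for a pull request the agent would open against the Git repo."""
    workload: str
    field: str
    old_value: int
    new_value: int
    reason: str


def is_anomalous(history: List[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than z_threshold standard deviations from the baseline.

    Requires at least two historical samples (statistics.stdev raises otherwise).
    """
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean
    return abs(latest - mean) / stdev > z_threshold


def propose_scale_down(workload: str, replicas: int, cpu_history: List[float],
                       cpu_latest: float) -> Optional[ProposedChange]:
    """Propose fewer replicas when CPU is anomalously LOW; never apply the change directly."""
    low = cpu_latest < statistics.mean(cpu_history)  # ignore spikes: they are not a scale-down signal
    if replicas > 1 and low and is_anomalous(cpu_history, cpu_latest):
        new_replicas = max(1, replicas // 2)  # illustrative rule: halve, but keep at least one
        return ProposedChange(workload, "spec.replicas", replicas, new_replicas,
                              f"CPU {cpu_latest}% is anomalously low vs. baseline")
    return None
```

With a CPU baseline between 50 and 55% and a sudden reading of 8%, the sketch proposes reducing `deployment/my-api-service` from 4 to 2 replicas; a reading of 51% produces no proposal.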

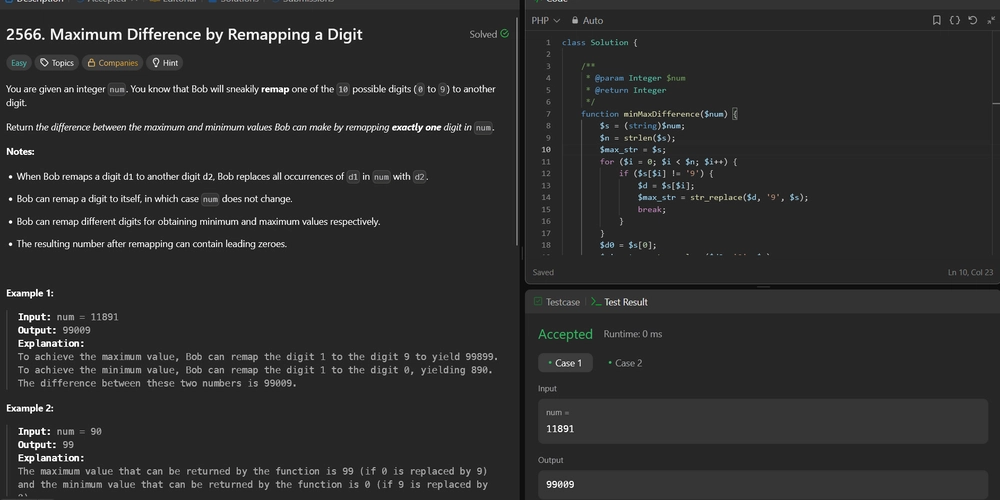

Here's a conceptual YAML manifest that defines an AI-driven optimization policy, demonstrating how such policies could be declared and reconciled within a GitOps framework:

```yaml
apiVersion: gitops.example.com/v1alpha1
kind: AIOptimizationPolicy
metadata:
  name: smart-resource-scaling
  namespace: production
spec:
  targetWorkload: deployment/my-api-service
  optimizationGoals:
    - type: costEfficiency
      metric: "cpu_utilization_avg"
      threshold: "60%"    # Scale down if average CPU stays below 60%...
      window: "1h"        # ...for an hour (hypothetical field making the window explicit)
    - type: performance
      metric: "latency_p99"
      threshold: "200ms"  # Scale up if P99 latency exceeds 200ms
  aiAgent:
    name: predictive-scaler-bot
    modelRef: "openai-gpt-4o"  # or a custom ML model
  actions:
    - type: scaleReplicas
      direction: "down"
      ifCondition: "costEfficiency"
    - type: scaleReplicas
      direction: "up"
      ifCondition: "performance"
  dryRun: false  # Set to true to simulate the policy without applying changes
```

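This declarative policy, managed by GitOps, lets an AI agent act on defined metrics and goals while the policy itself remains versioned, reviewable, and auditable in Git. Changing a threshold or an action goes through the same pull-request workflow as any other change, so cost and performance tuning stays under the same controls as application code.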
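The decision logic such a policy implies can be sketched as follows. This Python sketch assumes the manifest has already been parsed into a dictionary, that threshold units (`%`, `ms`) have been stripped, and that `cpu_utilization_avg` is already averaged over the one-hour window. The policy does not say what happens when both goals fire at once; here we assume performance takes precedence, so a slow service is never scaled down. The sketch only computes a proposed replica count; committing it to Git, or merely reporting it when `dryRun` is `true`, is left to the surrounding workflow.

```python
from typing import Any, Dict, List, Optional

# A subset of the AIOptimizationPolicy above as a plain dict (the CRD itself is hypothetical).
POLICY: Dict[str, Any] = {
    "optimizationGoals": [
        {"type": "costEfficiency", "metric": "cpu_utilization_avg", "threshold": 60.0},
        {"type": "performance", "metric": "latency_p99", "threshold": 200.0},
    ],
    "actions": [
        {"type": "scaleReplicas", "direction": "down", "ifCondition": "costEfficiency"},
        {"type": "scaleReplicas", "direction": "up", "ifCondition": "performance"},
    ],
}


def triggered_goals(policy: Dict[str, Any], metrics: Dict[str, float]) -> List[str]:
    """Return the goal types whose condition is met by the current metrics."""
    fired = []
    for goal in policy["optimizationGoals"]:
        value = metrics[goal["metric"]]
        if goal["type"] == "costEfficiency" and value < goal["threshold"]:
            fired.append(goal["type"])   # under-utilised: candidate for scaling down
        elif goal["type"] == "performance" and value > goal["threshold"]:
            fired.append(goal["type"])   # too slow: candidate for scaling up
    return fired


def decide(policy: Dict[str, Any], metrics: Dict[str, float], replicas: int) -> Optional[int]:
    """Return the proposed replica count, or None if no change is warranted."""
    fired = triggered_goals(policy, metrics)
    # Assumption: performance wins over cost efficiency when both goals fire.
    goal = next((g for g in ("performance", "costEfficiency") if g in fired), None)
    if goal is None:
        return None
    for action in policy["actions"]:
        if action["ifCondition"] == goal and action["type"] == "scaleReplicas":
            step = 1 if action["direction"] == "up" else -1
            new_replicas = max(1, replicas + step)  # never scale below one replica
            return new_replicas if new_replicas != replicas else None
    return None
```

For instance, `decide(POLICY, {"cpu_utilization_avg": 35.0, "latency_p99": 120.0}, 4)` returns `3`, while a P99 latency of 350 ms returns `5` regardless of CPU.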
