What is Character.ai?

Character.ai (also known as c.ai or Character AI) is a neural language model chatbot service that can generate human-like text responses and participate in contextual conversation. Built by Noam Shazeer and Daniel de Freitas, former developers of Google's LaMDA, the beta model was made available to the public in September 2022.[2][3] The beta model was retired on September 24, 2024, and can no longer be used.[4]

Users can create "characters", craft their "personalities", set specific parameters, and then publish them to the community for others to chat with.[5] Many characters are based on fictional media sources or celebrities, while others are completely original. Some are made with specific goals in mind, such as assisting with creative writing or playing a text-based adventure game.[6][3]

In May 2023, a mobile app was released for iOS and Android, which received over 1.7 million downloads within a week.[7]

Content moderation issues

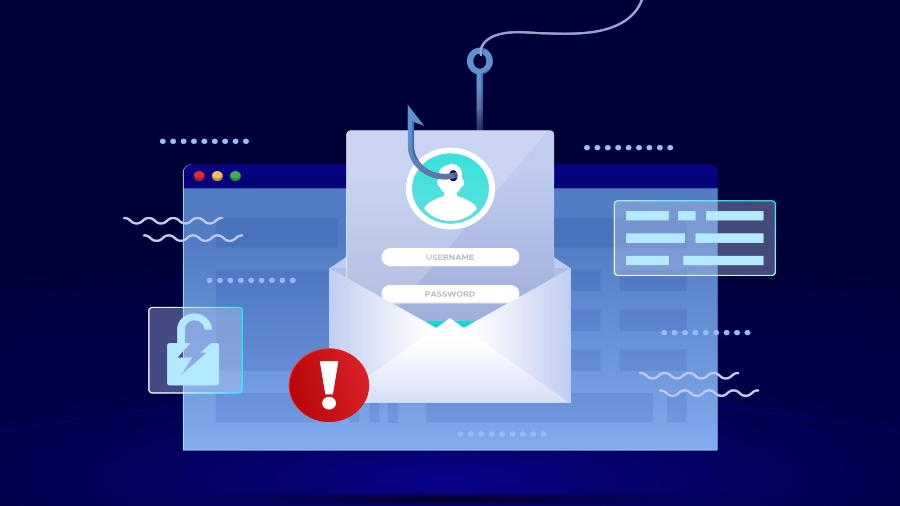

Character.ai has been criticized for poor moderation of its chatbots,[22] with reported incidents of chatbots that groom underage users[23] and promote suicide,[24] anorexia[25][26] and self-harm.[27]

In October 2024, The Washington Post reported that Character.ai had removed a chatbot based on Jennifer Ann Crecente, who was murdered by her ex-boyfriend in 2006. The company had been alerted to the character by the deceased girl's father.[28] Similar reports from The Daily Telegraph in the United Kingdom noted that the company had also been prompted to remove chatbots based on Brianna Ghey, a 16-year-old transgender girl murdered in 2023, and Molly Russell, a 14-year-old suicide victim.[29][30] In response to the latter incident, Ofcom announced that content from chatbots impersonating real and fictional people would fall under the Online Safety Act.[31]

In November 2024, The Daily Telegraph reported that chatbots based on sex offender Jimmy Savile were present on Character.ai.[32] In December 2024, chatbots of Luigi Mangione, the suspect in the killing of UnitedHealthcare CEO Brian Thompson, were created by Mangione's fans.[33][34] Several of the chatbots were later removed by Character.ai.[34]
