Top 5 Local LLM Tools and Models in 2025


Harness powerful AI language models locally in 2025 — for privacy, speed, and cost savings

Running Large Language Models (LLMs) locally has become increasingly feasible and popular in 2025. Developers and businesses are turning to self-hosted AI for full data control, zero subscription fees, and offline accessibility. Below, we review the top 5 local LLM tools and models, complete with installation steps and example commands to get you started quickly.

Why Run LLMs Locally in 2025?

- Complete Data Privacy: Your inputs never leave your device.

- No Subscription Costs: Unlimited use without fees.

- Offline Operation: Work without internet dependency.

- Customization: Fine-tune models for niche tasks.

- Reduced Latency: Faster responses without network delay.

Top 5 Local LLM Tools in 2025

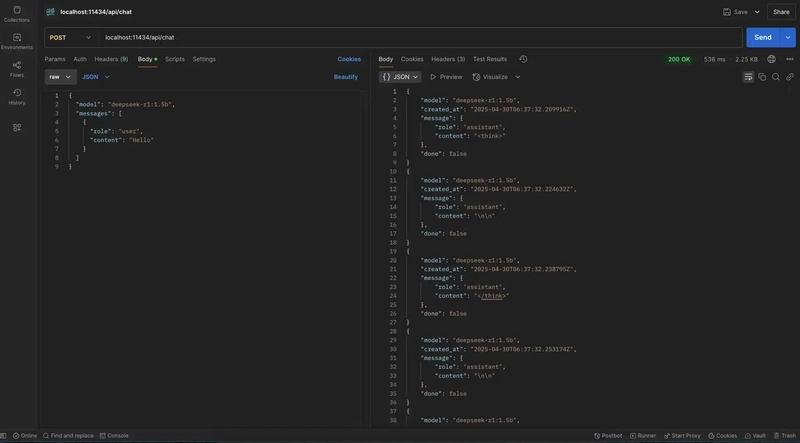

1. Ollama

Most user-friendly local LLM platform

- Easy one-line commands to run powerful models

- Supports 30+ models like Llama 3, DeepSeek, Phi-3

- Cross-platform (Windows, macOS, Linux)

- OpenAI-compatible API

Installation & Usage

    # Download the installer from https://ollama.com/download, then run a small model:
    ollama run qwen:0.5b

    # Another compact model (Phi-3 Mini):
    ollama run phi3:mini

API example:

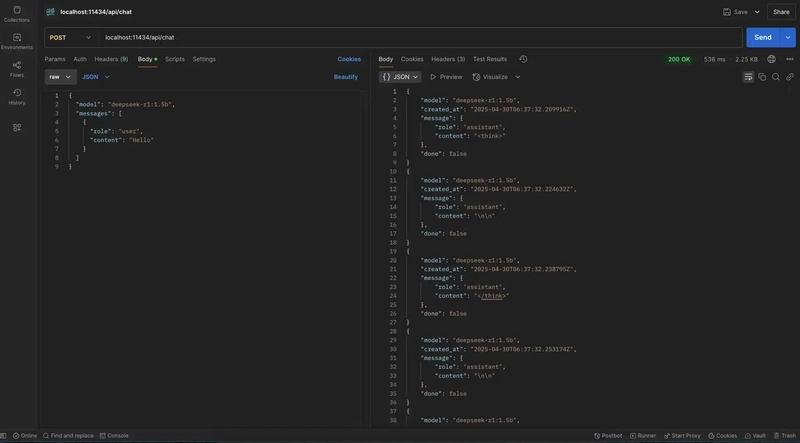

    curl http://localhost:11434/api/chat -d '{
      "model": "qwen:0.5b",
      "messages": [
        {"role": "user", "content": "Explain quantum computing in simple terms"}
      ]
    }'

Best for: Users wanting simple commands with powerful results.
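The curl request above can also be sent from a script. The following is a minimal Python sketch, not an official client. It assumes an Ollama server is already running at the address used in the curl example and that the model has been pulled. The helper names `build_chat_payload` and `chat` are illustrative, and `"stream": false` is set so the server returns a single JSON object instead of a stream of chunks.

```python
import json
import urllib.request

# Same endpoint as the curl example above.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model: str, prompt: str) -> dict:
    """Build the JSON body for a single-turn, non-streaming chat request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Ask for one complete JSON reply instead of a stream of chunks.
        "stream": False,
    }


def chat(model: str, prompt: str, url: str = OLLAMA_CHAT_URL) -> str:
    """Send the request to a running Ollama server and return the reply text."""
    body = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    # In non-streaming mode the reply text sits under message.content.
    return reply["message"]["content"]
```

With the server running, `print(chat("qwen:0.5b", "Explain quantum computing in simple terms"))` would print the model's answer. Keeping the payload builder separate from the network call makes the request shape easy to inspect without a server.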

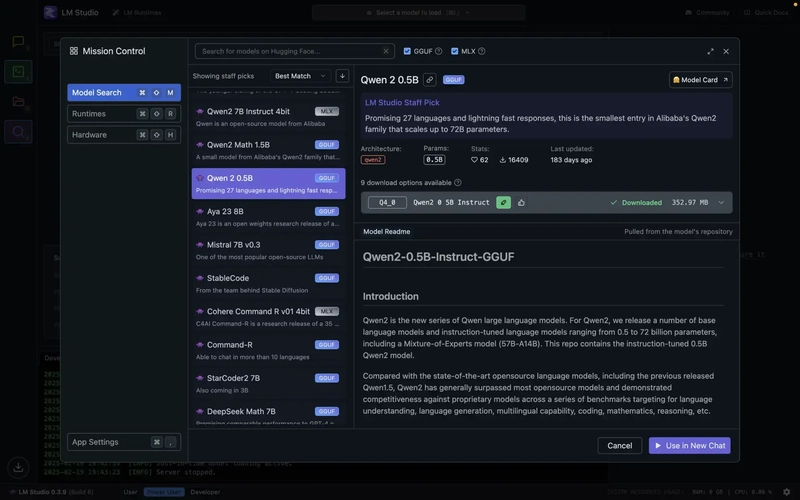

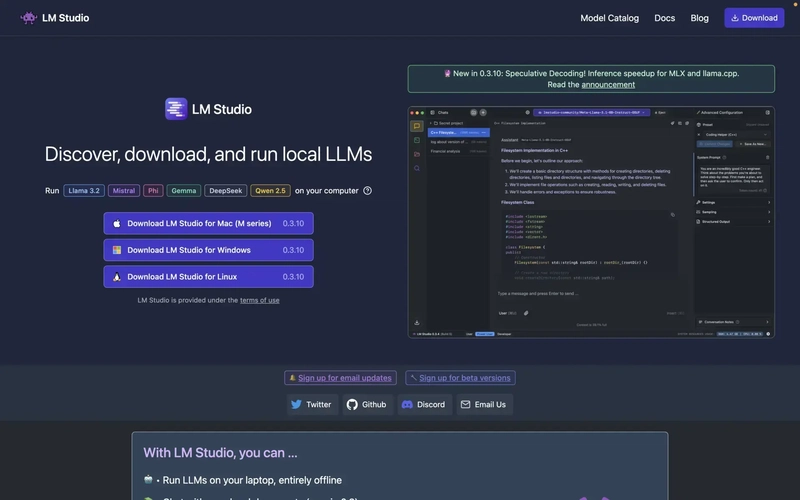

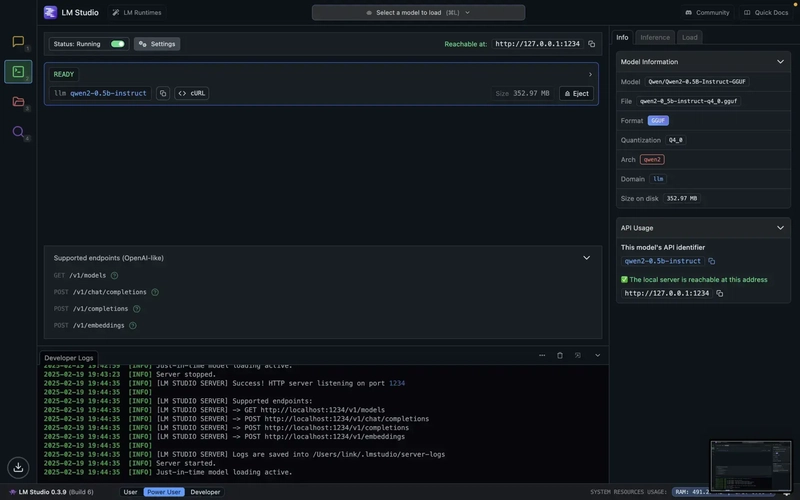

2. LM Studio

Best GUI-based solution

- Intuitive graphical interface for model management

- Built-in chat with history and parameter tuning

- OpenAI-compatible API server

Installation & Usage

- Download the installer from lmstudio.ai

- Use the "Discover" tab to browse and download models

- Chat via the built-in interface or enable the API server in the Developer tab

Setup is GUI-driven, so no installation commands are needed.

Best for: Non-technical users preferring visual controls.
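For readers who do enable the API server in the Developer tab, a request to an OpenAI-compatible endpoint might look like the sketch below. It is written under assumptions the article does not state: that the server listens on port 1234 (LM Studio's usual default) and that the `model` value matches an identifier shown in the app. The names `build_request` and `complete` are hypothetical helpers, not part of LM Studio.

```python
import json
import urllib.request

# Assumption: the LM Studio server is listening on its default port, 1234.
LM_STUDIO_BASE_URL = "http://localhost:1234/v1"


def build_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for a local server."""
    payload = {
        "model": model,  # use the identifier of a model loaded in the app
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(model: str, prompt: str, base_url: str = LM_STUDIO_BASE_URL) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(build_request(base_url, model, prompt)) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

Because the request follows the OpenAI chat completion shape, the same helper should work against other OpenAI-compatible servers by passing a different `base_url`.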

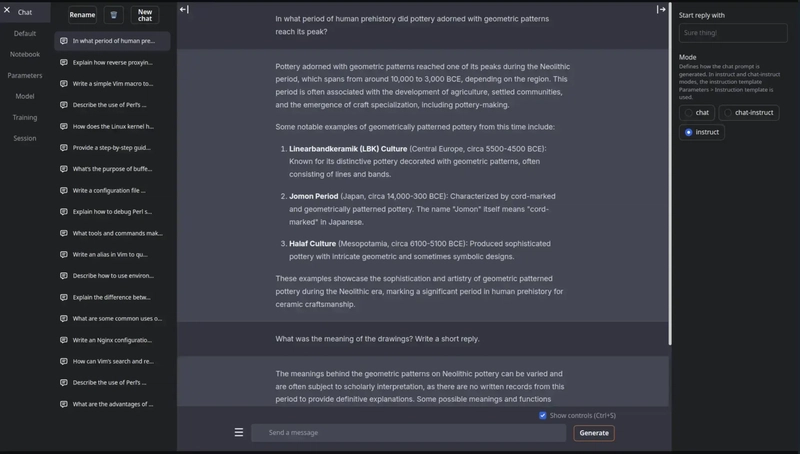

3. text-generation-webui

Flexible web UI for various models

- Easy install with pip or conda

- Supports multiple model formats (GGUF, GPTQ, AWQ)

- Extensions and knowledge base support

Quickstart with portable build:

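    # Download the portable build for your platform from GitHub Releases and unzip it.
    # Then run the start script for your platform, for example on Linux:
    ./start_linux.sh --listen

- Open a browser at http://localhost:7860 (the default web UI port)

- Download models directly through the UI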

Best for: Users wanting powerful features with a web interface.

4. GPT4All

Desktop app optimized for Windows (installers are also available for macOS and Linux)

- Pre-configured models ready to use

- Chat interface with conversation memory

- Local document analysis support

Installation & Usage

- Download app from gpt4all.io

- Run and download models via built-in downloader

- Chat directly through the desktop app

Best for: Windows users who want a polished desktop experience.

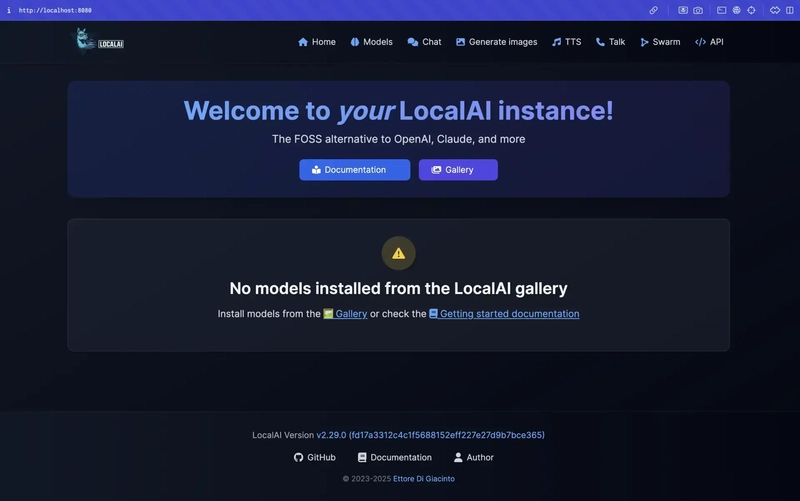

5. LocalAI

Developer’s choice for API integration

- Supports multiple model formats (GGUF, ONNX, PyTorch)

- Drop-in OpenAI API replacement

- Docker-ready for easy deployment

Run LocalAI with Docker:

    # Run one of the following, depending on your hardware.

    # CPU-only:
    docker run -ti --name local-ai -p 8080:8080 localai/localai:latest-cpu

    # NVIDIA GPU support:
    docker run -ti --name local-ai -p 8080:8080 --gpus all localai/localai:latest-gpu-nvidia-cuda-12

    # Full CPU+GPU image:
    docker run -ti --name local-ai -p 8080:8080 localai/localai:latest

- Access the model browser at http://localhost:8080/browse/

Best for: Developers needing flexible, API-compatible local LLM hosting.
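"Drop-in OpenAI API replacement" means that client code written against the OpenAI API shape should only need a different base URL. As a hedged illustration, the sketch below lists the models a LocalAI container reports through an OpenAI-style `/v1/models` route. It assumes the container was started with one of the docker commands above, so the API is on port 8080. `parse_model_ids` and `list_models` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: LocalAI is reachable on the port published by the docker commands.
LOCALAI_BASE_URL = "http://localhost:8080/v1"


def parse_model_ids(response_body: str) -> list[str]:
    """Extract model ids from an OpenAI-style model list response."""
    data = json.loads(response_body).get("data", [])
    return [entry["id"] for entry in data]


def list_models(base_url: str = LOCALAI_BASE_URL) -> list[str]:
    """Fetch the model list from a running server and return the ids."""
    with urllib.request.urlopen(f"{base_url}/models") as response:
        return parse_model_ids(response.read().decode("utf-8"))
```

Calling `list_models()` against a running container returns the ids to pass as `model` in later chat requests. An empty list suggests that no model has been installed yet from the model browser.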

Bonus Tool: Jan

ChatGPT alternative fully offline

- Powered by Cortex AI engine

- Runs popular LLMs like Llama, Gemma, Mistral, Qwen locally

- OpenAI-compatible API and extensible plugin system

Installation & Usage

- Download installer from jan.ai

- Launch and download models from built-in library

- Use chat interface or enable API server for integration

Best Local LLM Models in 2025

| Model | Memory Req. | Strengths | Compatible Tools |
|---|---|---|---|
| Llama 3 (8B) | 16GB | General knowledge, reasoning | Ollama, LM Studio, LocalAI, Jan |
| Llama 3 (70B) | High | Commercial-quality performance | All tools |
| Phi-3 Mini (4K-token context) | 8GB | Coding, logic, concise replies | All tools |
| DeepSeek Coder (7B) | 16GB | Programming & debugging | Ollama, LM Studio, text-gen-webui, Jan |
| Qwen2 (7B / 72B) | Not specified | Multilingual, summarization | Ollama, LM Studio, LocalAI, Jan |
| Mistral NeMo (12B) | 16GB | Business, document analysis | Ollama, LM Studio, text-gen-webui, Jan |
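The memory figures in the table do not state a numeric precision, so they are best read as rough guides. A common rule of thumb, offered here as an assumption and not as the article's method, is that the weights alone take about (parameter count × bits per weight ÷ 8) bytes. The sketch below applies that arithmetic and ignores the context cache and runtime overhead, so real usage will be higher.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Compare an 8B and a 70B model at common precisions.
for name, params in [("8B", 8), ("70B", 70)]:
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.0f} GB")
```

By this estimate an 8B model at 16-bit precision needs about 16 GB for its weights, which is consistent with the table's Llama 3 (8B) entry, while a 4-bit quantized build of the same model needs roughly 4 GB.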

Conclusion

Local LLM tools have matured greatly in 2025, providing strong alternatives to cloud AI. Whether you want simple command-line usage, a graphical interface, a web UI, or a full developer API, there is a local option for you. Running LLMs locally keeps your data on your own machine, avoids subscription fees, works offline, and removes network latency from each response.
