This IIT-Backed Startup Can Run Llama 2 on CPUs… No GPUs Needed to Run AI Anymore


I was casually scrolling LinkedIn, that usual scroll before sleep, when one post caught my eye.

Runs LLMs like T5 and Bloom-7B without a GPU, just on a CPU.

I blinked twice. At first, I thought it was just another startup doing the same “no GPU required” drama.

We’ve all seen those lines: “Edge-friendly, lightweight, runs offline...” and then boom, nothing new.

But this one felt different. The name? Kompact AI.

What Even Is Kompact AI? (And Why It Feels Legit)

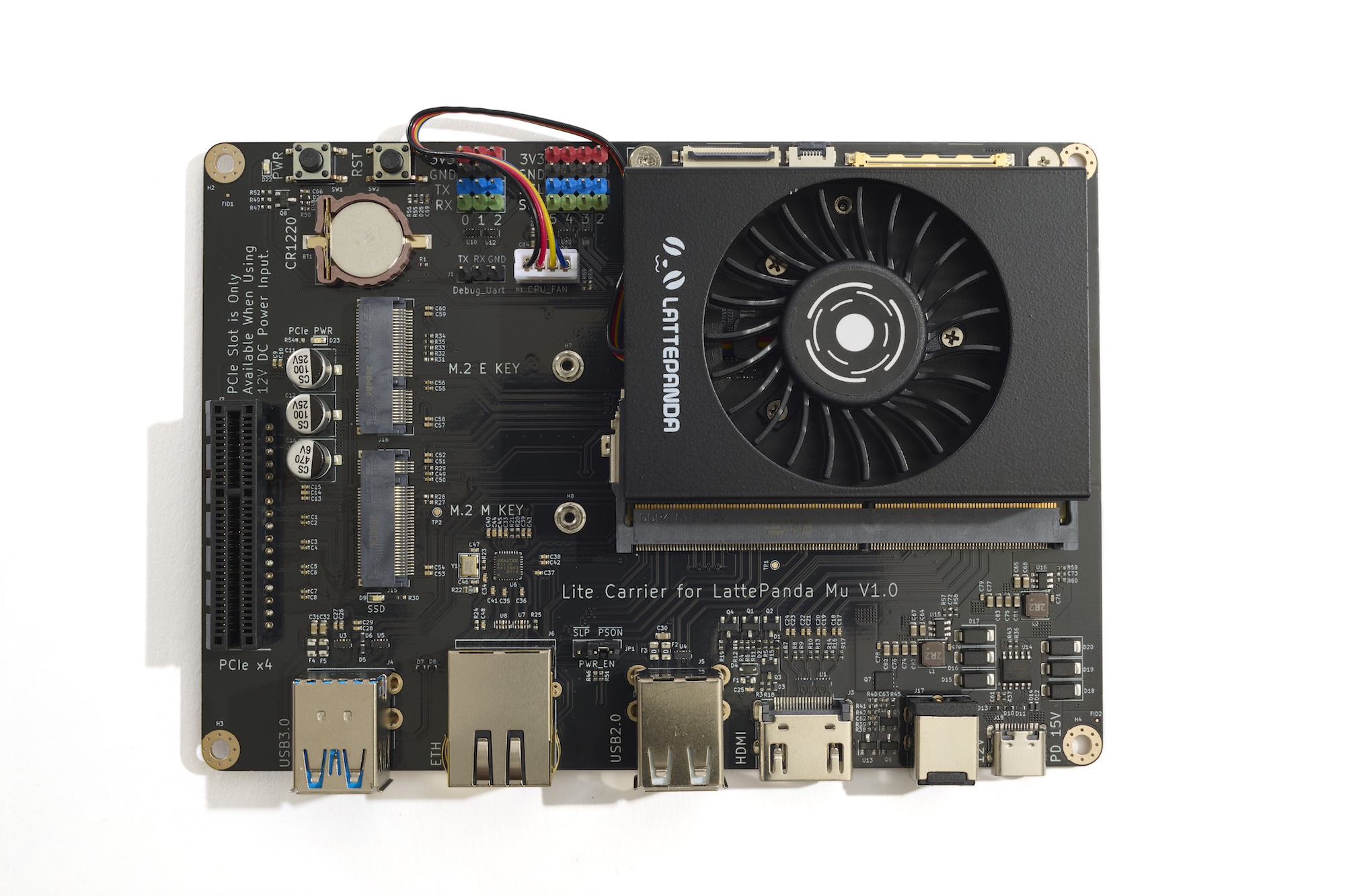

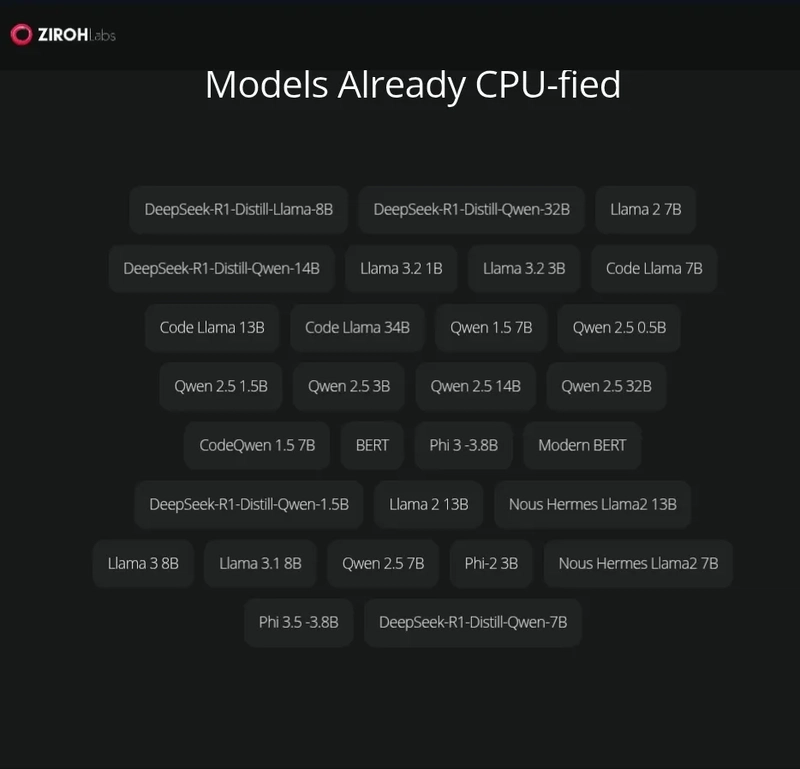

From what I gathered, Kompact AI is building something called ICAN — a Common AI-Language Runtime. Basically, a system that supports 10+ programming languages and makes AI models run efficiently on CPUs.

Here’s where it gets real…

They’re not just talking about basic models. They’re saying they can run inference, fine-tuning, and even light training of models like T5 and Bloom-7B — on CPUs.

What Exactly Is AI, and Why Does It Need So Much Power?

At its core, AI (particularly deep learning) relies on neural networks that perform massive amounts of matrix computations. These computations are needed for two key processes:

Training

This is when a model learns from data. It adjusts itself based on feedback and gets better with time.

It’s computationally heavy and typically requires specialized hardware like GPUs or TPUs.

Inference

Once the model is trained, we use it to make predictions. Inference requires less computational power than training, but it’s still demanding, especially for large models or real-time tasks.
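To make the difference concrete, here is a minimal sketch of both processes on a toy linear model, written in plain Python. Everything in it (the names `matvec` and `train_step`, the tiny 2x2 weight matrix, the learning rate) is made up for illustration and has nothing to do with how Kompact AI or any real framework implements things. The point is only that inference is a single forward pass, while training repeats forward pass, gradient, and update many times.

```python
# Toy linear model y = W x, using plain Python lists (no external libraries).

def matvec(W, x):
    """Inference: one matrix-vector product (the forward pass)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def train_step(W, x, target, lr=0.1):
    """Training: forward pass, gradient of the squared error, weight update."""
    pred = matvec(W, x)
    err = [p - t for p, t in zip(pred, target)]
    # Gradient of 0.5 * sum(err^2) with respect to W[i][j] is err[i] * x[j].
    return [[w - lr * e * xj for w, xj in zip(row, x)]
            for row, e in zip(W, err)]

W = [[0.0, 0.0], [0.0, 0.0]]   # untrained weights
x = [1.0, 2.0]                 # one input example
target = [1.0, -1.0]           # the output we want for that input

# Training: many passes, each one costing more than a single inference call.
for _ in range(50):
    W = train_step(W, x, target)

# Inference: a single forward pass with the trained weights.
print(matvec(W, x))  # values very close to [1.0, -1.0]
```

Even in this toy, each training step does the inference work plus a gradient and an update, and it does so 50 times. Scale that up to billions of weights and the gap between the two workloads becomes obvious.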

So, What Makes Kompact AI Different from the Rest?

Here’s where Kompact AI stands out: it claims to run heavy AI models like T5 and Bloom-7B on CPUs—no GPUs required. But wait, isn’t that supposed to be impossible?

CPUs

These are general-purpose processors designed for a wide range of tasks. They’re great at complex, sequential logic and have far fewer cores than GPUs (typically 4–16 on consumer chips, and dozens on server parts).

GPUs

Designed for parallel tasks, GPUs have thousands of smaller cores optimized for repetitive, matrix-heavy operations, making them ideal for AI.

So how does Kompact AI make running AI models like T5 on a CPU even possible? What kind of tech is happening behind the scenes?

What Could Be Going On Behind the Scenes? Let’s Break It Down.

There are three main factors we need to address to run AI effectively on CPUs:

Computational Requirements: AI models need substantial computing power for matrix operations. Can CPUs handle these heavy tasks?

Hardware Capabilities: CPUs have strengths like multi-core processing and large caches, but they lack GPU-level parallelism. How does Kompact AI manage these differences?

Software Optimization: The way the software is written makes a huge difference in how well AI models run on CPUs. What kind of optimizations could make it work?

Let’s dive deeper into these aspects and try to understand what’s going on.

How Do We Simplify AI to Make It Run on a CPU?

Computational Requirements: AI models, especially large language models (LLMs) like T5 or Bloom-7B, have billions of parameters. Running these models, especially for tasks like training, can be computationally intensive. But is there a way to make these tasks less demanding?

Inference: This is when we use a pre-trained model to make predictions. It’s much less computationally demanding than training.

Fine-tuning: This is when you adjust an already trained model on new data. It’s more demanding than inference but still much lighter than full-scale training.

Could Kompact AI focus on these lighter tasks, like inference and fine-tuning, so that it avoids the heavy computational cost of full-scale training?

Hardware Capabilities: CPUs, while not as parallel as GPUs, are still quite powerful in their own right. Could Kompact AI be taking advantage of things like…

- Multiple cores that can handle tasks simultaneously.

- SIMD (Single Instruction, Multiple Data), allowing the CPU to process multiple pieces of data within each core.

- Large caches that reduce memory latency?

How does Kompact AI tap into these strengths of CPUs to handle AI workloads efficiently?
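One way to picture the multi-core idea is to split a matrix-vector product by rows and hand each slice to a separate worker. The sketch below does that with Python's standard `concurrent.futures.ThreadPoolExecutor`. The function names (`parallel_matvec`, `matvec_rows`) and the worker count are my own choices for illustration, not anything Kompact AI has published. Also note that plain Python threads won't actually speed up this arithmetic because of the interpreter lock; an optimized runtime would do the same partitioning with native threads and SIMD kernels inside each core.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, x):
    """Dot product of one matrix row with the input vector."""
    return sum(a * b for a, b in zip(row, x))

def matvec_rows(rows, x):
    """Compute the output entries for one slice of rows."""
    return [dot(r, x) for r in rows]

def parallel_matvec(W, x, workers=4):
    """Split W into contiguous row chunks and process each chunk in a worker."""
    if not W:
        return []
    chunk = max(1, (len(W) + workers - 1) // workers)  # ceiling division
    chunks = [W[i:i + chunk] for i in range(0, len(W), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() keeps the chunks in order, so the outputs line up with the rows.
        parts = list(pool.map(matvec_rows, chunks, [x] * len(chunks)))
    return [y for part in parts for y in part]

W = [[i + j for j in range(3)] for i in range(10)]  # 10x3 toy matrix
x = [1, 2, 3]
print(parallel_matvec(W, x) == matvec_rows(W, x))
```

Each output row depends only on its own row of weights, which is why this kind of work divides so cleanly across cores.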

Software Optimization: Most AI frameworks (like TensorFlow or PyTorch) are tuned first and foremost for GPUs. On CPUs, their default code paths often leave cores and vector units underused.

Could Kompact AI be doing something radically different with its software optimization to make the most of CPU power?

How Do We Rebuild the AI Solution for CPUs?

What if Kompact AI's approach works by rethinking how AI models are structured and executed? Could it work like this?

- Model Optimization: By quantizing, pruning, and distilling large models, could Kompact AI reduce the computational load, making it feasible for CPU-based systems to handle tasks like inference or fine-tuning efficiently?

- Leverage CPU Strengths: Could Kompact AI split computations across multiple CPU cores and use SIMD instructions to handle matrix operations without needing GPU-like parallelism?

- Optimized Runtime: What if Kompact AI uses a custom runtime environment (maybe something like ICAN) tailored to CPUs, optimizing code execution and minimizing resource usage?

Does this mean we can make large models like Bloom-7B run smoothly on a CPU after all?
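A quick back-of-the-envelope calculation helps here. The sketch below estimates how much memory the weights alone would need at different numeric precisions. The parameter count is my assumption (I'm reading "7B" as roughly 7 billion weights), and the figures ignore activations, caches, and runtime overhead, so treat them as a lower bound rather than a measurement of anything Kompact AI ships.

```python
# Assumption: "7B" is taken as roughly 7 billion weights.
PARAMS = 7_000_000_000

# Bytes needed to store one weight at each precision.
BYTES_PER_WEIGHT = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(params, bytes_per_weight):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_weight / 1e9

for name, size in BYTES_PER_WEIGHT.items():
    print(f"{name}: {weight_memory_gb(PARAMS, size):.1f} GB")
# fp32: 28.0 GB
# fp16: 14.0 GB
# int8: 7.0 GB
# int4: 3.5 GB
```

At full 32-bit precision the weights alone would need about 28 GB of RAM, while an 8-bit version needs about 7 GB. That gap is a big part of why quantization keeps coming up whenever people talk about running large models on ordinary machines.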

How Does It All Come Together to Make AI Work on CPUs?

By combining model optimization, multi-core parallelism, SIMD, and an optimized runtime, how does Kompact AI make running large AI models like Bloom-7B on CPUs actually work?

Could it be that Kompact AI works like this?

- Quantized and pruned versions of models that reduce their size, making them manageable on a CPU.

- Parallel execution across multiple CPU cores to handle large models by distributing the workload efficiently.

- The ICAN runtime ensures minimal overhead, achieving up to 3x the performance of traditional CPU execution in frameworks like TensorFlow or PyTorch.

Is it possible that Kompact AI can bypass the need for GPUs and still deliver solid performance?
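To show what "quantized and pruned" means in practice, here is a small sketch of two textbook techniques: symmetric int8 quantization and magnitude pruning. This is a generic illustration, not Kompact AI's actual method (which I haven't seen), and the function names and the sample weights are invented for the example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> ints in [-127, 127] plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]

def prune_by_magnitude(weights, keep_fraction):
    """Zero out the smallest-magnitude weights, keeping roughly keep_fraction of them."""
    keep = max(1, int(len(weights) * keep_fraction))
    threshold = sorted((abs(w) for w in weights), reverse=True)[keep - 1]
    return [w if abs(w) >= threshold else 0.0 for w in weights]

weights = [0.02, -1.27, 0.5, 0.0031, -0.64, 0.9]  # made-up sample weights

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)         # [2, -127, 50, 0, -64, 90]
print(restored)  # close to the originals, within half a quantization step

print(prune_by_magnitude(weights, 0.5))  # [0.0, -1.27, 0.0, 0.0, -0.64, 0.9]
```

Quantization trades a little precision for a 4x smaller footprint compared with 32-bit floats, and pruning creates zeros that a runtime can skip or compress. How much accuracy a real model loses from either step depends on the model and has to be measured.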

So, What’s the Big Picture?

If the claims hold up, Kompact AI is doing something groundbreaking: rethinking how AI models are structured and executed. Could this be the future of AI?

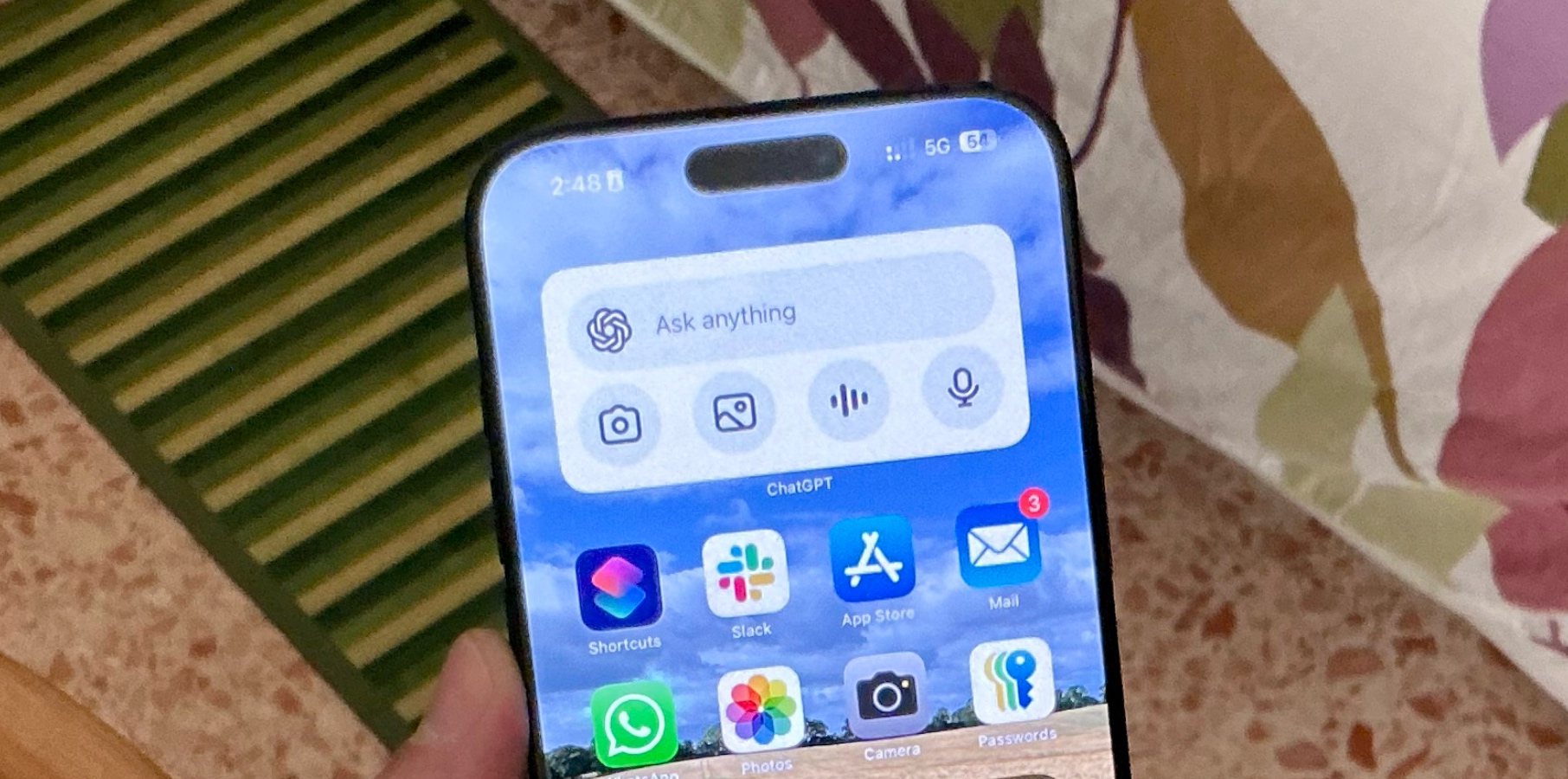

Through techniques like quantization, pruning, and distillation, along with efficient multi-core parallelism and a custom runtime (ICAN), Kompact AI makes AI models run efficiently on CPUs—no GPUs required. Is this the kind of innovation that could democratize AI, making it more accessible for offline and edge devices, where specialized hardware like GPUs may not be available?
