The Algorithmic Muse

The relationship between musician and tool is undergoing a radical remix. We’re not talking incremental upgrades – this is a fundamental shift, one that’s dismantling the established hierarchy of creative control. For years, digital audio workstations were inert extensions of a composer’s will. Now, artificial intelligence is stepping out of the box, evolving from passive instrument to active collaborator, and forcing a crucial question: when does assistance become authorship?

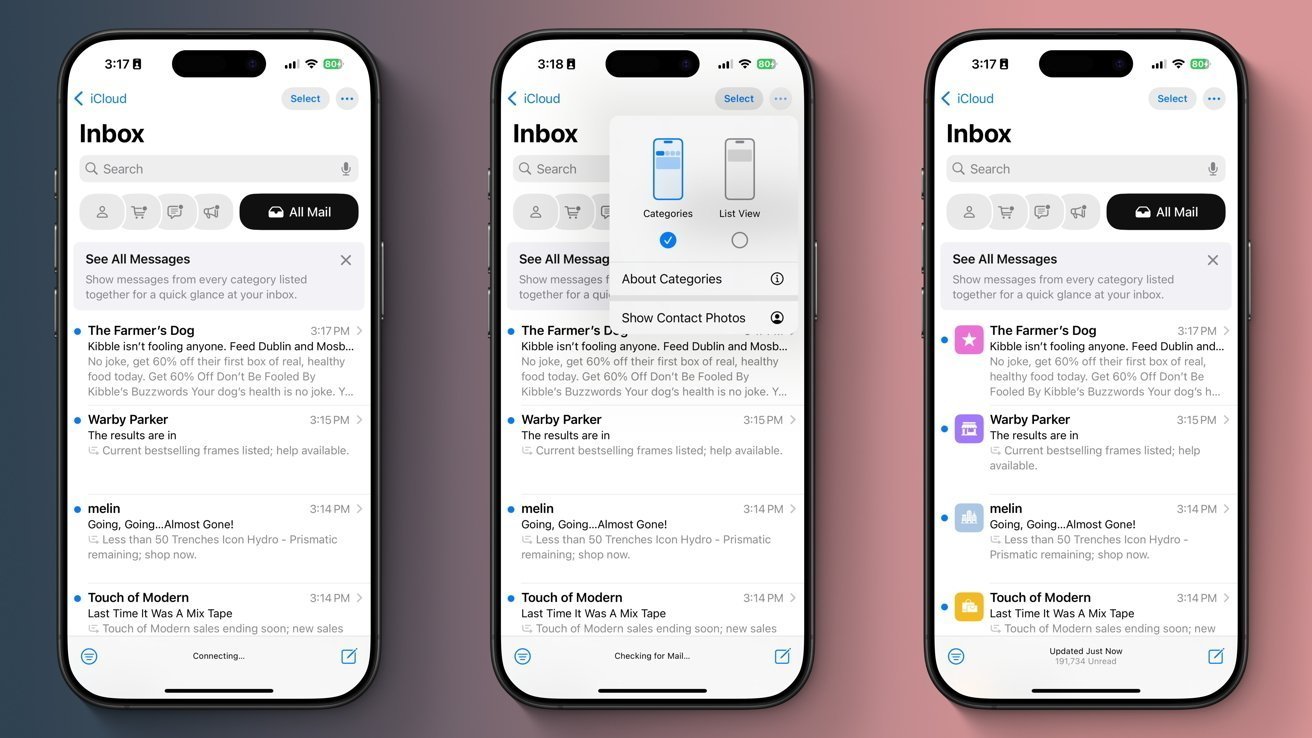

Across the globe, from bedroom producers to professional studios, AI platforms are demonstrating a dazzling capacity for both imitation and innovation. AIVA doesn’t just generate music; it sculpts sonic landscapes, crafting sweeping orchestral scores one moment and improvising bebop riffs with uncanny flair the next, all calibrated to a musician’s initial direction. Features in Apple’s Logic Pro, such as its virtual session players, are pushing boundaries too, offering a responsiveness that feels less like algorithmic calculation and more like intuitive collaboration: intelligently adding layers and anticipating harmonic shifts.

But the real disruption isn’t just about synthesis. WavTool, built as a conversational AI, functions as a real-time creative sounding board, offering workflow suggestions and intelligent editing assistance without demanding centre stage. Simultaneously, platforms like Splash Pro are democratizing composition, allowing anyone to generate original, royalty-free tracks and even experiment with AI-generated vocal textures via simple text prompts. And LANDR, traditionally a mastering service, is expanding its remit to personalized feedback and distribution, lowering the friction not just of making music but of making a living from it. This isn’t about replacing artists; it’s about leveling the playing field and unlocking uncharted creative territory.

The Algorithmic Studio

The velocity of AI development is dismantling the gatekeepers of music creation, ushering in an era of unprecedented access. For decades, crafting compelling music demanded years of formal training and a significant investment in equipment—a barrier that effectively silenced countless potential artists. Now, that equation is fracturing.

Platforms like Soundful and BandLab SongStarter are lowering the floor, offering intuitive interfaces that translate musical ideas into tangible form, even for those without a background in production. Forget complex DAWs and endless tutorials. SOUNDRAW’s browser-based editor, for example, lets users rapidly prototype and refine ideas, generating polished, royalty-free tracks with remarkable speed. This isn't about dumbing down the process; it’s about streamlining the laborious aspects, allowing creators to focus on the core artistic vision.

Splash Pro goes further, actively encouraging sonic exploration via AI-powered vocal modeling and fluid genre mixing. The platform isn't just simplifying the technical hurdles; it's extending creative possibility, pushing beyond the conventional boundaries of established styles. This move towards inclusivity isn’t merely a happy byproduct but a direct response to a digital culture increasingly resistant to exclusivity.

The shift extends beyond individual empowerment. AI-driven stem separation and manipulation tools are reshaping music collaboration and dissolving geographical constraints. Artists can now dissect existing tracks, isolate individual instruments, and remix elements remotely with a fluidity that was previously impossible. The result is a blending of disparate sonic palettes: music that is not just diverse in its inspiration but reflects a globalized creative network, a symphony assembled from distinct yet harmonized voices. That raises the question: what innovative sounds will emerge from this newly accessible creative landscape?

The Algorithmic Soundtrack to Your Life

The era of algorithmic guesswork in music is fading, replaced by a more sophisticated understanding of the listener. Artificial intelligence is evolving beyond simple recommendation engines, constructing profoundly personal soundscapes – a continuous, adaptive score to your life.

This emerging reality isn’t about better playlists; it's about a direct, physiological connection between sound and state. Integrated biometric sensors, woven into wearables and even ambient home systems, are feeding real-time data – respiration rate, heart rate variability, even subtle shifts in vocal tone – into AI engines. These algorithms don't simply react to your data; they proactively anticipate your needs. A rising pulse during a deadline might trigger an energizing shift in tempo and instrumentation, while detecting stress in your voice could cue a cascading wave of calming frequencies.
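
To make the mechanism concrete, here is a minimal Python sketch of how a biometric reading might be mapped to a target tempo. Everything in it is a hypothetical assumption for illustration: the `BiometricSample` fields, the resting heart rate, the weights, and the 0.5 stress cutoff do not come from any real product or wearable API. It encodes the two examples above (a rising pulse energizes, vocal stress calms) and adds exponential smoothing so the music drifts rather than jumps.

```python
from dataclasses import dataclass


@dataclass
class BiometricSample:
    heart_rate_bpm: float  # hypothetical wearable reading, beats per minute
    stress_score: float    # hypothetical 0.0 (calm) to 1.0 (stressed), e.g. from voice analysis


def target_tempo(sample: BiometricSample, resting_hr: float = 65.0,
                 base_tempo: float = 100.0) -> float:
    """Map one biometric reading to a target musical tempo in BPM (illustrative weights)."""
    tempo = base_tempo + 0.5 * (sample.heart_rate_bpm - resting_hr)  # rising pulse -> faster
    tempo -= 40.0 * max(0.0, sample.stress_score - 0.5)             # high stress -> slower
    return max(60.0, min(160.0, tempo))                              # clamp to a playable range


def smooth(previous: float, target: float, alpha: float = 0.2) -> float:
    """Exponential smoothing: move a fraction alpha of the way toward the new target."""
    return previous + alpha * (target - previous)


if __name__ == "__main__":
    tempo = 100.0
    readings = [BiometricSample(65, 0.1), BiometricSample(95, 0.2), BiometricSample(95, 0.9)]
    for reading in readings:
        tempo = smooth(tempo, target_tempo(reading))
        print(f"HR {reading.heart_rate_bpm:.0f}, stress {reading.stress_score:.1f} -> {tempo:.1f} BPM")
```

Each new reading moves the tempo a fifth of the way toward its target. A real adaptive engine would also vary instrumentation, key, and density, which this sketch deliberately leaves out.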

Streaming services are already mining a wealth of environmental data – your calendar appointments, sleep cycle analysis, location, and even the ambient noise picked up by your devices – to anticipate sonic needs before you consciously register them. But the most intriguing frontier lies in AI-generated music itself. These aren't pre-composed tracks selected by a smart system, but dynamically created sonic textures, evolving in real-time based on your biological feedback. It’s a blurring of lines: the listener isn’t merely receiving content, but becoming a co-creator, shaping the soundscape through their own physiology.

The result isn't just sophisticated entertainment. Developers envision these personalized auditory environments as tools for focused work, optimized relaxation, and proactive mental health support, moving beyond passive listening towards a future where sound isn't just heard, it’s felt – and fundamentally, understood by the machine composing it. This represents a new kind of emotional operating system, sculpted by algorithms and experienced, uniquely, by you.

The AI Muse

As AI capabilities expand, a core question remains: can algorithms authentically capture and convey human emotion? We’re beyond simply generating technically proficient art; the goalposts have shifted to capturing the messy, often illogical core of human emotional experience.

Composer Sarah Chen has made this question a central tenet of her work. That work, together with a growing body of neurological research tracking listener responses to AI-composed music, points to something surprising: genuine emotional connection is happening. Brain scans of audiences encountering AI-generated melodies show not detached calculation but the familiar neurological signatures of empathy, nostalgia, and even catharsis.

Platforms like Suno and AIVA are leading this charge, rapidly evolving from novelty acts to sophisticated collaborators. They're not just mimicking emotional tropes; their algorithms are learning to manipulate sonic structures in ways that slip past conscious analysis and tap directly into the limbic system. The aim isn’t to “fool” listeners but to sidestep the learned filters of expectation.

But the real promise lies not in AI replicating emotion but in amplifying it. The current wave of tools appears poised to enrich, rather than erode, human artistic expression, offering composers and musicians a new palette for exploring vulnerability, nuance, and the complex architecture of emotional resonance. AI, in this view, isn’t replacing the artist, but becoming a complex, unpredictable, and increasingly sensitive instrument. This represents a shift from automation toward augmentation, a development with profound implications for both music and our understanding of emotional intelligence.

Cultivating a Generation of Algorithmic Artists

Realizing the potential of AI in creative fields requires not just technological advancements, but also a workforce equipped to use it responsibly and innovatively. Across the globe, institutions of higher learning – and increasingly, secondary schools – are scrambling to integrate AI literacy into existing curricula, with a particular focus on its implications for musical creation and the complex ethics surrounding its use.

Beyond basic software training, these programs are pioneering specialized courses. “Prompt engineering,” the art of precisely directing AI’s output, is now a core skill, alongside deep dives into the ethical gray areas of AI collaboration – authorship, bias, and the very definition of originality. Law schools are responding in kind, preparing a new generation of legal professionals to navigate the swiftly shifting landscape of intellectual property rights in an age where algorithms can compose, perform, and remix.

But the emphasis isn’t solely technical. Educators are prioritizing what they call ‘meta-skills’: a critical framework for evaluating AI’s output, a nuanced understanding of algorithmic biases, and the ability to synthesize insights across disciplines. The aim isn’t merely to create technically proficient workers, but to cultivate a symbiotic relationship between human intuition and algorithmic capabilities.

Consequently, tomorrow’s music classrooms are morphing into experimental design labs: spaces where students can actively deconstruct, remix, and reimagine the creative process with AI, not merely use it. This isn't just about acquiring technological fluency; it's about redefining core concepts of musicianship, exploring new forms of artistic expression, and, ultimately, charting the future of human-AI partnership in the creative realm. The emerging challenge is not how AI will replace artists, but how artists will redefine themselves _through_ AI.

Democratising Sonic Creation

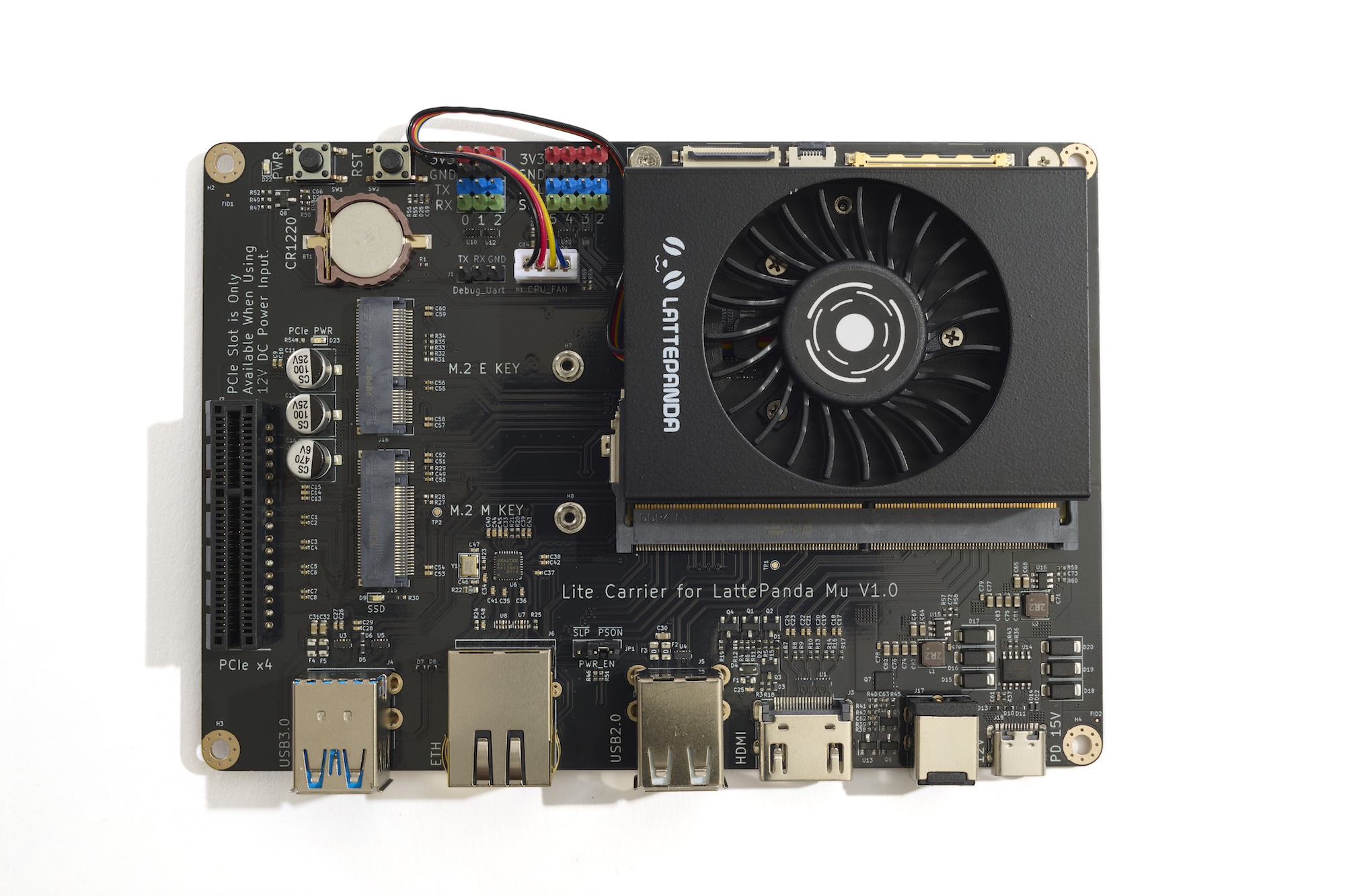

Historically, achieving a polished, professional sound required years of dedicated study – now, artificial intelligence is dramatically shortening that learning curve, effectively serving as a tireless, infinitely patient studio mentor. Platforms like LANDR and iZotope Neutron go beyond simple automation, dynamically analyzing tracks and offering real-time feedback – translating the nuanced language of ‘good sound’ into actionable parameters. Even a novice can leverage these tools to approach studio-quality results.
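
As a rough illustration of turning “good sound” into an actionable parameter, the Python sketch below measures a track's RMS level in dBFS and phrases the gain needed to reach a reference level as advice. It is an assumption-laden simplification, not how LANDR or Neutron actually work: the -18 dBFS target is arbitrary, `level_feedback` is a hypothetical helper, and real tools rely on perceptual loudness measures (such as those specified in ITU-R BS.1770) plus far richer analysis of tone and dynamics.

```python
import math


def rms_dbfs(samples: list[float]) -> float:
    """RMS level of float samples in [-1.0, 1.0], in dB relative to full scale (dBFS)."""
    if not samples:
        raise ValueError("need at least one sample")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms) if rms > 0 else float("-inf")


def level_feedback(samples: list[float], target_dbfs: float = -18.0) -> tuple[float, str]:
    """Hypothetical helper: return (gain in dB, plain-language advice) toward target_dbfs."""
    level = rms_dbfs(samples)
    if level == float("-inf"):
        return 0.0, "Track is silent; nothing to adjust."
    gain_db = target_dbfs - level
    # Predict the loudest sample after applying the gain; above 1.0 means digital clipping.
    peak_after = max(abs(s) for s in samples) * 10 ** (gain_db / 20.0)
    direction = "Raise" if gain_db > 0 else "Lower"
    advice = f"{direction} the level by {abs(gain_db):.1f} dB to reach {target_dbfs:.1f} dBFS RMS."
    if peak_after > 1.0:
        advice += " Peaks would clip at that gain; consider a limiter first."
    return gain_db, advice


if __name__ == "__main__":
    quiet_take = [0.02, -0.02] * 500
    gain, advice = level_feedback(quiet_take)
    print(advice)
```

Pairing the number with a reason (here, the clipping warning) is the small-scale version of the “mentor” behaviour described above.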

This extends beyond simple processing. Emerging DAWs are integrating AI to actively teach production techniques. These aren't static tutorials; these tools respond to user choices, offering conversational guidance on arrangement, sound design, and even harmonic structure. Imagine an interface that doesn’t just suggest a compression setting, but explains why it’s appropriate for the instrument and the broader mix. Companies like SOUNDRAW and Splash Pro are pioneering this approach, delivering instantaneous adaptive mixing and intelligent arrangement suggestions, fostering an environment of confident experimentation.
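
The kind of explanation described above can be sketched in a few lines of Python. The gain curve is the standard static hard-knee compressor rule (above the threshold, the output rises only 1/ratio dB for every dB of input); the `explain_setting` helper and its wording are hypothetical, meant only to show how a tool might pair a setting with its reasoning, and are not taken from SOUNDRAW, Splash Pro, or any other product.

```python
def compressed_level_db(input_db: float, threshold_db: float, ratio: float) -> float:
    """Static hard-knee compressor curve, working on levels in dB."""
    if ratio < 1.0:
        raise ValueError("ratio must be at least 1 (1:1 means no compression)")
    if input_db <= threshold_db:
        return input_db                                      # below threshold: untouched
    return threshold_db + (input_db - threshold_db) / ratio  # above: overshoot divided by ratio


def explain_setting(instrument: str, peak_db: float, threshold_db: float, ratio: float) -> str:
    """Hypothetical helper: state a compression setting together with the reason for it."""
    if peak_db <= threshold_db:
        return (f"{instrument}: peaks never reach the {threshold_db:.0f} dB threshold, "
                "so this setting leaves it unchanged.")
    reduction = peak_db - compressed_level_db(peak_db, threshold_db, ratio)
    overshoot = peak_db - threshold_db
    return (f"{instrument}: peaks at {peak_db:.0f} dB overshoot the {threshold_db:.0f} dB threshold "
            f"by {overshoot:.0f} dB, so a {ratio:g}:1 ratio pulls them down {reduction:.1f} dB. "
            "Louder phrases are tamed while quieter ones pass untouched, keeping the part "
            "steadier against the rest of the mix.")


if __name__ == "__main__":
    print(explain_setting("Lead vocal", peak_db=-6.0, threshold_db=-18.0, ratio=4.0))
```

With a -6 dB vocal peak, a -18 dB threshold, and a 4:1 ratio, the 12 dB overshoot is squeezed to 3 dB, a 9 dB reduction; the helper simply turns that arithmetic into a sentence.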

Crucially, AI isn’t eliminating the role of the musician; it's augmenting it. It’s shifting the studio dynamic from an intimidating, gatekept space to an accessible, intuitive playground where creative exploration is prioritized. The result isn’t just faster workflows, but a fundamental democratization of sonic creation, unlocking potential in anyone with an idea and a microphone. It's about removing technical barriers to entry and empowering a new generation of sound architects.

Navigating the Human-AI Creative Divide

The promise of seamless human-AI collaboration isn’t a matter of simply plugging in and playing; it demands a delicate calibration across rapidly evolving ethical, legal, and cultural terrains. The core question isn't if AI can create, but who owns what it makes, and under what conditions.

Current legal systems are straining to address AI-generated content, consistently reinforcing the necessity of demonstrable human authorship for copyright to apply. This isn't a blanket rejection of AI’s role, but a firm stance on accountability and creative intent. Around the world, regulatory and industry bodies are now scrambling to establish workable standards: detailed tracking of inputs and outputs, unambiguous attribution protocols, radical transparency around royalty distribution, and robust, informed consent mechanisms for data usage. The challenge is to build frameworks that incentivize innovation while preserving artists' agency.
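
Input tracking and attribution are easiest to picture as a data record. The Python sketch below is hypothetical: no standard mandates these fields, and every name in it is illustrative. It links an output to its inputs, its human contributors, the AI system used, and a consent flag, then derives a SHA-256 fingerprint from a canonical JSON form so that any later alteration of the record is detectable.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass
class ProvenanceRecord:
    """Hypothetical record linking an AI-assisted output to its inputs and contributors."""
    output_id: str
    input_ids: list[str]                # stems, samples, or prompts the output drew on
    human_contributors: dict[str, str]  # name -> role, e.g. {"A. Artist": "melody"}
    model_name: str                     # free-form label for the AI system used
    training_consent: bool              # whether input owners consented to this data use

    def fingerprint(self) -> str:
        """SHA-256 over canonical JSON (sorted keys, fixed separators) for a stable digest."""
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    record = ProvenanceRecord(
        output_id="track-001",
        input_ids=["vocal-stem-a", "drum-loop-b"],
        human_contributors={"A. Artist": "melody, lyrics", "B. Producer": "arrangement"},
        model_name="example-generator",
        training_consent=True,
    )
    print(record.fingerprint())
```

The fingerprint only proves integrity if it is stored somewhere the record's editor cannot also rewrite, such as an independent registry.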

Beyond copyright, a growing coalition of advocacy groups, including the Human Artistry Campaign and the Cultural Data Integrity Project, is focusing on the deeper implications for data privacy and cultural preservation. These groups are pushing for safeguards against the misuse of personal data and demanding that AI applications demonstrate cultural sensitivity. Within the music industry, these concerns are particularly acute, prompting explorations of data standards that protect artist intellectual property, foster genuine cross-cultural collaboration (rather than extractive sampling), and actively prevent harmful appropriation.

However, the conversation isn't solely defensive. Platforms like Kits AI are piloting proactive solutions with programs such as “Kits Earn,” which directly compensate human contributors, from composers and vocalists to instrumentalists, and establish functional, equitable exchange models. This emerging consensus suggests the future of creative work isn’t a zero-sum game. The focus is shifting toward reskilling initiatives: training programs that empower existing talent to adapt and thrive alongside AI, ensuring a smoother transition into this increasingly algorithmic era and reshaping the very definition of creative labour.

AI, Equity, and the Future of Music Revenue

The music industry is undergoing a seismic shift, not driven by a new genre or a disruptive artist, but by the complex algorithms reshaping its economic foundations. Artificial intelligence isn’t just a tool for making music anymore; it’s becoming critical for making a living from it. Platforms like Velveteen.ai are emerging as powerful analytical engines, offering independent musicians unprecedented insight into audience behaviour – predicting viral potential, optimising streaming strategies, and ultimately, maximizing royalty payouts. This isn't about replacing A&R, but augmenting it with data, leveling the playing field for artists who previously lacked access to expensive market research.

Beyond predictive analytics, blockchain technology is offering a compelling solution to the long-standing opacity of music rights and royalties. Smart contracts are automating the traditionally convoluted process of tracking usage and distributing payments, ensuring that contributions from every collaborator – from songwriters and producers to featured instrumentalists – are accurately and transparently compensated. This granular level of financial accountability promises to address historical inequities and foster a more cooperative creative ecosystem.
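
The arithmetic such a contract automates can be sketched off-chain in Python. This is an assumption-based illustration, not a real smart contract or any platform's payout logic: it splits a payment held in cents according to shares in basis points (hundredths of a percent), using integer arithmetic only, mirroring the integer-only arithmetic of platforms such as the Ethereum Virtual Machine, and hands any rounding remainder to the largest shareholders so every cent is accounted for.

```python
def split_royalties(payment_cents: int, shares_bps: dict[str, int]) -> dict[str, int]:
    """Divide a payment among collaborators by basis points (10,000 bps = 100%)."""
    if payment_cents < 0:
        raise ValueError("payment must be non-negative")
    if any(bps < 0 for bps in shares_bps.values()):
        raise ValueError("shares must be non-negative")
    if sum(shares_bps.values()) != 10_000:
        raise ValueError("shares must total 10,000 basis points (100%)")

    # Round every share down so the contract never pays out more than it holds.
    payouts = {name: payment_cents * bps // 10_000 for name, bps in shares_bps.items()}

    # Each floor division drops less than one cent, so the leftover is smaller than
    # the number of collaborators; give one cent each, largest share first, ties by name.
    remainder = payment_cents - sum(payouts.values())
    for name in sorted(shares_bps, key=lambda n: (-shares_bps[n], n))[:remainder]:
        payouts[name] += 1
    return payouts


if __name__ == "__main__":
    split = split_royalties(1_001, {"songwriter": 5_000, "producer": 3_000, "instrumentalist": 2_000})
    print(split)
```

For a 10.01 payout split 50/30/20, the floor division yields 5.00, 3.00, and 2.00, and the one leftover cent goes to the songwriter, whose share is largest. Any deterministic tie-breaking rule would do; what matters is that every collaborator can verify it.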

However, the promise of algorithmic fairness isn’t automatic. A conscious effort is required to prevent AI from simply reinforcing existing power structures. Initiatives are now focusing on proactive inclusivity: targeted grant programs are channeling resources towards artists from underrepresented backgrounds, while accessible technical training programs are equipping underserved communities with the skills to navigate this evolving landscape. The challenge lies in fostering innovation without sacrificing diversity of sound or allowing a handful of tech giants to consolidate control over the future of musical expression. The goal isn’t simply more revenue, but equitable revenue – a system where creative value is rewarded regardless of connections or capital.

Beyond Human-Machine Dichotomies

Artificial intelligence is swiftly transitioning from a science fiction concept to a tangible creative force, with artists like Holly Herndon and Imogen Heap at the forefront of this revolution. Herndon’s ongoing project with Spawn, her AI “baby,” isn’t simply about using a tool, but cultivating a recursive artistic dialogue – a continuously learning, evolving partnership that challenges conventional authorship. Simultaneously, Imogen Heap’s groundbreaking Mi.Mu gloves don’t just translate movement into sound; they forge a seamlessly responsive link between physical performance and digital architecture, expanding the vocabulary of embodied expression.

The resulting live performances are far removed from predictable electronic music sets. Instead, audiences are witnessing AI-powered improvisation that generates unpredictable, dynamic soundscapes, sculpted in real-time through a fluid collaboration. This isn’t about displacing human musicians, but about amplifying their capabilities and unlocking entirely new avenues for sonic exploration.

Beyond the concert hall, generative AI is finding a compelling home in immersive installations at festivals and galleries worldwide. These environments leverage the technology’s adaptive nature to craft interactive, ephemeral experiences – digital ecosystems that respond and morph based on audience participation. This interplay, where algorithmic innovation meets deliberate human intention, isn't just generating novel artworks; it’s actively redrawing the contours of artistic practice and, in doing so, seeding the cultural zeitgeist with exciting, and sometimes unsettling, new possibilities. The central question has shifted from whether AI will impact art to how we will define creativity itself as the line between human and machine continues to blur.

Into the Experience and Beyond

The future of artificial intelligence isn’t about automation, but about radically expanding possibilities for creativity. We’re moving beyond single-input AI – text-to-image, or audio generation – and into a realm of genuinely multimodal systems capable of seamlessly weaving together sound, vision, and narrative. This isn't just about technical feats, but the unlocking of fundamentally new experiential forms.

Early research is already delivering glimpses of what’s to come. Picture software capable of interpreting nuanced emotional states, communicated through language, and translating them into dynamically evolving musical compositions. Picture virtual reality environments dynamically sculpted by AI-generated soundscapes, responding in real-time to user interaction. Artists are prototyping generative dance installations, where movement itself becomes a brushstroke for AI-composed scores. Crucially, this technology also offers a powerful avenue for cultural preservation: algorithms are now being deployed to meticulously document and even recreate endangered musical traditions, ensuring they aren't lost to time.

Beyond artistic expression, the potential for therapeutic application is equally compelling. AI-designed soundscapes are being pioneered as personalized interventions for mental health, offering bespoke auditory experiences tailored to individual neurological needs. These integrated approaches, bringing together neuroscience, cutting-edge AI, and the inherent power of creative expression, signal a burgeoning field poised to dramatically expand the scope of cognitive and social therapies. The ambition extends beyond therapy toward enhancement: designing personalized sonic and visual environments to optimize human wellbeing and to push boundaries in areas such as neuroplasticity and social connection. The question isn't if AI will reshape creativity and wellbeing, but how and at what scale.

A Future Toward Unified Creativity

The evolving relationship between artificial intelligence and human creativity isn’t a threat, but a powerful remix. Forget narratives of replacement; the future of sound isn’t about machines making music for us, but a new ecosystem of artistic partnerships where AI becomes a profoundly powerful instrument. This isn’t simply about generating novel melodies, but about unlocking unexplored emotional depths and amplifying the nuances of individual artistic voice.

However, this potential won’t materialize automatically. Building genuinely collaborative AI tools requires a deliberate, multi-faceted approach. We need to move beyond tech demos and address the complex ethical and economic considerations at play. Crucially, informed policy – developed through open dialogue between artists, developers, and audiences – is paramount to navigating this transition. The challenge isn’t merely to avoid a sterile uniformity imposed by algorithms, but to ensure the benefits—and the profits—are widely distributed, respecting cultural context and rewarding genuine innovation.

Consider the implications for copyright, for authorship, for the livelihood of musicians. These aren’t tangential concerns; they’re fundamental to ensuring a vibrant, equitable future for music. We’re talking about a profound shift in how sound is conceived, composed, and consumed—a dynamic interplay where intuitive human expression is augmented, not supplanted, by the processing power of AI. The ultimate goal is a richer, more diverse sonic landscape – a collective symphony mirroring the full range of human experience, driven by a novel form of harmonic intelligence. This represents an opportunity to not just create more music, but to fundamentally redefine what music can be.

References and Further Information

AIVA: https://www.aiva.ai/ - AI music composition platform capable of generating orchestral scores and other musical styles.

Apple Logic Pro: https://www.apple.com/logic-pro/ - Digital Audio Workstation (DAW) featuring AI-powered virtual session players and intelligent music creation tools.

WavTool: https://wavtool.ai/ - Conversational AI assisting with music production workflow and editing.

Splash Pro: https://splash.pro/ - Platform for generating royalty-free music and AI-generated vocal textures.

LANDR: https://www.landr.com/ - Mastering service expanded to offer distribution and artist support tools.

Soundful: https://soundful.com/ - AI music generator focused on ease of use and royalty-free tracks.

BandLab SongStarter: https://songstarter.bandlab.com/ - AI-powered tool for generating song ideas and starting compositions.

SOUNDRAW: https://soundraw.io/ - Browser-based music editor enabling rapid prototyping and royalty-free music generation.

Suno: https://www.suno.ai/ - AI music generation platform that creates songs with lyrics.

iZotope Neutron: https://www.izotope.com/neutron - AI-powered mixing and mastering plugin.

Kits AI: https://kits.ai/ - AI platform for music creation offering a "Kits Earn" program to compensate contributors.

Velveteen.ai: https://velveteen.ai/ - AI-powered music analytics platform.

Mi.Mu Gloves: https://imogenheap.com/mimugloves/ - Wearable sensors translating movement into sound, developed by Imogen Heap.

Publishing History

- URL: https://rawveg.substack.com/p/the-algorithmic-muse

- Date: 5th May 2025
