Snowflake Algorithm: UUID Generation for Distributed Systems

Leapcell: The Best of Serverless Web Hosting Detailed Explanation of Distributed ID Generation Schemes and the Snowflake Algorithm I. Background In internet business systems, there are various types of IDs. These IDs need to ensure global uniqueness, and such IDs are called distributed IDs. Distributed IDs need to meet characteristics such as uniqueness, increasing trend, high availability, and high performance. The Snowflake algorithm is a distributed ID generation scheme, which was applied by Twitter to its internal distributed projects. After this algorithm was open-sourced, it was recognized by major companies. Under this influence, major companies have successively developed distributed ID generators with their own characteristics. Before delving into the Snowflake algorithm, it is necessary to provide an overview of the commonly used distributed ID generation schemes. II. Distributed ID Generation Schemes 2.1 UUID The core idea of this algorithm is to generate a UUID by combining the machine's network card, local time, and a random number. Advantages: It can be generated locally. The generation process is simple, with good performance, and there is no risk of high availability issues. Disadvantages: The generated ID is too long, which will cause storage redundancy. Moreover, the ID is unordered and unreadable, resulting in low query efficiency. 2.2 Database Auto-incrementing ID Adopt the database's id auto-increment strategy, such as MySQL's auto_increment. To achieve high availability, multiple databases can be used, and different step sizes can be set respectively to generate non-repeating IDs. Advantages: The IDs generated by the database are absolutely ordered, and the way to achieve high availability is relatively simple. Disadvantages: It requires independent deployment of database instances, which is costly, and there is a performance bottleneck. 2.3 ID Generation by Redis All command operations in Redis are single-threaded. Redis itself provides auto-increment atomic commands such as incr and increby, so it can ensure that the generated IDs are unique and ordered. To deal with the performance bottleneck of a single node, a Redis cluster can be used to obtain higher throughput. For example, in a cluster containing 5 Redis nodes, the initial values of each Redis can be set to 1, 2, 3, 4, and 5 respectively, and the step size is set to 5. The IDs generated by each Redis are as follows: A: 1, 6, 11, 16, 21 B: 2, 7, 12, 17, 22 C: 3, 8, 13, 18, 23 D: 4, 9, 14, 19, 24 E: 5, 10, 15, 20, 25 The step size and initial value need to be determined in advance. Using a Redis cluster can also avoid the single-point failure problem. In addition, Redis is more suitable for generating serial numbers that start from 0 every day. For example, the order number can adopt the form of "date + daily auto-increment number". A Key is generated in Redis every day and incremented through INCR. Advantages: It does not depend on the database, is flexible and convenient to use, and has better performance than the database; the numeric ID is naturally ordered, which is very helpful for scenarios such as pagination or those that require sorting. Disadvantages: If Redis is not used in the system, introducing this component will increase the system complexity, and the workload of coding and configuration is also relatively large. 2.4 Snowflake Algorithm The Snowflake algorithm was applied by Twitter to its internal distributed projects and has been widely recognized by major domestic companies after being open-sourced. Twitter introduced this algorithm through its official blog on Children's Day in 2010 to identify each tweet. The Snowflake algorithm uses 64 bits to store the ID: The highest bit is reserved and not used for now. The next 41 bits are used as the timestamp, with the smallest time unit being milliseconds. The 41-bit timestamp can be used for approximately 69 years (2^41 -1). To make full use of the time representation range, the timestamp can start counting from the time when the system was first deployed, such as 2020-02-02 00:00:00. The next 10 bits are used as the machine ID (worker id), which can support 1024 machines. The 10 bits can also be divided into two parts, one part as the data center ID and one part as the machine ID. For example, if divided in a 5:5 ratio, it can support 32 data centers, and each data center can accommodate up to 32 machines at most. The last 12 bits are the auto-increment ID within a unit time (millisecond), which can represent 4096 IDs, that is, each machine can generate at most 4096 IDs per millisecond. The number of bits occupied by the timestamp, machine ID, and auto-increment ID can be adjusted according to the actual situation. The Snowflake algorithm has basic sequentiality because its first few bits are the timestamp, and the IDs can be sorted by time. III. D

Leapcell: The Best of Serverless Web Hosting

Detailed Explanation of Distributed ID Generation Schemes and the Snowflake Algorithm

I. Background

In internet business systems, there are various types of IDs. These IDs need to ensure global uniqueness, and such IDs are called distributed IDs. Distributed IDs need to meet characteristics such as uniqueness, increasing trend, high availability, and high performance.

The Snowflake algorithm is a distributed ID generation scheme, which was applied by Twitter to its internal distributed projects. After this algorithm was open-sourced, it was recognized by major companies. Under this influence, major companies have successively developed distributed ID generators with their own characteristics. Before delving into the Snowflake algorithm, it is necessary to provide an overview of the commonly used distributed ID generation schemes.

II. Distributed ID Generation Schemes

2.1 UUID

The core idea of this algorithm is to generate a UUID by combining the machine's network card, local time, and a random number.

- Advantages: It can be generated locally. The generation process is simple, with good performance, and there is no risk of high availability issues.

- Disadvantages: The generated ID is too long, which will cause storage redundancy. Moreover, the ID is unordered and unreadable, resulting in low query efficiency.

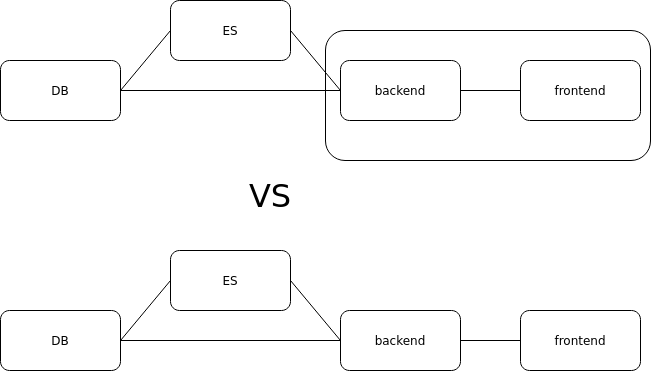

2.2 Database Auto-incrementing ID

Adopt the database's id auto-increment strategy, such as MySQL's auto_increment. To achieve high availability, multiple databases can be used, and different step sizes can be set respectively to generate non-repeating IDs.

- Advantages: The IDs generated by the database are absolutely ordered, and the way to achieve high availability is relatively simple.

- Disadvantages: It requires independent deployment of database instances, which is costly, and there is a performance bottleneck.

2.3 ID Generation by Redis

All command operations in Redis are single-threaded. Redis itself provides auto-increment atomic commands such as incr and increby, so it can ensure that the generated IDs are unique and ordered. To deal with the performance bottleneck of a single node, a Redis cluster can be used to obtain higher throughput. For example, in a cluster containing 5 Redis nodes, the initial values of each Redis can be set to 1, 2, 3, 4, and 5 respectively, and the step size is set to 5. The IDs generated by each Redis are as follows:

- A: 1, 6, 11, 16, 21

- B: 2, 7, 12, 17, 22

- C: 3, 8, 13, 18, 23

- D: 4, 9, 14, 19, 24

- E: 5, 10, 15, 20, 25

The step size and initial value need to be determined in advance. Using a Redis cluster can also avoid the single-point failure problem. In addition, Redis is more suitable for generating serial numbers that start from 0 every day. For example, the order number can adopt the form of "date + daily auto-increment number". A Key is generated in Redis every day and incremented through INCR.

- Advantages: It does not depend on the database, is flexible and convenient to use, and has better performance than the database; the numeric ID is naturally ordered, which is very helpful for scenarios such as pagination or those that require sorting.

- Disadvantages: If Redis is not used in the system, introducing this component will increase the system complexity, and the workload of coding and configuration is also relatively large.

2.4 Snowflake Algorithm

The Snowflake algorithm was applied by Twitter to its internal distributed projects and has been widely recognized by major domestic companies after being open-sourced. Twitter introduced this algorithm through its official blog on Children's Day in 2010 to identify each tweet.

The Snowflake algorithm uses 64 bits to store the ID:

- The highest bit is reserved and not used for now.

- The next 41 bits are used as the timestamp, with the smallest time unit being milliseconds. The 41-bit timestamp can be used for approximately 69 years (2^41 -1). To make full use of the time representation range, the timestamp can start counting from the time when the system was first deployed, such as 2020-02-02 00:00:00.

- The next 10 bits are used as the machine ID (worker id), which can support 1024 machines. The 10 bits can also be divided into two parts, one part as the data center ID and one part as the machine ID. For example, if divided in a 5:5 ratio, it can support 32 data centers, and each data center can accommodate up to 32 machines at most.

- The last 12 bits are the auto-increment ID within a unit time (millisecond), which can represent 4096 IDs, that is, each machine can generate at most 4096 IDs per millisecond.

The number of bits occupied by the timestamp, machine ID, and auto-increment ID can be adjusted according to the actual situation. The Snowflake algorithm has basic sequentiality because its first few bits are the timestamp, and the IDs can be sorted by time.

III. Detailed Explanation of the Snowflake Algorithm

3.1 Golang Core Code

var (

// It can be set as the time when the system was first launched, in milliseconds. It occupies 41 bits and can be used for 69 years.

Epoch int64 = 12345688374657

// The ID of the instance occupies 10 bits, and it can support up to 1024 instances.

NodeBits uint8 = 10

// The step size occupies 12 bits. Each instance can generate at most 2^12 = 4096 non-repeating data per millisecond.

StepBits uint8 = 12

)

// Generation algorithm

func (n *Node) Generate() ID {

n.mu.Lock()

// The number of milliseconds elapsed from the set timestamp to now

now := time.Since(n.epoch).Nanoseconds() / 1000000

// If it is the same as the last recorded time, increment step by 1; otherwise, reset step to 0

if now == n.time {

n.step = (n.step + 1) & n.stepMask

if n.step == 0 {

for now <= n.time {

now = time.Since(n.epoch).Nanoseconds() / 1000000

}

}

} else {

n.step = 0

}

n.time = now

// Timestamp 41 bits | node 10 bits | step 12 bits

r := ID((now)<<n.timeShift |

(n.node << n.nodeShift) |

(n.step),

)

n.mu.Unlock()

return r

}

The specific bit allocation can be adjusted according to your own needs, such as (41, 10, 12), (41, 6, 16), etc. The complete Golang code repo: snowflake github.

3.2 Advantages

- Less storage: Only 8 bytes are required.

- High readability: The ID has a certain structure and meaning.

- Good performance: IDs can be generated centrally or on independent nodes.

3.3 Disadvantages

Time rollback will lead to the repeated generation of IDs.

3.4 Solutions to Clock Rollback

2 bits can be taken from the 10 bits of the machine ID as the clock rollback bits. When a clock rollback is detected, increment the rollback bits by 1, and when it reaches the maximum value, it will cycle from 0. For example, adjust the 10-bit machine number to an 8-bit machine number + 2-bit rollback bits. Every time a clock rollback is detected, increment the rollback bits by 1. When the rollback bits are greater than the maximum value (3), reset it to 0. In the same time period, the clock rollback is supported up to 3 times. After each rollback, due to the different clock rollback bits, the finally generated IDs are also different.

Leapcell: The Best of Serverless Web Hosting

Finally, I would like to recommend a platform that is most suitable for deploying Go services: Leapcell

![[The AI Show Episode 143]: ChatGPT Revenue Surge, New AGI Timelines, Amazon’s AI Agent, Claude for Education, Model Context Protocol & LLMs Pass the Turing Test](https://www.marketingaiinstitute.com/hubfs/ep%20143%20cover.png)

.png?#)

.webp?#)

.webp?#)

![[Fixed] Gemini app is failing to generate Audio Overviews](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/03/Gemini-Audio-Overview-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![What’s new in Android’s April 2025 Google System Updates [U: 4/14]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/01/google-play-services-3.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Seeds tvOS 18.5 Beta 2 to Developers [Download]](https://www.iclarified.com/images/news/97011/97011/97011-640.jpg)

![Apple Releases macOS Sequoia 15.5 Beta 2 to Developers [Download]](https://www.iclarified.com/images/news/97014/97014/97014-640.jpg)