Reference Architecture for AI Developer Productivity

In this article, we lay out a reference architecture for an in-house AI assistant. It lets development teams work with AI agents connected to their data directly from their integrated development environment (IDE), and it provides a web portal so that other team members can use the same capabilities from a browser for non-programming tasks.

Developer Productivity Opportunities with AI

Since large language models (LLMs) gained mass popularity with the release of ChatGPT, built on GPT-3.5, many teams have looked at LLMs as an opportunity to improve productivity when writing and maintaining code. Teams have sought to integrate AI agents into their development workflows to help with the following tasks:

- Scaffolding new code by writing standardized but tedious pieces of code

- Generating unit tests around specific existing code

- Drafting documentation of public methods and classes

- Guiding refactoring and code review of existing code that has become hard to maintain

- Helping analyze error messages to expedite troubleshooting of known issues

Of course, anything involving AI must be done in a secure manner that protects the organization's intellectual property.

Additionally, publicly available LLMs and coding assistants are missing organizational context such as your internal documentation, standards, work items, or even relevant configuration data or context from a local database. This eliminates some opportunities for AI systems to help your team at a deeper level, such as cases where the system could query cloud resources or configuration to provide answers to targeted queries.

This means that a simple solution, like an IDE plugin pointing directly to a public model, may not always be viable for either security or capability reasons.

A custom solution is sometimes needed to meet organizational privacy requirements and to serve up relevant organizational context. Such a solution uses dedicated AI assistants connected to Model Context Protocol (MCP) servers and, optionally, self-hosted LLMs provided by the organization.

The rest of this article will outline the major parts of such a system.

A sample architecture

An AI assistant agent system is composed of the following components:

- A model provider that serves up one or more LLMs in an organization-approved manner

- One or more Model Context Protocol (MCP) servers that provide additional resources to your AI agent

- An IDE plugin connecting your IDE to the other parts of the system and providing a chat interface

The model layer

One of the easiest ways of gaining peace of mind over the use of your data is to customize which model your IDE plugin interacts with.

There's a very real difference between the privacy concerns of a public OpenAI chat model and those of a model hosted on Azure in a more partitioned environment, where your data will not be retained or used to train future models.

For organizations wanting extreme control, a model can be deployed and hosted on your own network so that your data never leaves your premises. Technologies like Ollama and LM Studio allow you to run LLMs on your own devices for free, though they typically do not provide access to the most recent commercial models.
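As a minimal sketch of what talking to a self-hosted model can look like, the snippet below builds and sends a request to Ollama's local HTTP API using only the Python standard library. It assumes a default local Ollama install listening on port 11434; the model name `llama3` is only a placeholder for whichever model you have pulled, and the helper names are ours.

```python
import json
import urllib.request

# Assumption: a default local Ollama install listening on port 11434.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local server and return the generated text.

    The model name is a placeholder; use one that is installed locally.
    """
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the generated text arrives in the "response" field.
    return body["response"]
```

Because the request only ever targets `localhost`, the prompt and any code included in it stay on the machine. Pointing `url` at a server on your internal network would work the same way.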

Additionally, your hardware must be able to fit these models into memory and keep up with incoming requests. This requires a capable graphics card or graphics chipset along with enough RAM to hold the model completely in memory. On underpowered machines or machines with insufficient RAM, models may fail to load or may run at only a fraction of their normal speed.
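For a rough sense of scale, the memory needed for a model's weights is approximately its parameter count multiplied by the bytes used per weight. The sketch below turns that into a quick estimate. The 20% allowance for runtime overhead is an assumed rule of thumb rather than a measured figure, and the function names are ours.

```python
def estimate_model_memory_gb(
    parameters_billions: float,
    bits_per_weight: int,
    overhead_fraction: float = 0.2,  # assumed margin for runtime overhead
) -> float:
    """Rough memory (in GB) needed to hold a model's weights plus a margin."""
    weight_bytes = parameters_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead_fraction) / 1e9


def fits_in_memory(parameters_billions: float, bits_per_weight: int, available_gb: float) -> bool:
    """Check the estimate against the memory actually available."""
    return estimate_model_memory_gb(parameters_billions, bits_per_weight) <= available_gb
```

Under these assumptions, a 7-billion-parameter model needs roughly 16.8 GB at 16 bits per weight and roughly 4.2 GB when quantized to 4 bits, which is why quantized models are a common choice for developer laptops.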

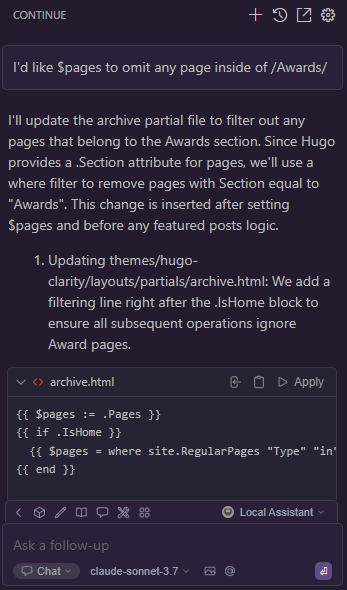

The IDE Plugin

A growing number of IDE plugins provide chat capabilities, including GitHub Copilot, Continue.dev, and Roo Code. There are even dedicated AI IDEs such as Cursor.

These tools allow you to have inline conversations with AI agents in your IDE and provide context about your code and structure. The agent in turn can chat back to you, edit code with your permission, or even act in an agentic manner and make a series of iterative changes and observations about the results of these changes in order to achieve a larger result.

Leading EDJE fully expects the trend of custom IDE plugins and IDEs to continue, much as models continue to improve, so we don't want to focus on specific implementations in this article.

Instead, we want to be prescriptive in what you should look for in an IDE plugin.

An IDE plugin should:

- Not send your data to a server beyond your control

- Allow you to customize which LLM you are interacting with, so you can provide your own LLMs

- Allow you to specify one or more Model Context Protocol (MCP) servers to augment your agent with capabilities

Additional features, such as conversation management and letting the user choose which files are included in the conversation, also matter for usability and productivity.
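One way to make this checklist actionable is to review a plugin's settings against an allowlist of approved hosts. The sketch below is illustrative only: `PluginSettings`, `review_settings`, and the host names are hypothetical and do not correspond to any particular plugin's configuration format.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class PluginSettings:
    """Hypothetical, simplified view of an IDE plugin's AI settings."""
    model_endpoint: str
    mcp_servers: list[str] = field(default_factory=list)


def review_settings(settings: PluginSettings, approved_hosts: set[str]) -> list[str]:
    """Return a list of findings; an empty list means the checks passed."""
    findings = []
    host = urlparse(settings.model_endpoint).hostname
    if host is None or host not in approved_hosts:
        findings.append(f"model endpoint host {host!r} is not on the approved list")
    if not settings.mcp_servers:
        findings.append("no MCP servers are configured")
    return findings


# Example usage with made-up hosts.
approved = {"localhost", "llm.internal.example.com"}
settings = PluginSettings("http://localhost:11434/v1", ["org-wide"])
print(review_settings(settings, approved))
```

A check like this could run as part of onboarding or a periodic audit. It only verifies where the model endpoint points, so it complements a review of the plugin's own data handling rather than replacing one.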

The context layer

The final component of our AI agent architecture involves providing our system with additional context beyond the code the IDE provides and the capabilities of our LLMs.

This additional context comes through Model Context Protocol (MCP) servers, which connect your AI agent to additional skills in a standardized manner.

For example, an MCP server might provide built-in skills such as random number generation or a way of getting the current date. Alternatively, an MCP server could call out to an internal or external API to provide additional data, such as relevant documents from a knowledge base retrieved via retrieval-augmented generation (RAG) search, in a manner similar to that discussed in our web chat agent reference architecture.
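To make the idea of built-in skills concrete, here is a simplified sketch of how a server might answer the two MCP tool methods, `tools/list` and `tools/call`, which are exchanged as JSON-RPC 2.0 messages. It is not a complete MCP server: it omits the initialization handshake, transports, and most error handling, and the tool names `current_date` and `random_number` are our own. In practice you would likely build on an official MCP SDK instead.

```python
import random
from datetime import date

# Tool descriptions returned by tools/list; the names are illustrative.
TOOLS = [
    {
        "name": "current_date",
        "description": "Return today's date in ISO 8601 format.",
        "inputSchema": {"type": "object", "properties": {}},
    },
    {
        "name": "random_number",
        "description": "Return a random integer between low and high, inclusive.",
        "inputSchema": {
            "type": "object",
            "properties": {"low": {"type": "integer"}, "high": {"type": "integer"}},
            "required": ["low", "high"],
        },
    },
]

# Maps each tool name to the function that implements it.
TOOL_FUNCTIONS = {
    "current_date": lambda args: date.today().isoformat(),
    "random_number": lambda args: str(random.randint(args["low"], args["high"])),
}


def handle_request(request: dict) -> dict:
    """Answer a single JSON-RPC 2.0 request for tools/list or tools/call."""
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    method = request.get("method")
    if method == "tools/list":
        reply["result"] = {"tools": TOOLS}
    elif method == "tools/call":
        params = request.get("params", {})
        name = params.get("name")
        if name not in TOOL_FUNCTIONS:
            reply["error"] = {"code": -32602, "message": f"Unknown tool: {name}"}
        else:
            text = TOOL_FUNCTIONS[name](params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return reply
```

The agent first calls `tools/list` to discover what is available, then issues `tools/call` with a tool name and arguments when the model decides a skill is needed. A tool that wraps an internal API or a RAG search would follow the same shape, with the lookup happening inside the tool function.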

Your plugin may use multiple MCP servers running locally, with some of these servers provided by your organization and others provided by other organizations.

For example, when working on a project a developer might use:

- An MCP server containing organization-wide information

- An MCP server for information specifically related to the developer's project or team

- An MCP server provided by their cloud hosting provider, allowing for querying cloud resources

- An MCP server provided by GitHub for querying version control history

By combining internal and external MCP servers, developers can customize the skills available to them based on what tasks they're working on and their current organizational context. Integrating external MCP servers also allows your team to save implementation costs by reusing shared capabilities provided by external vendors instead of implementing those capabilities themselves in their own custom MCP server.
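The layering described above can be sketched as a small registry that merges organization-wide, team-level, and vendor-provided server definitions into the set a developer actually uses. Everything here is hypothetical: the `McpServer` type, the server names, and the launch commands are placeholders, not the configuration format of any real plugin or vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class McpServer:
    name: str
    command: tuple[str, ...]  # placeholder command used to launch a local server
    source: str               # "internal" or "external"


def combine_servers(*layers: list[McpServer]) -> dict[str, McpServer]:
    """Merge server lists; later layers override earlier ones with the same name."""
    combined: dict[str, McpServer] = {}
    for layer in layers:
        for server in layer:
            combined[server.name] = server
    return combined


# All names and commands below are made up for illustration.
org_wide = [McpServer("org-docs", ("org-docs-mcp",), "internal")]
team = [McpServer("team-backlog", ("team-backlog-mcp",), "internal")]
vendors = [
    McpServer("cloud-resources", ("example-cloud-mcp",), "external"),
    McpServer("version-control", ("example-vcs-mcp",), "external"),
]

active = combine_servers(org_wide, team, vendors)
print(sorted(active))
```

Keeping the layers separate means the organization can maintain its shared list centrally while each team adds or overrides entries for its own project.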

MCP servers may be run locally or referenced via an HTTP location. At the time of this writing, a true authentication-friendly standard for web-based MCP servers has not been fully defined, so most MCP servers are currently deployed locally, but we expect this to change as technologies evolve.

Additional Concerns

This document outlines an AI coding assistant architecture consisting of customizable models, MCP servers providing additional context and capabilities, and an IDE plugin to tie things together.

At the moment, standards around MCP servers and the availability and capabilities of IDE plugins and IDEs are rapidly evolving. You may find that your organization's IDEs do not yet allow the degree of customization of model source and MCP servers described in this article.

In this case, or in the case where non-developers want these same capabilities outside of an IDE, you may want to consider implementing a web portal for chatting with custom MCP servers and organization-approved LLMs hosted in approved locations, as we'll discuss in a future reference architecture.

Conclusion

AI assistants are powerful tools that can help improve the effectiveness of your team, but privacy and control are critical factors for organizations.

Self-hosting models or specifying a custom location for a cloud-hosted model gives organizations additional control over where their data is going and how it is retained.

The arrival of MCP servers also reduces the need for custom AI orchestration solutions like Semantic Kernel and instead pushes AI skillsets to more centralized locations that your IDE plugin can connect to. By integrating MCP servers into your workflow, you allow modular AI capabilities to augment your existing workflows and inject additional organizational, operational, or team context into your conversations.
