Rate Limit Handling: Using Randomized Delays with Cloud Tasks


As developers, we often integrate with external APIs. There are many things to consider when working with an external service, such as API availability, latency, network failures, timeouts, and rate limits.

In this post we are going to talk about rate limits.

Rate limiting is a common way to manage system load when exposing an API to external partners. From the API provider's point of view, we need to decide how many requests per second the API should handle; anything beyond that is rejected to protect latency and other performance metrics.

From the consumer's point of view, we have to think about how to keep our outbound requests under the limit so they are not rejected by the rate limiter. In a low-traffic application this is not a problem because we won't hit the limit, but for mid- to large-scale traffic it can become an issue.

Use case

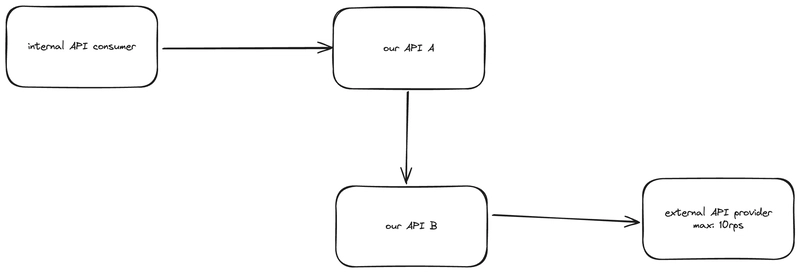

In this use case, we receive a request from another consumer, either as a direct API call or as a Pub/Sub push message. A single request can generate 10 to 20 API calls to an external service that is rate limited to 10 req/s.

Think about a feature that lets a user push goods information to an external API provider. A user can have many goods, and the external provider only offers a single-item update, not a bulk update.

API A is responsible for receiving requests from internal consumers and filtering the data (for example, checking whether the user exists or how many goods the user has). After that, it fetches all of the goods data. Say we find 30 items; they will all be sent to API B at the same time.

Now let's look at API B, which is responsible for calling the external API. By routing everything through API B, we can control how requests are delivered to the external API provider.

With the 30 items above, API B receives 30 requests within almost the same second, perhaps only milliseconds apart.

Since the maximum RPS is 10, if we don't have any logic to handle the rate limit here, some of those requests will fail.
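To make the failure concrete, here is a minimal sketch of what a provider-side limiter might do with that burst. It assumes a simple fixed one-second window, which may not match how the real provider counts requests; `simulateFixedWindow` and the 5 ms spacing are made up for illustration.

```javascript
// Hypothetical fixed-window limiter: at most `limit` requests are accepted
// in each one-second window; the rest are rejected.
function simulateFixedWindow(arrivalTimesMs, limit) {
  const acceptedPerWindow = new Map(); // window index -> accepted count
  let accepted = 0;
  let rejected = 0;
  for (const arrivalMs of arrivalTimesMs) {
    const windowIndex = Math.floor(arrivalMs / 1000);
    const used = acceptedPerWindow.get(windowIndex) || 0;
    if (used < limit) {
      acceptedPerWindow.set(windowIndex, used + 1);
      accepted += 1;
    } else {
      rejected += 1;
    }
  }
  return { accepted, rejected };
}

// 30 requests arriving 5 ms apart, so all of them fall in the same second.
const burstArrivals = Array.from({ length: 30 }, (_, i) => i * 5);
const burstResult = simulateFixedWindow(burstArrivals, 10);
console.log(burstResult); // { accepted: 10, rejected: 20 }
```

Under this assumption, two thirds of the burst is rejected, which is why the calls need to be spread out before they reach the external API.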

As a first step, we can add a queue to control request delivery to the external API. We can use BullMQ (https://bullmq.io/).

BullMQ will help us manage the rate of our outbound requests to the external API.

```javascript
import { Queue, Worker } from 'bullmq';

const connection = { host: 'localhost', port: 6379 };

// Create the queue. In BullMQ the rate limiter is configured on the Worker,
// not in the queue's defaultJobOptions, so no limiter is set here.
const queue = new Queue('rate-limited-queue', { connection });

// Note: QueueScheduler was only needed on BullMQ 1.x. It was removed in 2.0,
// where rate limiting works without it.

// Create a worker with rate limiting
const worker = new Worker(
  'rate-limited-queue',
  async (job) => {
    console.log(`Processing job ${job.id} at ${new Date().toISOString()}`);
    // processJob is the function that calls the external API (not shown here)
    await processJob(job.data);
  },
  {
    connection,
    // Process max 2 jobs concurrently
    concurrency: 2,
    // Rate limit the worker itself
    limiter: {
      max: 5,
      duration: 1000, // 5 jobs per second
    },
  },
);
```

If the API no longer returns rate limit errors after you add BullMQ, stick with this solution and don't make things more complicated.

In our case, we still got rate limit errors quite often because the external API's latency sometimes exceeds 1s. For the limiter to work as intended, all 10 requests sent in a given second must finish in under 1s. For example, if 7 requests finish in under 1s and the other 3 take longer, the next 10 requests are sent in the following second while those 3 are still in flight, so we may see 3 requests fail.

Disclaimer: this depends on how the external API provider implements its rate limit logic.
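The 7-fast, 3-slow example can be checked with a small simulation. This is only a sketch: it assumes the provider rejects a request whenever 10 others are still in flight, which is one possible reading of its behaviour. `simulateInFlightLimit` and the latency values (400 ms and 1500 ms) are hypothetical.

```javascript
// Hypothetical concurrency-based limiter: a request is rejected when `limit`
// accepted requests are still in flight at the moment it arrives.
function simulateInFlightLimit(requests, limit) {
  const sorted = [...requests].sort((a, b) => a.startMs - b.startMs);
  let inFlightEnds = []; // completion times of accepted, unfinished requests
  let rejected = 0;
  for (const { startMs, latencyMs } of sorted) {
    // Drop requests that have already completed when this one arrives.
    inFlightEnds = inFlightEnds.filter((endMs) => endMs > startMs);
    if (inFlightEnds.length >= limit) {
      rejected += 1;
    } else {
      inFlightEnds.push(startMs + latencyMs);
    }
  }
  return rejected;
}

// Second 0: 7 fast requests (400 ms) and 3 slow ones (1500 ms).
const firstBatch = [
  ...Array.from({ length: 7 }, () => ({ startMs: 0, latencyMs: 400 })),
  ...Array.from({ length: 3 }, () => ({ startMs: 0, latencyMs: 1500 })),
];
// Second 1: the queue releases the next 10 requests.
const secondBatch = Array.from({ length: 10 }, () => ({ startMs: 1000, latencyMs: 400 }));

const rejectedCount = simulateInFlightLimit([...firstBatch, ...secondBatch], 10);
console.log(rejectedCount); // 3
```

The queue did its job and released exactly 10 requests per second, yet the 3 slow requests from the first second still take up room when the second batch arrives.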

Before moving to a more complicated solution with Cloud Tasks, we can use setTimeout() in Node.js to add a randomized delay before each request to API B.

```javascript
const delay = Math.floor(Math.random() * 30000); // Random delay 0-30000ms (30 seconds)

setTimeout(async () => {
  // function to call API B here
}, delay);
```

This is often more than enough. If we need something more persistent for a distributed system, we can use Cloud Tasks to create delayed tasks. Cloud Tasks retries automatically when something goes wrong, for example a network issue. It also avoids data loss when the server or pod is shut down or restarted suddenly, whereas a pending setTimeout() call lives only in process memory.

```javascript
const { CloudTasksClient } = require('@google-cloud/tasks');

const client = new CloudTasksClient();

async function createDelayedTask(payload) {
  const project = 'your-project-id';
  const queue = 'your-queue-name';
  const location = 'us-central1';
  const parent = client.queuePath(project, location, queue);

  // Generate a random delay between 0 and 30 seconds (inclusive)
  const delaySeconds = Math.floor(Math.random() * 31);

  // Calculate the schedule time as whole seconds since the Unix epoch
  const scheduleSeconds = Math.floor(Date.now() / 1000) + delaySeconds;

  const task = {
    httpRequest: {
      httpMethod: 'POST',
      url: 'https://your-service.com/process-task-API-B', // API B
      headers: {
        'Content-Type': 'application/json',
      },
      // The request body is sent base64-encoded
      body: Buffer.from(JSON.stringify(payload)).toString('base64'),
    },
    scheduleTime: {
      seconds: scheduleSeconds,
    },
  };

  try {
    const [response] = await client.createTask({ parent, task });
    console.log(`Task created: ${response.name}`);
    console.log(`Scheduled with ${delaySeconds} seconds delay`);
    return response;
  } catch (error) {
    console.error('Error creating task:', error);
    throw error;
  }
}
```
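For the automatic retry to kick in, API B has to tell Cloud Tasks that the attempt failed. Cloud Tasks treats any 2xx response from an HTTP target as success and retries other responses according to the queue's retry settings. The helper below is a hypothetical sketch of how API B's task handler might map the external API's status to its own response; `statusForCloudTasks` and the choice to acknowledge other 4xx errors are assumptions, not part of the original setup.

```javascript
// Hypothetical helper for API B's task handler: choose the HTTP status to
// return to Cloud Tasks based on the external API's response status.
function statusForCloudTasks(externalStatus) {
  if (externalStatus >= 200 && externalStatus < 300) {
    return 200; // success: Cloud Tasks marks the task as done
  }
  if (externalStatus === 429 || externalStatus >= 500) {
    return 503; // rate limited or provider error: non-2xx asks for a retry
  }
  // Other 4xx errors (bad payload, not found): retrying is unlikely to help,
  // so this sketch acknowledges the task; log the error in a real handler.
  return 200;
}

console.log(statusForCloudTasks(429)); // 503
```

With this mapping, a request that still hits the rate limit is retried later by the queue instead of being lost.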

While this does not entirely eliminate the chance of more than 10 simultaneous external API requests, spreading the 30 calls from the use case above across different seconds greatly reduces it.
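A rough way to see how much the random delay helps is to simulate it. The sketch below drops 30 requests into one-second buckets using a random delay of up to 30 seconds, then estimates how often any single second ends up with more than 10 requests. It only counts start times and ignores slow requests that overlap into the next second; `bucketCounts` and `estimateOverLimit` are hypothetical helper names.

```javascript
// Count how many requests land in each one-second bucket when every request
// gets an independent random delay in [0, maxDelayMs).
function bucketCounts(requestCount, maxDelayMs, rng = Math.random) {
  const buckets = new Array(Math.ceil(maxDelayMs / 1000)).fill(0);
  for (let i = 0; i < requestCount; i += 1) {
    const delayMs = Math.floor(rng() * maxDelayMs);
    buckets[Math.floor(delayMs / 1000)] += 1;
  }
  return buckets;
}

// Estimate how often at least one second receives more than `limit` requests.
function estimateOverLimit(requestCount, maxDelayMs, limit, trials, rng = Math.random) {
  let over = 0;
  for (let t = 0; t < trials; t += 1) {
    const busiest = Math.max(...bucketCounts(requestCount, maxDelayMs, rng));
    if (busiest > limit) over += 1;
  }
  return over / trials;
}

const sampleBuckets = bucketCounts(30, 30000);
console.log(sampleBuckets); // requests per second for one random run
console.log(estimateOverLimit(30, 30000, 10, 10000)); // share of runs over the limit
```

The exact numbers change on every run because the delays are random, but the per-second counts stay far below what the 30-request burst produces without any delay.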

Thanks for reading, I hope you enjoyed it.

Let's connect: https://linkedin.com/in/burhanahmeed
