Google I/O 2025: New Tools, APIs and Everything Devs Need to Know

Google I/O 2025 was a landmark event for developers, with the company doubling down on its vision of AI-first software development. This year's announcements were laser-focused on empowering builders with new AI models, APIs, and tools, especially through the Gemini platform. Here's a deep dive into the developer-centric innovations unveiled at Google I/O 2025.

Gemini 2.5: Smarter, Faster, and More Controllable

The centerpiece of Google’s developer story is the Gemini 2.5 family of AI models. Gemini 2.5 Pro now features an advanced "Deep Think" mode, which lets the model consider multiple hypotheses before responding and dramatically boosts its performance on complex coding and math tasks. This upgrade positions Gemini at the top of industry benchmarks, with significant improvements in reasoning and accuracy.

Gemini 2.5 Flash, the more efficient sibling, has also been enhanced for better reasoning, multimodality, coding, and long-context capabilities. It delivers near-Pro performance while using 20–30% fewer tokens, making it ideal for cost-sensitive applications.
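To make the 20–30% figure concrete, here is a rough back-of-the-envelope sketch in Python. The monthly token volume and the price per million tokens are made-up placeholders, not Google's pricing; only the 20–30% range comes from the claim above.

```python
def estimate_token_savings(baseline_tokens: int, reduction: float,
                           price_per_million: float) -> dict:
    """Estimate the cost effect of using a given fraction fewer tokens.

    baseline_tokens   -- tokens consumed before the efficiency gain
    reduction         -- fraction of tokens saved, e.g. 0.20 for 20%
    price_per_million -- HYPOTHETICAL flat price per one million tokens
    """
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")

    reduced_tokens = round(baseline_tokens * (1.0 - reduction))
    baseline_cost = baseline_tokens / 1_000_000 * price_per_million
    reduced_cost = reduced_tokens / 1_000_000 * price_per_million
    return {
        "reduced_tokens": reduced_tokens,
        "baseline_cost": baseline_cost,
        "reduced_cost": reduced_cost,
        "saved": baseline_cost - reduced_cost,
    }


if __name__ == "__main__":
    # Placeholder workload: 10 million tokens per month at $1.00 per million.
    for reduction in (0.20, 0.30):
        result = estimate_token_savings(10_000_000, reduction, price_per_million=1.00)
        print(f"{reduction:.0%} fewer tokens: "
              f"{result['reduced_tokens']:,} tokens, "
              f"cost {result['reduced_cost']:.2f}, saved {result['saved']:.2f}")
```

Under this flat-price model the bill shrinks by the same percentage as the token count. Real pricing that separates input tokens from output tokens would shift the numbers.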

Developers now have more transparency and control over how Gemini models operate. Features like "thought summaries" help explain model decisions, and the upcoming "thinking budgets" will let developers trade off cost against response quality.
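As a rough illustration of what setting a thinking budget could look like, the sketch below builds a JSON request body and prints it without sending anything. The field names (`generationConfig`, `thinkingConfig`, `thinkingBudget`, `includeThoughts`) reflect my understanding of the Gemini REST API and should be checked against the current reference. The helper name `build_thinking_request` and the prompt are hypothetical.

```python
import json


def build_thinking_request(prompt: str, thinking_budget: int,
                           include_thoughts: bool = True) -> dict:
    """Build a request body that caps reasoning tokens and asks for thought summaries.

    The key names are ASSUMED from the public Gemini REST API; verify them
    against the official documentation before relying on this shape.
    """
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {
                # Upper bound on tokens the model may spend reasoning.
                "thinkingBudget": thinking_budget,
                # Request a summary of the model's reasoning with the answer.
                "includeThoughts": include_thoughts,
            }
        },
    }


if __name__ == "__main__":
    body = build_thinking_request("Explain binary search in two sentences.", 1024)
    print(json.dumps(body, indent=2))
```

A smaller budget should lower cost and latency at the risk of weaker answers on hard problems, which is the trade-off the feature is meant to expose.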

Gemini API and Google AI Studio: Rapid Prototyping and Agentic Apps

Google AI Studio, now integrated with Gemini 2.5 Pro, is billed as the fastest environment for evaluating models and building with the Gemini API. The platform’s native code editor and GenAI SDK let developers instantly generate web apps from text, images, or video prompts. Starter apps and templates help jumpstart projects, while new generative media models like Imagen and Veo expand creative possibilities.

A major leap is the ability to build "agentic" experiences. With Gemini’s advanced reasoning, developers can create agents that understand context, perform tasks, and even browse the web using the new Computer Use API. The experimental URL Context feature lets Gemini pull full-page context from URLs, making it far easier to build assistants and research tools.
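Here is a minimal sketch of how a research assistant might enable URL Context. It only constructs the request body; nothing is sent over the network. The `tools: [{"url_context": {}}]` shape is my reading of the Gemini REST API and is worth verifying. The helper name and the example.com URLs are placeholders.

```python
import json


def build_url_context_request(question: str, urls: list[str]) -> dict:
    """Build a request body that asks the model to read the given URLs.

    ASSUMPTION: the URL Context tool is switched on with an empty
    "url_context" tool entry, and the URLs travel inside the prompt text.
    """
    if not urls:
        raise ValueError("at least one URL is required")

    # The pages to consult are listed in the prompt itself.
    prompt = question + "\n\nSources:\n" + "\n".join(urls)
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "tools": [{"url_context": {}}],
    }


if __name__ == "__main__":
    body = build_url_context_request(
        "Summarize the key differences between these two pages.",
        ["https://example.com/page-a", "https://example.com/page-b"],
    )
    print(json.dumps(body, indent=2))
```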

Gemini SDKs are also adopting the Model Context Protocol (MCP), simplifying integration with open-source tools and expanding the ecosystem for agentic applications.
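For readers new to MCP, it helps to see what travels over the wire. MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with a `tools/call` request. The sketch below builds such a message by hand. The tool name `search_docs` and its arguments are hypothetical, and an SDK would normally handle this framing for you.

```python
import json
from itertools import count

# Simple incrementing request ids; JSON-RPC uses the id to match responses.
_request_ids = count(1)


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


if __name__ == "__main__":
    # `search_docs` is an illustrative tool an MCP server might expose.
    request = make_tool_call("search_docs", {"query": "thinking budgets"})
    print(json.dumps(request, indent=2))
```

Because every MCP server speaks this same message format, an agent can use tools from different vendors without bespoke glue code for each one.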

Jules Coding Agent: Automating Codebase Tasks

Jules, Google’s new asynchronous coding agent, is now in public beta. Designed to work directly with GitHub repositories, Jules can automate tasks such as version upgrades, writing tests, updating features, and fixing bugs. It operates autonomously on a cloud VM, makes coordinated codebase edits, runs tests, and allows developers to review and merge changes via pull requests. This tool aims to dramatically speed up development cycles and reduce manual coding overhead.

Stitch UI Designer: AI-Powered UI Generation

Stitch is an experimental tool that leverages Gemini 2.5 Pro to generate user interface designs and frontend code from natural language or image prompts. Developers and designers can describe their desired application, specify layout or color preferences, and Stitch will produce visual designs and exportable code. It also supports iterative design, allowing multiple variants and seamless export to tools like Figma for further refinement.

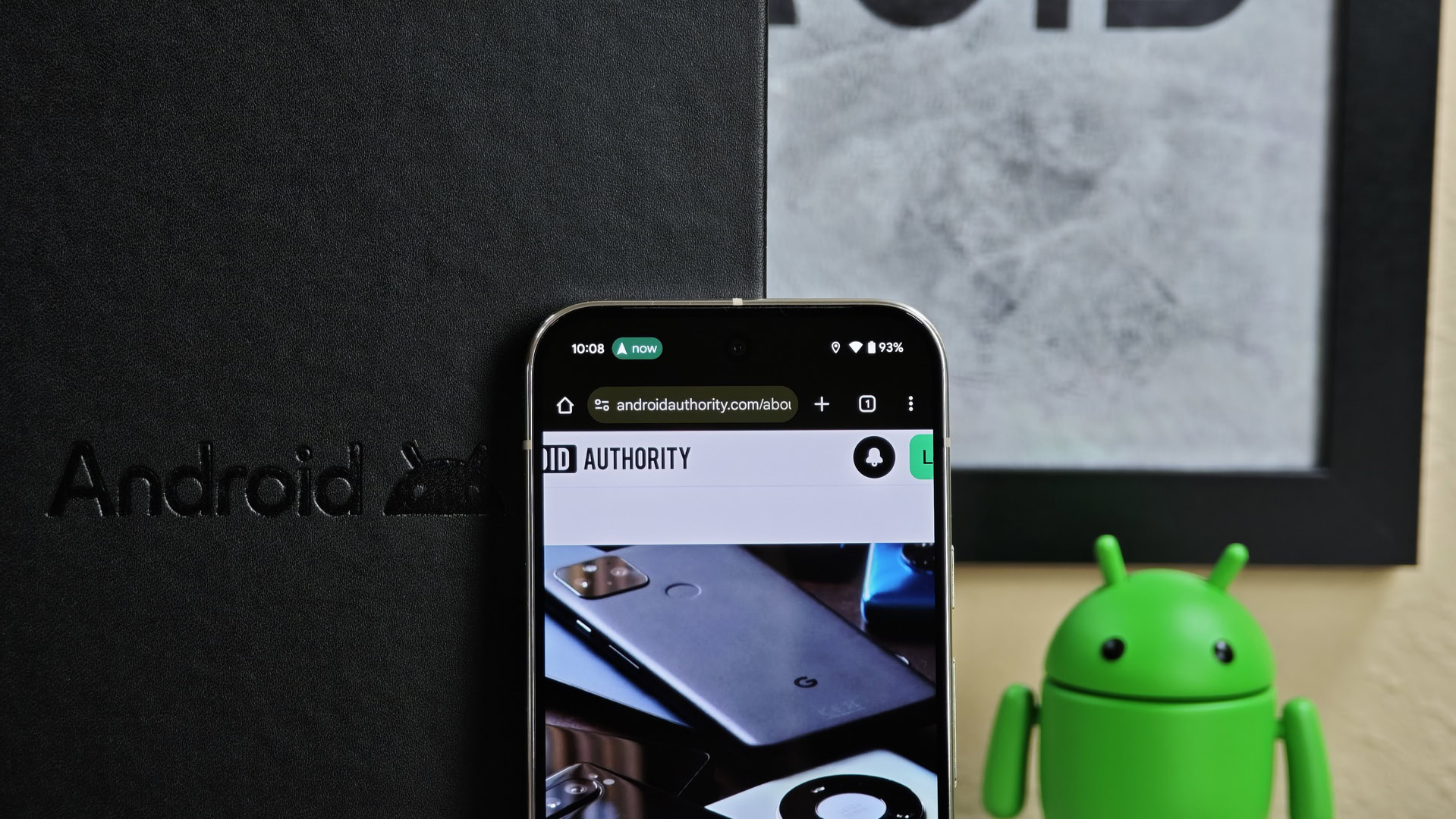

ML Kit GenAI APIs: On-Device AI for Android

Google introduced new ML Kit GenAI APIs powered by Gemini Nano, enabling developers to add intelligent, personalized features to Android apps that run directly on-device. A showcase app, Androidify, demonstrates how users can create personalized Android robots from selfies, illustrating the creative potential of these new APIs.

Building Across Platforms: Android, Web, and XR

While AI took center stage, Google reinforced its commitment to cross-platform development:

Android: The new Material 3 Expressive design system (announced pre-I/O) and deep Gemini integration in Android Studio streamline app creation and testing.

Web: Chrome 135 introduces new CSS primitives for interactive UI elements, while the Interest Invoker API enables advanced UI without JavaScript.

XR and Spatial Computing: Google previewed advancements in Android XR, supporting immersive experiences and spatial devices, and teased new smart glasses and 3D video calling via Project Astra and Google Beam.

Summary Table: Key Developer-Focused Features

Here's a summary of the features and releases covered above.

| Feature/Tool | Description |
|---|---|
| Gemini 2.5 Pro & Flash | Advanced AI models with improved reasoning, multimodality, audio-visual input/output |
| Gemini API & SDK | Rapid prototyping, URL Context, MCP support, GenAI SDK for app generation |
| Jules Coding Agent | Asynchronous coding agent for automating codebase tasks via GitHub |
| Stitch UI Designer | AI-powered UI design and code generation tool |
| Gemini in Android Studio | AI coding companion, Journeys for testing, Version Upgrade Agent |
| ML Kit GenAI APIs | On-device AI APIs for Android apps |
| Chrome/Web APIs | New CSS primitives for carousels, Interest Invoker API for advanced UI |
| Android XR & Beam | Tools and APIs for spatial computing, 3D video calls, and XR device support |

The Future: Open, Powerful, and Accessible AI

Google’s I/O 2025 developer announcements mark a shift toward open, accessible, and highly capable AI for everyone building the next generation of apps. With Gemini 2.5’s reasoning power, multimodal capabilities, and new APIs, developers can now create smarter, more adaptive, and more human-like software than ever before.

“We believe developers are the architects of the future. That’s why Google I/O is our most anticipated event of the year, and a perfect moment to bring developers together and share our efforts for all the amazing builders out there.”

— Google AI Team

From agentic assistants to creative media apps and immersive XR experiences, Google has put the tools of tomorrow’s software in developers’ hands today.

What do you think about the announcements made by Google at Google I/O 2025? Let me know in the comments. Thanks for visiting and stay tuned for the next one. Until then, keep learning and stay secure!
