Get a Natural-Sounding James Earl Jones AI Voice with a Voice Generator

Many recognize James Earl Jones for his powerful voice in roles like Darth Vader. Now, AI technology can recreate that voice, enabling anyone to generate speech that sounds like him. The James Earl Jones AI Voice Generator uses advanced software to replicate his distinctive voice for various creative and professional applications.

This tool analyzes voice recordings and builds a digital model. Users input text, and the system generates speech that mirrors his tone and style. This unlocks new possibilities in voiceovers, entertainment, and more.

As the technology develops, responsible use becomes essential. Legal and ethical considerations help prevent misuse or misrepresentation.

Key Takeaways

- AI replicates James Earl Jones's voice using digital models based on recordings.

- It's useful for creative projects like voiceovers and media production.

- Users must follow legal and ethical guidelines when using the AI voice.

What Is a James Earl Jones AI Voice Generator?

A James Earl Jones AI voice generator digitally recreates the actor’s unique voice using advanced technology. It replicates his deep tone and clarity and serves multiple purposes, from entertainment to communication.

Definition and Key Features

This software mimics his voice using artificial intelligence. It analyzes speech recordings to learn distinct voice traits such as pitch, speed, and tone.

Key features include:

- Natural and realistic voice cloning

- Adjustable speech settings

- Instant text-to-speech conversion

Once trained, it works without the actor’s input, enabling automated voice content that sounds authentic.

How AI Mimics James Earl Jones’s Voice

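The AI uses deep learning to study voice recordings. It splits them into short, overlapping fragments, typically a few tens of milliseconds long, and learns how his voice sounds within each fragment and how it changes from one fragment to the next.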

It emphasizes:

- Tone and vocal depth

- Rhythm and pauses

- Pronunciation and accent

The more high-quality audio the model trains on, the more accurately it can reproduce his style.
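The fragment-by-fragment analysis and the focus on rhythm and pauses can be made concrete with a small Python sketch. It assumes mono audio already decoded into a list of floats between -1.0 and 1.0, splits the signal into 25 ms frames taken every 10 ms (a common but not universal choice), and marks runs of low-energy frames as pauses. The function names and the 0.01 energy threshold are illustrative assumptions; an actual voice model learns timing from much richer features.

```python
import math


def frame_signal(samples, sample_rate, frame_ms=25.0, hop_ms=10.0):
    """Split a mono signal into overlapping fixed-length frames."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop)]


def rms(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def find_pauses(samples, sample_rate, threshold=0.01, hop_ms=10.0):
    """Return approximate (start_s, end_s) spans whose frame energy stays below threshold."""
    frames = frame_signal(samples, sample_rate, hop_ms=hop_ms)
    hop_s = hop_ms / 1000
    pauses, start = [], None
    for i, frame in enumerate(frames):
        quiet = rms(frame) < threshold
        if quiet and start is None:
            start = i * hop_s  # first quiet frame opens a pause
        elif not quiet and start is not None:
            pauses.append((start, i * hop_s))  # first loud frame closes it
            start = None
    if start is not None:  # the signal ended during a pause
        pauses.append((start, len(frames) * hop_s))
    return pauses
```

Calling `find_pauses(samples, 16000)` returns a list of approximate `(start, end)` times in seconds; the stretches between them are speech.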

Popular Use Cases

This AI voice can be used for:

- Movie trailers and promotions

- Video games requiring iconic character voices

- Dramatic audiobook narration

- Virtual assistants or public announcements

Creators value its professional and recognizable tone.

How James Earl Jones AI Voice Technology Works

The technology builds a digital version of James Earl Jones’s voice by analyzing recordings and mimicking speech characteristics.

AI Voice Cloning Techniques

Voice cloning captures core traits like pitch and intonation. Using this data, the system builds a model to reproduce his voice with high fidelity.
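Pitch is one trait that can be measured directly from a recording. The sketch below estimates the fundamental frequency of a single voiced frame with autocorrelation, a classic textbook technique: it finds the time shift at which the frame best matches a delayed copy of itself. It is not necessarily what any commercial voice generator does, and the 50 to 400 Hz search range is an assumption meant to cover typical speaking voices.

```python
import math


def estimate_pitch(frame, sample_rate, fmin=50.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) of a voiced frame via autocorrelation.

    Returns None if no positive correlation peak is found in the search range.
    """
    # Remove any DC offset so the correlation reflects the waveform's shape.
    mean = sum(frame) / len(frame)
    x = [s - mean for s in frame]
    # Lags (in samples) that correspond to the plausible pitch range.
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(x) - 1)
    best_lag, best_corr = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the frame and a copy of itself shifted by `lag`.
        corr = sum(x[i] * x[i + lag] for i in range(len(x) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else None
```

Because the raw correlation sums fewer products at longer lags, this version slightly favors shorter periods, which helps it avoid picking a pitch an octave too low. More robust pitch trackers, such as the YIN algorithm, refine the same idea.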

Text-to-speech synthesis converts written input into audio matching his vocal style.
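Before synthesis, TTS pipelines typically normalize the input text so that numbers and symbols are read out as words. This deliberately tiny Python sketch only spells out standalone one- and two-digit numbers; the function names are hypothetical, and a real front end would also handle years, decimals, dates, currency, and abbreviations.

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]


def number_to_words(n):
    """Spell out an integer from 0 to 99."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]}-{ONES[ones]}"


def normalize_text(text):
    """Replace standalone 1- or 2-digit numbers with words; longer numbers are left as-is."""
    return re.sub(r"\b\d{1,2}\b", lambda m: number_to_words(int(m.group())), text)


print(normalize_text("Chapter 7 begins on page 42."))
# Chapter seven begins on page forty-two.
```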

Neural networks enhance realism by learning from actual voice data rather than static rules.

Deep Learning and Neural Networks

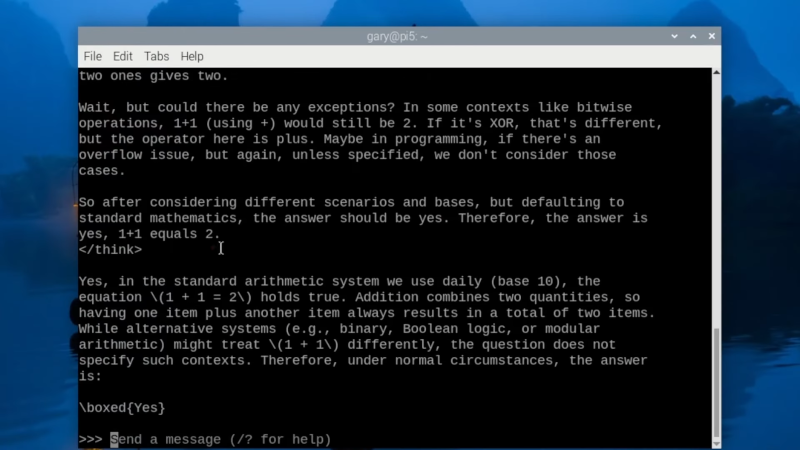

Deep learning uses multi-layer neural networks to analyze voice data. The layers are loosely inspired by biological neurons: early layers pick up basic acoustic patterns, and deeper layers capture subtler qualities of speech.

The AI trains on large amounts of recorded speech, learning pronunciation, inflection, and timing so that it can produce a natural-sounding voice.
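Many neural TTS systems, Tacotron 2 being a well-known example, do not generate the waveform directly. They predict a mel spectrogram, a time-frequency picture of the audio whose frequency axis follows the mel scale, and a separate vocoder turns it into sound. The mel scale spaces low frequencies closely and high frequencies widely, roughly matching human pitch perception. The sketch below uses the widely used HTK-style conversion formula; 80 bands up to 8 kHz is a common configuration but an assumption here, and real feature extraction also involves windowing and triangular filter weights not shown.

```python
import math


def hz_to_mel(f_hz):
    """Convert a frequency in Hz to mels (HTK-style formula)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_centers(n_bands, f_min=0.0, f_max=8000.0):
    """Centre frequencies (Hz) of n_bands filters spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_bands + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_bands)]


centers = mel_band_centers(80)
# Low bands sit close together; high bands are spread much further apart.
print(round(centers[1] - centers[0]), round(centers[-1] - centers[-2]))
```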

Accuracy improves as the model is trained longer or fine-tuned on additional data. Once deployed, the model stays the same until it is retrained.

Data Requirements

Effective cloning needs clean, varied audio. This includes different speech styles and emotional tones to fully capture the voice.

Clear recordings with minimal noise help the AI detect patterns precisely.

Each clip also needs an accurate transcript aligned with the audio, so the model can learn which sounds correspond to which words.

Limited or poor data can result in unnatural output or missing nuances.
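A simple pre-flight check can catch many of these data problems before training starts. The sketch below, written with Python's standard `wave` module, assumes a manifest of `(wav_path, transcript)` pairs and flags missing transcripts, unreadable files, unexpected sample rates, non-mono files, and clips that are too short or too long. The 22,050 Hz rate and the 1 to 15 second range are illustrative assumptions (22,050 Hz is common in public TTS datasets such as LJSpeech), not requirements of any particular tool.

```python
import wave


def validate_manifest(entries, expected_rate=22050, min_seconds=1.0, max_seconds=15.0):
    """Check (wav_path, transcript) pairs and return a list of problem descriptions.

    The default rate and duration limits are illustrative assumptions.
    """
    problems = []
    for path, transcript in entries:
        if not transcript.strip():
            problems.append(f"{path}: missing transcript")
        try:
            with wave.open(path, "rb") as wav:
                rate = wav.getframerate()
                channels = wav.getnchannels()
                duration = wav.getnframes() / rate
        except (wave.Error, EOFError, OSError) as exc:
            problems.append(f"{path}: unreadable ({exc})")
            continue
        if rate != expected_rate:
            problems.append(f"{path}: sample rate {rate} Hz, expected {expected_rate} Hz")
        if channels != 1:
            problems.append(f"{path}: {channels} channels, expected mono")
        if not min_seconds <= duration <= max_seconds:
            problems.append(
                f"{path}: duration {duration:.2f} s outside {min_seconds}-{max_seconds} s")
    return problems
```

An empty result means every clip passed; otherwise each string names the file and the issue, which makes it easy to fix or drop bad clips before training.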

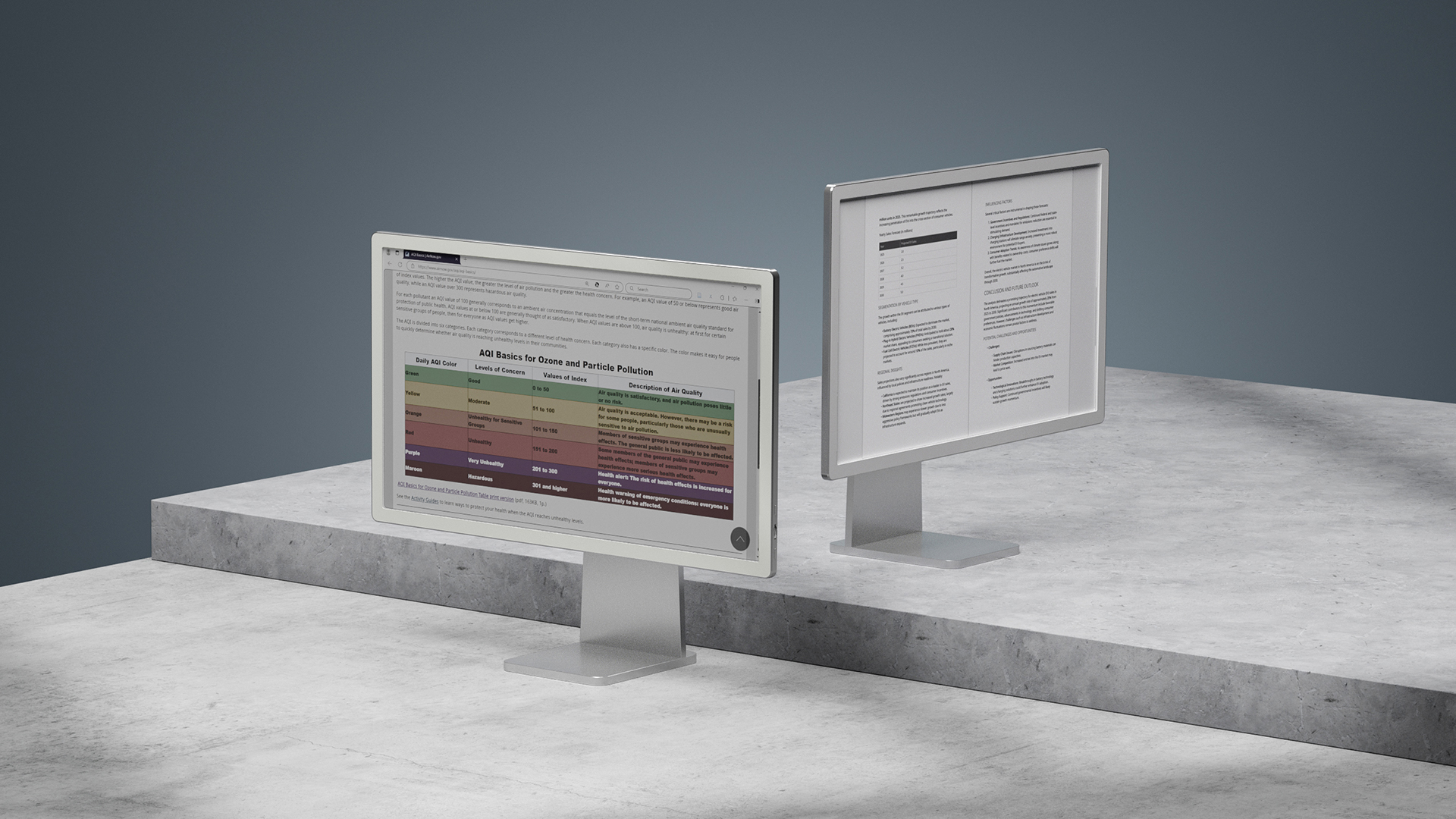

Choosing the Right James Earl Jones AI Voice Generator

To choose the right tool, consider audio quality, feature set, and ease of use.

Evaluating Audio Quality

The voice should sound smooth, natural, and match Jones’s distinct tone. Minimal background noise is essential.

Support for multiple export formats, such as MP3 and WAV, is useful. Adjustable pitch and speed let you fine-tune the delivery so it sounds more natural.

Testing audio samples helps assess voice authenticity and emotional delivery.
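Some of these checks can be automated before you listen closely. This sketch, assuming mono samples already decoded to floats in [-1.0, 1.0], reports the peak level, the share of clipped samples, and a crude noise-floor estimate taken from the quietest 10% of 20 ms frames. The metric names and thresholds are illustrative assumptions; none of them replaces listening for tone and emotional delivery.

```python
import math


def to_dbfs(level):
    """Convert a linear level (1.0 = full scale) to dBFS."""
    return 20.0 * math.log10(level) if level > 0 else float("-inf")


def quality_report(samples, sample_rate, frame_ms=20.0):
    """Rough quality metrics for mono float samples in [-1.0, 1.0]."""
    n = int(sample_rate * frame_ms / 1000)
    if len(samples) < n:
        raise ValueError("clip is shorter than one analysis frame")
    peak = max(abs(s) for s in samples)
    # Samples at (or within rounding of) full scale are likely clipped.
    clipped = sum(1 for s in samples if abs(s) >= 0.999) / len(samples)
    # RMS level of each non-overlapping frame, quietest first.
    levels = sorted(
        math.sqrt(sum(x * x for x in samples[i:i + n]) / n)
        for i in range(0, len(samples) - n + 1, n)
    )
    # Average of the quietest 10% of frames: a crude background-noise estimate.
    quiet = levels[:max(1, len(levels) // 10)]
    return {
        "peak_dbfs": to_dbfs(peak),
        "clipped_ratio": clipped,
        "noise_floor_dbfs": to_dbfs(sum(quiet) / len(quiet)),
    }
```

A clean sample would typically show a clipped ratio near zero and a noise floor far below the peak level; how far below is acceptable is a judgment call for your project.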

Key Features to Consider

Look for accurate text-to-speech, faithful voice style replication, and tools for fine-tuning pauses or volume.
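Many speech engines let you control pauses, rate, pitch, and volume through SSML, the W3C Speech Synthesis Markup Language. Whether a given voice generator accepts SSML, and which attributes it honors, varies by product, so treat that as an assumption to check in its documentation. The helper below is a hypothetical sketch that wraps text in a `<prosody>` element and turns integers into `<break>` pauses, escaping characters such as `&` so the markup stays valid.

```python
from xml.sax.saxutils import escape


def to_ssml(segments, rate="medium", pitch="default", volume="default"):
    """Build an SSML 1.1 document from text segments and pauses.

    segments: a list mixing str (text to speak) and int (pause length in ms).
    rate, pitch, volume: SSML prosody values such as "slow", "low", or "loud".
    """
    parts = []
    for segment in segments:
        if isinstance(segment, int):
            parts.append(f'<break time="{segment}ms"/>')
        else:
            # Escape &, <, > so arbitrary script text stays valid XML.
            parts.append(escape(segment))
    body = " ".join(parts)
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        'xml:lang="en-US">'
        f'<prosody rate="{rate}" pitch="{pitch}" volume="{volume}">{body}</prosody>'
        "</speak>"
    )


print(to_ssml(["Welcome, listener.", 600, "Let us begin."], rate="slow", pitch="low"))
```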

Additional features include multi-language support, API access, and commercial licensing.

Privacy and security of user data are crucial. The best tools clearly state their data protection policies.

Ease of Use and Accessibility

User-friendly interfaces reduce learning curves. Helpful guides and support add value.

Device compatibility also matters: tools should work on both desktop and mobile devices.

Clear pricing and trial options offer transparency and flexibility for different budgets.
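One way to weigh audio quality, features, and ease of use against each other is a simple weighted score. The weights and 1-to-5 ratings below are hypothetical placeholders, and "Tool A" and "Tool B" are not real products; the point is the method, with ratings filled in from your own listening tests and trials.

```python
def score_tool(ratings, weights):
    """Weighted average of 1-5 ratings; weights are normalized, so they need not sum to 1."""
    total = sum(weights.values())
    return sum(ratings[criterion] * w for criterion, w in weights.items()) / total


def rank_tools(candidates, weights):
    """Return (name, score) pairs sorted from best to worst."""
    scored = [(name, score_tool(r, weights)) for name, r in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights and ratings; replace them with your own results.
weights = {"audio_quality": 0.5, "features": 0.3, "ease_of_use": 0.2}
candidates = {
    "Tool A": {"audio_quality": 5, "features": 3, "ease_of_use": 2},
    "Tool B": {"audio_quality": 4, "features": 4, "ease_of_use": 5},
}
for name, score in rank_tools(candidates, weights):
    print(f"{name}: {score:.2f}")
# Tool B: 4.20
# Tool A: 3.80
```

Here the tool with the best raw audio still loses overall because it rates poorly on ease of use; shifting more weight onto audio quality would reverse the ranking.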

Top Platforms for James Earl Jones AI Voice Synthesis

Different platforms provide voice generation through commercial software, open-source tools, or online services.

Commercial Software Solutions

Programs like Resemble AI and Descript offer high-quality voice synthesis with features like emotion control and scripting.

They usually require a subscription or purchase and provide professional results across multiple audio formats.

Pre-trained models and the option to upload your own recordings let creators customize the output.

Open Source Tools

Projects like Mozilla TTS and Coqui TTS (which began as a fork of Mozilla TTS) offer free tools for technically skilled users.

They allow full control over training and customization but may require time to set up and optimize.

Voice quality depends heavily on the training data and the model used, so these tools best suit developers and hobbyists who are comfortable experimenting.

Online Voice Generator Services

Web-based tools like Voxdazz and Lovo offer quick voice generation with pre-built models.

No technical skills are needed. These platforms often run on subscription or pay-per-use.

They provide fast previews and easy exports but may lack deep customization.
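Whether a subscription or a pay-per-use plan is cheaper comes down to simple arithmetic: the subscription wins once monthly usage exceeds the fee divided by the per-minute price. The prices below are hypothetical, and the sketch assumes the subscription has no usage cap, which real plans often do.

```python
def monthly_costs(minutes, subscription_fee, price_per_minute):
    """Compare a flat monthly subscription with pay-per-use pricing.

    Assumes the subscription has no usage cap.
    """
    pay_per_use = minutes * price_per_minute
    return {"subscription": subscription_fee, "pay_per_use": pay_per_use}


def break_even_minutes(subscription_fee, price_per_minute):
    """Monthly minutes above which the subscription becomes the cheaper option."""
    return subscription_fee / price_per_minute


# Hypothetical prices for illustration only.
fee, per_minute = 30.0, 0.50
print(break_even_minutes(fee, per_minute))  # 60.0
print(monthly_costs(45, fee, per_minute))   # {'subscription': 30.0, 'pay_per_use': 22.5}
```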

Legal and Ethical Considerations

Using a James Earl Jones AI voice is subject to rules about ownership and responsible use.

Copyright and Voice Ownership

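His voice is protected mainly by right-of-publicity (personality rights) laws, and the recordings used to train a model are typically protected by copyright. Unauthorized use may therefore violate personality rights, copyright, or both.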

Users must obtain proper licenses and check each platform's terms before distributing content.

Even synthetic voices based on real people require respect for their rights and consent.

Ethical Use Cases

Ethical use involves avoiding misleading, harmful, or exploitative content.

Misrepresentation or impersonation without disclosure is unethical. Transparency is key.

Proper credit and respectful use maintain public trust and protect his legacy.

Compliance with Regulations

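Laws vary by country. In the U.S., the main issues are usually copyright in the source recordings and right-of-publicity laws, which differ from state to state. In the EU, the GDPR applies to personal data, which can include voice recordings.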

Some jurisdictions and platforms require disclosure when synthetic voices are used in public content.

Staying informed and compliant helps avoid legal risks and ensures responsible innovation.

Creative and Professional Applications

The generator supports a wide range of creative and commercial uses by offering a familiar and authoritative voice.

Entertainment and Media Productions

Studios use the AI voice for narration and character dialogue without needing the actor on set. It saves time and maintains consistency.

It also allows quick updates or changes in audio content.

Marketing and Advertising

The AI voice can add authority to ads and podcasts, and its rich tone can strengthen brand impact and listener trust.

It works across multiple formats and can boost engagement.

Educational Content

The voice aids in audiobooks and educational materials with clear and consistent delivery.

It engages learners while saving instructors time on voice recordings.

Best Practices for Using AI-Generated James Earl Jones Voices

Responsible use ensures the voice remains respectful, accurate, and law-compliant.

Maintaining Authenticity

Match the AI voice’s style and pronunciation to Jones’s real speech. Avoid unnatural edits.

Scripts should match his tone, which is measured and serious.

Ensure clear, high-quality audio to preserve realism.

Avoiding Misuse

Don’t use the voice for false claims, scams, or impersonation.

Avoid suggesting that Jones endorsed content unless his estate or the relevant rights holders have explicitly approved it.

Respect others’ rights and use the voice responsibly.

Attribution Guidelines

Clearly state that the voice is AI-generated, for example:

"Voice generated using an AI model based on James Earl Jones."

Proper credit prevents confusion and shows respect for the original source.
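If you distribute audio files, one way to keep the disclosure attached to the content is a small metadata file saved next to the audio. The sketch below is a hypothetical convention (the field names and the `.json` sidecar layout are assumptions, not an industry standard) that records the disclosure text, the generator used, and a license reference.

```python
import json
from datetime import date

DISCLOSURE = "Voice generated using an AI model based on James Earl Jones."


def write_disclosure_sidecar(audio_path, generator, license_ref, created=None):
    """Write <audio_path>.json stating that the audio is AI-generated.

    The field names are a hypothetical convention, not an established standard.
    """
    record = {
        "audio_file": audio_path,
        "ai_generated": True,
        "disclosure": DISCLOSURE,
        "generator": generator,
        "license": license_ref,
        "created": (created or date.today()).isoformat(),
    }
    sidecar_path = audio_path + ".json"
    with open(sidecar_path, "w", encoding="utf-8") as fh:
        json.dump(record, fh, indent=2)
    return sidecar_path
```

For example, `write_disclosure_sidecar("promo.wav", "example-tool", "license-123")` would create `promo.wav.json` alongside the audio; the tool name and license reference here are placeholders.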

Review licensing terms when using the voice commercially.

Future Trends in AI Voice Generation

Voice AI is advancing toward more personalization, expressiveness, and integration.

Advancements in Personalization

Users are likely to choose voices based on style or emotion, and AI will adapt tone and speed to the context, improving relevance and realism.

Feedback-based learning will make interactions more personal and effective.

Improved Naturalness and Expressiveness

AI will better replicate human-like speech, emotion, and timing. This leads to more natural-sounding voices suitable for long conversations.

Sound glitches will decrease, enhancing listening comfort.

Integration with Other AI Technologies

AI voices will integrate with chatbots, translation tools, and facial recognition for smarter interactions.

Real-time multilingual support and behavior-driven content delivery will improve engagement across industries.
