Best Practices for Managing Cloud Configurations at Scale

When most teams talk about cloud deployment, they think in terms of getting code live. But what happens after that moment (managing configurations, secrets, environment variables, and dependencies) is where real DevOps maturity is tested.

As someone who’s spent years working with cloud-native applications and AI-driven deployment tools, I’ve seen firsthand how poorly managed configurations can lead to instability, security risks, and engineering fatigue.

This guide breaks down the best practices for managing cloud configurations at scale in a world where cloud infrastructure is complex, dynamic, and increasingly AI-powered.

What is cloud configuration management?

Cloud configuration management is the process of organizing, versioning, and applying the settings that determine how cloud infrastructure, and the applications running on it, behave.

These include:

- Application settings (env variables, feature flags)

- Infrastructure settings (autoscaling, networking, storage)

- Secrets and credentials (tokens, keys, passwords)

- Service dependencies (databases, APIs, queues)

At scale, managing these consistently across dev, staging, and production becomes critical, especially as teams shift from static VMs to containerized, distributed architectures.

The risk of poor configuration management

A single misconfigured variable can:

- Crash production environments

- Leak credentials

- Cause unexpected behavior across regions

- Inflate cloud costs due to wrong resource allocations

Worse, many of these errors only surface after deployment, which makes them hard to catch early.

Best practices for managing cloud configurations at scale

1. Centralize configuration as code (CaC)

Treat all configurations the same way you treat code:

- Use YAML/JSON to define configs

- Store in version-controlled repos

- Use tools like HashiCorp Consul, AWS Systems Manager (SSM) Parameter Store, or AI-based managers

This enables traceability, rollback, and review cycles before pushing changes live.
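As a minimal sketch of why versioned, reviewable configs help, the Python below (standard library only; `canonical_json` and `config_fingerprint` are illustrative names, not part of any tool) serializes a config with sorted keys so identical settings always produce identical text and diffs, then derives a short content hash that a deploy log could record as the config version.

```python
import hashlib
import json


def canonical_json(config: dict) -> str:
    """Serialize a config with sorted keys and fixed separators.

    The same settings always yield the same text, so version-control
    diffs show only real changes, never key-order noise.
    """
    return json.dumps(config, sort_keys=True, separators=(",", ":"))


def config_fingerprint(config: dict) -> str:
    """Return a short SHA-256 fingerprint of the canonical config text."""
    digest = hashlib.sha256(canonical_json(config).encode("utf-8")).hexdigest()
    return digest[:12]


if __name__ == "__main__":
    a = {"replicas": 3, "log_level": "info"}
    b = {"log_level": "info", "replicas": 3}  # same settings, different key order
    print(config_fingerprint(a) == config_fingerprint(b))  # True
```

Recording the fingerprint alongside each deployment makes it easy to tell whether two environments, or two releases, ran with exactly the same settings.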

2. Segregate environments explicitly

Never hardcode values. Use environment-based segmentation:

- `config.production.json`

- `config.dev.yaml`

- Separate secrets for each stage

This helps avoid accidentally leaking production values (such as credentials) into dev environments, or dev values into production.
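One common way to apply this, sketched here under assumptions: a shared base config plus small per-environment overrides, merged at startup. The settings, the `APP_ENV` variable name, and the inlined dictionaries (standing in for files like `config.dev.yaml` and `config.production.json`) are hypothetical. In this sketch an unknown environment name raises an error instead of silently falling back to another stage.

```python
import copy
import os

# Hypothetical settings: a shared base plus per-environment overrides.
# In practice these would be loaded from per-environment files;
# they are inlined here to keep the example self-contained.
BASE = {"log_level": "info", "db": {"pool_size": 5, "host": "localhost"}}
OVERRIDES = {
    "dev": {"log_level": "debug"},
    "production": {"db": {"pool_size": 20, "host": "db.internal"}},
}


def deep_merge(base: dict, override: dict) -> dict:
    """Return a new dict where override's values win, merging nested dicts."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def load_config(env: str) -> dict:
    """Build the config for one environment; unknown names fail loudly."""
    if env not in OVERRIDES:
        raise ValueError(f"unknown environment: {env!r}")
    return deep_merge(BASE, OVERRIDES[env])


if __name__ == "__main__":
    # APP_ENV is an assumed variable name; default to dev, never production.
    env = os.environ.get("APP_ENV", "dev")
    print(load_config(env))
```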

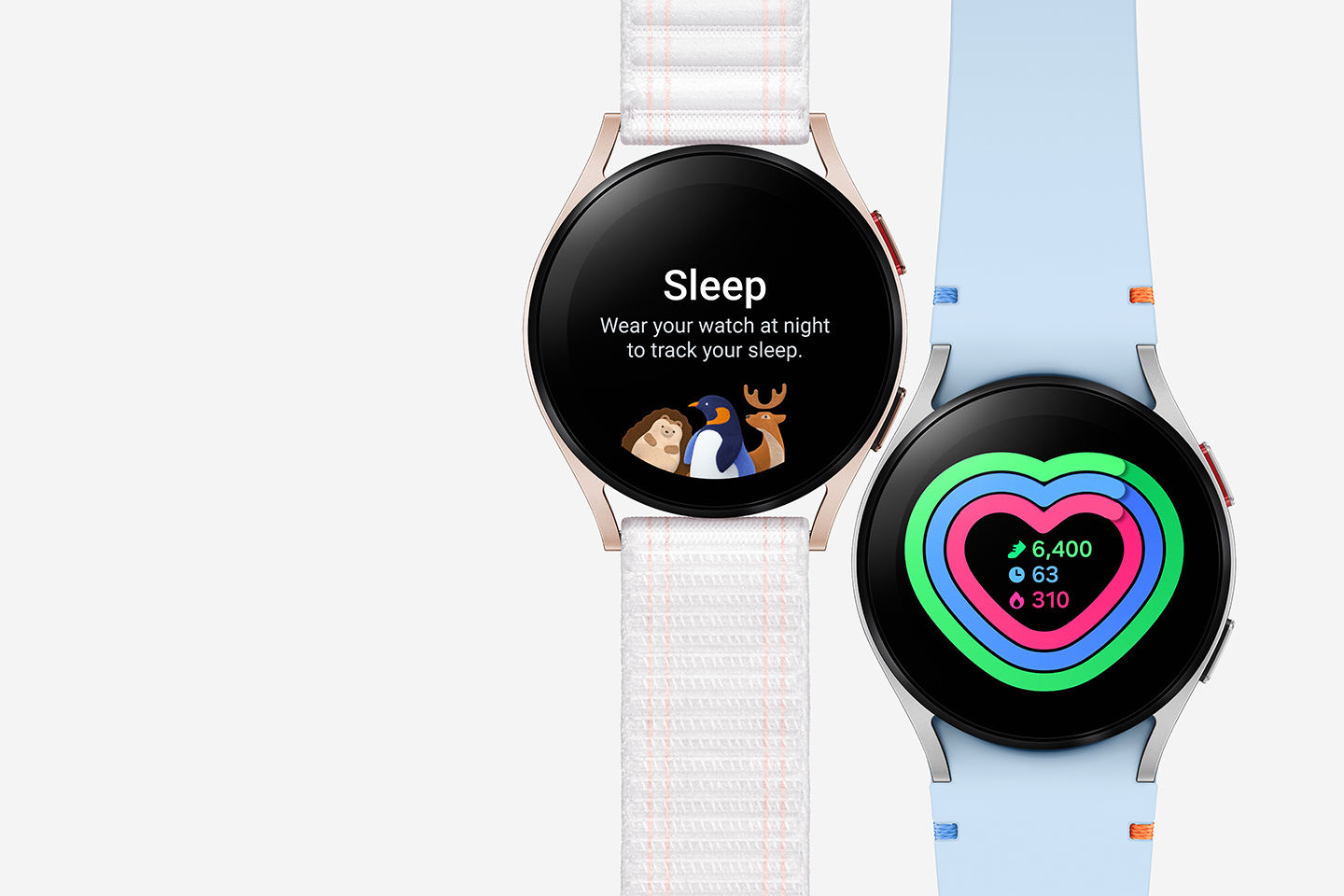

3. Leverage AI for dynamic configuration decisions

Modern AI platforms can now auto-tune configurations based on:

- Historical performance

- Load patterns

- Resource utilization trends

Tools like Kuberns (an AI deployment manager) auto-adjust config parameters such as CPU limits, instance types, and even logging thresholds based on real usage rather than static guesses.
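The article does not describe how Kuberns or similar tools compute their adjustments, so the following is only a simple, transparent stand-in for the idea: recommend a CPU limit from historical usage by taking the 95th percentile (nearest-rank method) of observed samples and adding headroom. The sample data, the 20% headroom, and the 100-millicore floor are assumptions chosen for illustration.

```python
def percentile_nearest_rank(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile: smallest sample with at least pct% of data at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = -(-pct * len(ordered) // 100)  # integer ceil(pct/100 * n)
    return ordered[max(rank, 1) - 1]


def recommend_cpu_limit(samples_millicores: list[int], pct: int = 95,
                        headroom_pct: int = 20, floor_millicores: int = 100) -> int:
    """Suggest a CPU limit: observed high-percentile usage plus headroom, never below a floor."""
    observed = percentile_nearest_rank(samples_millicores, pct)
    with_headroom = -(-observed * (100 + headroom_pct) // 100)  # integer ceiling division
    return max(floor_millicores, with_headroom)


if __name__ == "__main__":
    # Hypothetical per-minute CPU usage samples, in millicores.
    usage = [220, 250, 310, 290, 480, 300, 275, 260, 330, 305]
    print(recommend_cpu_limit(usage))  # 576
```

Using a high percentile instead of the peak keeps a single spike from inflating the limit, while the headroom leaves room for normal variation.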

4. Use encrypted secrets management

Store sensitive variables (API keys, DB creds) using:

- AWS Secrets Manager

- HashiCorp Vault

- Azure Key Vault

Never store secrets in plain config files, repos, or Docker images.

Bonus tip: Rotate secrets automatically where supported.
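A small habit that complements a secrets manager is keeping secret values out of logs and stack traces once they reach the application. This Python sketch assumes the secret is injected into the process environment at runtime (for example by the orchestrator pulling it from AWS Secrets Manager, HashiCorp Vault, or Azure Key Vault) rather than baked into files or images. The `Secret` class and `secret_from_env` helper are hypothetical names. The sketch fails fast when a secret is missing and redacts the value whenever the object is printed.

```python
import os


class Secret:
    """Holds a sensitive value and redacts it whenever it is printed or logged."""

    def __init__(self, name: str, value: str):
        self.name = name
        self._value = value

    def reveal(self) -> str:
        """Return the raw value; call this only at the point of use."""
        return self._value

    def __repr__(self) -> str:
        return f"Secret(name={self.name!r}, value='***')"

    __str__ = __repr__


def secret_from_env(name: str) -> Secret:
    """Read a secret injected into the environment at runtime; fail fast if it is absent."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"required secret {name!r} is not set")
    return Secret(name, value)
```

Logging the result of `secret_from_env("DB_PASSWORD")` (a hypothetical variable name) would print `Secret(name='DB_PASSWORD', value='***')` rather than the password itself.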

5. Validate configurations before deployment

Use automated tools to:

- Lint config files (YAML, JSON syntax)

- Enforce policy rules (e.g., no open S3 buckets)

- Dry-run config changes in a test environment

This can be integrated into your CI/CD pipeline to catch issues early.
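As a minimal sketch of such a check, this Python script parses JSON configs, reports syntax errors with line and column, applies one example policy rule, and exits non-zero on any problem so a CI job fails. The `buckets`/`public` schema is hypothetical; a real pipeline would apply its policy tooling to your actual infrastructure definitions.

```python
import json
import sys


def validate_config(text: str) -> list[str]:
    """Return a list of problems found in one JSON config; empty means it passed."""
    # Lint step: reject malformed JSON with a precise location.
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"syntax error at line {exc.lineno}, column {exc.colno}: {exc.msg}"]
    if not isinstance(config, dict):
        return ["top-level config must be a JSON object"]

    # Policy step: hypothetical rule, no bucket may be publicly readable.
    problems = []
    for bucket in config.get("buckets", []):
        if bucket.get("public", False):
            problems.append(f"policy violation: bucket {bucket.get('name', '?')!r} is public")
    return problems


if __name__ == "__main__":
    # CI usage: python validate_config.py config/*.json
    failed = False
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as fh:
            for problem in validate_config(fh.read()):
                print(f"{path}: {problem}")
                failed = True
    if failed:
        raise SystemExit(1)  # non-zero exit fails the pipeline step
```

The script name in the usage comment is illustrative; any CI system that treats a non-zero exit code as failure can run it as a pre-deploy step.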

6. Implement configuration rollbacks and audit logs

Changes in cloud configs should be:

- Logged with timestamps and user IDs

- Easily revertible (like a `git revert` for config)

- Tracked via diff tools to see what changed and why

This protects your system from human error and lets teams move fast with less risk.
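The three properties above can be sketched together in Python for flat key/value configs (nested settings would need flattening first). `diff_configs` records what changed, `audit_entry` wraps the change set with a UTC timestamp and user ID as one JSON line, and `revert` undoes a recorded change set much like `git revert`. All names and the log format are illustrative assumptions, not a specific tool’s API.

```python
import json
from datetime import datetime, timezone


def diff_configs(old: dict, new: dict) -> list[dict]:
    """List every key that was added, removed, or changed (flat configs only)."""
    changes = []
    for key in sorted(old.keys() | new.keys()):
        if key not in new:
            changes.append({"key": key, "op": "removed", "old": old[key]})
        elif key not in old:
            changes.append({"key": key, "op": "added", "new": new[key]})
        elif old[key] != new[key]:
            changes.append({"key": key, "op": "changed", "old": old[key], "new": new[key]})
    return changes


def audit_entry(user_id: str, changes: list[dict]) -> str:
    """One JSON line for an append-only audit log: who, when, and what changed."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "changes": changes,
    })


def revert(current: dict, changes: list[dict]) -> dict:
    """Undo a recorded change set, returning a new config dict."""
    restored = dict(current)
    for change in changes:
        if change["op"] == "added":
            restored.pop(change["key"], None)
        else:  # "removed" or "changed": put the old value back
            restored[change["key"]] = change["old"]
    return restored
```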

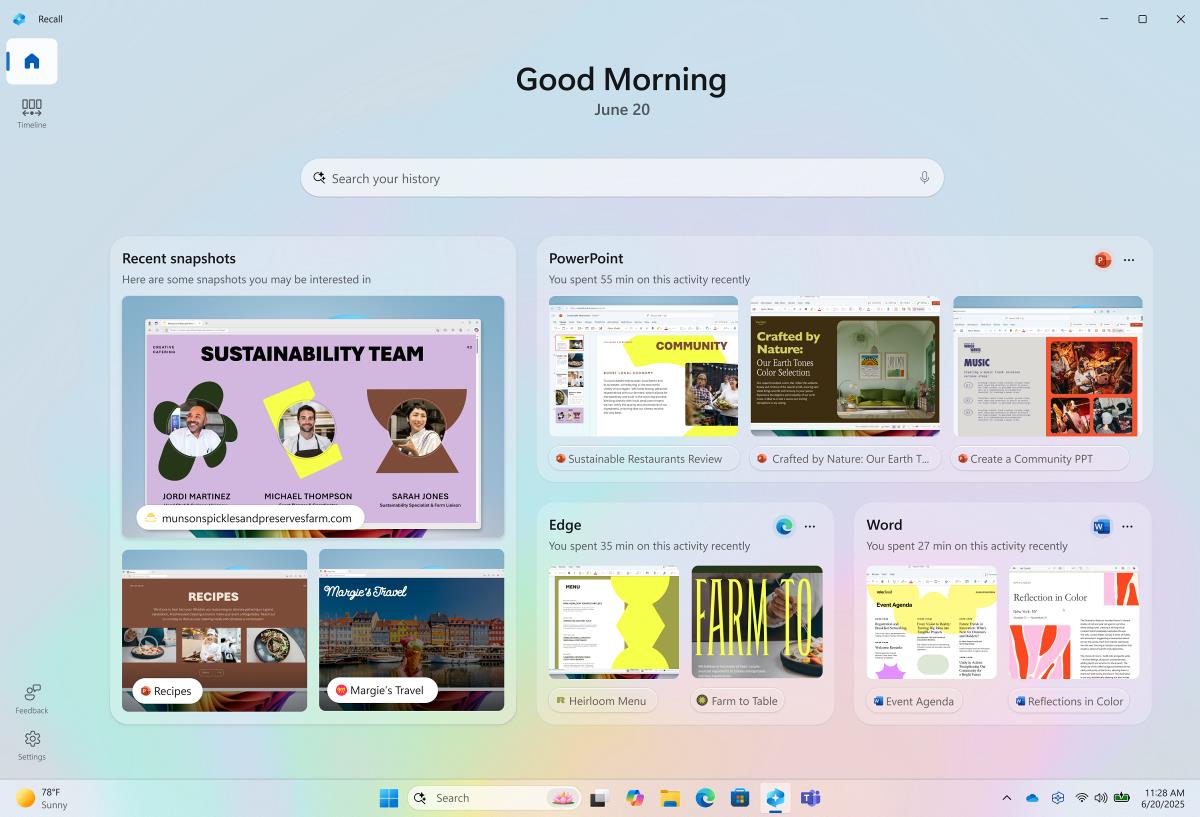

Scaling smartly: from static to autonomous config management

As you scale, manual config tuning won’t hold up. This is where AI-powered cloud deployment tools shine.

They can:

- Analyze app behavior in real time

- Adjust resource configs autonomously

- Trigger auto-deploys with improved settings

The goal isn’t just configuration management; it’s configuration optimization at scale.
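To make "adjust resource configs autonomously" concrete, here is a hedged sketch of one well-documented rule: the replica formula used by the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current * observed / target)`, with a tolerance band so small fluctuations do not trigger a redeploy. The min/max bounds and the 10% tolerance are illustrative defaults, and AI-driven tools may use very different models.

```python
def propose_replicas(current: int, observed_util: int, target_util: int,
                     min_replicas: int = 2, max_replicas: int = 20,
                     tolerance_pct: int = 10) -> int:
    """Suggest a replica count from observed vs. target utilization (both in percent)."""
    if current < 1 or target_util <= 0:
        raise ValueError("current must be >= 1 and target_util > 0")
    # Skip tiny adjustments: observed/target within 1 +/- tolerance means "close enough".
    if abs(observed_util - target_util) * 100 <= tolerance_pct * target_util:
        return current
    desired = -(-current * observed_util // target_util)  # ceil(current * observed / target)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas running at 90% utilization against a 60% target.
    print(propose_replicas(4, 90, 60))  # 6
```

A deployment tool would only trigger a redeploy when the proposed value differs from the current one, which the tolerance band keeps from happening on every minor fluctuation.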

Where to go from here

If your team is scaling fast and your configurations are still being managed through static files or scattered dashboards, you’re likely closer to a breaking point than you think.

Start by:

- Auditing your current config process across environments

- Automating validation and policy checks

- Exploring AI-based tools that adapt configurations in real time
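For the first step, auditing across environments, a quick check is to look for configuration drift: settings defined in some environments but missing from others. This Python sketch assumes each environment’s config has already been loaded into a flat dictionary; `find_drift` is an illustrative name, and the environment names and keys in the example are made up.

```python
def find_drift(envs: dict[str, dict]) -> dict[str, list[str]]:
    """Map each config key to the environments that are missing it.

    Keys defined everywhere are omitted, so an empty result means every
    environment declares the same set of settings (values may still differ).
    """
    all_keys = set().union(*(cfg.keys() for cfg in envs.values()))
    drift = {}
    for key in sorted(all_keys):
        missing = sorted(name for name, cfg in envs.items() if key not in cfg)
        if missing:
            drift[key] = missing
    return drift


if __name__ == "__main__":
    envs = {
        "dev": {"log_level": "debug", "debug_toolbar": True},
        "staging": {"log_level": "info", "cache_ttl": 60},
        "production": {"log_level": "warning", "cache_ttl": 300},
    }
    print(find_drift(envs))
    # {'cache_ttl': ['dev'], 'debug_toolbar': ['production', 'staging']}
```

This only compares key sets; comparing values as well is usually a separate, reviewed check, since many settings are meant to differ between environments.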

As your infrastructure grows, the way you manage configurations should evolve too: from reactive scripts to intelligent, self-correcting systems.

The future of cloud ops isn’t about working harder; it’s about working with smarter systems that do the heavy lifting after deployment, too.
