AI Agents with MCP: Practical Takeaways from n8n and GitHub Copilot


Key Takeaways for Developers

✅ Successful Approaches:

- Breaking down tasks into focused, single operations

- Maintaining control over the workflow instead of letting AI decide

- Using AI for specific steps rather than complex control flows

- Version controlling AI-assisted content

❌ Common Pitfalls:

- Letting AI handle long-running operations (leads to timeouts and context loss)

- Assuming consistent behavior between different AI models and platforms

- Letting AI figure out control flow independently

- Making AI think about too many things at once

AI Agents - Insights and Observations

The more I work with AI, the better I can tell what is generic and predictable from what is an original insight. There is also a third category: technical challenges. The expectation is that as the technology matures, technical issues will decrease and AI will handle more of the generic work, so the work that remains is original thinking and insight. That is the optimistic outlook. It also means you will actually have to think a lot harder in your knowledge work.

If you let an AI agent take a lot of actions, like reorganizing a filesystem, it goes through many slow repetitions and eventually runs into timeouts. It also starts to forget what it is doing while controlling a browser. For example, with Playwright it tried to click links from the previous page, which were stale and no longer clickable, whereas earlier in the same run it had remembered to navigate back before clicking.

Let it do one thing at a time to maintain reliability.

If you know in which order actions should be performed, you don't need non-deterministic control flow to handle the task.

Agentic AI and Copilot Chat edits in VS Code remove the copy-paste steps from the development process.

Speed matters for agents because they run many steps in sequence. This could especially benefit Gemini, since Google's specialized hardware (TPUs) can make it a lot faster than other models.

If you know the control flow (i.e., what the agent should do), don't let the AI figure this out on its own. Instead, program the flow yourself and use AI function calling or something like Instructor to do the language processing. Letting the AI figure out the control flow is slow and only makes sense if the flow depends on intelligent decisions. It feels like you lose some intelligence by letting the AI think about too many things at once. The more focused you can make your task, the better output you can expect.
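A minimal sketch of programming the flow yourself and using the model only for one narrow language step. It imitates, with the standard library only, the idea behind Instructor (ask for JSON, validate it against a schema, feed validation errors back on retry); it is not Instructor's API. `call_llm` is a hypothetical stand-in for your model client, and the usage example passes a stub so the code runs offline.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Lead:
    name: str
    title: str
    is_decision_maker: bool


def parse_lead(raw: str) -> Lead:
    """Validate the model's JSON against the Lead schema; raise on any mismatch."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("is_decision_maker"), bool):
        raise ValueError("is_decision_maker must be a boolean")
    return Lead(name=str(data["name"]), title=str(data["title"]),
                is_decision_maker=data["is_decision_maker"])


def extract_lead(profile_text: str, call_llm: Callable[[str], str],
                 max_retries: int = 2) -> Lead:
    """One narrow LLM step inside a flow you control: text in, validated Lead out."""
    prompt = ("Return only JSON with keys name, title and is_decision_maker "
              f"(boolean) for this profile:\n{profile_text}")
    last_error: Exception = ValueError("no attempts made")
    for _ in range(max_retries + 1):
        raw = call_llm(prompt)
        try:
            return parse_lead(raw)
        except (ValueError, KeyError) as err:
            last_error = err
            # Feed the validation error back to the model before retrying.
            prompt += f"\nYour previous answer was invalid ({err}). Try again."
    raise RuntimeError(f"no valid output after {max_retries + 1} attempts") from last_error


if __name__ == "__main__":
    # Hypothetical stub instead of a real model: first answer is broken, second is valid.
    answers = iter(["not json",
                    '{"name": "Ada", "title": "Director of Engineering", "is_decision_maker": true}'])
    print(extract_lead("Ada, Director of Engineering", lambda prompt: next(answers)))
```

The surrounding code decides what happens next; the model never chooses the next step, it only turns text into a structured record.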

Examples where you know the control flow:

- Scraping LinkedIn profiles to gather leads

- A code review, where you first read the code and then add comments

- File organization, where you first scan the files, then categorize them, then move them

The key point is that in these cases, you already know the sequence of actions. The AI's role is to handle individual steps (like understanding file content or formatting text) rather than figuring out what to do next.
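Here is the file-organization case as a sketch with the control flow hard-coded: scan, then categorize, then move. Only `categorize` would involve a model (for example, to read file contents); in this sketch it is a hypothetical placeholder that sorts by extension, and the category names are made up. `organize` defaults to a dry run that only returns the plan.

```python
import shutil
from pathlib import Path


def scan(root: Path) -> list[Path]:
    """Step 1: list the regular files directly inside root (no recursion)."""
    return sorted(p for p in root.iterdir() if p.is_file())


def categorize(path: Path) -> str:
    """Step 2: the only step where an LLM would help, e.g. by reading the content.
    Hypothetical placeholder: categorize by file extension instead of calling a model."""
    mapping = {".pdf": "documents", ".docx": "documents", ".png": "images", ".jpg": "images"}
    return mapping.get(path.suffix.lower(), "other")


def organize(root: Path, dry_run: bool = True) -> list[tuple[Path, Path]]:
    """Step 3: move each file into root/<category>/. With dry_run=True, only return the plan."""
    plan = [(p, root / categorize(p) / p.name) for p in scan(root)]
    if not dry_run:
        for src, dst in plan:
            dst.parent.mkdir(exist_ok=True)
            shutil.move(str(src), str(dst))
    return plan
```

Calling `organize(Path("~/Downloads").expanduser())` returns the planned moves; pass `dry_run=False` to execute them. Because the sequence lives in code, the model never has to remember where it is in the process.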

Good use cases where you don't know the control flow in advance:

- Programming with AI where it's unclear which files need to be edited and how

- Search engines like Perplexity where the query path isn't predetermined

- Data analysis where patterns aren't known beforehand

- Customer support where each query requires a unique investigation path

The common factor in all these cases is that the input is a user query or problem where there is no clear algorithm that could be hardcoded. The AI needs to make decisions based on context and intermediate findings rather than following a predetermined path.
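For contrast, a minimal sketch of letting the model own the control flow: on each step it picks a tool and an argument based on everything found so far, until it decides to finish. `choose_action` stands in for an LLM call with function calling (hypothetical; the example uses a scripted stub), and `max_steps` is an assumed guard against runs that never converge.

```python
from typing import Callable

Tool = Callable[[str], str]
Policy = Callable[[list[str]], tuple[str, str]]


def run_agent(task: str, tools: dict[str, Tool], choose_action: Policy,
              max_steps: int = 10) -> str:
    """The model picks every next step; the code only executes and records it."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        name, arg = choose_action(history)  # in practice: an LLM call with function calling
        if name == "finish":
            return arg
        tool = tools.get(name)
        result = tool(arg) if tool else f"error: unknown tool {name!r}"
        # Intermediate findings go back into the history that drives the next decision.
        history.append(f"{name}({arg!r}) -> {result}")
    raise TimeoutError(f"agent did not finish within {max_steps} steps")


if __name__ == "__main__":
    # Hypothetical scripted policy standing in for the model.
    def policy(history: list[str]) -> tuple[str, str]:
        if len(history) == 1:
            return "search", "model context protocol"
        return "finish", history[-1]

    tools = {"search": lambda query: f"3 results for {query}"}
    print(run_agent("find the MCP spec", tools, policy))
```

Every iteration costs a model call, which is why this loop is slower than a hard-coded sequence and only pays off when the next step genuinely depends on what was found.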

Understanding n8n and GitHub Copilot Agents

What is n8n?

n8n is an open-source workflow automation tool that allows users to connect various applications, APIs, and data sources to automate tasks. It provides a visual interface where you can build workflows by connecting nodes that represent different services or actions. Think of it as an alternative to tools like Zapier or Make (formerly Integromat), but with more flexibility and the ability to self-host.

Key features of n8n include:

- Visual workflow builder

- 200+ pre-built integrations

- Ability to run custom JavaScript code

- Self-hosting option for complete control over your data

- Community-contributed nodes to extend functionality

What are GitHub Copilot Agents?

GitHub Copilot agents are an extension of GitHub Copilot that goes beyond code completion. These agents can:

- Have conversations with developers about code

- Execute tools and perform actions on behalf of users

- Interact with external services through MCP

- Help with complex development tasks

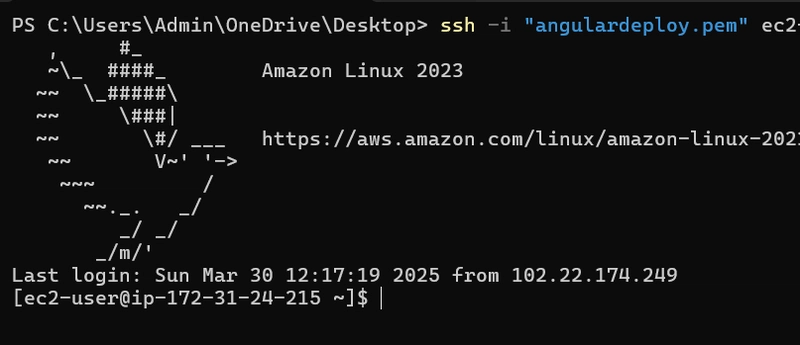

To access Copilot agents:

- Sign up for GitHub Copilot (requires a subscription)

- Install VS Code Insiders (the `code-insiders` build used in the install commands in this post) and the latest GitHub Copilot extension

- Enable Copilot agent features in settings

What is Model Context Protocol (MCP)?

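The Model Context Protocol (MCP) is an open protocol, introduced by Anthropic in late 2024, that standardizes how AI applications connect to external tools and data sources. An MCP server exposes capabilities such as tools, resources, and prompts; a client (for example Copilot agent mode in VS Code, or n8n through a community node) connects to it and lets the model call those tools. Because any client can talk to any server, a tool written once, such as Playwright browser automation, can be reused across platforms.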
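MCP messages are JSON-RPC 2.0 requests and responses. The sketch below only builds the two requests a client sends most often, `tools/list` and `tools/call` (with `name` and `arguments` params), and serializes them one per line as the stdio transport expects. A real client also performs an `initialize` handshake first and reads responses from the server's stdout; that part is omitted. The tool name `read_file` and its `path` argument are illustrative assumptions; check a server's actual `tools/list` output.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request; each request gets a fresh integer id."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


def to_stdio_line(message: dict) -> str:
    """stdio transport: one JSON message per line; json.dumps escapes newlines in strings."""
    return json.dumps(message, separators=(",", ":")) + "\n"


list_tools = jsonrpc_request("tools/list")
# Illustrative tool name and argument, not taken from a specific server.
call_tool = jsonrpc_request("tools/call", {"name": "read_file",
                                           "arguments": {"path": "~/Downloads/notes.txt"}})

if __name__ == "__main__":
    print(to_stdio_line(list_tools), end="")
    print(to_stdio_line(call_tool), end="")
```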

n8n with MCP Integration

The n8n-nodes-mcp community node lets you connect to MCP servers from within your workflows. This integration supports:

- Listing available tools on connected MCP servers

- Executing tools through AI agents

- Sending prompts and retrieving structured responses

- Accessing resources from connected servers

With this integration, you can create AI-powered workflows that automate complex tasks like data analysis, web scraping, or file management.
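The "list tools, then execute" pattern can be guarded with a small pre-flight check: look the tool up in a `tools/list` result and confirm that every argument its JSON Schema `inputSchema` marks as `required` is present. This is a sketch, not part of the n8n node, and the sample result is made up for illustration rather than captured from a real server.

```python
def missing_arguments(tools_result: dict, tool_name: str, arguments: dict) -> list[str]:
    """Return the required argument names that `arguments` lacks for `tool_name`.
    Raises KeyError if the server does not offer the tool."""
    tools = {tool["name"]: tool for tool in tools_result.get("tools", [])}
    if tool_name not in tools:
        raise KeyError(f"server does not offer tool {tool_name!r}")
    schema = tools[tool_name].get("inputSchema", {})
    return [name for name in schema.get("required", []) if name not in arguments]


# Made-up tools/list result, shaped like the MCP response (tools with name/inputSchema).
sample = {
    "tools": [
        {
            "name": "browser_navigate",
            "description": "Navigate to a URL",
            "inputSchema": {
                "type": "object",
                "properties": {"url": {"type": "string"}},
                "required": ["url"],
            },
        }
    ]
}

if __name__ == "__main__":
    print(missing_arguments(sample, "browser_navigate", {}))  # ['url']
```

Failing fast on a missing argument gives a clearer error than whatever the tool call returns after the fact.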

Installing MCP Tools

Installing MCP tools is straightforward: most servers are published as npm packages, so a single `npx` command downloads and starts one. In VS Code you can register a server from the command line with `code-insiders --add-mcp`, as shown here; alternatives include editing a configuration file or installing the server globally with npm. Some servers need basic configuration, such as the directories the filesystem server may access or browser preferences for Playwright, but overall it is easy to give your AI agents new capabilities.

```sh
code-insiders --add-mcp '{"name":"playwright","command":"npx","args":["@playwright/mcp@latest"]}'
code-insiders --add-mcp '{"name":"filesystem","command":"npx","args":["-y","@modelcontextprotocol/server-filesystem","~/Downloads"]}'
```
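Hand-writing JSON inside shell quotes is easy to get wrong. A sketch that builds the same two `--add-mcp` arguments with `json.dumps` and quotes them with `shlex.quote`; it only prints the command lines and does not invoke VS Code.

```python
import json
import shlex

# Same server configs as the two commands above.
servers = [
    {"name": "playwright", "command": "npx", "args": ["@playwright/mcp@latest"]},
    {"name": "filesystem", "command": "npx",
     "args": ["-y", "@modelcontextprotocol/server-filesystem", "~/Downloads"]},
]


def add_mcp_command(server: dict) -> str:
    """Build a `code-insiders --add-mcp '<json>'` command line for one server config."""
    payload = json.dumps(server, separators=(",", ":"))  # compact JSON, key order preserved
    return f"code-insiders --add-mcp {shlex.quote(payload)}"


if __name__ == "__main__":
    for server in servers:
        print(add_mcp_command(server))
```

Running it prints the two commands above verbatim, so adding another server is a matter of appending a dictionary rather than fixing quotes by hand.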

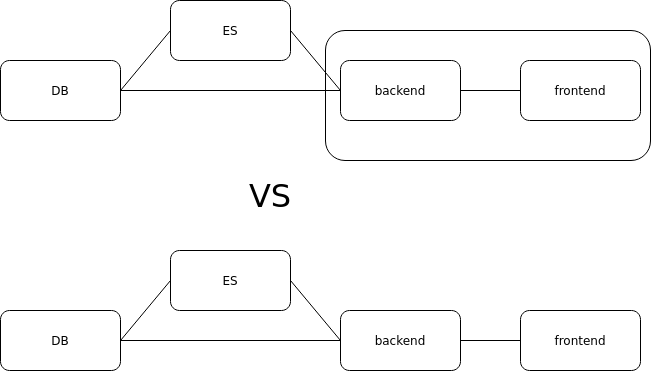

Comparison: n8n vs GitHub Copilot Agents

| Feature | n8n with MCP | GitHub Copilot Agents |
|---|---|---|
| Ease of use | More complex setup, steeper learning curve | Easier to work with, integrated into VS Code |
| Workflow complexity | Supports complex, multi-step workflows | Better for one-off tasks and development assistance |
| Persistence | Workflows can be saved and run repeatedly | Sessions are temporary, better for interactive use |
| MCP Playwright performance | Less stable, context issues between actions | Better performance, maintains browser context between actions |
| Debugging | Error messages can be cryptic and difficult to debug | More transparent, easier to see what's happening |
| Prompt engineering | Requires more explicit prompting | Handles vague instructions better |
| Automation frequency | Better for repeated automations | Better for ad-hoc automations |
| Tool behavior | More predictable tool usage patterns | Behavior can vary between models (e.g., Claude 3.5 vs 3.7) |
| Learning curve | Requires learning workflow concepts | Feels more like conversation with occasional "programming in a prompt" |

The choice between these platforms depends on your specific needs:

- Choose n8n for recurring automations, complex workflows, and when you need a visual representation of your process

- Choose GitHub Copilot agents for development assistance, ad-hoc automations, and when you prefer a conversational interface

My Insights and Findings

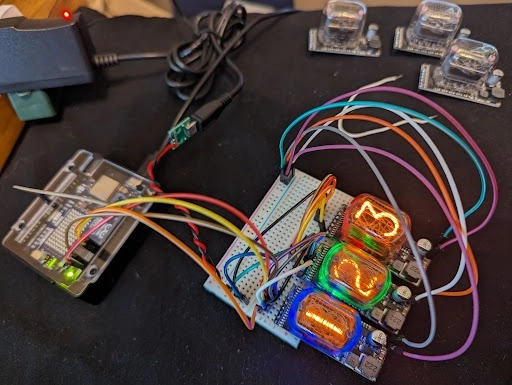

MCP makes it quick to add tools. I tried out MCP Playwright and MCP FileSystem. Playwright worked better in GitHub Copilot than in n8n: in n8n it ran into errors because the browser context from the previous action was no longer there. I suspect n8n restarts the Playwright MCP server for every action, while Copilot keeps it running so it can keep connecting to the existing tab and browser context.
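A toy model of the suspected difference, which remains a hypothesis rather than confirmed n8n or Copilot internals: if each action gets a fresh browser session, the page opened by the previous action is gone; if one session is reused, it is still there. `BrowserSession` is a hypothetical stand-in, not the Playwright or MCP API.

```python
from typing import Optional


class BrowserSession:
    """Hypothetical stand-in for a browser driven by an MCP server process."""

    def __init__(self) -> None:
        self.current_url: Optional[str] = None

    def navigate(self, url: str) -> None:
        self.current_url = url

    def click(self, link: str) -> str:
        if self.current_url is None:
            raise RuntimeError("no page loaded: browser context was lost")
        self.current_url = f"{self.current_url}/{link}"
        return self.current_url


def run_fresh_each_time(steps):
    """Suspected n8n behavior: a new session (new server process) for every action."""
    results = []
    for action, arg in steps:
        session = BrowserSession()
        try:
            results.append(getattr(session, action)(arg))
        except RuntimeError as err:
            results.append(f"error: {err}")
    return results


def run_persistent(steps):
    """Suspected Copilot behavior: one session reused across actions."""
    session = BrowserSession()
    return [getattr(session, action)(arg) for action, arg in steps]


if __name__ == "__main__":
    steps = [("navigate", "https://example.com"), ("click", "about")]
    print(run_fresh_each_time(steps))
    print(run_persistent(steps))
```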

In n8n you can build more complex workflows and define more steps, which is beneficial for certain use cases. It does work, but the error messages are difficult to debug, and many of the errors come from data arriving in a different shape than expected.

Overall, GitHub Copilot agents were easier to work with, but n8n is more powerful when you want to run the same automation many times.

File Organization Experiment

I experimented with using AI agents to organize files in a filesystem, which revealed interesting differences between AI models. Claude 3.7 took a more cautious approach and wrote a shell script to move the files, while Claude 3.5 was more proactive and executed the script directly in the terminal. The experiment also showed that VS Code gives agents terminal access by default, so they can interact with the filesystem directly. Over multiple iterations, the directory organization kept improving.

Testing different MCP tools revealed consistent patterns in agent behavior. Initially, the agents tried to access directories without checking permissions. After running into errors, they started by asking which directories they were allowed to access. This shows how agents adapt to system responses within a session, but the process became more efficient when the agents were explicitly instructed to check the available directories before attempting any operation.
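The same check can be done in code instead of waiting for the agent to learn it. The filesystem MCP server documents a `list_allowed_directories` tool for this; assuming you already have its result as a list of paths, here is a sketch of the containment test (Python 3.9+ for `Path.is_relative_to`).

```python
from pathlib import Path


def is_allowed(path: str, allowed_dirs: list[str]) -> bool:
    """True if `path` resolves to a location inside one of the allowed directories."""
    target = Path(path).expanduser().resolve()
    # Compare resolved path components, not string prefixes.
    return any(target.is_relative_to(Path(d).expanduser().resolve()) for d in allowed_dirs)


if __name__ == "__main__":
    allowed = ["~/Downloads"]
    for candidate in ["~/Downloads/report.pdf", "~/Downloads/../.ssh/id_rsa"]:
        print(candidate, is_allowed(candidate, allowed))
```

Resolving both sides first means `..` segments and symlinks cannot escape an allowed directory, and comparing components keeps `~/Downloads-old` from matching `~/Downloads`.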

LinkedIn Lead Generation Experiment

I conducted an experiment using AI to find decision-makers on LinkedIn:

- Set up a SQLite database to store contact information and post summaries

- The AI showed unexpected intelligence by focusing on director-level positions automatically

- Without being explicitly told, it knew to filter out consultants and engineers

- Used MCP Playwright for browser automation and data collection

Key observations:

- Different AI models were not equally reliable at storing data

- ChatGPT 4.0 was better at remembering to store data while browsing

- Storing each record immediately worked better than saving everything at the end
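A sketch of "store immediately" with Python's built-in `sqlite3`: each contact is inserted and committed as soon as it is collected, so a timeout, or a model that forgets to save late in the run, loses at most the current record. The table layout and the sample values are assumptions; the post does not give the actual schema.

```python
import sqlite3


def open_db(path: str = "leads.db") -> sqlite3.Connection:
    """Open (or create) the lead database; the schema is a hypothetical example."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS contacts (
               profile_url  TEXT PRIMARY KEY,
               name         TEXT NOT NULL,
               title        TEXT,
               post_summary TEXT
           )"""
    )
    return conn


def save_contact(conn: sqlite3.Connection, profile_url: str, name: str,
                 title: str, post_summary: str) -> None:
    """Write one contact as soon as it is collected and commit immediately."""
    with conn:  # the connection context manager commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO contacts (profile_url, name, title, post_summary) "
            "VALUES (?, ?, ?, ?)",
            (profile_url, name, title, post_summary),
        )


if __name__ == "__main__":
    conn = open_db(":memory:")
    # Call this right after each profile is read, not once at the end of the run.
    save_contact(conn, "https://example.com/in/ada", "Ada", "Director of Engineering",
                 "Posted about MCP tooling")
    print(conn.execute("SELECT COUNT(*) FROM contacts").fetchone()[0])
```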

Writing this Blogpost Using an AI Agent

AI agents are helpful when writing. You can focus on your ideas instead of worrying about perfect sentences, grammar, and spelling. The AI helps make your writing flow better.

What matters most is including your own insights. If you let the AI write everything from scratch, you end up with generic content that misses the important points. To protect your own text, commit your blog post to version control regularly; that way you can always go back if text gets lost or changed too much.

One useful approach is using tools like Perplexity to find documentation or copying READMEs from the internet yourself. Add these to your workspace, and then you can ask the AI agent to write about MCP using this documentation as context.
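The same idea as a sketch: gather the documentation files you saved in the workspace and put them in front of the request, so the model writes from the docs rather than from memory. The folder layout (`*.md` files in one directory), the character limit, and the prompt wording are all assumptions.

```python
from pathlib import Path


def build_prompt(question: str, docs_dir: Path, max_chars: int = 20_000) -> str:
    """Concatenate saved docs (sorted by file name) into a context block, truncated to max_chars."""
    parts = []
    for doc in sorted(docs_dir.glob("*.md")):
        parts.append(f"--- {doc.name} ---\n{doc.read_text(encoding='utf-8')}")
    context = "\n\n".join(parts)[:max_chars]  # crude cap to stay within the context window
    return f"Use only the documentation below.\n\n{context}\n\nTask: {question}"


if __name__ == "__main__":
    print(build_prompt("Explain how to install an MCP server", Path("docs")))
```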

Some examples of challenges I ran into while writing this post:

- The AI often rewrote whole sections when I just wanted small improvements

- When writing about MCP setup, it gave generic, copy-pasted information instead of useful details

- While AI agents seem like they'll save time, you actually spend extra time learning how to work with them effectively

The AI particularly excelled at:

- Improving sentence structure and readability

- Adding transition sentences between paragraphs

- Catching grammar and spelling issues

- Suggesting better ways to organize information

- Maintaining consistent tone throughout the post

Conclusion

AI agents take the copy-paste steps out of your AI workflow. However, they are not a golden hammer yet. Having the tools to take an action does not make an agent the best way to take it. Right now, the models often don't behave as expected and execute slowly. If you have to do a lot of prompt engineering to steer the agent, you are better off with tighter control: define the flow yourself and ask the model smaller questions.

I'm optimistic about the future because of the saying you're hearing more and more: what you see now is the worst that it's going to be.
