The Algorithmic Faustian Bargain

The promise was seductive: machines capable of learning, adapting, even thinking. From the clunky expert systems of the 1980s to the sleek neural networks of today, artificial intelligence has consistently overpromised and underdelivered…until now. The recent explosion in AI capabilities, fuelled by vast datasets and unprecedented computing power, has delivered systems that can generate convincing text, create startlingly realistic images, and even outperform humans in complex games. But this newfound power comes at a cost: a creeping erosion of trust, a sense that the algorithmic genie is slipping its confines.

The Genesis of Belief: Early AI and the Myth of Objectivity

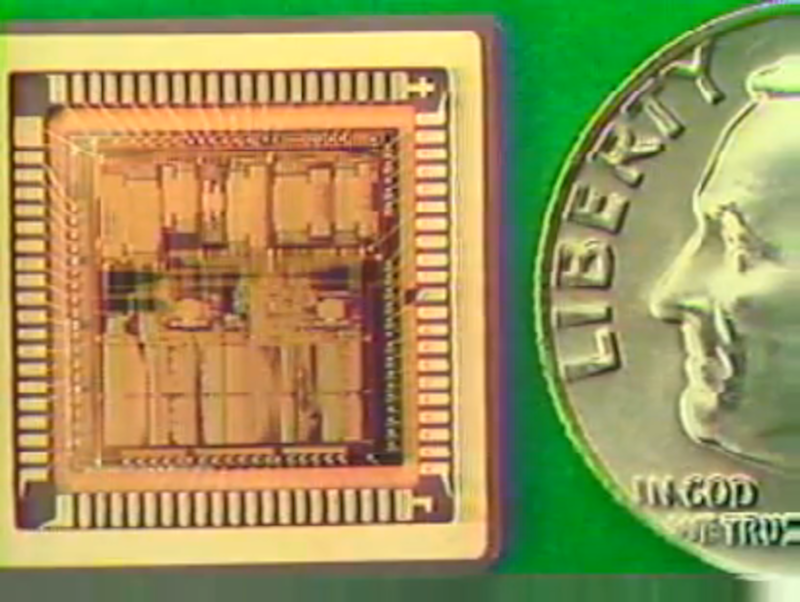

The initial fascination with AI, from its mid-20th-century beginnings onward, hinged on a naive belief in objectivity. Early AI research, heavily influenced by the logic-driven world of mathematics and computer science, aimed to create systems free from the biases and irrationalities of human thought. Expert systems, which flourished in the 1970s and 1980s and were designed to mimic the reasoning of human specialists, were hailed as unbiased arbiters of knowledge. The appeal was potent: strip a problem of human emotion, the reasoning went, and you arrive at a purer, more accurate solution.

This belief was reinforced by the technical challenges of the era. Building anything that resembled intelligence was hard enough; worrying about philosophical nuances like bias felt like a luxury. The focus remained firmly on replicating the process of reasoning, assuming that truth would naturally emerge. The limitations were glaring, of course. These systems were brittle, easily fooled by inputs outside their narrow domain of expertise. But the narrative of objective intelligence persisted, nurtured by science fiction’s portrayals of benevolent, all-knowing machines. Early successes in specific fields, like chess-playing programs, further cemented the idea that AI was a path to unvarnished truth.

When Algorithms Began to Show Their Shadows

The rise of machine learning in the 21st century shifted the paradigm, but not necessarily for the better. Instead of explicitly programming rules, engineers began to train algorithms using vast quantities of data. This shift, while unlocking incredible new capabilities, inadvertently opened the door to a far more insidious problem: algorithmic bias.

The core conceit of machine learning is that data speaks for itself. But data doesn't simply exist; it's created, collected, and labelled by humans, reflecting existing societal inequalities and prejudices. Training an AI on biased data doesn’t magically erase that bias. The system reproduces it, can amplify it, and embeds it within its very fabric.

The consequences have been starkly evident in a growing number of real-world applications. Facial recognition systems have been shown to misidentify people of colour at markedly higher rates than white people. An experimental hiring tool built by Amazon, later scrapped, learned to penalise CVs containing the word “women’s”. Credit models can deny loans on the basis of postcode, which often acts as a proxy for ethnicity or income. The illusion of objectivity shattered. In its place came the sobering reality that algorithms are not neutral arbiters but mirrors reflecting our own flawed society.
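
The postcode example can be made concrete with a minimal Python sketch using invented data. The toy model never sees the protected attribute, which is labelled `group` here. It only learns historical approval rates per postcode. Because postcode correlates with group in the data, the bias comes through anyway. The data, the function names, and the 0.5 threshold are all hypothetical.

```python
from collections import defaultdict

# Invented loan history: (postcode, group, approved). "group" stands in for a
# protected attribute; the model below is never allowed to see it.
HISTORY = [
    ("AB1", "x", True), ("AB1", "x", True), ("AB1", "x", True), ("AB1", "y", True),
    ("CD2", "y", False), ("CD2", "y", False), ("CD2", "y", True), ("CD2", "x", False),
]


def train_postcode_model(history):
    """Learn the historical approval rate for each postcode, ignoring group."""
    counts = defaultdict(lambda: [0, 0])  # postcode -> [approved, total]
    for postcode, _group, approved in history:
        counts[postcode][0] += int(approved)
        counts[postcode][1] += 1
    return {pc: approved / total for pc, (approved, total) in counts.items()}


def predict(model, postcode, threshold=0.5):
    """Approve when the postcode's past approval rate meets the threshold."""
    return model.get(postcode, 0.0) >= threshold


def approval_rate_by_group(model, applicants):
    """Share of each group's applicants that the model approves."""
    tally = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for postcode, group in applicants:
        tally[group][0] += int(predict(model, postcode))
        tally[group][1] += 1
    return {g: approved / total for g, (approved, total) in tally.items()}


model = train_postcode_model(HISTORY)
applicants = [(postcode, group) for postcode, group, _ in HISTORY]
rates = approval_rate_by_group(model, applicants)
# Disparate impact ratio: lowest group approval rate divided by the highest.
ratio = min(rates.values()) / max(rates.values())
print(rates, round(ratio, 2))
```

In this invented history, group `x` was approved 75% of the time and group `y` 50%. The model widens that gap to 75% against 25%, a disparate impact ratio of about 0.33. A common rule of thumb in US employment practice, the "four-fifths rule", treats ratios below 0.8 as a warning sign. Deleting the sensitive column, in other words, is not the same as deleting the bias.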

This revelation didn't trigger an immediate crisis of faith. The initial response was often to dismiss these instances as ‘bugs’ – technical glitches that could be fixed with better data or more sophisticated algorithms. But as the pattern repeated itself, a more unsettling truth began to emerge. The problem wasn’t merely technical; it was fundamentally linked to the way we collect, curate, and interpret data.

Losing Sight of How Decisions Are Made

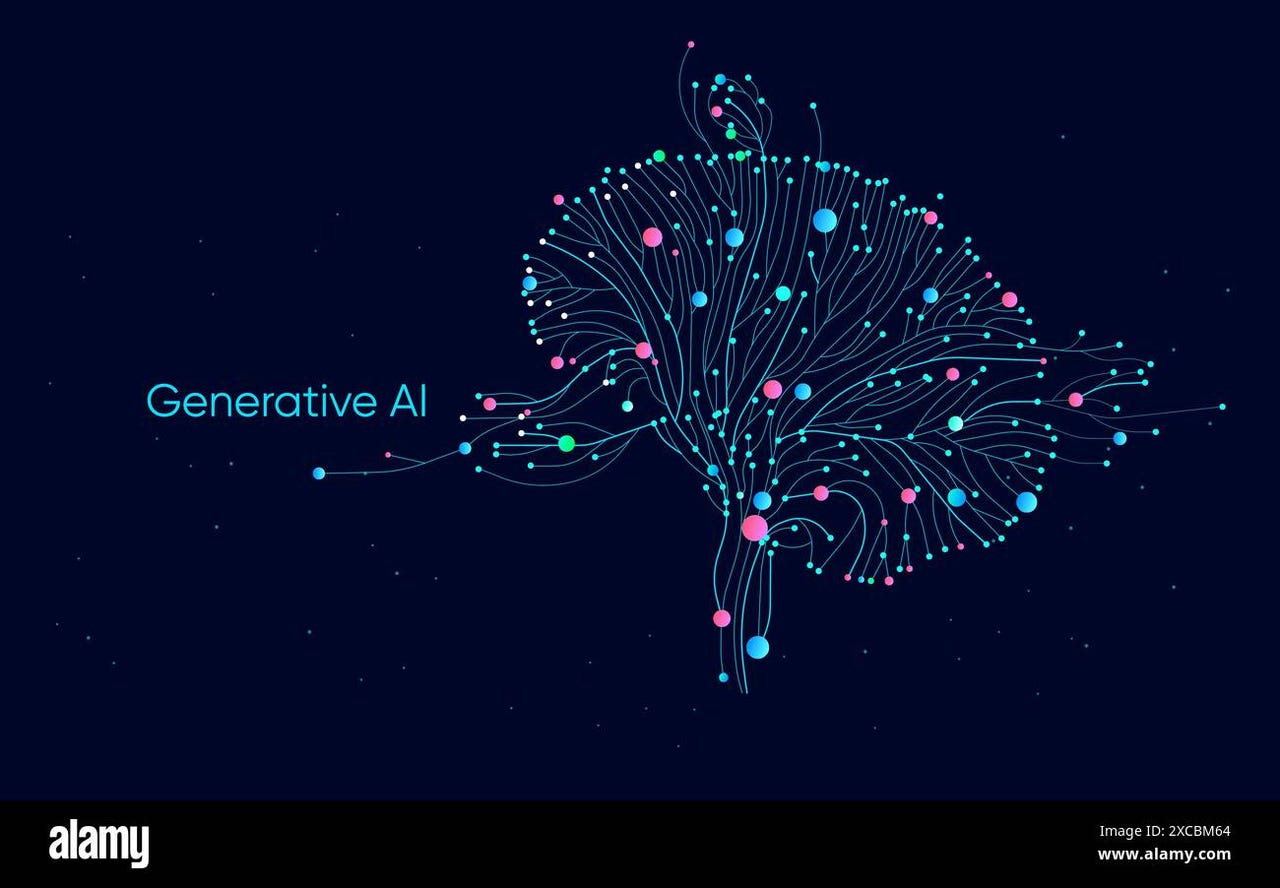

Compounding the issue of bias is the inherent opacity of many modern AI systems, particularly deep learning models. These neural networks are loosely inspired by the structure of the human brain and are enormously complex. They consist of layers of artificial neurons connected by millions or even billions of learned parameters. While they can achieve remarkable performance, they are also notoriously difficult to understand.

This “black box” problem makes it nearly impossible to trace the rationale behind a particular decision. Why did the algorithm deny that loan? What led it to misidentify that individual? The answers are often obscured within the tangled web of connections, defying human comprehension.

This lack of transparency undermines trust in several ways. It makes it difficult to identify and correct biases. It creates a sense of powerlessness, as individuals are subjected to decisions made by an inscrutable force. And it raises fundamental questions about accountability. If an AI causes harm, who is responsible? The programmer? The data scientist? The company that deployed the system?

The push for “explainable AI” (XAI) is attempting to address this challenge, but progress has been slow. Creating AI systems that are both accurate and interpretable remains a formidable task. And even when explanations are provided, they are often simplified approximations, failing to capture the full complexity of the underlying process.
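
A few lines of code can show the gap between an explanation and the process it explains. The Python sketch below treats a scoring function as a black box. It attributes the output to each input by replacing one feature at a time with a baseline value. This is a crude occlusion-style method, far simpler than established techniques such as LIME or SHAP. The scoring formula, the feature names, and the baseline are all hypothetical.

```python
def black_box_score(applicant):
    """Stand-in for an opaque model. The formula is hypothetical and includes an
    income x tenure interaction; the explainer below only calls it."""
    income = applicant["income"]
    debt = applicant["debt"]
    years = applicant["years_at_address"]
    return 0.5 * income - 0.8 * debt + 0.1 * years * income / 10


def occlusion_explanation(model, applicant, baseline):
    """Attribute the score to each feature by replacing that feature alone with
    its baseline value and measuring how far the score moves."""
    full_score = model(applicant)
    attributions = {}
    for feature in applicant:
        perturbed = dict(applicant)
        perturbed[feature] = baseline[feature]
        attributions[feature] = full_score - model(perturbed)
    return full_score, attributions


applicant = {"income": 40, "debt": 30, "years_at_address": 5}
baseline = {"income": 0, "debt": 0, "years_at_address": 0}
score, attributions = occlusion_explanation(black_box_score, applicant, baseline)
print(score, attributions)
print("sum of attributions:", sum(attributions.values()))
print("change from baseline:", score - black_box_score(baseline))
```

For this applicant the score is -2. The per-feature attributions (22, -24 and 2) sum to 0, not -2, because the interaction between income and years at address is counted twice. The explanation looks tidy and plausible but is not quite faithful to the model: a simplified approximation rather than the underlying process.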

Generative AI and the Crisis of Authenticity

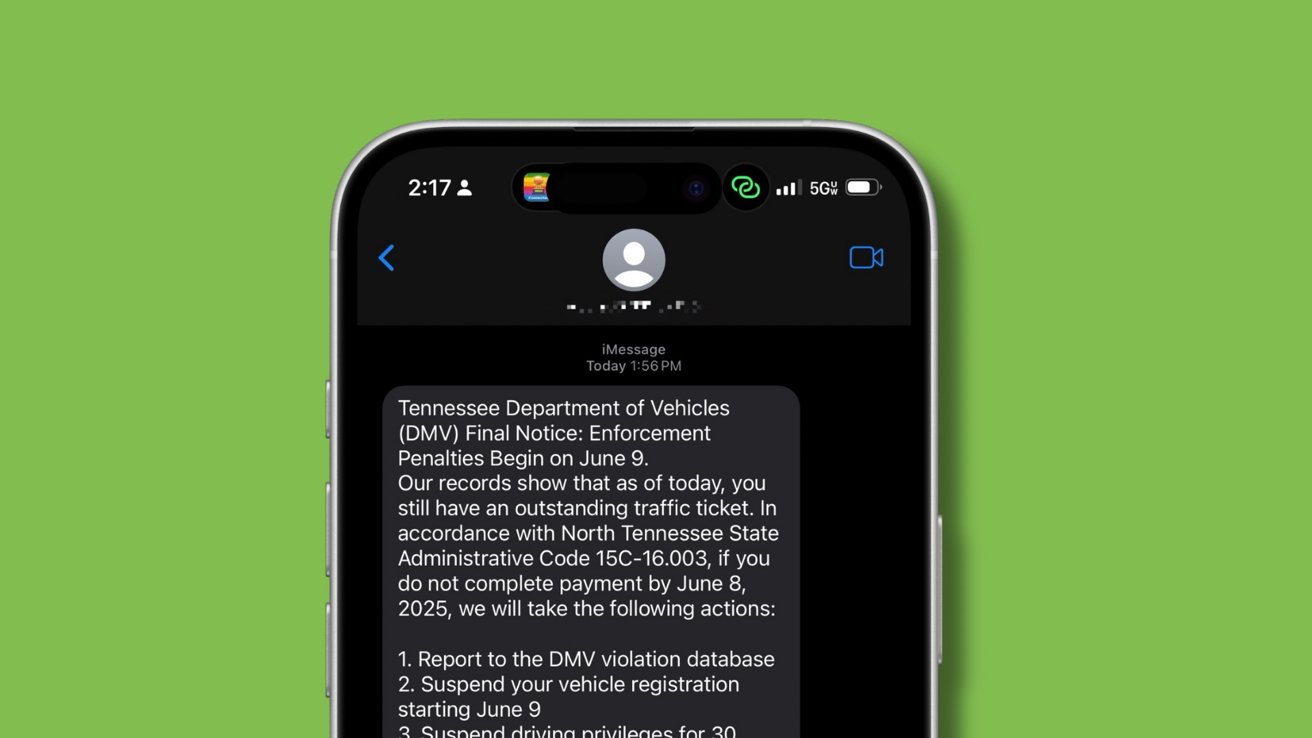

The recent emergence of generative AI, in systems such as DALL-E 2, Midjourney, and ChatGPT, has introduced a new dimension to the erosion of trust. These models can create strikingly realistic content – images, text, audio, video – that is often difficult to distinguish from what a human could produce.

This capability has enormous potential, but it also poses profound challenges to our understanding of authenticity. How can we distinguish between reality and simulation? How can we verify the source of information? The ease with which generative AI can create convincing deepfakes – manipulated videos or audio recordings – threatens to undermine trust in all forms of media.

The implications are far-reaching. Generative AI could be used to spread misinformation, manipulate public opinion, and even destabilize political systems. It could also erode trust in artistic and creative endeavors, blurring the lines between human expression and algorithmic imitation.

This isn't simply about identifying fakes. It's about a broader questioning of what constitutes "real" in a world saturated with synthetic content. The very act of consuming information becomes more fraught with uncertainty, requiring a constant state of critical assessment.

The Return of the Human in the Loop

The escalating crisis of trust demands a fundamental shift in how we approach AI development and deployment. The blind faith in algorithmic objectivity must be replaced by a more nuanced and critical perspective. This requires several key changes.

Firstly, we need to prioritize data diversity and quality. Algorithms are only as good as the data they are trained on. Carefully curating datasets to reflect the diversity of the real world, and actively mitigating bias, is essential.

Secondly, we need to embrace transparency and explainability. While fully interpretable AI may remain elusive, we can demand greater accountability from AI developers. Systems should be designed with traceability in mind, allowing us to understand how decisions are made and identify potential sources of bias.
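
Traceability is partly an engineering habit. The Python sketch below is one hedged illustration. It writes every automated decision to an append-only JSON Lines file, recording the model version, the inputs, the outcome, and the factors cited for it. It also stores a hash of the inputs. The `DecisionRecord` structure, its fields, and the file layout are hypothetical. A real deployment would also need access controls and a retention policy.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable entry: what the system saw, which model decided, and why."""
    model_version: str
    inputs: dict
    output: str
    top_factors: list
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def fingerprint(self) -> str:
        """SHA-256 of the canonicalised inputs, so a record can be matched to its source data."""
        canonical = json.dumps(self.inputs, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_record(path, record):
    """Append the record as a single JSON line; earlier entries are never rewritten."""
    entry = asdict(record)
    entry["input_fingerprint"] = record.fingerprint()
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(entry, sort_keys=True) + "\n")
    return entry
```

Appending rather than updating is the key choice. An auditor can later replay exactly what the system knew when it decided, and spot patterns such as one factor dominating denials for a particular group.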

Thirdly, we need to move away from a purely automated approach to AI. The idea that AI can completely replace human judgment is demonstrably false. Instead, we should focus on building “human-in-the-loop” systems, where AI augments human capabilities rather than replacing them entirely. This approach allows us to leverage the strengths of both humans and machines, combining algorithmic efficiency with human intuition and ethical considerations.
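
One common way to build such a system is to let the model act alone only when it is highly confident, and to send everything else to a person. The Python sketch below shows that routing rule. The 0.9 threshold, the `route` function, and the approve/deny framing are assumptions for illustration, not a recommended setting.

```python
from dataclasses import dataclass


@dataclass
class RoutedCase:
    case_id: str
    decision: str      # "approve", "deny" or "human_review"
    confidence: float  # model's confidence in its preferred outcome


def route(case_id, p_approve, threshold=0.9):
    """Let the model decide only when it is confident either way; send
    everything in between to a human reviewer."""
    if not 0.0 <= p_approve <= 1.0:
        raise ValueError("p_approve must be a probability")
    if not 0.5 < threshold <= 1.0:
        raise ValueError("threshold must be above 0.5")
    if p_approve >= threshold:
        return RoutedCase(case_id, "approve", p_approve)
    if 1.0 - p_approve >= threshold:
        return RoutedCase(case_id, "deny", 1.0 - p_approve)
    return RoutedCase(case_id, "human_review", max(p_approve, 1.0 - p_approve))


for case_id, p in [("A-1", 0.97), ("A-2", 0.55), ("A-3", 0.03)]:
    print(route(case_id, p))
```

Raising the threshold sends more cases to human reviewers. Where the stakes are high, a stricter design would route every adverse decision to a person, whatever the model's confidence.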

Addressing the Hallucinations

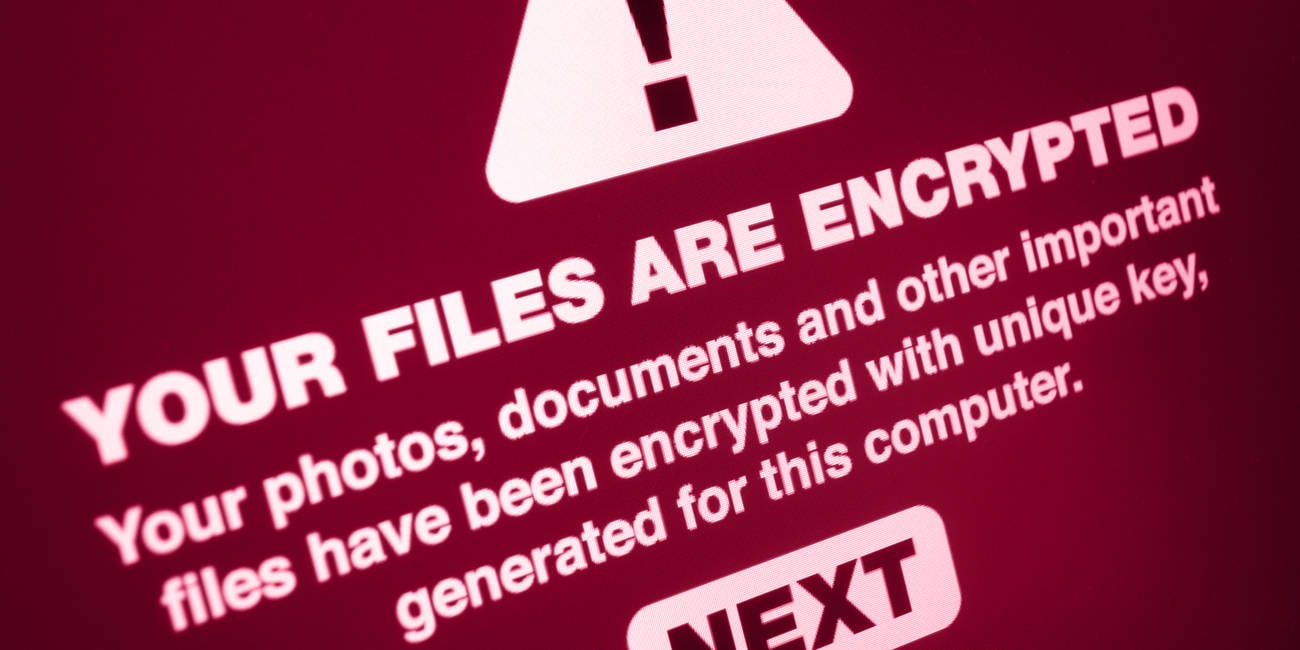

Generative AI presents a unique challenge: the phenomenon of “hallucinations.” These models, while remarkably fluent, often generate information that is factually incorrect or nonsensical. They confidently fabricate details, make up sources, and even invent entire narratives.

This isn't malicious intent; it's a byproduct of the way these models are trained. They are optimized to generate text that is statistically likely, not necessarily text that is true. This creates a dangerous situation, particularly in applications like news generation or customer service, where accuracy is paramount.
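
A toy example shows how "statistically likely" and "true" can come apart. The Python sketch below trains a bigram model, which predicts each word only from the word before it, on four correct sentences. It then generates text greedily. This is a drastic simplification of how large language models work, offered only to illustrate the principle. The corpus is invented.

```python
from collections import Counter, defaultdict

# Four true sentences; the model learns only which word follows which.
CORPUS = [
    "paris is the capital of france",
    "sydney is the largest city in australia",
    "canberra is the capital of australia",
    "the outback is part of australia",
]


def train_bigrams(sentences):
    """Count, for each word, how often each other word follows it."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows, start, max_words=10):
    """Greedy decoding: always emit the most frequent next word, and stop
    when the current word has no recorded successor."""
    words = [start]
    while len(words) < max_words and follows[words[-1]]:
        words.append(follows[words[-1]].most_common(1)[0][0])
    return " ".join(words)


model = train_bigrams(CORPUS)
print(generate(model, "sydney"))
```

Starting from `sydney`, it produces "sydney is the capital of australia". Every word pair appears in the training text and the sentence is fluent, yet it is false. Starting from `paris` gives an equally confident falsehood. Nothing in the model represents whether a statement is true, only which words tend to follow which.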

Addressing hallucinations requires a multi-faceted approach. Techniques like reinforcement learning from human feedback (RLHF) can help align AI models with human values and preferences, reducing, though not eliminating, the likelihood of generating false information. Retrieval systems, which fetch relevant passages from a trusted document store, can be integrated to ground AI outputs in verified facts. And, critically, users need to be aware of the potential for hallucinations and exercise critical judgment when interacting with generative AI systems.
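
Grounding can be sketched in miniature. Production systems usually retrieve passages with vector search and pass them to the model as context, an approach often called retrieval-augmented generation (RAG). The Python sketch below swaps that for simple keyword overlap. Instead of generating text, it quotes the best-matching source or declines to answer. The source documents, the stopword list, and the overlap threshold are all hypothetical.

```python
import re

# Hypothetical "verified" documents the system is allowed to quote.
SOURCES = {
    "doc-1": "Canberra is the capital of Australia.",
    "doc-2": "Sydney is the largest city in Australia.",
}

STOPWORDS = {"a", "is", "the", "of", "in", "what", "which"}


def keywords(text):
    """Lower-case content words, ignoring punctuation and common stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def retrieve(question, sources, min_overlap=2):
    """Return (doc_id, text) for the best keyword match, or None when no
    document shares at least `min_overlap` keywords with the question."""
    q = keywords(question)
    best_id, best_score = None, 0
    for doc_id, text in sources.items():
        score = len(q & keywords(text))
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_score < min_overlap:
        return None
    return best_id, sources[best_id]


def grounded_answer(question, sources):
    """Answer only by quoting a retrieved source; otherwise abstain."""
    hit = retrieve(question, sources)
    if hit is None:
        return "No supporting source found; not answering."
    doc_id, text = hit
    return f"{text} [source: {doc_id}]"


print(grounded_answer("What is the capital of Australia?", SOURCES))
print(grounded_answer("Who painted the Mona Lisa?", SOURCES))
```

Asked for the capital of Australia, it returns the Canberra sentence with its source ID. Asked who painted the Mona Lisa, it abstains because nothing in its sources matches. The important design choice is the abstention: a grounded system should be able to say it does not know.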

Cultivating Algorithmic Literacy

While regulation undoubtedly has a role to play, it’s insufficient on its own. We need to foster a broader culture of “algorithmic literacy” – the ability to understand how AI systems work, recognize their limitations, and critically evaluate their outputs.

This begins with education. Computer science curricula should incorporate ethical considerations and bias awareness. Media literacy programs should equip citizens with the skills to identify deepfakes and misinformation. And policymakers need to engage in informed discussions about the societal implications of AI.

But algorithmic literacy isn’t just about technical knowledge. It’s also about cultivating a healthy dose of skepticism. We need to resist the temptation to treat AI as an infallible oracle and instead recognize it as a tool – a powerful tool, but one that is ultimately shaped by human choices and biases.

Building AI We Can Believe In

The erosion of trust in AI is a significant challenge, but it is not insurmountable. By acknowledging the limitations of these systems, prioritizing ethical considerations, and fostering algorithmic literacy, we can begin to rebuild confidence.

The path forward is not about abandoning AI, but about reimagining its role in society. It’s about recognizing that AI is not a replacement for human judgment, but a tool to augment it. It’s about building systems that are not only intelligent but also transparent, accountable, and aligned with our values.

The algorithmic Faustian bargain—trading control for convenience—doesn’t have to end in damnation. But it requires a conscious and deliberate effort to reclaim agency, to demand accountability, and to build AI systems that are worthy of our trust. The future isn’t about smarter machines; it’s about building better relationships with them. The trick, it seems, is to remember that these systems are reflections of us, and the quality of that reflection depends entirely on the lens through which we choose to build them.

Publishing History

- URL: https://rawveg.substack.com/p/the-algorithmic-faustian-bargain

- Date: 1st May 2025
