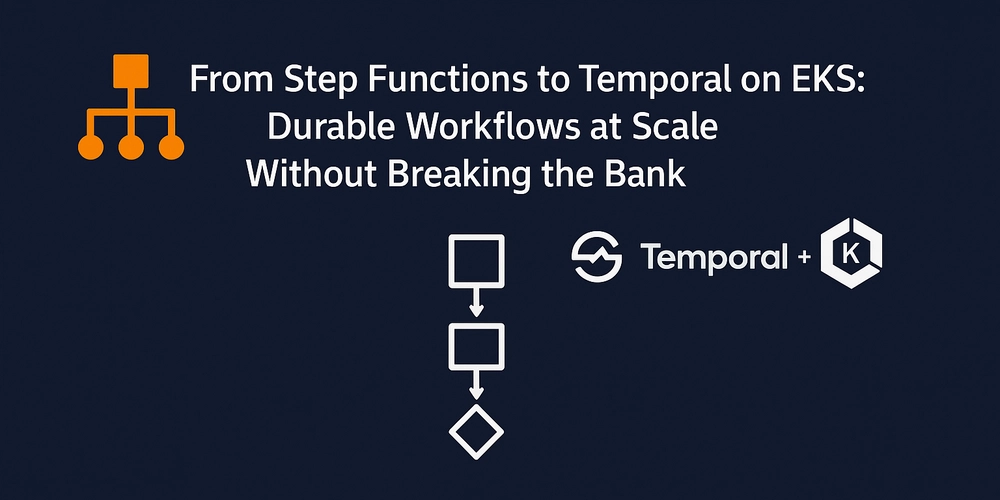

Kubernetes: The Catalyst for Cost-Efficiency, Scalability, and Infrastructure Freedom in Modern IT

This article explores how Kubernetes revolutionizes IT operations by enabling organizations to cut costs, eliminate operational bottlenecks, and scale seamlessly across hybrid, multi-cloud, and edge environments—without dependence on legacy systems or single-vendor solutions. Discover why Kubernetes is indispensable for businesses navigating today’s dynamic digital landscape.

The Imperative for Modern Infrastructure Agility

In today’s hypercompetitive and rapidly evolving technological landscape, businesses face relentless pressure to innovate while minimizing costs and maximizing efficiency. Traditional infrastructure models—often rigid, siloed, and tied to specific vendors or on-premises systems—struggle to keep pace with fluctuating demands, unpredictable workloads, and the growing complexity of multi-cloud or edge computing environments. Kubernetes, the open-source container orchestration platform, has emerged as a transformative force, empowering organizations to decouple from legacy constraints and build agile, cost-effective, and scalable operations from the ground up.

Cost Optimization Through Intelligent Resource Management

Kubernetes drives cost savings by improving resource utilization across the infrastructure stack. Unlike statically sized virtual machines or bare-metal servers, Kubernetes schedules workloads onto shared nodes according to the CPU and memory each container requests, so capacity tracks what applications actually need. The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of application instances in response to observed load, such as CPU utilization during a traffic spike. Similarly, the Cluster Autoscaler provisions or decommissions nodes in cloud environments as demand fluctuates, so businesses pay for capacity only while it is needed and idle resources are kept to a minimum.
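To make the autoscaling behavior concrete, here is a minimal sketch of the ratio rule that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: the desired replica count is the current count scaled by the ratio of the observed metric to the target metric, rounded up. The function name, the default bounds, and the sample numbers are assumptions made for illustration. The real controller also handles missing metrics, pod readiness, and stabilization windows, none of which is modeled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the documented HPA ratio rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas]. The bounds and the function
    name are illustrative assumptions, not part of any Kubernetes API.
    """
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged,
    # which avoids flapping on small metric fluctuations.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

With these sample values the ratio is 1.5, so the sketch suggests scaling from 4 to 6 replicas. When load falls, the same rule shrinks the deployment, which is what lets the Cluster Autoscaler later remove nodes that are no longer needed.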

Furthermore, Kubernetes enables “bin packing,” a technique that maximizes node efficiency by scheduling containers to fill available capacity. This reduces the need for overprovisioning, a common source of wasted spending in traditional setups. Organizations can also cut costs with spot instances (discounted cloud VMs that the provider can reclaim at short notice) by deploying Kubernetes-aware tools such as Karpenter, which can handle spot instance interruptions gracefully. By enforcing resource quotas and limits at the namespace level, Kubernetes prevents runaway consumption, ensuring no single team or application monopolizes shared infrastructure.
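The idea behind bin packing can be sketched with the classic first-fit-decreasing heuristic. This is an illustration of the general technique, not the Kubernetes scheduler's algorithm: the real scheduler filters and scores candidate nodes one pod at a time. The function name, the equal-sized nodes, and the millicore values are assumptions chosen for the example.

```python
def first_fit_decreasing(pod_cpu_requests, node_capacity):
    """Pack CPU requests (in millicores) onto as few equal-sized nodes as possible.

    Illustrative heuristic only; it is not how kube-scheduler places pods.
    Returns one list of requests per node used.
    """
    nodes = []
    # Placing the largest requests first tends to leave fewer unusable gaps.
    for request in sorted(pod_cpu_requests, reverse=True):
        if request > node_capacity:
            raise ValueError(f"request {request}m exceeds node capacity")
        for placed in nodes:
            if sum(placed) + request <= node_capacity:
                placed.append(request)
                break
        else:
            # No existing node has room, so a new node is needed.
            nodes.append([request])
    return nodes


if __name__ == "__main__":
    layout = first_fit_decreasing([500, 1500, 250, 1000, 750], node_capacity=2000)
    print(len(layout), layout)
```

In this example five pods requesting 4000 millicores in total fit onto two 2000-millicore nodes. Giving each pod its own fixed-size machine would need five, which is the overprovisioning that tight packing avoids.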

Eliminating Operational Overhead with Automation

Manual infrastructure management is a significant drain on time, expertise, and financial resources. Kubernetes reduces this burden by automating critical workflows. For instance, its declarative configuration model allows developers to define desired system states (e.g., “run five replicas of this microservice”), while Kubernetes handles the complexities of deployment, scaling, and health monitoring. Self-healing capabilities automatically restart failed containers, reschedule pods away from unresponsive nodes, and pause a rolling update whose new pods fail their readiness checks, reducing downtime and the need for human intervention. Reverting a faulty release is then a single rollback command, or a step that deployment tooling can automate.
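The declarative model rests on a reconciliation loop: a controller repeatedly compares the desired state with what is actually running and issues whatever actions close the gap. The sketch below shows one pass of such a loop under simplified assumptions. The `reconcile` function, the `web-N` pod names, and the action tuples are hypothetical and do not correspond to real Kubernetes controller code.

```python
from itertools import count


def reconcile(desired, pods):
    """Return the actions that move the observed pods toward `desired` replicas.

    `pods` maps a pod name to True (healthy) or False (failed). The names
    and the (verb, pod) action format are assumptions for illustration.
    """
    actions = []
    healthy = [name for name, ok in pods.items() if ok]
    # Failed pods are replaced rather than repaired in place.
    for name, ok in pods.items():
        if not ok:
            actions.append(("delete", name))
    # Scale down: remove healthy pods beyond the desired count.
    for name in healthy[desired:]:
        actions.append(("delete", name))
    # Scale up: create pods under names that are not already taken.
    fresh = (f"web-{i}" for i in count() if f"web-{i}" not in pods)
    for _ in range(max(0, desired - len(healthy))):
        actions.append(("create", next(fresh)))
    return actions


if __name__ == "__main__":
    observed = {"web-0": True, "web-1": False, "web-2": True}
    for action in reconcile(5, observed):
        print(action)
```

For five desired replicas and the observed state shown, the pass deletes the failed `web-1` and creates three new pods. Running the same function against a state that already matches returns no actions, which is why the operator only ever has to state the goal.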

Operational teams benefit from Kubernetes’ unified API and extensible architecture, which integrate seamlessly with CI/CD pipelines, monitoring tools (e.g., Prometheus), and service meshes (e.g., Istio). This standardization reduces the cognitive load of managing disparate tools and environments. Multi-tenancy features, such as namespaces and role-based access control (RBAC), allow organizations to securely share clusters across teams, further consolidating infrastructure and trimming administrative overhead.
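As a sketch of how namespaces and RBAC combine for multi-tenancy, the snippet below builds a `Role` that grants read-only access to pods in one namespace and a `RoleBinding` that attaches it to a group. The manifest fields follow the `rbac.authorization.k8s.io/v1` API; the namespace, group, and object names are hypothetical. The output is JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_access(namespace, group):
    """Build a Role and RoleBinding letting `group` read pods in `namespace`.

    Object names such as "pod-reader" are illustrative assumptions.
    """
    role = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        # The empty string denotes the core API group, where pods live.
        "rules": [{"apiGroups": [""], "resources": ["pods"],
                   "verbs": ["get", "list", "watch"]}],
    }
    binding = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "pod-reader-binding", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_GROUP},
    }
    return [role, binding]


if __name__ == "__main__":
    items = read_only_access("team-payments", "payments-developers")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Because both objects are namespaced, the permission applies only inside `team-payments`. Another team sharing the same cluster would get its own namespace and bindings.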

Elastic Scalability Across Diverse Environments

Kubernetes’ true power lies in its ability to scale applications and infrastructure across heterogeneous environments—whether public clouds, private data centers, or edge devices. By abstracting applications from underlying infrastructure, Kubernetes ensures workloads run consistently anywhere, enabling a “build once, deploy anywhere” strategy. This portability is critical for businesses adopting hybrid or multi-cloud architectures to avoid vendor lock-in, comply with data residency laws, or optimize latency by processing data closer to users (e.g., IoT edge nodes).

Scaling is not limited to applications; Kubernetes also simplifies infrastructure expansion. For example, a retailer can deploy a centralized Kubernetes cluster in AWS during peak holiday seasons while maintaining a smaller on-premises cluster for day-to-day operations, dynamically shifting workloads as needed. Similarly, automotive companies use Kubernetes to manage real-time analytics across thousands of edge devices in manufacturing plants, scaling compute resources locally without relying on centralized cloud systems.

Liberation from Legacy Infrastructure

Kubernetes enables organizations to bypass the limitations of existing systems. Teams can modernize legacy applications incrementally by containerizing components and deploying them alongside cloud-native services in Kubernetes clusters—without overhauling entire systems overnight. This approach avoids costly “rip-and-replace” projects while accelerating innovation.

Moreover, Kubernetes democratizes access to advanced infrastructure capabilities. Startups and smaller enterprises can deploy production-grade orchestration without investing in expensive hardware or specialized teams, leveling the playing field against larger competitors. Open-source distributions like K3s or managed services like Google Kubernetes Engine (GKE) further reduce barriers to entry, offering lightweight or fully automated Kubernetes experiences tailored to diverse needs.

Future-Proofing for Emerging Technologies

As technologies like AI/ML, serverless computing, and 5G networks reshape industries, Kubernetes provides a flexible foundation to adopt these innovations without reinventing infrastructure. Its extensible API supports custom operators and CRDs (Custom Resource Definitions), allowing teams to integrate Kubernetes with machine learning pipelines, blockchain networks, or IoT platforms. For instance, Kubernetes-native frameworks like Kubeflow streamline the deployment of distributed ML workloads, auto-scaling GPU resources on demand.
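To illustrate what extending the API with a CRD involves, the sketch below generates a minimal `CustomResourceDefinition` manifest for a hypothetical `TrainingJob` resource. The structure follows the `apiextensions.k8s.io/v1` API; the group `ml.example.com`, the kind, and the `image` and `gpus` fields are invented for the example and are not taken from Kubeflow or any other project.

```python
import json


def crd_manifest(group, kind, plural):
    """Build a minimal namespaced CustomResourceDefinition manifest.

    The schema fields (`image`, `gpus`) are hypothetical examples.
    """
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # The CRD name must be "<plural>.<group>".
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"kind": kind, "singular": kind.lower(), "plural": plural},
            "versions": [{
                "name": "v1alpha1",
                "served": True,
                "storage": True,
                "schema": {"openAPIV3Schema": {
                    "type": "object",
                    "properties": {"spec": {
                        "type": "object",
                        "required": ["image"],
                        "properties": {
                            "image": {"type": "string"},
                            "gpus": {"type": "integer", "minimum": 0},
                        },
                    }},
                }},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(crd_manifest("ml.example.com", "TrainingJob", "trainingjobs"), indent=2))
```

Once a definition like this is applied, the cluster serves `TrainingJob` objects through the same API as built-in resources. An operator is the custom controller that watches those objects and acts on them.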

Kubernetes also supports serverless architectures through projects like Knative, which adds request-driven autoscaling (including scale-to-zero) on top of a cluster while retaining portability across clouds. This adaptability ensures organizations can pivot quickly as market demands evolve, avoiding the sunk costs of rigid, proprietary systems.

Key Takeaways:

- Cost Efficiency: Kubernetes reduces waste through auto-scaling, bin packing, and spot instance integration, slashing cloud bills by up to 70% in optimized setups.

- Operational Simplicity: Automation of deployments, self-healing, and multi-tenancy cut manual effort, freeing teams to focus on innovation.

- Unified Scalability: Applications scale seamlessly across clouds, on-premises, and edge, ensuring agility in heterogeneous environments.

- Infrastructure Agnosticism: Break free from vendor lock-in and legacy systems with a portable, future-proof platform.

- Innovation Enablement: Kubernetes’ extensibility supports cutting-edge use cases, from AI to serverless, positioning businesses for long-term growth.

In an era where digital resilience and speed define competitive advantage, Kubernetes is not merely a tool—it’s a strategic imperative. By embracing its capabilities, organizations can transcend infrastructure limitations, optimize spending, and scale innovation sustainably.
