Kubernetes on the Edge: Running Containers Closer to Users


Introduction

DevOps teams are constantly looking for ways to improve application performance and deliver low-latency user experiences. Traditional cloud deployments work well, but they add latency because every request has to travel between users and centralized data centers. Kubernetes on the Edge is an emerging approach that moves containerized applications closer to end users, improving responsiveness and efficiency. This post explores how Kubernetes on the Edge is changing DevOps workflows and enabling next-generation applications.

What is Kubernetes on the Edge?

Kubernetes on the Edge refers to deploying and managing containerized applications in edge computing environments rather than only in centralized cloud data centers. Edge computing performs computation and data storage closer to where data is generated, which reduces latency and bandwidth usage.
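A back-of-the-envelope calculation shows why distance matters. Signals in optical fiber travel at roughly two-thirds of the speed of light, about 2 × 10^5 km/s, which puts a physical floor under round-trip time (RTT). The two distances below are illustrative assumptions, and real requests add routing, queuing, and processing delays on top of this floor:

```latex
\[
\text{RTT}_{\min} \approx \frac{2d}{v_{\text{fiber}}}, \qquad v_{\text{fiber}} \approx 2 \times 10^{5}\ \text{km/s}
\]
\[
d = 1500\ \text{km (regional cloud)}: \quad \text{RTT}_{\min} \approx \frac{2 \times 1500}{2 \times 10^{5}}\ \text{s} = 0.015\ \text{s} = 15\ \text{ms}
\]
\[
d = 10\ \text{km (edge site)}: \quad \text{RTT}_{\min} \approx \frac{2 \times 10}{2 \times 10^{5}}\ \text{s} = 10^{-4}\ \text{s} = 0.1\ \text{ms}
\]
```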

In the DevOps ecosystem, Kubernetes on the Edge lets teams deploy microservices across geographically distributed environments, such as IoT devices, 5G networks, smart cities, and autonomous vehicles, enabling real-time processing and high availability.

How it Works

Architecture and Core Components

- Edge Nodes – These are lightweight computing devices or mini data centers located near users.

- Kubernetes Control Plane – Can be centralized in a cloud data center or distributed across edge locations.

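- KubeEdge, K3s, or MicroK8s – KubeEdge extends an existing Kubernetes control plane out to edge nodes, while K3s and MicroK8s are lightweight Kubernetes distributions suited to resource-constrained edge hardware.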

- Containerized Applications – Workloads are deployed as microservices on edge nodes.

- Networking & Security – Service meshes such as Istio and Linkerd (for example, with mutual TLS between services), together with Kubernetes network policies, help secure communication.
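One common way to steer workloads onto edge nodes is a node label combined with a `nodeSelector`. The sketch below assumes a single-node MicroK8s cluster and a hypothetical label `example.com/node-tier=edge`; in a mixed cluster you would label only the edge machines (for example `microk8s kubectl label node <node-name> example.com/node-tier=edge`). It writes the manifest to `edge-app.yaml` and only talks to a cluster if `microk8s` is installed:

```shell
# Hypothetical label used to mark edge nodes; choose your own convention.
EDGE_LABEL_KEY="example.com/node-tier"
EDGE_LABEL_VALUE="edge"

# Deployment that the scheduler will only place on nodes carrying that label.
cat > edge-app.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: edge-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: edge-app
  template:
    metadata:
      labels:
        app: edge-app
    spec:
      nodeSelector:
        ${EDGE_LABEL_KEY}: "${EDGE_LABEL_VALUE}"
      containers:
        - name: web
          image: nginx
          ports:
            - containerPort: 80
EOF

# Apply only when MicroK8s is present on this machine.
if command -v microk8s >/dev/null 2>&1; then
  # Single-node assumption: label every node in the cluster as an edge node.
  microk8s kubectl label nodes --all "${EDGE_LABEL_KEY}=${EDGE_LABEL_VALUE}" --overwrite
  microk8s kubectl apply -f edge-app.yaml
fi
```

If no node carries the label, the pod simply stays `Pending`, which makes a mislabeled edge site easy to spot with `microk8s kubectl get pods`.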

Real-World Example

Consider a retail chain that uses edge Kubernetes to process customer data locally in each store. Instead of sending every transaction to a central cloud, each store's edge server processes transactions in real time and syncs with the cloud periodically.
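The "sync with the cloud periodically" part of this pattern maps naturally onto a Kubernetes CronJob. This is a minimal sketch: the image `registry.example.com/store-sync:latest` and the `CENTRAL_API_URL` endpoint are hypothetical placeholders for whatever uploader a store actually runs, and the 15-minute schedule is an arbitrary choice:

```shell
# Hypothetical periodic sync job; image and endpoint are placeholders.
cat > store-sync-cronjob.yaml <<'EOF'
apiVersion: batch/v1
kind: CronJob
metadata:
  name: store-sync
spec:
  # Standard cron syntax: every 15 minutes.
  schedule: "*/15 * * * *"
  # Skip a run if the previous sync is still in progress.
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      backoffLimit: 3
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: sync
              image: registry.example.com/store-sync:latest
              env:
                - name: CENTRAL_API_URL
                  value: "https://central.example.com/api/transactions"
EOF

# Apply only when MicroK8s is present on this machine.
if command -v microk8s >/dev/null 2>&1; then
  microk8s kubectl apply -f store-sync-cronjob.yaml
fi
```

`concurrencyPolicy: Forbid` keeps a slow upload, for example over a congested store uplink, from overlapping with the next scheduled run.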

Key Features & Benefits

- Low Latency: Applications respond faster as processing occurs closer to users.

- Reduced Bandwidth Usage: Less data is transmitted to the cloud, saving costs.

- Offline Operation: Some workloads can function even without internet connectivity.

- Improved Scalability: Edge Kubernetes enables dynamic scaling across multiple locations.

- Enhanced Security: Keeping sensitive data on-site reduces how much of it crosses public networks, limiting its exposure in transit.

Use Cases & Industry Adoption

- Telecommunications: 5G networks use Kubernetes on the Edge to deliver ultra-fast, low-latency services.

- Manufacturing: Industrial IoT devices use edge Kubernetes for real-time monitoring and automation.

- Healthcare: Smart hospitals leverage edge computing for AI-driven diagnostics and patient monitoring.

- Retail: In-store analytics and personalized customer experiences are powered by edge Kubernetes.

- Autonomous Vehicles: Self-driving cars process data locally for quick decision-making.

Comparison with Alternatives

| Feature | Kubernetes on the Edge | Traditional Cloud Kubernetes | On-Prem Kubernetes |
|---|---|---|---|
| Latency | Very low | Higher | Moderate |
| Scalability | High | High | Limited |
| Bandwidth Usage | Low | High | Moderate |
| Cost Efficiency | Optimized | Expensive | Moderate |
| Complexity | High | Moderate | High |

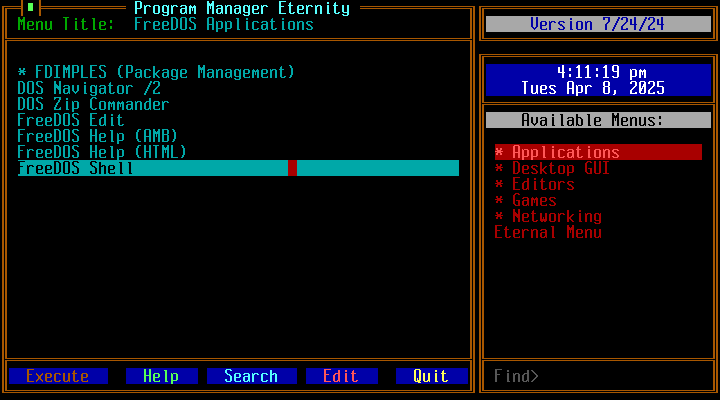

Step-by-Step Implementation

1. Install MicroK8s on an Edge Node

```shell
sudo snap install microk8s --classic
sudo usermod -a -G microk8s $USER   # run microk8s without sudo; log out and back in first
microk8s status --wait-ready
```

2. Deploy an Application

```shell
microk8s kubectl create deployment nginx --image=nginx
```

3. Expose the Application

```shell
microk8s kubectl expose deployment nginx --port=80 --type=NodePort
```

4. Verify Deployment

```shell
microk8s kubectl get pods,services
```
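The imperative commands above are handy on a single node, but across many edge sites a declarative manifest that can be versioned and applied identically everywhere is easier to manage. The following sketch is roughly equivalent to steps 2 and 3 (the `app: nginx` label matches what `kubectl create deployment` generates); it assumes a fresh node where those commands have not already been run:

```shell
# Declarative equivalent of "create deployment" + "expose --type=NodePort".
cat > nginx-edge.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
EOF

# Apply only when MicroK8s is present on this machine.
if command -v microk8s >/dev/null 2>&1; then
  microk8s kubectl apply -f nginx-edge.yaml
  # Print the node port Kubernetes allocated for the Service.
  microk8s kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'
fi
```

By default Kubernetes allocates the node port from the 30000-32767 range, so the service is reachable at `http://<node-ip>:<node-port>`.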

Latest Updates & Trends

- K3s and MicroK8s adoption is increasing for lightweight Kubernetes edge deployments.

- 5G networks are integrating edge Kubernetes to reduce latency for connected devices.

- AI and ML at the edge are becoming more prevalent in smart applications.

- Security enhancements like zero-trust networking are being adopted in edge Kubernetes.
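A practical first step toward zero-trust networking inside a cluster is to deny all inbound pod traffic by default and then allow only what each service needs. The sketch below does this for the `nginx` example from the implementation steps; it assumes the workload runs in the `default` namespace, and the policies are only enforced if the cluster's network plugin supports NetworkPolicy (Calico, for example):

```shell
cat > default-deny.yaml <<'EOF'
# Deny all ingress traffic to every pod in the namespace by default.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
    - Ingress
---
# Then explicitly allow TCP port 80 into pods labelled app=nginx.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-nginx-http
spec:
  podSelector:
    matchLabels:
      app: nginx
  policyTypes:
    - Ingress
  ingress:
    - ports:
        - protocol: TCP
          port: 80
EOF

# Apply only when MicroK8s is present on this machine.
if command -v microk8s >/dev/null 2>&1; then
  microk8s kubectl apply -f default-deny.yaml
fi
```

Network policies cover traffic filtering only; identity and encryption between services come from a service mesh layer such as Istio or Linkerd.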

Challenges & Considerations

- Limited Computing Resources: Edge devices often have lower processing power than cloud servers.

- Network Reliability: Links between edge nodes and a central control plane can be slow or intermittent, so workloads must tolerate periods of disconnection.

- Security Risks: Data at the edge can be more vulnerable to local cyberattacks.

- Complex Deployment & Management: Managing distributed workloads requires expertise.
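Two of these challenges, limited resources and unreliable links, can be partly addressed in the workload spec itself. In the sketch below, resource requests and limits keep a pod from starving other workloads on small hardware, and longer `NoExecute` tolerations tell the control plane to wait before evicting pods from a node it can no longer reach (the default is 300 seconds). The CPU, memory, and 3600-second values are illustrative assumptions, not recommendations:

```shell
cat > edge-resilient.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: edge-resilient
spec:
  replicas: 1
  selector:
    matchLabels:
      app: edge-resilient
  template:
    metadata:
      labels:
        app: edge-resilient
    spec:
      # Illustrative: wait 1 hour (instead of the 300 s default) before
      # evicting this pod from a node that is unreachable or not ready.
      tolerations:
        - key: node.kubernetes.io/unreachable
          operator: Exists
          effect: NoExecute
          tolerationSeconds: 3600
        - key: node.kubernetes.io/not-ready
          operator: Exists
          effect: NoExecute
          tolerationSeconds: 3600
      containers:
        - name: app
          image: nginx
          # Illustrative sizing: requests reserve capacity, limits cap usage.
          resources:
            requests:
              cpu: 100m
              memory: 64Mi
            limits:
              cpu: 250m
              memory: 128Mi
EOF

# Apply only when MicroK8s is present on this machine.
if command -v microk8s >/dev/null 2>&1; then
  microk8s kubectl apply -f edge-resilient.yaml
fi
```

Pods already running on a disconnected node keep running locally either way; the toleration controls how soon the control plane gives up on them and schedules replacements elsewhere.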

Conclusion & Future Scope

Kubernetes on the Edge is changing the way DevOps teams deploy and manage applications. By bringing workloads closer to users, it improves performance, scalability, and efficiency. However, challenges such as resource limitations and security concerns still need to be addressed. As edge computing continues to evolve, Kubernetes will play a vital role in enabling real-time applications, making it a key technology for the future of DevOps.


Kubernetes on the Edge is not just a trend—it’s a transformative shift in cloud-native computing. As DevOps professionals, staying ahead in this field will be essential for optimizing next-generation applications. Happy learning!
