How to Build Agentic RAG in Rust

The Problem: RAG That Searches for Everything

Traditional RAG systems have a glaring issue: they search for everything. User asks about pizza recipes? Search the knowledge base. Wants to know the weather? Search again. Having a casual conversation? Yep, another search.

This shotgun approach leads to:

- Irrelevant context cluttering the LLM's input

- Higher costs from unnecessary searches and longer prompts

- Slower responses due to constant database hits

- Confused AI trying to make sense of unrelated documents

What if the AI could decide for itself when it actually needs to search?

Enter Agentic RAG: The LLM Calls the Shots

Agentic RAG flips the script. Instead of automatically searching on every query, we give the language model tools it can choose to use. Think of it like handing someone a toolbox—they'll grab a hammer when they need to drive a nail, not when they're stirring soup.
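To make the difference concrete, here is a minimal sketch contrasting the two control flows. It assumes a hypothetical `SearchCounter` in place of a real vector store and a plain closure in place of the LLM's decision. None of these names (`SearchCounter`, `traditional_rag`, `agentic_rag`) come from the original source code.

```rust
/// Hypothetical stand-in for a vector-store query; it only records how often it ran.
struct SearchCounter {
    calls: usize,
}

impl SearchCounter {
    fn search(&mut self, query: &str) -> Vec<String> {
        self.calls += 1;
        // A real system would embed `query` and hit the vector database here.
        vec![format!("chunk relevant to '{query}'")]
    }
}

/// Traditional RAG: retrieval runs unconditionally for every query.
/// Returns how many context chunks were attached to each query.
fn traditional_rag(queries: &[&str], store: &mut SearchCounter) -> Vec<usize> {
    queries.iter().map(|&q| store.search(q).len()).collect()
}

/// Agentic RAG: the model's decision (assumed here to be a closure) gates retrieval.
fn agentic_rag<F>(queries: &[&str], store: &mut SearchCounter, model_wants_search: F) -> Vec<usize>
where
    F: Fn(&str) -> bool,
{
    queries
        .iter()
        .map(|&q| if model_wants_search(q) { store.search(q).len() } else { 0 })
        .collect()
}
```

With the three queries from the opening paragraph (pizza, weather, small talk) and a decider that only opts in for the pizza question, the traditional version searches three times while the agentic version searches once.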

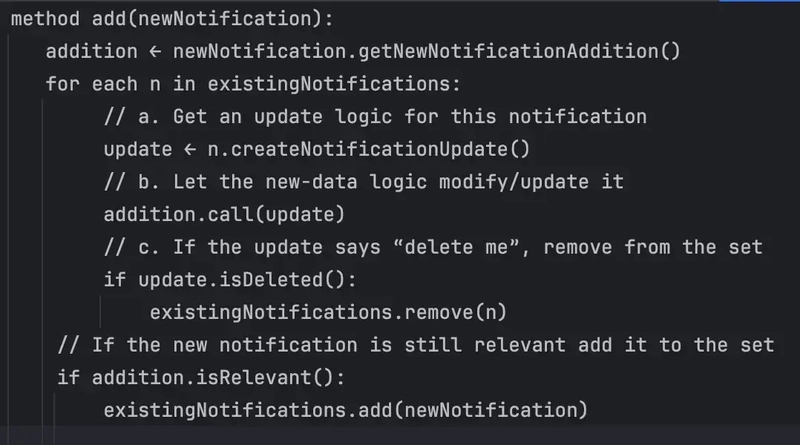

In our implementation, the LLM gets two main tools:

- Search tool: "I need information about X"

- Chunk selection tool: "I'll use these specific documents"
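The two tools can be modeled as a Rust enum that the application parses the model's tool call into. The sketch below uses hypothetical names (`ToolCall`, `Chunk`, `KnowledgeBase`, `execute`) and substitutes naive keyword overlap for embedding similarity; a production system would query a vector database instead.

```rust
/// The two tools, as the structured calls the model may emit (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
enum ToolCall {
    /// "I need information about X": run a retrieval query.
    Search { query: String, top_k: usize },
    /// "I'll use these specific documents": keep only the listed chunk ids.
    SelectChunks { ids: Vec<u32> },
}

#[derive(Debug, Clone, PartialEq)]
struct Chunk {
    id: u32,
    text: String,
}

/// Toy in-memory knowledge base; keyword overlap stands in for vector similarity.
struct KnowledgeBase {
    chunks: Vec<Chunk>,
}

impl KnowledgeBase {
    fn search(&self, query: &str, top_k: usize) -> Vec<Chunk> {
        let terms: Vec<String> = query.split_whitespace().map(|t| t.to_lowercase()).collect();
        let mut scored: Vec<(usize, &Chunk)> = self
            .chunks
            .iter()
            .map(|c| {
                let text = c.text.to_lowercase();
                // Score = number of query terms that appear in the chunk.
                (terms.iter().filter(|t| text.contains(t.as_str())).count(), c)
            })
            .filter(|(score, _)| *score > 0)
            .collect();
        // Highest overlap first; ties broken by id so results are deterministic.
        scored.sort_by(|a, b| b.0.cmp(&a.0).then(a.1.id.cmp(&b.1.id)));
        scored.into_iter().take(top_k).map(|(_, c)| c.clone()).collect()
    }
}

/// Executes one tool call. `candidates` holds the chunks from the latest search,
/// so `SelectChunks` can only pick documents the model has actually seen.
fn execute(call: &ToolCall, kb: &KnowledgeBase, candidates: &mut Vec<Chunk>) -> Vec<Chunk> {
    match call {
        ToolCall::Search { query, top_k } => {
            *candidates = kb.search(query, *top_k);
            candidates.clone()
        }
        ToolCall::SelectChunks { ids } => candidates
            .iter()
            .filter(|c| ids.contains(&c.id))
            .cloned()
            .collect(),
    }
}
```

Restricting `SelectChunks` to the latest search results is one possible design choice: it keeps the model from citing documents it never retrieved. Whether the original implementation does the same is not stated here.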

The magic happens when the AI decides it needs more information. Only then does it reach for the search tool. If you are only interested in the source code (Rust btw
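The decision itself lives in a loop: on each turn the model either calls a tool or answers, and retrieval runs only when it asks. This is a minimal sketch assuming a hypothetical `ChatModel` trait (a real client would send the transcript plus the tool definitions to an LLM API) and a hypothetical `ScriptedModel` that replays fixed actions so the loop runs without network access.

```rust
/// What the model returns on each turn: a tool call or a final answer.
#[derive(Debug, Clone)]
enum ModelAction {
    Search(String),
    SelectChunks(Vec<usize>),
    Answer(String),
}

/// Hypothetical interface to an LLM that supports tool calling.
trait ChatModel {
    fn next_action(&mut self, transcript: &[String]) -> ModelAction;
}

/// Runs the agent loop and returns the final answer plus the number of searches,
/// or `None` if the step budget runs out before the model answers.
fn run_agent(
    model: &mut dyn ChatModel,
    search: &dyn Fn(&str) -> Vec<String>,
    user_query: &str,
    max_steps: usize,
) -> Option<(String, usize)> {
    let mut transcript = vec![format!("user: {user_query}")];
    let mut last_results: Vec<String> = Vec::new();
    let mut searches = 0;
    for _ in 0..max_steps {
        match model.next_action(&transcript) {
            ModelAction::Answer(text) => return Some((text, searches)),
            ModelAction::Search(q) => {
                // Retrieval happens only because the model explicitly asked for it.
                searches += 1;
                last_results = search(q.as_str());
                // Feed numbered results back so the model can refer to them by index.
                for (i, r) in last_results.iter().enumerate() {
                    transcript.push(format!("search[{i}]: {r}"));
                }
            }
            ModelAction::SelectChunks(idx) => {
                // Keep only the chunks the model picked; out-of-range indices are ignored.
                let chosen: Vec<&String> =
                    idx.iter().filter_map(|&i| last_results.get(i)).collect();
                transcript.push(format!("context: {chosen:?}"));
            }
        }
    }
    None
}

/// Scripted stand-in for a real model, replaying a fixed sequence of actions.
struct ScriptedModel {
    script: Vec<ModelAction>,
}

impl ChatModel for ScriptedModel {
    fn next_action(&mut self, _transcript: &[String]) -> ModelAction {
        if self.script.is_empty() {
            ModelAction::Answer(String::new())
        } else {
            self.script.remove(0)
        }
    }
}
```

The `max_steps` cap guards against a model that keeps calling tools without ever answering. With the scripted model, a small-talk message returns an answer after zero searches, while a recipe question that searches, selects a chunk, and then answers triggers exactly one.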