Google DeepMind Unveils QuestBench to Enhance LLM Evaluation

Originally published at ssojet Google DeepMind’s QuestBench benchmark evaluates if LLMs can pinpoint crucial questions needed to solve logic, planning, or math problems. The recent article published by the DeepMind team describes a set of underspecified reasoning tasks solvable by asking at most one question. Large language models (LLMs) are increasingly applied to reasoning tasks such as math and logic. These applications typically assume that tasks are well-defined with all necessary information provided. However, real-world queries often lack critical details, necessitating LLMs to gather missing information proactively. This means LLMs must generate clarifying questions to address underspecified problems effectively. The team formalizes the information-gathering task as an underspecified Constraint Satisfaction Problem (CSP). This formalization distinguishes between semantic ambiguity and underspecification. The QuestBench effort focuses on cases where the user has not provided sufficient information for the model to fulfill the request, which can occur when users are unaware of what information the model lacks. The evaluation framework categorizes tasks into four areas: Logic-Q for logical reasoning, Planning-Q for planning problems defined in Planning Domain Definition Language (PDDL), GSM-Q for grade school math problems with one missing variable, and GSME-Q for similar problems translated into equations. The datasets for QuestBench involve constructing sufficient CSPs in logical reasoning, planning, and math domains. The evaluations included several state-of-the-art LLMs such as GPT-4o, Claude 3.5 Sonnet, and open-sourced Gemma models. The study found that LLMs performed well on GSM-Q and GSME-Q domains with over 80% accuracy, while struggling with Logic-Q and Planning-Q, where they barely exceeded 50% accuracy. For further details, visit the website and access the GitHub project for code to generate QuestBench data. Researchers Claim Breakthrough in Fight Against AI’s Security Hole Prompt injections have been a significant vulnerability in AI systems. Google has introduced CaMeL, a new system designed to mitigate these attacks by treating language models as untrusted components within a secure framework. This approach avoids the failed strategy of self-policing AI models. CaMeL's design is grounded in established software security principles like Control Flow Integrity and Access Control. The goal is to create boundaries between user commands and potentially malicious content, effectively addressing the challenge of maintaining trust in AI interactions. Prompt injections occur when AI systems cannot differentiate between legitimate user commands and malicious instructions. The CaMeL system splits responsibilities between two language models: a "privileged LLM" (P-LLM) that generates code for user commands and a "quarantined LLM" (Q-LLM) that processes unstructured data. This separation ensures that malicious text cannot influence the actions taken by the AI. The CaMeL architecture was tested against the AgentDojo benchmark and showed a high level of utility while resisting previously unsolvable prompt-injection attacks. The research team believes this architecture can also address insider threats and data exfiltration attempts. For more information, you can read the paper here. What's Next for AI at DeepMind At Google DeepMind, researchers are pursuing artificial general intelligence (AGI), which aims to develop a silicon intellect as versatile as a human's but with superior speed and knowledge. The focus is on creating systems that can learn and adapt in ways comparable to human cognition. For updates on DeepMind's ongoing research and developments, visit the CBS News segment. Google DeepMind Scientists Win Nobel Prize for AlphaFold AI Project Demis Hassabis and John Jumper received the Nobel Prize for their innovative work on AlphaFold, an AI model predicting protein structures. This project has transformed biological research by allowing scientists to predict the structure of over 200 million proteins. AlphaFold2 has been widely adopted, with over 2 million users globally. Hassabis and Jumper noted that their upcoming version, AlphaFold3, will be made available for free to the scientific community, further accelerating advancements in medical treatment development and biological understanding. For more details, read the full announcement here. Explore secure authentication solutions with SSOJet's API-first platform, designed to implement secure SSO and user management for enterprise clients. With features like directory sync, SAML, OIDC, and magic link authentication, SSOJet ensures your enterprise remains protected against breaches and unauthorized access. Visit SSOJet to learn more.

Originally published at ssojet

Google DeepMind’s QuestBench benchmark evaluates if LLMs can pinpoint crucial questions needed to solve logic, planning, or math problems. The recent article published by the DeepMind team describes a set of underspecified reasoning tasks solvable by asking at most one question.

Large language models (LLMs) are increasingly applied to reasoning tasks such as math and logic. These applications typically assume that tasks are well-defined with all necessary information provided. However, real-world queries often lack critical details, necessitating LLMs to gather missing information proactively. This means LLMs must generate clarifying questions to address underspecified problems effectively.

The team formalizes the information-gathering task as an underspecified Constraint Satisfaction Problem (CSP). This formalization distinguishes between semantic ambiguity and underspecification. The QuestBench effort focuses on cases where the user has not provided sufficient information for the model to fulfill the request, which can occur when users are unaware of what information the model lacks.

The evaluation framework categorizes tasks into four areas: Logic-Q for logical reasoning, Planning-Q for planning problems defined in Planning Domain Definition Language (PDDL), GSM-Q for grade school math problems with one missing variable, and GSME-Q for similar problems translated into equations. The datasets for QuestBench involve constructing sufficient CSPs in logical reasoning, planning, and math domains.

The evaluations included several state-of-the-art LLMs such as GPT-4o, Claude 3.5 Sonnet, and open-sourced Gemma models. The study found that LLMs performed well on GSM-Q and GSME-Q domains with over 80% accuracy, while struggling with Logic-Q and Planning-Q, where they barely exceeded 50% accuracy.

For further details, visit the website and access the GitHub project for code to generate QuestBench data.

Researchers Claim Breakthrough in Fight Against AI’s Security Hole

Prompt injections have been a significant vulnerability in AI systems. Google has introduced CaMeL, a new system designed to mitigate these attacks by treating language models as untrusted components within a secure framework. This approach avoids the failed strategy of self-policing AI models.

CaMeL's design is grounded in established software security principles like Control Flow Integrity and Access Control. The goal is to create boundaries between user commands and potentially malicious content, effectively addressing the challenge of maintaining trust in AI interactions.

Prompt injections occur when AI systems cannot differentiate between legitimate user commands and malicious instructions. The CaMeL system splits responsibilities between two language models: a "privileged LLM" (P-LLM) that generates code for user commands and a "quarantined LLM" (Q-LLM) that processes unstructured data. This separation ensures that malicious text cannot influence the actions taken by the AI.

The CaMeL architecture was tested against the AgentDojo benchmark and showed a high level of utility while resisting previously unsolvable prompt-injection attacks. The research team believes this architecture can also address insider threats and data exfiltration attempts.

For more information, you can read the paper here.

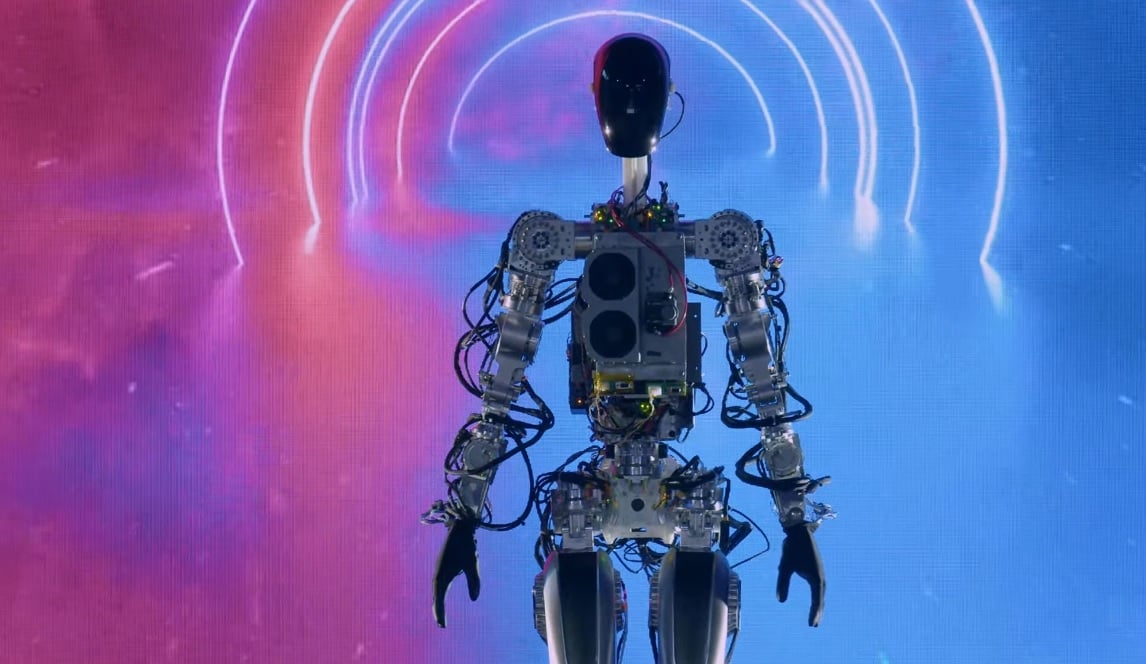

What's Next for AI at DeepMind

At Google DeepMind, researchers are pursuing artificial general intelligence (AGI), which aims to develop a silicon intellect as versatile as a human's but with superior speed and knowledge. The focus is on creating systems that can learn and adapt in ways comparable to human cognition.

For updates on DeepMind's ongoing research and developments, visit the CBS News segment.

Google DeepMind Scientists Win Nobel Prize for AlphaFold AI Project

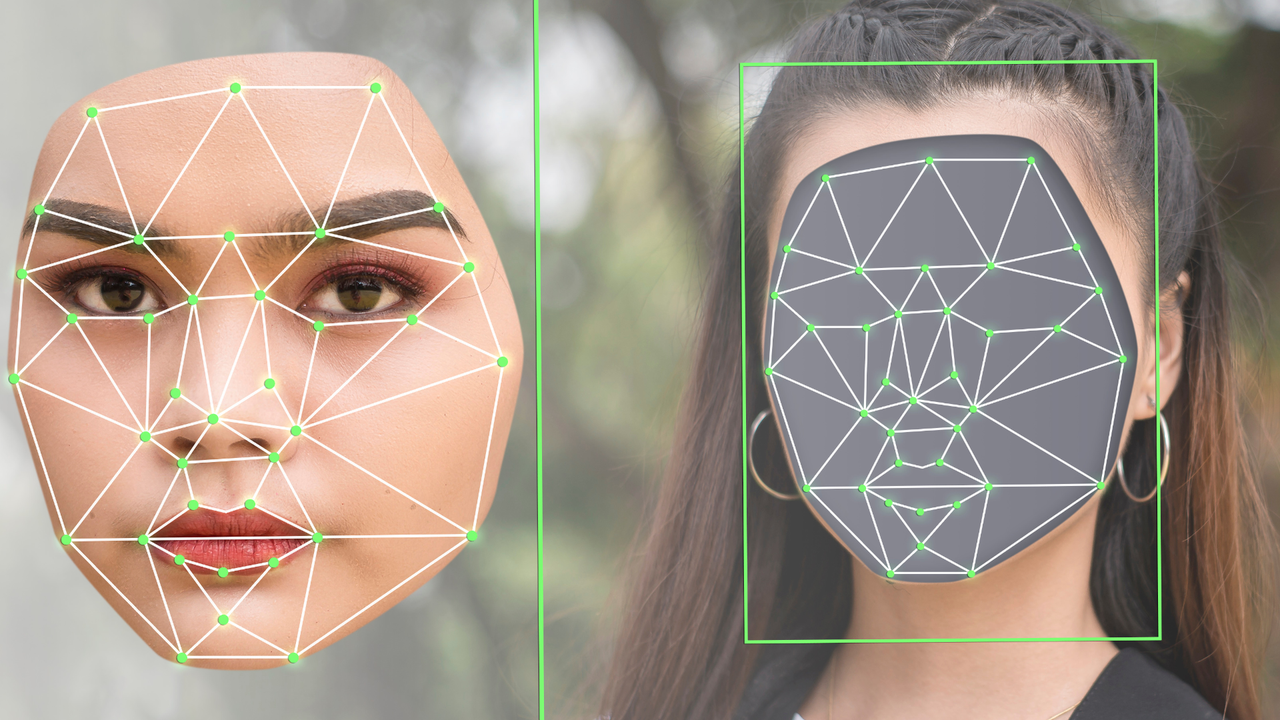

Demis Hassabis and John Jumper received the Nobel Prize for their innovative work on AlphaFold, an AI model predicting protein structures. This project has transformed biological research by allowing scientists to predict the structure of over 200 million proteins.

AlphaFold2 has been widely adopted, with over 2 million users globally. Hassabis and Jumper noted that their upcoming version, AlphaFold3, will be made available for free to the scientific community, further accelerating advancements in medical treatment development and biological understanding.

For more details, read the full announcement here.

Explore secure authentication solutions with SSOJet's API-first platform, designed to implement secure SSO and user management for enterprise clients. With features like directory sync, SAML, OIDC, and magic link authentication, SSOJet ensures your enterprise remains protected against breaches and unauthorized access. Visit SSOJet to learn more.

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![Is This Programming Paradigm New? [closed]](https://miro.medium.com/v2/resize:fit:1200/format:webp/1*nKR2930riHA4VC7dLwIuxA.gif)

-Classic-Nintendo-GameCube-games-are-coming-to-Nintendo-Switch-2!-00-00-13.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

![New iPhone 17 Dummy Models Surface in Black and White [Images]](https://www.iclarified.com/images/news/97106/97106/97106-640.jpg)

![Hands-On With 'iPhone 17 Air' Dummy Reveals 'Scary Thin' Design [Video]](https://www.iclarified.com/images/news/97100/97100/97100-640.jpg)