From Protocol to Production: How Model Context Protocol (MCP) Gateways Enable Secure, Scalable, and Seamless AI Integrations Across Enterprises

The Model Context Protocol (MCP) has rapidly become a cornerstone for integrating AI models with the broader software ecosystem. Developed by Anthropic, MCP standardizes how a language model or autonomous agent discovers and invokes external services, whether REST APIs, database queries, file system operations, or hardware controls. By exposing each capability as a self-describing “tool,” MCP eliminates the tedium of writing bespoke connectors for every new integration and offers a plug-and-play interface.
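To make the idea of a self-describing tool concrete, here is a minimal sketch in Python. The `get_weather` tool and the `build_tool_call` helper are invented for illustration. The descriptor fields (`name`, `description`, `inputSchema`) and the JSON-RPC 2.0 `tools/call` method follow the shape MCP uses for listing and invoking tools, but this is a sketch and not a complete client.

```python
import json

# Hypothetical tool descriptor. The tool itself is made up; the field names
# mirror what an MCP server returns when a client lists its tools.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def build_tool_call(request_id: int, tool: dict, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 `tools/call` request after checking required arguments."""
    required = tool["inputSchema"].get("required", [])
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    })
```

Because the descriptor carries its own input schema, a client can check arguments before sending anything over the wire, which is what removes the need for a hand-written connector per integration.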

The Role of Gateways in Production

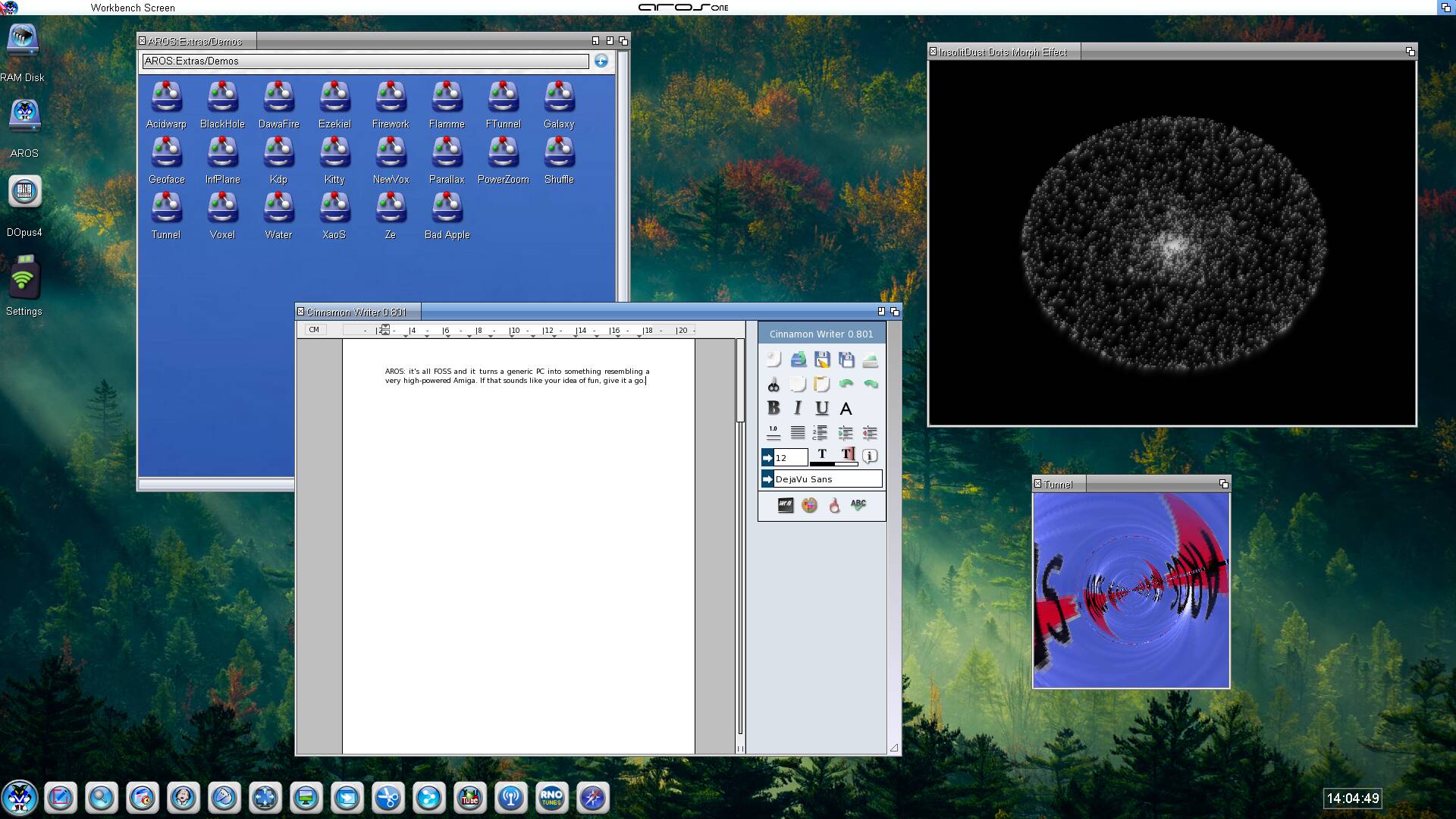

While MCP’s specification defines the mechanics of tool invocation and result streaming, it does not prescribe how to manage those connections at scale or enforce enterprise policies. That responsibility falls to MCP gateways, which act as centralized intermediaries between AI clients and tool servers. A gateway translates local transports (for example, STDIO or Unix sockets) into network-friendly protocols such as HTTP with Server-Sent Events or WebSockets. It also maintains a catalog of available tools, applies authentication and authorization rules, sanitizes inputs to defend against prompt injections, and aggregates logs and metrics for operational visibility. Without a gateway, every AI instance must handle these concerns independently, an approach that rapidly becomes unmanageable in multi-tenant, multi-service environments.
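The responsibilities listed above (catalog, authorization, logging) can be sketched as a toy in-process class. `MiniGateway` and all of its method names are hypothetical. A real gateway would sit on a network transport and delegate to remote tool servers, so treat this only as an illustration of where each concern lives.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

ToolFn = Callable[[dict], Any]


@dataclass
class MiniGateway:
    """Toy gateway: a tool catalog, a per-client allow-list, and an audit log."""
    catalog: dict[str, ToolFn] = field(default_factory=dict)
    permissions: dict[str, set[str]] = field(default_factory=dict)
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def register(self, name: str, fn: ToolFn) -> None:
        self.catalog[name] = fn

    def allow(self, client_id: str, tool_name: str) -> None:
        self.permissions.setdefault(client_id, set()).add(tool_name)

    def list_tools(self, client_id: str) -> list[str]:
        # Clients only see the tools they are permitted to call.
        allowed = self.permissions.get(client_id, set())
        return sorted(name for name in self.catalog if name in allowed)

    def call(self, client_id: str, tool_name: str, arguments: dict) -> Any:
        if tool_name not in self.catalog:
            self.audit_log.append((client_id, tool_name, "unknown_tool"))
            raise KeyError(tool_name)
        if tool_name not in self.permissions.get(client_id, set()):
            self.audit_log.append((client_id, tool_name, "denied"))
            raise PermissionError(f"{client_id} may not call {tool_name}")
        result = self.catalog[tool_name](arguments)
        self.audit_log.append((client_id, tool_name, "ok"))
        return result
```

Centralizing these checks in one place is the point: each AI client calls `call` and never needs its own copy of the permission table or logging code.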

Open-Source Gateway Solutions

Among community-driven gateways, Lasso Security’s MCP Gateway stands out for its emphasis on built-in guardrails. Deployed as a lightweight Python service alongside AI applications, it intercepts tool requests to redact sensitive fields, enforces declarative policies that control each agent’s operations, and logs every invocation to standard SIEM platforms. Its plugin architecture allows security teams to introduce custom checks or data-loss-prevention measures without modifying the core code.
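As an illustration of field redaction driven by a declarative policy, the following sketch masks the values of sensitive keys in tool arguments before they are forwarded or logged. The `POLICY` layout, the key names, and the `redact` function are assumptions made for this example and are not taken from Lasso's code or configuration format.

```python
REDACTED = "[REDACTED]"

# Hypothetical declarative policy: which argument keys must never leave the gateway.
POLICY = {
    "sensitive_keys": {"password", "api_key", "ssn"},
}


def redact(value, sensitive_keys):
    """Return a copy of `value` with sensitive keys masked.

    Recurses into nested dicts and lists; the input is left unmodified.
    Key matching is case-insensitive.
    """
    if isinstance(value, dict):
        return {
            key: REDACTED if str(key).lower() in sensitive_keys
            else redact(item, sensitive_keys)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item, sensitive_keys) for item in value]
    return value
```

Keeping the list of sensitive keys in data rather than in code is what lets a security team change the policy without touching the gateway itself.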

In cloud-native settings, Solo.io’s Agent Gateway integrates MCP into an Envoy-based service mesh. Each MCP server registers itself with the gateway, which uses mutual TLS (leveraging SPIFFE identities) to authenticate clients and provides fine-grained rate limiting, with metrics and tracing exported through Prometheus and Jaeger. This Envoy-based approach ensures that MCP traffic receives the same robust networking controls and observability as any other microservice in the cluster.
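Fine-grained rate limiting is commonly implemented with a token bucket. The sketch below shows that general technique in Python and is not Envoy's or Solo.io's implementation. The class name, parameters, and injectable `clock` argument are choices made for this example.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` calls, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock            # injectable so the limiter can be tested
        self.tokens = capacity        # start with a full bucket
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A gateway would typically keep one bucket per client identity or per tool, so that a single noisy agent cannot starve the others.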

Acehoss’s remote proxy offers a minimal-footprint bridge for rapid prototyping or developer-focused demos. By wrapping a local STDIO-based MCP server in an HTTP/SSE endpoint, it exposes tool functionality to remote AI clients in minutes. Although it lacks enterprise-grade policy enforcement, its simplicity makes it ideal for exploration and proof-of-concept work.
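At the core of any STDIO-to-SSE bridge is a framing step: each JSON-RPC message read from the server's standard output is re-emitted as a Server-Sent Events frame. The helpers below sketch only that step. The function names are hypothetical, and the code is not taken from the Acehoss proxy.

```python
import json


def sse_event(payload: dict, event: str = "message") -> str:
    """Serialize a JSON-RPC message as a single Server-Sent Events frame."""
    data = json.dumps(payload, separators=(",", ":"))
    # An SSE frame is a set of "field: value" lines terminated by a blank line.
    return f"event: {event}\ndata: {data}\n\n"


def parse_sse_event(frame: str) -> tuple[str, dict]:
    """Parse a frame produced by `sse_event` back into (event name, payload)."""
    event = "message"
    data_lines = []
    for line in frame.splitlines():
        if line.startswith("event:"):
            event = line[len("event:"):].strip()
        elif line.startswith("data:"):
            data_lines.append(line[len("data:"):].lstrip())
    return event, json.loads("\n".join(data_lines))
```

A full proxy would also need an HTTP server, a subprocess holding the STDIO server, and a way to route client requests to the server's standard input. None of that is shown here.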

Enterprise-Grade Integration Platforms

Major cloud and integration vendors have embraced MCP by adapting their existing API management and iPaaS offerings. MCP servers can be published through Azure API Management like any REST API in the Azure ecosystem. Organizations leverage APIM policies to validate JSON Web Tokens, enforce IP restrictions, apply payload size limits, and collect rich telemetry via Azure Monitor. The familiar developer portal then serves as a catalog where teams can browse available MCP tools, test calls interactively, and obtain access credentials, all without standing up new infrastructure beyond Azure’s managed service.
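To show the kind of checks such policies perform, here is a plain-Python sketch of an IP restriction and a payload size limit. It does not use Azure API Management's policy language. The network range, the size limit, and the `admit` function are all assumptions for illustration, and token validation is omitted because it needs a JWT library.

```python
import ipaddress

# Hypothetical policy values; a real deployment would load these from configuration.
ALLOWED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]
MAX_PAYLOAD_BYTES = 64 * 1024


def admit(client_ip: str, body: bytes,
          allowed=ALLOWED_NETWORKS, max_bytes=MAX_PAYLOAD_BYTES) -> tuple[bool, str]:
    """Return (admitted, reason) for an incoming request."""
    address = ipaddress.ip_address(client_ip)
    if not any(address in network for network in allowed):
        return False, "ip_not_allowed"
    if len(body) > max_bytes:
        return False, "payload_too_large"
    return True, "ok"
```

Rejecting a request at this layer means the MCP server behind the gateway never sees it, which is the main appeal of reusing an existing API management tier.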

Salesforce’s MuleSoft Anypoint Platform has introduced an MCP connector in beta, turning any of MuleSoft’s hundreds of adapters, whether to SAP, Oracle, or custom databases, into MCP-compliant servers. The low-code connector in Anypoint Studio automatically generates the protocol boilerplate needed for discovery and invocation, while inheriting all of MuleSoft’s policy framework for data encryption, OAuth scopes, and audit logging. This approach empowers large enterprises to transform their integration backbone into a secure, governed set of AI-accessible tools.

Major Architectural Considerations

When evaluating MCP gateway options, it is important to consider deployment topology, transport support, and resilience. A standalone proxy that runs as a sidecar to your AI application offers the fastest path to adoption, but requires you to manage high availability and scaling yourself. By contrast, gateways built on API management or service-mesh platforms inherit clustering, multi-region failover, and rolling-upgrade capabilities. Transport flexibility, that is, support for both streaming via Server-Sent Events and full-duplex HTTP, ensures that long-running operations and incremental outputs do not stall the AI agent. Finally, look for gateways that can manage the lifecycle of tool-server processes, launching or restarting them as needed to maintain uninterrupted service.
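Lifecycle management of tool-server processes can be as simple as a supervisor that restarts a child when it exits. The sketch below uses Python's standard `subprocess` module. `ToolServerSupervisor`, the restart budget, and the placeholder command are hypothetical and do not describe any particular gateway.

```python
import subprocess
import sys

# Stand-in for a real tool-server command; this child exits immediately.
DEMO_COMMAND = [sys.executable, "-c", "pass"]


class ToolServerSupervisor:
    """Launch a STDIO tool server and restart it, up to a budget, when it exits."""

    def __init__(self, command: list[str], max_restarts: int = 3):
        self.command = command
        self.max_restarts = max_restarts
        self.restarts = 0
        self.process = None

    def _close_pipes(self) -> None:
        if self.process is not None:
            for pipe in (self.process.stdin, self.process.stdout):
                if pipe is not None and not pipe.closed:
                    pipe.close()

    def start(self) -> None:
        self._close_pipes()  # release pipes of any previous child
        self.process = subprocess.Popen(
            self.command, stdin=subprocess.PIPE, stdout=subprocess.PIPE
        )

    def ensure_running(self) -> bool:
        """Return True if a child is running (restarting if needed), else False."""
        if self.process is None:
            self.start()
            return True
        if self.process.poll() is None:
            return True  # still alive
        if self.restarts >= self.max_restarts:
            return False  # restart budget spent; surface the failure
        self.restarts += 1
        self.start()
        return True

    def stop(self) -> None:
        if self.process is not None:
            if self.process.poll() is None:
                self.process.terminate()
                self.process.wait(timeout=5)
            self._close_pipes()
```

A gateway would call `ensure_running()` before forwarding each request, or on a timer. The restart budget keeps a crash-looping server from being relaunched forever.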

Performance and Scalability

Introducing a gateway naturally adds some round-trip latency, but in most AI workflows this overhead is dwarfed by the time spent in I/O-bound operations like database queries or external API calls. Envoy-based gateways and managed API management solutions can handle thousands of concurrent connections, including persistent streaming sessions, making them suitable for high-throughput environments where many agents and users interact simultaneously. Simpler proxies typically suffice for smaller workloads or development environments; however, it is advisable to conduct load testing against your expected peak traffic patterns to uncover any bottlenecks before going live.
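A load test does not need heavy tooling to be useful. The following sketch uses `asyncio` to issue concurrent calls and summarize their latencies. `fake_tool_call` is a stand-in that only sleeps, and you would replace it with a real request to your gateway. All names and default values here are assumptions.

```python
import asyncio
import statistics
import time


async def fake_tool_call(delay_s: float) -> None:
    """Placeholder for a real gateway request; simulates I/O with a sleep."""
    await asyncio.sleep(delay_s)


async def run_load(n_requests: int, concurrency: int, delay_s: float = 0.01) -> list[float]:
    """Issue `n_requests` calls with at most `concurrency` in flight; return latencies."""
    semaphore = asyncio.Semaphore(concurrency)

    async def one_call() -> float:
        async with semaphore:
            start = time.perf_counter()
            await fake_tool_call(delay_s)
            return time.perf_counter() - start

    return await asyncio.gather(*(one_call() for _ in range(n_requests)))


def summarize(latencies: list[float]) -> dict:
    """Report count, median, an approximate 95th percentile, and the maximum."""
    ordered = sorted(latencies)
    p95_index = min(len(ordered) - 1, int(0.95 * len(ordered)))
    return {
        "count": len(ordered),
        "median_s": statistics.median(ordered),
        "p95_s": ordered[p95_index],
        "max_s": ordered[-1],
    }
```

Running `summarize(asyncio.run(run_load(1000, 50)))` at increasing concurrency levels and watching where the 95th percentile starts to climb is one simple way to locate a bottleneck.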

Advanced Deployment Scenarios

In edge-to-cloud architectures, MCP gateways enable resource-constrained devices to expose local sensors and actuators as MCP tools while allowing central AI orchestrators to request insights or issue commands over secure tunnels. In federated learning setups, gateways can federate requests among multiple on-premise MCP servers, each maintaining its own dataset, so that a central coordinator can aggregate model updates or query statistics without moving raw data. Even multi-agent systems can benefit when each specialized agent publishes its capabilities via MCP and a gateway mediates handoffs between them, creating complex, collaborative AI workflows across organizational or geographic boundaries.
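The federated case can be illustrated with a simple statistic. Each site returns only a count and a sum, and the coordinator combines them into a global mean without ever seeing the underlying rows. The names `SiteSummary`, `local_summary`, and `federated_mean` are hypothetical. In practice each summary would arrive as the result of a tool call routed through the gateway.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteSummary:
    """Aggregate that a site is willing to share; contains no raw records."""
    count: int
    total: float


def local_summary(values: list[float]) -> SiteSummary:
    """Runs on premises, next to the data."""
    return SiteSummary(count=len(values), total=sum(values))


def federated_mean(summaries: list[SiteSummary]) -> float:
    """Runs at the coordinator, using only the per-site aggregates."""
    n = sum(s.count for s in summaries)
    if n == 0:
        raise ValueError("no data reported by any site")
    return sum(s.total for s in summaries) / n
```

Weighting by count matters here: averaging the per-site means directly would give a small site the same influence as a large one.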

How to Select the Right Gateway

Choosing an MCP gateway hinges on aligning it with your existing infrastructure and priorities. Teams already invested in Kubernetes and service meshes will find Envoy-based solutions like Solo.io’s quickest to integrate. API-first organizations, by contrast, may prefer Azure API Management or Apigee to leverage familiar policy frameworks. When handling sensitive information, favor gateways with built-in sanitization, policy enforcement, and audit integration, whether Lasso’s open-source offering or a commercial platform with SLAs. Lightweight proxies provide the simplest on-ramp for experimental projects or tightly scoped proofs of concept. Regardless of choice, adopting an incremental approach, starting small and evolving toward more robust platforms as requirements mature, will mitigate risk and ensure a smoother transition from prototype to production.

In conclusion, as AI models transition from isolated research tools to mission-critical components in enterprise systems, MCP gateways are the linchpins that make these integrations practical, secure, and scalable. Gateways centralize connectivity, policy enforcement, and observability, transforming MCP’s promise into a robust foundation for next-generation AI architectures, whether deployed in the cloud, on the edge, or across federated environments.

Sources

- https://arxiv.org/abs/2504.19997

- https://arxiv.org/abs/2503.23278

- https://arxiv.org/abs/2504.08623

- https://arxiv.org/abs/2504.21030

- https://arxiv.org/abs/2505.03864

- https://arxiv.org/abs/2504.03767

The post From Protocol to Production: How Model Context Protocol (MCP) Gateways Enable Secure, Scalable, and Seamless AI Integrations Across Enterprises appeared first on MarkTechPost.
