Breaking the Bottleneck: Overcoming Scalability Hurdles in LLM Training and Inference

As large language models (LLMs) grow in complexity, the infrastructure supporting their training and inference struggles to keep pace. This article explores the critical challenges of network bottlenecks, resource allocation inefficiencies, and fault recovery in distributed environments—and how cutting-edge strategies are paving the way for scalable, efficient AI systems.

The rapid evolution of LLMs such as GPT-4, PaLM, and LLaMA has pushed the boundaries of artificial intelligence, enabling breakthroughs in natural language understanding, code generation, and creative applications. However, the infrastructure required to train and deploy these models at scale faces monumental challenges. As models balloon to hundreds of billions of parameters and datasets expand into terabytes, distributed computing environments must grapple with three critical bottlenecks: network communication, resource allocation, and fault recovery. These challenges directly affect the scalability, cost, and reliability of LLM workflows and demand new solutions if progress in AI is to continue.

The Network Communication Quandary

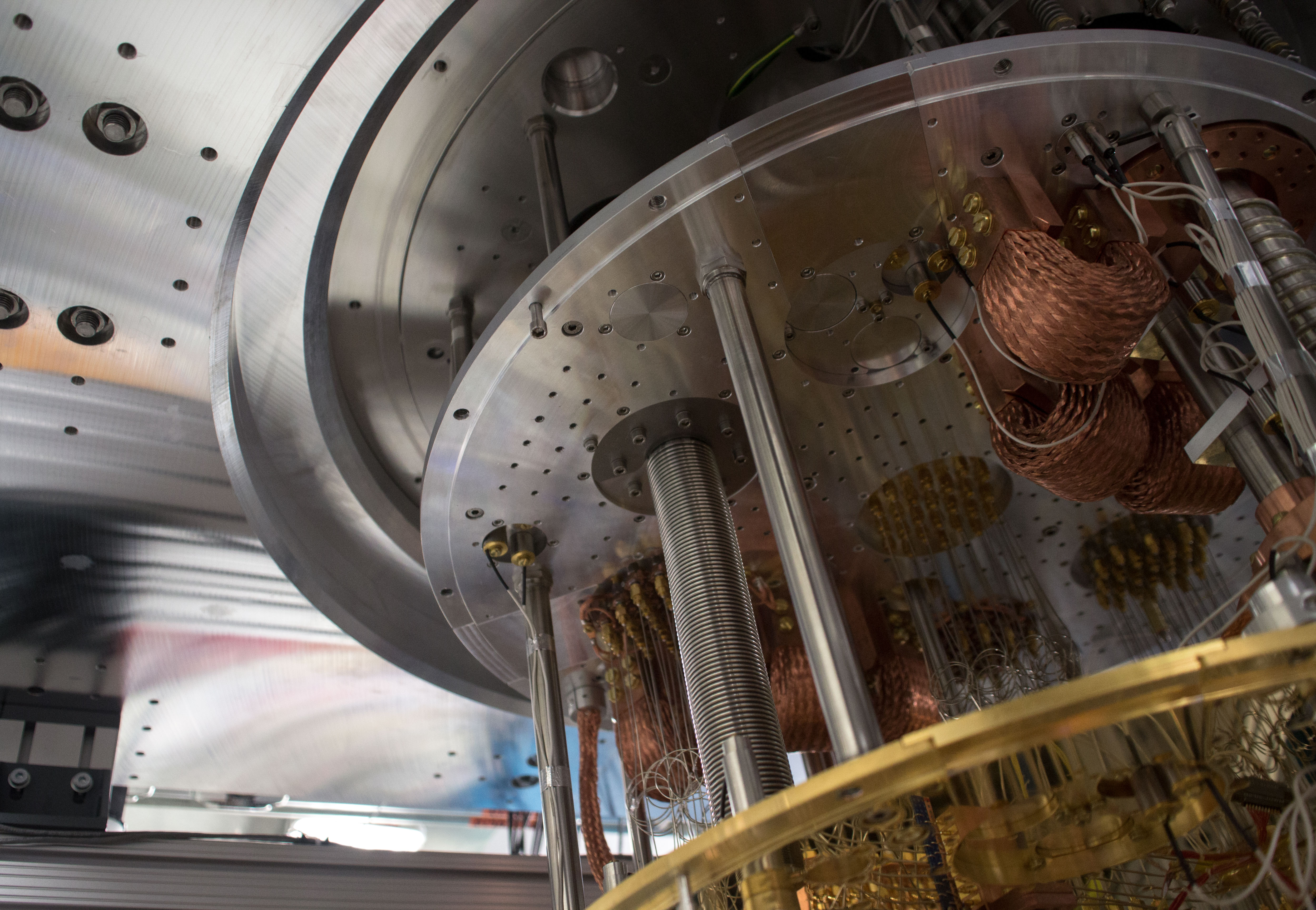

In distributed training, LLMs are partitioned across thousands of GPUs or TPUs, which must constantly synchronize gradients and parameters. Network latency and bandwidth limits often become the primary bottleneck, especially when nodes span multiple data centers. For example, a 175B-parameter model like GPT-3 has roughly 350 GB of gradients in 16-bit precision, and under data parallelism those gradients must be synchronized after every optimizer step; over a full training run, this adds up to petabytes of network traffic. General-purpose transport stacks such as TCP/IP add protocol overhead, and inefficient parallelism strategies (e.g., naive data parallelism) make congestion worse.
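To see where that traffic comes from, here is a back-of-the-envelope sketch. It assumes 16-bit (2-byte) gradients, pure data parallelism over a full model replica, and the standard ring all-reduce pattern, in which each GPU sends about 2(N-1)/N times the gradient size per synchronization. Real 175B-parameter runs also shard the model, so each all-reduce covers only a slice of the gradients; the function names are illustrative and not taken from any library.

```python
def gradient_bytes(num_params: int, bytes_per_value: int = 2) -> int:
    """Size of one full gradient; defaults to 16-bit (2-byte) values."""
    return num_params * bytes_per_value


def ring_allreduce_bytes_per_gpu(payload_bytes: float, world_size: int) -> float:
    """Bytes each GPU sends during one ring all-reduce of `payload_bytes`.

    A ring all-reduce is a reduce-scatter followed by an all-gather. Each phase
    takes world_size - 1 steps, and in every step each GPU sends one chunk of
    payload_bytes / world_size, for 2 * (N - 1) / N * payload_bytes in total.
    """
    if world_size < 2:
        return 0.0  # a single device has nothing to synchronize
    return 2 * (world_size - 1) / world_size * payload_bytes


if __name__ == "__main__":
    grad = gradient_bytes(175_000_000_000)  # GPT-3-sized model, 16-bit gradients
    print(f"gradient size: {grad / 1e9:.0f} GB")  # gradient size: 350 GB
    per_gpu = ring_allreduce_bytes_per_gpu(grad, world_size=1024)
    print(f"sent per GPU per step: {per_gpu / 1e9:.1f} GB")
```

Under these assumptions each GPU sends close to twice the gradient size on every step, so multiplying by thousands of optimizer steps quickly reaches petabytes in aggregate, even after model sharding shrinks each individual all-reduce.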

To mitigate this, researchers and engineers combine several forms of parallelism. Pipeline parallelism splits the model by layers into sequential stages on different devices, so only the activations at stage boundaries cross the network, while tensor parallelism splits the computation inside individual layers (for example, the columns of a weight matrix) across devices, typically within a single high-bandwidth node. Combined with optimized collective-communication libraries such as NVIDIA's NCCL or Facebook's Gloo, these methods reduce how much data crosses the slowest links and make the remaining transfers more efficient. Emerging technologies such as in-network computation (e.g., programmable switches that aggregate gradients) and optical circuit switches promise further latency reductions. For inference, edge computing and model sharding distribute workloads, keeping response latency low even for geographically dispersed users.
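The two ways of cutting up a model can be illustrated with a toy, pure-Python sketch. It assumes a single dense layer stored as a list-of-lists weight matrix and simulates "devices" as list entries; concatenating the partial outputs stands in for the collective (such as an all-gather) that a real tensor-parallel implementation would run over the interconnect. All helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix multiply, standing in for one layer's GEMM."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def split_columns(w: Matrix, parts: int) -> List[Matrix]:
    """Split weight matrix `w` column-wise into `parts` equal shards."""
    n_cols = len(w[0])
    if n_cols % parts != 0:
        raise ValueError("columns must divide evenly across devices")
    width = n_cols // parts
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(parts)]


def column_parallel_matmul(x: Matrix, w: Matrix, parts: int) -> Matrix:
    """Tensor parallelism: each simulated device multiplies x by its column shard.

    Concatenating the partial outputs row by row stands in for the all-gather
    a real implementation would run over the interconnect.
    """
    partials = [matmul(x, shard) for shard in split_columns(w, parts)]
    return [[v for p in partials for v in p[r]] for r in range(len(x))]


def pipeline_stages(num_layers: int, num_stages: int) -> List[range]:
    """Pipeline parallelism: give each stage a contiguous block of layers.

    Only activations at the boundary between consecutive stages cross devices.
    """
    base, extra = divmod(num_layers, num_stages)
    stages, start = [], 0
    for s in range(num_stages):
        size = base + (1 if s < extra else 0)  # spread leftover layers over early stages
        stages.append(range(start, start + size))
        start += size
    return stages


if __name__ == "__main__":
    x = [[1.0, 2.0]]
    w = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 3.0]]
    assert column_parallel_matmul(x, w, parts=2) == matmul(x, w)  # same result as unsplit
    print([list(r) for r in pipeline_stages(10, 4)])  # [[0, 1, 2], [3, 4, 5], [6, 7], [8, 9]]
```

The column split gives the same output as the unsplit layer but needs a collective inside every layer, which is why tensor parallelism favors fast intra-node links, whereas pipeline stages only exchange activations at a few boundaries.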

Resource Allocation: Balancing Efficiency and Flexibility

Training LLMs demands vast computational resources, often running for weeks or months on thousands of accelerators. Static resource allocation, common in conventional clusters, leads to underutilization, as CPUs, memory, and accelerators sit idle during I/O-bound phases such as data loading. Dynamic workloads, such as hyperparameter tuning or multi-task inference, compound this inefficiency.

Modern orchestration frameworks, such as Kubernetes with GPU-aware scheduling, and HPC workload managers such as Slurm allocate resources more flexibly. Gang scheduling launches all workers of a distributed job together or not at all, so a partially scheduled job never holds GPUs idle while waiting for its remaining workers. Cloud providers also offer spot instances for cost-effective scaling, though using them requires robust checkpointing to survive preemptions. For inference, autoscaling adjusts the number of serving replicas to query volume, while mixed-precision serving raises the throughput of each replica. Additionally, multi-tenant clusters with fairness policies (e.g., Dominant Resource Fairness, which equalizes each tenant's share of the resource it uses most) let organizations share infrastructure while keeping contention in check.
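Two of these scheduling ideas can be sketched compactly: all-or-nothing gang placement, and Dominant Resource Fairness (DRF) computed by progressive filling, where the tenant with the smallest dominant share launches its next task first. This is a simplified illustration, not how Kubernetes or Slurm implement either policy; node names, resource names, and function names are hypothetical, and every tenant is assumed to request a positive amount of at least one resource.

```python
from typing import Dict, Optional


def gang_schedule(free_gpus: Dict[str, int], workers: int,
                  gpus_per_worker: int) -> Optional[Dict[str, int]]:
    """All-or-nothing placement of a distributed job.

    Returns {node: number_of_workers} if every worker fits, otherwise None,
    so no GPUs are held by a partial job waiting for its missing workers.
    """
    placement: Dict[str, int] = {}
    remaining = workers
    # Fill nodes with the most free GPUs first to keep workers packed together.
    for node, free in sorted(free_gpus.items(), key=lambda kv: -kv[1]):
        if remaining == 0:
            break
        fit = min(free // gpus_per_worker, remaining)
        if fit > 0:
            placement[node] = fit
            remaining -= fit
    return placement if remaining == 0 else None


def drf_allocate(capacity: Dict[str, float],
                 demands: Dict[str, Dict[str, float]]) -> Dict[str, int]:
    """Dominant Resource Fairness by progressive filling.

    Repeatedly launches one task for the tenant with the smallest dominant share
    (its largest fractional use of any single resource) until no tenant's next
    task fits. Assumes each tenant's per-task demand is positive for some resource.
    """
    used = {r: 0.0 for r in capacity}
    consumed = {u: {r: 0.0 for r in capacity} for u in demands}
    tasks = {u: 0 for u in demands}
    active = set(demands)

    def dominant_share(user: str) -> float:
        return max(consumed[user][r] / capacity[r] for r in capacity)

    while active:
        user = min(active, key=lambda u: (dominant_share(u), u))  # ties broken by name
        need = demands[user]
        if all(used[r] + need.get(r, 0.0) <= capacity[r] for r in capacity):
            for r in capacity:
                used[r] += need.get(r, 0.0)
                consumed[user][r] += need.get(r, 0.0)
            tasks[user] += 1
        else:
            active.discard(user)  # this tenant's next task no longer fits
    return tasks


if __name__ == "__main__":
    print(gang_schedule({"node-a": 8, "node-b": 4}, workers=3, gpus_per_worker=4))
    # Two tenants on 9 CPUs and 18 GB: A needs <1 CPU, 4 GB>, B needs <3 CPUs, 1 GB>.
    print(drf_allocate({"cpu": 9, "mem_gb": 18},
                       {"A": {"cpu": 1, "mem_gb": 4}, "B": {"cpu": 3, "mem_gb": 1}}))
```

In the two-tenant example, A ends up with 3 tasks and B with 2: A is memory-heavy and B is CPU-heavy, and DRF equalizes their dominant shares (two thirds each) rather than giving them equal counts of any one resource.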

Fault Recovery: Ensuring Continuity in a Sea of Failures

In large-scale distributed systems, hardware failures, network outages, and software bugs are inevitable. A single node failure in a 10,000-GPU cluster can derail weeks of training progress if not handled gracefully. Traditional checkpointing, which periodically saves the full model and optimizer state, introduces significant overhead, especially for trillion-parameter models, where a single blocking save of terabytes of state can stall training for minutes to hours depending on storage bandwidth.
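A rough estimate shows why blocking saves hurt at this scale. The sketch assumes 12 bytes of checkpointed state per parameter (fp32 master weights plus Adam's two fp32 moment estimates) and a fixed aggregate write bandwidth; both figures are illustrative assumptions to be replaced with your own.

```python
def checkpoint_bytes(num_params: float, bytes_per_param: float = 12.0) -> float:
    """Checkpoint size in bytes.

    Assumed default: 4 bytes of fp32 weights + 4 + 4 bytes for Adam's first and
    second moments. Other optimizers or precisions need a different value.
    """
    return num_params * bytes_per_param


def blocking_save_seconds(size_bytes: float, write_bytes_per_s: float) -> float:
    """Wall-clock time training stalls if the save blocks until fully written."""
    return size_bytes / write_bytes_per_s


def blocking_overhead(save_s: float, interval_s: float) -> float:
    """Fraction of wall-clock time spent saving when training pauses for each save."""
    return save_s / (interval_s + save_s)


if __name__ == "__main__":
    size = checkpoint_bytes(1e12)  # trillion-parameter model
    print(f"checkpoint: {size / 1e12:.0f} TB")  # checkpoint: 12 TB
    for bw in (10e9, 1e9):  # assumed aggregate write bandwidths, bytes/s
        secs = blocking_save_seconds(size, bw)
        print(f"{bw / 1e9:.0f} GB/s -> {secs / 60:.0f} min per save")
```

At an assumed 10 GB/s, a 12 TB save takes 20 minutes; at 1 GB/s it exceeds three hours. Saving every three hours with a 20-minute blocking save already spends 10% of wall-clock time on checkpointing, which is what asynchronous and sharded approaches try to take off the critical path.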

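Advanced fault-tolerance strategies combine asynchronous checkpointing, which writes state in the background while training continues, with partial recovery. Instead of one process saving the entire model, systems built on DeepSpeed's ZeRO family (including ZeRO-Infinity) partition parameters, gradients, and optimizer states across ranks, so each rank writes only its own shard and checkpoint I/O is spread across the cluster. Erasure coding provides redundancy for checkpoint data without storing full copies of the model, while replicated computation can mask failures at the cost of extra compute. For real-time inference, redundant replicas and circuit breakers isolate failures and keep the service available. Meanwhile, frameworks such as Ray (through task retries and actor restarts) and Spark's barrier execution mode (which relaunches every task in a stage together when one fails) let jobs resume from a consistent state instead of starting over.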
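A minimal sketch of asynchronous, sharded checkpointing follows. It is not DeepSpeed's API: `AsyncShardCheckpointer`, `load_step`, the file-naming scheme, and the use of `pickle` are hypothetical stand-ins, and the in-memory `deepcopy` plays the role of the device-to-host copy a real system would make before handing the write to a background thread. Each simulated rank writes only the shard it owns, so no single process serializes the whole model.

```python
import copy
import os
import pickle
import tempfile
import threading
from typing import Dict, List, Optional

Shard = Dict[str, List[float]]  # parameter name -> flattened values owned by one rank


class AsyncShardCheckpointer:
    """Hypothetical per-rank checkpointer: snapshot this rank's shard, then
    write it on a background thread so the training loop is not blocked."""

    def __init__(self, directory: str, rank: int) -> None:
        self.directory = directory
        self.rank = rank
        self._writer: Optional[threading.Thread] = None

    def save(self, step: int, shard: Shard) -> None:
        self.wait()  # keep at most one in-flight write per rank
        # Synchronous in-memory snapshot; stands in for a device-to-host copy.
        snapshot = copy.deepcopy(shard)
        path = os.path.join(self.directory, f"step{step}_rank{self.rank}.pkl")
        self._writer = threading.Thread(target=self._write, args=(path, snapshot))
        self._writer.start()  # returns immediately; disk I/O happens in the background

    @staticmethod
    def _write(path: str, snapshot: Shard) -> None:
        tmp = path + ".tmp"
        with open(tmp, "wb") as f:
            pickle.dump(snapshot, f)
        os.replace(tmp, path)  # rename so readers never see a half-written shard

    def wait(self) -> None:
        """Block until the pending write (if any) has finished."""
        if self._writer is not None:
            self._writer.join()
            self._writer = None


def load_step(directory: str, step: int, world_size: int) -> Shard:
    """Recovery: merge every rank's shard for `step` back into one state dict."""
    merged: Shard = {}
    for rank in range(world_size):
        path = os.path.join(directory, f"step{step}_rank{rank}.pkl")
        with open(path, "rb") as f:
            merged.update(pickle.load(f))
    return merged


def demo() -> Shard:
    """Two simulated ranks save step 100, then keep training (mutating weights)."""
    with tempfile.TemporaryDirectory() as d:
        shards = [{"layer0.weight": [1.0, 2.0]}, {"layer1.weight": [3.0]}]
        ckpts = [AsyncShardCheckpointer(d, rank) for rank in range(len(shards))]
        for ckpt, shard in zip(ckpts, shards):
            ckpt.save(step=100, shard=shard)
        shards[0]["layer0.weight"][0] = 99.0  # the next update must not corrupt the save
        for ckpt in ckpts:
            ckpt.wait()
        return load_step(d, step=100, world_size=len(shards))


if __name__ == "__main__":
    print(demo())
```

Because the snapshot is taken synchronously before the background write starts, the update made after `save` returns does not leak into the checkpoint, and `demo()` returns `{'layer0.weight': [1.0, 2.0], 'layer1.weight': [3.0]}`. A production system would also need to coordinate ranks so that recovery only uses a step for which every shard was written.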

The Path Forward

Addressing these challenges requires a holistic approach. Hardware advances such as faster GPUs and exascale-class systems, optical interconnects, and near-memory processing should ease physical bottlenecks. On the software side, compiler-based optimizations (e.g., TorchDynamo, the graph-capture frontend behind PyTorch's torch.compile) and domain-specific languages (DSLs) for distributed computing are streamlining workflows. Crucially, collaboration across academia and industry is accelerating the adoption of open-source tools like NVIDIA's Megatron-LM and Hugging Face's Accelerate, broadening access to scalable AI infrastructure.

As LLMs grow more sophisticated, the interplay between algorithmic advances and infrastructure resilience will define the next era of AI. By tackling network, resource, and fault tolerance challenges head-on, the community can unlock faster training cycles, greener computing, and real-time inference—ushering in a future where LLMs are both powerful and practical.

Key Takeaways:

- Hybrid Parallelism (pipeline, tensor, data) and optimized communication protocols are critical to overcoming network bottlenecks in distributed training.

- Dynamic Resource Allocation via GPU-aware schedulers and autoscaling improves hardware utilization while reducing costs.

- Asynchronous Checkpointing and partial recovery mechanisms provide fault tolerance without crippling overhead.

- Collaboration between open-source ecosystems and hardware innovators is essential to sustain LLM scalability.
