Why AI struggles with "no" and what that teaches us about ourselves


Over the last few months, I’ve been building some pretty layered automation using Zapier, Ghost, PDFMonkey, and Cloudinary, guided step by step by ChatGPT, and it’s been... eye-opening.

I think AI is the best assistant ever when exploring possibilities to solve a problem, but it occasionally fails in ways that feel surprisingly human. As a former teacher, I’ve come to appreciate two patterns—two things large language models consistently struggle with—that have deep roots in how people think and learn.

Let’s break them down.

1. Negation is hard for both humans and machines

One of the most common mistakes I’ve seen ChatGPT make is with “negative commands.”

For example, I once said:

“Don’t overwrite existing tags unless the user doesn’t have any.”

The result? The tag was overwritten, even when it shouldn’t have been.

Why? Because large language models don’t “understand” logic like a programmer. They predict the most likely sequence of words based on examples from their training data. When phrasing is complex or wrapped in multiple negations, models often pick up on the structure of the sentence without truly grasping the logic.

This is where it gets human.

Children, especially infants and toddlers, also struggle with negative commands. Telling a 2-year-old, “Don’t touch that,” often leads to… them touching it.

Why? Because understanding “don’t” requires holding two ideas in mind:

- What the action is (“touch that”)

- That it should not be done

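Developmental psychologists have studied this for decades. A classic source is:

Pea, R. D. (1980). “The Development of Negation and Its Role in the Construction of the Self.” Cognitive Science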

Even adults process negative statements more slowly and with more errors than positive ones.

This parallels how LLMs “misread” complex negation—they’re great with surface forms, but logic wrapped in linguistic twists? That’s still a blind spot.
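One way around that blind spot is to restate the rule positively before handing it to the model (or to code). The tag instruction above, "Don't overwrite existing tags unless the user doesn't have any," boils down to "set the tags only when the user has none." Here is a minimal Python sketch of that restated rule; the function name `resolve_tags` and its arguments are made up for illustration and are not part of my actual Zapier setup.

```python
def resolve_tags(existing_tags, new_tags):
    """Return the tags a user should end up with.

    Positive restatement of the rule:
    "Set the tags only when the user has none."
    """
    if existing_tags:
        # The user already has at least one tag: keep what is there.
        return list(existing_tags)
    # The user has no tags (empty list or None): apply the new ones.
    return list(new_tags)


print(resolve_tags(["vip"], ["newsletter"]))  # ['vip']
print(resolve_tags([], ["newsletter"]))       # ['newsletter']
```

Written this way, there is one condition and no double negative, which is also the easier version to hand to a person.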

2. Memory gets fuzzy when things get long

Here’s another pattern: long conversations with ChatGPT often lead to inconsistent behavior.

You’ll say something, set up a rule, get it working, and 20 minutes later, the AI starts forgetting the rule or contradicting something it already confirmed.

Why?

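Large language models have a limited “context window.” Recent GPT-4 models can look at a lot of text at once (up to about 128k tokens, which is fewer words than that, since a token is often only part of a word), but the longer the conversation gets, the less reliably the model uses what was said early on, and once the window fills up, the oldest messages may drop out entirely. It’s like trying to summarize 40 pages of notes and then recall just one detail from page 4: you might miss it.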

OpenAI describes this in its technical report on GPT-4: memory is not long-term; it's a temporary window.

Again, this echoes human learning.

When we overload working memory, especially without structured reinforcement, information decays. Educational research shows that our brains retain new concepts best through spaced repetition, simplified input, and direct reinforcement.

Long chats with no clear breaks? That’s like reading 12 chapters of a textbook in one night and hoping it all sticks.
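If you are sending messages to a model from your own script, the same idea can be sketched in code: always restate the key rules first, then include only as many recent messages as fit a token budget. This is a rough illustration, not how ChatGPT itself manages context. The names `estimate_tokens` and `build_prompt` are hypothetical, and the "about 4 characters per token" figure is a crude rule of thumb, not a real tokenizer.

```python
def estimate_tokens(text):
    """Very rough token estimate (about 4 characters per token).

    This is an assumption for illustration, not a real tokenizer.
    """
    return max(1, len(text) // 4)


def build_prompt(rules, history, budget_tokens):
    """Return the rules followed by the newest messages that fit the budget."""
    remaining = budget_tokens - estimate_tokens(rules)
    kept = []
    # Walk backwards so the most recent messages are considered first.
    for message in reversed(history):
        cost = estimate_tokens(message)
        if cost > remaining:
            break
        kept.append(message)
        remaining -= cost
    # Restore chronological order and put the rules at the top.
    return [rules] + list(reversed(kept))
```

The point of the design is that the rules never compete with old chatter for space: they are restated every time, which is the machine version of spaced reinforcement.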

3. “Structured memory” sounds great — but here’s the real story

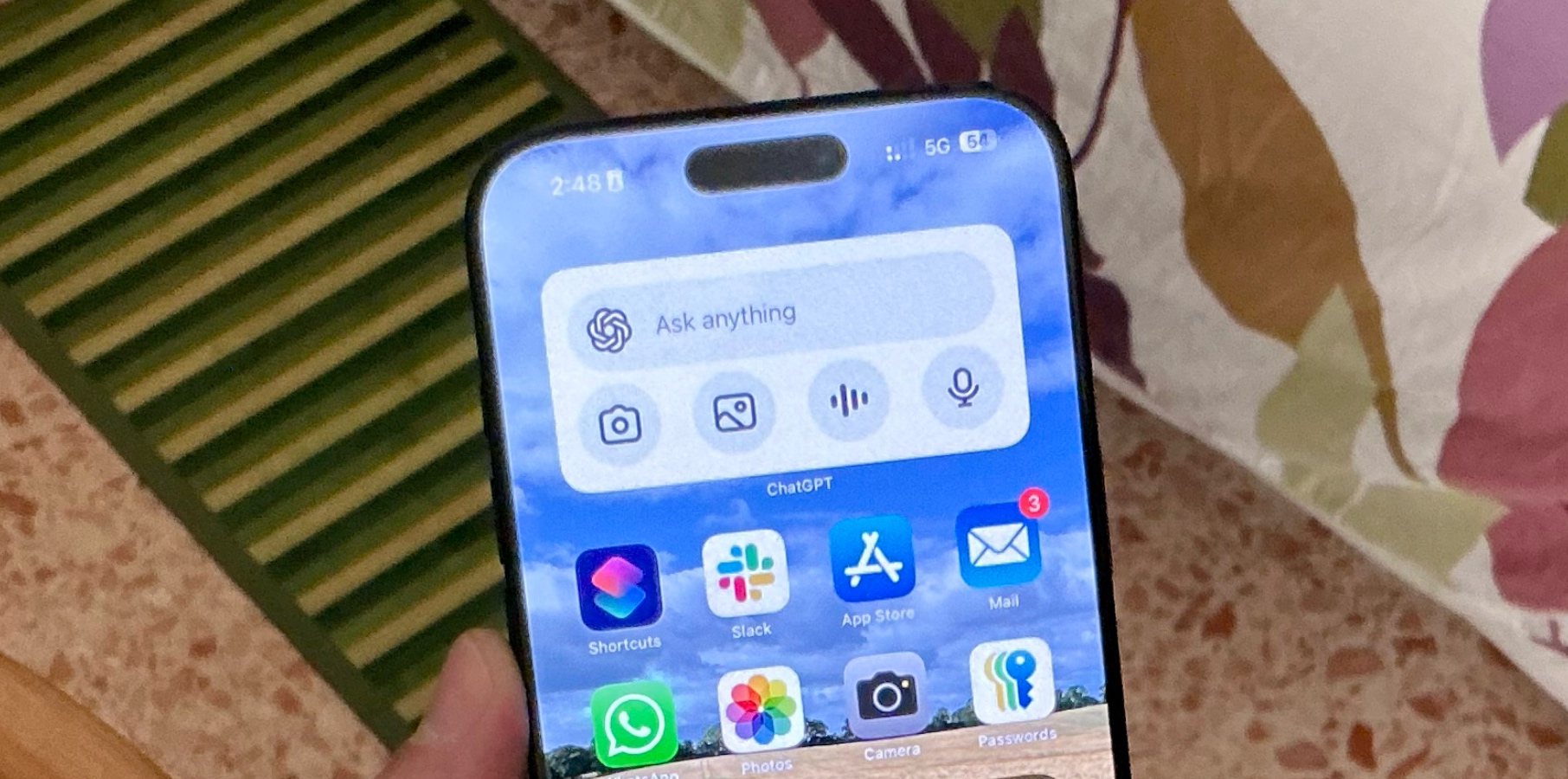

One thing I wanted to figure out was how to help ChatGPT remember key info across different workflows. At first, I assumed the new Projects feature meant each workspace had its own memory. Not quite.

Here’s how it really works:

- ChatGPT’s memory is global, not project-specific. If memory is on, it might remember something you told it (like your name or that you're working on a Ghost theme), but it doesn’t organize those memories by project.

- The Projects feature is amazing for keeping chats and uploads organized, but memory isn’t isolated to one project versus another.

- If you go to Settings → Personalization → Manage Memory, you can see what it remembers and delete specific entries, but it’s still one big pool of memory.

So, how do you carry memory across projects?

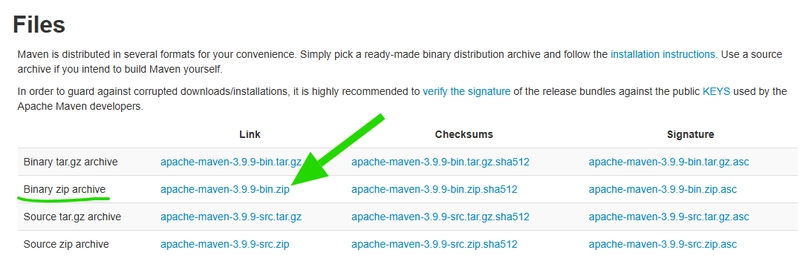

There’s no native way to export memory and plug it into a new project. But here's what I’ve been doing that actually works:

- Export your chat history: Go to Settings → Data Controls → Export Data and you’ll get a ZIP with all your chats.

- Save useful logic and notes from past chats into a document.

- When starting a new project, upload that document or paste in key info. ChatGPT will use it during that conversation—even if it doesn’t "remember" it forever.

It’s not real memory, but it’s a repeatable way to simulate it.
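To find the chats worth saving, it can help to skim the export programmatically. The sketch below lists conversation titles from the exported ZIP. It assumes the archive contains a `conversations.json` file holding a list of conversations, each with a `title` field; that layout is an assumption on my part and may differ or change, so treat this as a starting point. The function name `list_conversation_titles` is made up for illustration.

```python
import json
import zipfile


def list_conversation_titles(zip_source):
    """Return conversation titles found in a ChatGPT data export ZIP.

    Assumes (not guaranteed) that the archive contains "conversations.json"
    holding a JSON list of objects, each with an optional "title" field.
    """
    with zipfile.ZipFile(zip_source) as archive:
        if "conversations.json" not in archive.namelist():
            # Layout differs from the assumption: report nothing found.
            return []
        conversations = json.loads(archive.read("conversations.json"))
    return [c.get("title") or "(untitled)" for c in conversations]
```

Calling `list_conversation_titles("export.zip")` (with your own file path) gives a quick index of what to copy into the notes document you upload to a new project.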

What it teaches us

This is what I love most: these quirks in AI aren’t just bugs—they’re mirrors.

- When LLMs trip over negation, they reveal how language is more than structure: it’s logic in disguise, and logic is never as simple as it looks.

- When their memory fades, they remind us that attention, reinforcement, and structure matter, not just for machines but also for our own learning.

- When we build systems that help them remember better, we’re also uncovering what we need to organize complexity in our own minds.

What I’ve learned

Here’s what’s helped me when working with AI (and people, frankly, haha).
