Hire Data Scientists to Boost DevOps Performance & Predict Failures

Discover practical strategies for integrating data science expertise into your DevOps workflow to reduce downtime and optimize system reliability.

Modern DevOps teams generate massive amounts of operational data daily, from server logs to deployment metrics. However, most organizations struggle to extract meaningful insights from this information flood. Smart companies now hire data scientists specifically to transform raw DevOps data into actionable intelligence that prevents outages and optimizes performance.

The integration of data science into DevOps represents a fundamental shift from reactive troubleshooting to proactive system management. Teams that successfully combine these disciplines achieve 60% fewer critical incidents and 40% faster resolution times compared to traditional approaches.

This transformation isn't just about adding more tools to your stack. It requires dedicated professionals who understand both statistical analysis and operational challenges. When organizations hire data scientists with DevOps domain expertise, they create a competitive advantage that translates directly to improved system reliability and reduced operational costs.

Traditional DevOps Limitations

Legacy monitoring approaches rely heavily on threshold-based alerting systems that generate significant noise while missing subtle patterns indicating emerging problems. These reactive methods often result in firefighting scenarios where teams scramble to address issues after they've already impacted users.

Predictive Analytics Transforming Incident Management

Predictive modeling has revolutionized how teams approach system reliability. Instead of waiting for alerts to trigger, data scientists analyze historical patterns to identify conditions that precede failures. This proactive approach enables teams to address potential issues before they escalate into customer-facing problems.

Machine learning algorithms excel at detecting anomalies in complex, multi-dimensional data sets that human operators would miss. When companies hire data scientists for DevOps roles, they gain access to sophisticated pattern recognition capabilities that can process thousands of metrics simultaneously.

Real-world implementations show impressive results. Netflix's chaos engineering approach, supported by data science insights, has reportedly reduced its incident response time by 75% while maintaining 99.9% uptime across its global platform.

Time Series Analysis for System Health

Time series forecasting models analyze metric trends over various time horizons, from minutes to months. These models identify seasonal patterns, drift, and anomalies that indicate developing problems before they manifest as outages.
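As a rough illustration of the anomaly side of this idea, the sketch below flags points that sit far from the mean of a trailing window. It is a minimal rolling z-score, not a full forecasting model; the function name, the window size, the threshold, and the sample latency values are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical latency samples (ms) with one obvious spike.
latency_ms = [100, 102, 99, 101, 100, 103, 98, 250, 101, 100]
print(rolling_zscore_anomalies(latency_ms))
```

Note that a spike stays inside the trailing window for a few samples and inflates the standard deviation, so production systems usually exclude or down-weight flagged points when updating the baseline.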

Performance Optimization Through Statistical Analysis

Data scientists bring rigorous analytical methods to performance tuning that go far beyond basic monitoring dashboards. They use correlation analysis, regression modeling, and clustering techniques to identify root causes of performance degradation and optimize resource allocation.

Modern applications generate performance data from multiple layers: application code, databases, network infrastructure, and user interactions. When organizations hire data scientists to analyze this multifaceted data, they discover optimization opportunities that weren't apparent through traditional monitoring approaches.

Statistical analysis reveals hidden relationships between seemingly unrelated metrics. For example, data scientists might discover that increased memory usage in one service correlates with network latency spikes in a completely different component, leading to targeted optimizations that improve overall system performance.
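A simple way to surface such relationships is to compute pairwise Pearson correlations across metrics and keep only the strong ones. The sketch below assumes every metric is sampled at the same timestamps; the metric names, values, and the 0.8 cutoff are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / sqrt(vx * vy)


def strongest_pairs(metrics, min_abs_r=0.8):
    """Return (metric_a, metric_b, r) for pairs with |r| >= min_abs_r."""
    names = sorted(metrics)
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            r = pearson(metrics[a], metrics[b])
            if abs(r) >= min_abs_r:
                pairs.append((a, b, round(r, 3)))
    return sorted(pairs, key=lambda p: -abs(p[2]))


# Hypothetical samples taken at the same six timestamps.
metrics = {
    "cache_memory_mb": [510, 520, 640, 700, 530, 515],
    "gateway_latency_ms": [40, 42, 65, 80, 44, 41],
    "disk_iops": [300, 280, 310, 295, 305, 290],
}
pairs = strongest_pairs(metrics)
print(pairs)
```

A high coefficient only marks a pair as worth investigating; it does not show which metric drives the other.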

Resource Allocation Optimization

Data-driven capacity planning eliminates guesswork from infrastructure scaling decisions. Statistical models predict resource requirements based on usage patterns, seasonal trends, and business growth projections, ensuring optimal performance while controlling costs.

Intelligent Alerting Systems Reducing Noise

Alert fatigue represents one of the biggest challenges in modern DevOps environments. Traditional threshold-based systems generate thousands of notifications daily, many of which represent false positives or low-priority issues. Data scientists design intelligent alerting systems that prioritize notifications based on business impact and likelihood of escalation.

Machine learning algorithms learn from historical incident data to classify alerts by severity and urgency. These systems consider factors like time of day, current system load, recent deployment activity, and user impact when determining alert priority.
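The sketch below shows the shape of such a prioritizer using the factors listed above. It is a hand-weighted stand-in rather than a trained model: the `Alert` fields, the weights, and the 30-minute "recent deployment" window are all assumptions, and in practice the weights would be learned from historical incident data.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    name: str
    system_load: float             # 0.0 to 1.0
    minutes_since_deploy: float
    affected_user_fraction: float  # 0.0 to 1.0
    business_hours: bool


def priority_score(alert):
    """Combine alert context into a 0..1 priority (hypothetical weights)."""
    recent_deploy = 1.0 if alert.minutes_since_deploy <= 30 else 0.0
    score = (
        0.2 * alert.system_load
        + 0.3 * recent_deploy
        + 0.4 * alert.affected_user_fraction
        + 0.1 * (1.0 if alert.business_hours else 0.0)
    )
    return round(score, 3)


def triage(alerts):
    """Order alerts from highest to lowest priority."""
    return sorted(alerts, key=priority_score, reverse=True)


checkout = Alert("checkout-5xx", 0.9, 10, 0.5, True)
disk = Alert("disk-usage-warn", 0.3, 600, 0.01, False)
for alert in triage([disk, checkout]):
    print(alert.name, priority_score(alert))
```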

Teams that hire data scientists to redesign their alerting systems report an 80% reduction in alert volume while maintaining or improving their ability to detect critical issues. This dramatic noise reduction allows engineers to focus on genuine problems rather than constantly triaging false alarms.

Dynamic Threshold Adjustment

Adaptive thresholds automatically adjust based on historical patterns, seasonal variations, and current system state. This approach eliminates the manual threshold tuning that consumes significant engineering time in traditional monitoring setups.
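One minimal way to picture this is a separate upper limit for each hour of the day, derived from history as mean plus k standard deviations. The function names, the choice of k, and the sample values below are assumptions for illustration; real systems typically also account for weekday effects and trend.

```python
from collections import defaultdict
from statistics import mean, pstdev


def hourly_thresholds(samples, k=3.0):
    """samples: iterable of (hour_of_day, value).
    Returns {hour: mean + k * population stdev} for each hour seen."""
    by_hour = defaultdict(list)
    for hour, value in samples:
        by_hour[hour].append(value)
    return {h: mean(v) + k * pstdev(v) for h, v in by_hour.items()}


def breaches(hour, value, thresholds):
    """True if `value` exceeds the learned limit for that hour."""
    limit = thresholds.get(hour)
    return limit is not None and value > limit


# Hypothetical CPU% history: quiet at 03:00, busy at 14:00.
history = [(3, 20), (3, 22), (3, 21), (3, 19),
           (14, 80), (14, 85), (14, 78), (14, 82)]
thresholds = hourly_thresholds(history)

print(breaches(3, 60, thresholds))   # 60% CPU at 03:00
print(breaches(14, 60, thresholds))  # 60% CPU at 14:00
```

The same reading of 60% is unusual overnight and unremarkable in the afternoon, which a single static threshold cannot express.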

Deployment Risk Assessment and Automation

Data scientists analyze deployment patterns to predict the likelihood of failures before code reaches production. By examining factors like code complexity, test coverage, developer experience, and historical failure rates, they create risk scoring models that guide deployment decisions.

These predictive models integrate with CI/CD pipelines to automatically flag high-risk deployments for additional review or testing. Teams can configure automated rollback triggers based on real-time performance metrics and statistical confidence intervals.
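A risk gate of this kind can be sketched as a logistic score over deployment features followed by a threshold decision. Everything specific here is hypothetical: the coefficients are made up rather than fitted, and the feature names and the 0.3 / 0.7 cutoffs are assumptions. A real model would be trained on an organization's own deployment history.

```python
from math import exp


def deployment_risk(lines_changed, test_coverage, historical_failure_rate,
                    author_prior_deploys):
    """Return a 0..1 failure probability from a logistic model
    with illustrative, non-fitted coefficients."""
    z = (
        -1.0
        + 0.5 * (lines_changed / 100)
        - 3.0 * test_coverage
        + 4.0 * historical_failure_rate
        - 0.05 * min(author_prior_deploys, 20)
    )
    return 1.0 / (1.0 + exp(-z))


def gate(risk, review_at=0.3, block_at=0.7):
    """Map a risk score to a pipeline action."""
    if risk >= block_at:
        return "block"
    if risk >= review_at:
        return "manual-review"
    return "auto-deploy"


small_change = deployment_risk(50, 0.9, 0.02, 40)
large_change = deployment_risk(1200, 0.3, 0.25, 1)
print(round(small_change, 3), gate(small_change))
print(round(large_change, 3), gate(large_change))
```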

Organizations that hire data scientists for deployment optimization report 45% fewer production incidents and 30% faster feature delivery cycles. The combination of risk assessment and automated decision-making creates a more reliable and efficient deployment process.

Blue-Green Deployment Intelligence

Statistical analysis of traffic patterns and performance metrics during blue-green deployments enables intelligent traffic shifting strategies that minimize risk while maximizing deployment speed.
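As one possible reading of "intelligent traffic shifting", the sketch below compares error rates between the two environments with a two-proportion z-test and only increases the green share when green is not significantly worse. The function names, the step size, the one-sided 5% cutoff of 1.645, and the request counts are assumptions.

```python
from math import sqrt


def error_rate_z(blue_errors, blue_total, green_errors, green_total):
    """Two-proportion z statistic; positive means green has the higher error rate."""
    p_blue = blue_errors / blue_total
    p_green = green_errors / green_total
    pooled = (blue_errors + green_errors) / (blue_total + green_total)
    se = sqrt(pooled * (1 - pooled) * (1 / blue_total + 1 / green_total))
    if se == 0:
        return 0.0
    return (p_green - p_blue) / se


def next_green_weight(current, z, step=0.25, z_limit=1.645):
    """Roll back to 0.0 if green is significantly worse, else shift more traffic."""
    if z > z_limit:
        return 0.0
    return min(1.0, current + step)


z_ok = error_rate_z(50, 10_000, 6, 1_000)    # green 0.6% vs blue 0.5%
z_bad = error_rate_z(50, 10_000, 30, 1_000)  # green 3.0% vs blue 0.5%
print(next_green_weight(0.10, z_ok))
print(next_green_weight(0.10, z_bad))
```

With small green sample sizes the test has little power, so a minimum request count before each evaluation is a sensible extra guard.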

Infrastructure Cost Monitoring and Optimization

Cloud infrastructure costs can spiral out of control without proper analysis and optimization. Data scientists apply cost modeling techniques to identify opportunities for resource optimization without compromising performance or reliability.

By analyzing usage patterns, they identify over-provisioned resources, underutilized instances, and opportunities for reserved capacity purchases. These insights often result in 20-40% cost reductions while maintaining service level agreements.
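A first pass at finding over-provisioned resources can be as simple as checking whether an instance's 95th-percentile CPU utilization stays below a cutoff. The instance names, the samples, and the 30% cutoff below are hypothetical, and the percentile uses the nearest-rank method.

```python
from math import ceil


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def overprovisioned(cpu_by_instance, p95_below=30.0):
    """Return instance names whose p95 CPU% is under the cutoff."""
    return sorted(
        name
        for name, samples in cpu_by_instance.items()
        if samples and percentile(samples, 95) < p95_below
    )


# Hypothetical CPU utilization samples (%).
cpu = {
    "api-1": [55, 60, 72, 80, 65],
    "batch-7": [5, 8, 12, 9, 20],
    "cache-2": [10, 15, 25, 90, 12],
}
print(overprovisioned(cpu))
```

Using a high percentile rather than the average keeps bursty instances such as `cache-2` off the downsizing list.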

When companies hire data scientists focused on infrastructure optimization, they gain expertise in multi-objective optimization problems that balance cost, performance, and reliability constraints simultaneously.

Predictive Auto-Scaling Models

Advanced auto-scaling algorithms use machine learning to predict resource demands before they occur, enabling proactive scaling that maintains performance while minimizing costs.
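The core loop of predictive scaling is "forecast demand, then size capacity for the forecast". The sketch below substitutes a plain least-squares linear trend for a machine learning model to keep the example short; the per-replica capacity, the 20% headroom, and the request rates are assumptions.

```python
from math import ceil


def linear_forecast(values, steps_ahead=1):
    """Fit y = intercept + slope * x by least squares and extrapolate."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mx = (n - 1) / 2
    my = sum(values) / n
    sxx = sum((x - mx) ** 2 for x in range(n))
    slope = sum((x - mx) * (y - my) for x, y in enumerate(values)) / sxx
    intercept = my - slope * mx
    return intercept + slope * (n - 1 + steps_ahead)


def replicas_needed(forecast_rps, rps_per_replica, headroom=0.2, min_replicas=1):
    """Replica count that covers the forecast plus a safety margin."""
    return max(min_replicas, ceil(forecast_rps * (1 + headroom) / rps_per_replica))


# Hypothetical requests per second over the last five intervals.
recent_rps = [100, 120, 140, 160, 180]
forecast = linear_forecast(recent_rps, steps_ahead=3)
print(forecast, replicas_needed(forecast, rps_per_replica=50))
```

Scaling on the forecast rather than the current reading gives new capacity time to start before the load arrives.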

Security Incident Detection and Response

Security threats often manifest as subtle anomalies in system behavior that traditional rule-based detection systems miss. Data scientists develop behavioral analysis models that establish normal operation baselines and identify deviations that might indicate security incidents.

These models excel at detecting insider threats, advanced persistent threats, and zero-day exploits that evade signature-based detection systems. By analyzing patterns in network traffic, user behavior, and system access logs, they identify suspicious activities that warrant investigation.

Teams that integrate data science expertise into their security operations center (SOC) detect threats 65% faster and reduce false positive rates by 70% compared to traditional approaches.

Anomaly Detection in User Behavior

User and entity behavior analytics (UEBA) systems use machine learning to identify suspicious patterns in user activities, system access, and data movement that might indicate compromised accounts or malicious insiders.

Building Cross-Functional DevOps Data Teams

Successful integration of data science into DevOps requires careful team structure and collaboration models. The most effective approach involves embedding data scientists directly within DevOps teams rather than maintaining separate analytics groups.

This embedded model ensures that data scientists understand operational context and business requirements while DevOps engineers gain appreciation for statistical rigor and experimental design. Cross-training initiatives help team members develop hybrid skills that improve collaboration and communication.

Organizations that hire data scientists for integrated DevOps roles create a culture of data-driven decision making that extends throughout their engineering organization. This cultural shift often proves more valuable than any specific technical implementation.

Skill Development and Training Programs

Investing in cross-functional training programs helps DevOps engineers develop basic data analysis skills while teaching data scientists operational concepts and tools.

Implementation Roadmap and Best Practices

Starting a data-driven DevOps transformation requires careful planning and realistic expectations. Most successful implementations begin with pilot projects focused on specific use cases like incident prediction or performance optimization.

The key to success lies in selecting initial projects with clear success metrics and measurable business impact. Early wins build organizational support for expanded data science integration and justify additional investment in tools and talent.

When organizations hire data scientists for DevOps transformation, they should prioritize candidates with both statistical expertise and practical understanding of operational challenges. This combination of skills proves more valuable than deep specialization in either domain alone.

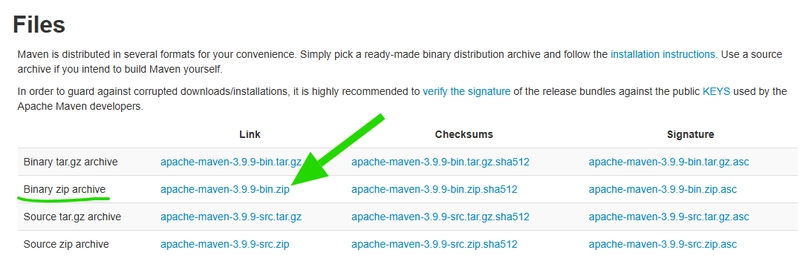

Tool Integration and Data Pipeline Architecture

Observability platforms like Datadog, New Relic, and Prometheus provide APIs that enable data scientists to access operational metrics for analysis. Establishing robust data pipelines ensures consistent, high-quality inputs for predictive models.
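As a small example of the ingestion step, the sketch below converts a Prometheus HTTP API range-query response (the `matrix` result type, where each sample is a `[timestamp, "value"]` pair) into numeric series ready for analysis. The function name and the sample payload are made up for illustration, and no network call is made.

```python
import json


def parse_matrix(payload):
    """Turn a Prometheus range-query JSON body into
    {sorted label tuple: [(timestamp, value), ...]}."""
    body = json.loads(payload)
    if body.get("status") != "success":
        raise ValueError("query did not succeed")
    data = body["data"]
    if data.get("resultType") != "matrix":
        raise ValueError("expected a matrix result")
    series = {}
    for item in data["result"]:
        labels = tuple(sorted(item["metric"].items()))
        # Sample values arrive as strings and must be converted.
        series[labels] = [(float(ts), float(v)) for ts, v in item["values"]]
    return series


# Hypothetical response body for illustration.
SAMPLE = """{"status": "success", "data": {"resultType": "matrix", "result": [
  {"metric": {"__name__": "http_requests_total", "job": "api"},
   "values": [[1700000000, "12"], [1700000060, "15.5"]]}]}}"""

parsed = parse_matrix(SAMPLE)
for labels, points in parsed.items():
    print(dict(labels), points)
```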

Measuring Success and ROI

Data-driven DevOps initiatives require clear metrics to demonstrate value and guide continuous improvement. Key performance indicators include mean time to detection (MTTD), mean time to resolution (MTTR), deployment frequency, and change failure rate.
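These indicators are straightforward to compute once incidents and deployments are recorded consistently. In the sketch below the record layout and timestamps are hypothetical, and MTTR is measured from detection to resolution, which is one common convention among several.

```python
from datetime import datetime


def _minutes(start, end):
    return (end - start).total_seconds() / 60


def mttd(incidents):
    """Mean minutes from incident start to detection."""
    return sum(_minutes(i["started"], i["detected"]) for i in incidents) / len(incidents)


def mttr(incidents):
    """Mean minutes from detection to resolution (assumed convention)."""
    return sum(_minutes(i["detected"], i["resolved"]) for i in incidents) / len(incidents)


def change_failure_rate(failed_deploys, total_deploys):
    """Share of deployments that caused a failure in production."""
    return failed_deploys / total_deploys


# Hypothetical incident log.
incidents = [
    {"started": datetime(2024, 1, 1, 10, 0),
     "detected": datetime(2024, 1, 1, 10, 5),
     "resolved": datetime(2024, 1, 1, 10, 45)},
    {"started": datetime(2024, 1, 2, 14, 0),
     "detected": datetime(2024, 1, 2, 14, 15),
     "resolved": datetime(2024, 1, 2, 14, 35)},
]
print(mttd(incidents), mttr(incidents), change_failure_rate(3, 40))
```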

Financial metrics like infrastructure cost savings, reduced downtime costs, and engineering productivity improvements provide compelling ROI justification. Organizations typically see positive returns within 6-12 months of implementing data-driven DevOps practices.

Regular assessment and model refinement ensure that predictive systems remain accurate as infrastructure and applications evolve. Teams that hire data scientists for ongoing optimization achieve better long-term results than those that treat the work as a one-time implementation project.

The future of DevOps lies in intelligent, self-healing systems that leverage data science to predict, prevent, and automatically resolve operational issues. Organizations that invest in this transformation today will gain significant competitive advantages in reliability, efficiency, and innovation speed.
