I’m excited to share my journey of fine-tuning TinyLlama/TinyLlama-1.1B-intermediate-step-1431k-3T, a compact 1.1 billion parameter language model, using Low-Rank Adaptation (LoRA) to classify emotions in tweets from the dair-ai/emotion dataset. LoRA makes fine-tuning efficient by training only a small set of added low-rank weights while the original parameters stay frozen, which is perfect for those of us working with limited hardware. You can dive into the full code and resources at my GitHub repo: mrzaizai2k/finetune-tinyllama-lora.

What’s Inside

- Why This Matters

- Getting Started

- Exploring the Data

- Prepping the Dataset

- Training with LoRA

- How It Performs

- What’s Next

Why This Matters

TinyLlama is a lean, mean machine from the Llama family: it reuses the Llama 2 architecture and tokenizer in a compact 1.1B-parameter package built for efficiency. Even at that size, fully fine-tuning it for emotion classification (identifying sadness, joy, love, anger, fear, or surprise in tweets) can be resource-intensive. That’s where LoRA, implemented via the PEFT library, saves the day. By freezing the original weights and training only small low-rank update matrices, I can fine-tune effectively even on modest hardware.
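To make "training only low-rank update matrices" concrete, here is a toy pure-Python version of the LoRA forward pass for a single linear layer: h = W x + (alpha / r) · B (A x), where W is frozen and only A (r × in) and B (out × r) are trained. This illustrates the math only; it is not PEFT's implementation, and matvec and lora_forward are hypothetical helpers. PEFT's default initialization sets B to zeros, so an adapted layer starts out identical to the frozen one.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product: one dot product per row of m."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float, r: int) -> Vector:
    """h = W x + (alpha / r) * B (A x).

    W (out x in) is the frozen pretrained weight.
    A (r x in) projects the input down to rank r; B (out x r) projects it back up.
    Only A and B would receive gradients during fine-tuning.
    """
    scale = alpha / r               # e.g. 16 / 8 = 2.0
    base = matvec(W, x)             # frozen path
    update = matvec(B, matvec(A, x))  # low-rank path
    return [b + scale * u for b, u in zip(base, update)]
```

With B all zeros, lora_forward returns exactly matvec(W, x), which is why training begins from the base model's behavior. The lora_dropout setting, which PEFT applies to the input of the low-rank branch during training, is left out here for clarity.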

Getting Started

To kick things off, I set up a Python environment using Conda and installed the necessary dependencies. Here’s how you can follow along:

```bash
git clone https://github.com/mrzaizai2k/finetune-tinyllama-lora.git
cd finetune-tinyllama-lora
conda create -n tinyllama python=3.10
conda activate tinyllama
pip install -r setup.txt
```

Exploring the Data

Before diving into training, I explored the dair-ai/emotion dataset using exploratory data analysis (EDA) in emotion_eda.ipynb. The dataset labels tweets with six emotions, but I noticed a couple of challenges:

- Class Imbalance: Emotions like joy and sadness had way more examples than surprise, which had only 572 samples.

- Token Lengths: When tokenized with TinyLlama’s tokenizer, many tweets were too long, inflating memory and training time.

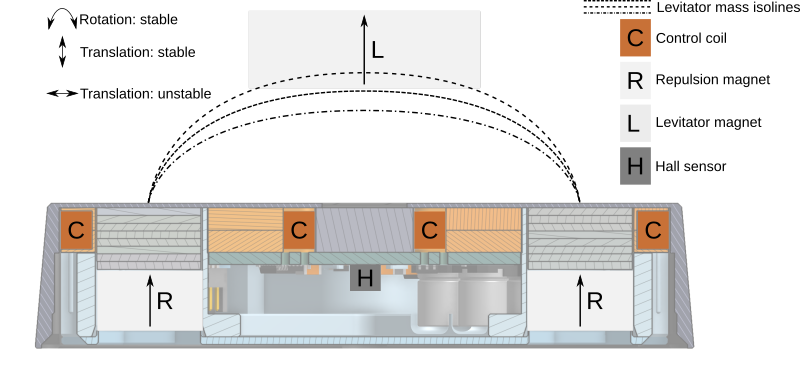

This chart highlights the original imbalance and the balanced dataset.

Token length distribution for the dataset.

To tackle these, I set a maximum sequence length of 64 tokens, as most tweets were concise, and longer ones often had fluff. This meant filtering out longer tweets, which shrank the dataset. To fix the imbalance, I capped each emotion at 572 samples, trimming overrepresented classes. While this balanced the data, it reduced the overall size, which could limit the model’s ability to generalize.
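The two steps above (length filter first, then a per-class cap) can be sketched as follows. This is a minimal illustration, assuming each example is a dict with "text" and "label" keys as in dair-ai/emotion, and that the caller supplies a count_tokens function; filter_and_balance, the fixed seed, and random downsampling are my assumptions, not necessarily what train.py does.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, List


def filter_and_balance(
    examples: List[Dict],
    count_tokens: Callable[[str], int],
    max_len: int = 64,
    cap: int = 572,
    seed: int = 42,
) -> List[Dict]:
    """Drop examples longer than max_len tokens, then keep at most `cap` per label."""
    # Step 1: length filter, applied before balancing
    short = [ex for ex in examples if count_tokens(ex["text"]) <= max_len]

    # Step 2: group the surviving examples by label
    by_label = defaultdict(list)
    for ex in short:
        by_label[ex["label"]].append(ex)

    # Step 3: randomly downsample over-represented labels to `cap`
    rng = random.Random(seed)
    balanced = []
    for label in sorted(by_label):
        group = by_label[label]
        if len(group) > cap:
            group = rng.sample(group, cap)
        balanced.extend(group)

    # Shuffle so labels are interleaved rather than grouped
    rng.shuffle(balanced)
    return balanced
```

With a Hugging Face tokenizer, count_tokens could be something like `lambda t: len(tokenizer(t)["input_ids"])`. Because the length filter runs first, a class that started with exactly 572 examples can end up slightly below the cap, so the classes are only as balanced as the filtered counts allow.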

Prepping the Dataset

I formatted the dataset into the Alpaca format for instruction-based fine-tuning, structuring each example like this:

```json
{
  "instruction": "Classify the emotion in this tweet:",
  "input": "i feel disheartened or defeated",
  "output": "sadness"
}
```
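The JSON record only stores fields; to train a causal language model, each record has to be flattened into a single string. The sketch below assumes the widely used Stanford Alpaca prompt template; the post doesn't say which template train.py uses, and ALPACA_TEMPLATE and build_prompt are hypothetical names.

```python
# Assumed template: the standard Stanford Alpaca prompt with an input field
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)


def build_prompt(record: dict, include_output: bool = True) -> str:
    """Render an Alpaca-style record into one training (or inference) string."""
    prompt = ALPACA_TEMPLATE.format(
        instruction=record["instruction"], input=record["input"]
    )
    # During training the label is appended; at inference the model must generate it
    return prompt + record["output"] if include_output else prompt


example = {
    "instruction": "Classify the emotion in this tweet:",
    "input": "i feel disheartened or defeated",
    "output": "sadness",
}
print(build_prompt(example))
```

One design consequence: the template adds dozens of tokens of its own, so if a 64-token limit is meant to bound the full training sequence, it has to be checked against the rendered string rather than the tweet alone.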

In train.py, I:

- Converted tweets into the Alpaca format.

- Filtered out examples exceeding 64 tokens.

- Balanced the dataset to 572 samples per emotion.

- Saved the results as train_data.json, val_data.json, and test_data.json.

The emotions were mapped as:

- 0: sadness

- 1: joy

- 2: love

- 3: anger

- 4: fear

- 5: surprise
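Converting a raw dataset row into an Alpaca record is mostly a lookup in the mapping above. Here is a minimal sketch, assuming rows carry integer "text"/"label" fields and that each split is written as one JSON array; to_alpaca and save_json are hypothetical helpers, and the exact file layout train.py writes isn't shown in the post.

```python
import json
from typing import Dict, Iterable, List

# Label ids used by dair-ai/emotion, matching the mapping listed above
ID2LABEL = {0: "sadness", 1: "joy", 2: "love", 3: "anger", 4: "fear", 5: "surprise"}
INSTRUCTION = "Classify the emotion in this tweet:"


def to_alpaca(rows: Iterable[Dict]) -> List[Dict]:
    """Map {"text", "label"} rows to Alpaca records whose output is the label name."""
    return [
        {"instruction": INSTRUCTION, "input": row["text"], "output": ID2LABEL[row["label"]]}
        for row in rows
    ]


def save_json(records: List[Dict], path: str) -> None:
    """Write records as a single JSON array (e.g. train_data.json)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)
```

Using the label name ("sadness") rather than the id ("0") as the output lets the model answer in words it already knows from pretraining.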

Training with LoRA

I fine-tuned TinyLlama using LoRA with the PEFT library. Here’s a peek at the core setup from train.py:

```python
from peft import LoraConfig, get_peft_model
from transformers import AutoModelForCausalLM

# Configurations
MODEL_NAME = "TinyLlama/TinyLlama-1.1B-intermediate-step-1431k-3T"
OUTPUT_DIR = "./model"

LORA_R = 8
LORA_ALPHA = 16
LORA_DROPOUT = 0.05
LORA_TARGET_MODULES = ["q_proj", "v_proj"]

BATCH_SIZE = 128
MICRO_BATCH_SIZE = 4
GRADIENT_ACCUMULATION_STEPS = BATCH_SIZE // MICRO_BATCH_SIZE  # 128 // 4 = 32
LEARNING_RATE = 3e-4
TRAIN_STEPS = 300
WARMUP_STEPS = 100
EVAL_STEPS = 50
SAVE_STEPS = 50
LOGGING_STEPS = 10
MAX_SEQ_LENGTH = 64


def prepare_model(model_name):
    # Load the pretrained base model; its weights stay frozen under LoRA
    model = AutoModelForCausalLM.from_pretrained(model_name)

    # Attach rank-8 LoRA adapters to the attention query and value projections
    config = LoraConfig(
        r=LORA_R,
        lora_alpha=LORA_ALPHA,
        target_modules=LORA_TARGET_MODULES,
        lora_dropout=LORA_DROPOUT,
        bias="none",
        task_type="CAUSAL_LM",
    )
    model = get_peft_model(model, config)

    # Print trainable vs. total parameter counts
    model.print_trainable_parameters()
    return model
```

This code loads TinyLlama, applies a LoRA config, and confirms that only a tiny fraction of parameters are trainable, keeping things lightweight. My LoRA settings were:

- Rank (r=8): A low rank keeps the adapters small while leaving enough capacity to learn the task.

- Alpha (16): Scales the LoRA update by alpha / r, here 16 / 8 = 2, for stable adaptation.

- Target Modules: Focused on q_proj and v_proj in the attention mechanism.

- Dropout (0.05): Adds regularization to the adapter layers.
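As a back-of-the-envelope check on "a tiny fraction", here is the trainable-parameter count for rank-8 adapters on q_proj and v_proj. Each adapted linear layer gains A (r × in) and B (out × r), i.e. r · (in + out) weights. The model dimensions below are my reading of TinyLlama's published config (hidden size 2048, 22 layers, 32 query heads sharing 4 key/value heads); treat them as assumptions and confirm them against the checkpoint's config.json.

```python
def lora_params(in_features: int, out_features: int, r: int) -> int:
    """LoRA adds A (r x in) and B (out x r): r * (in + out) trainable weights."""
    return r * (in_features + out_features)


# Assumed TinyLlama dimensions (verify against the model's config.json)
HIDDEN = 2048                 # hidden_size
N_LAYERS = 22                 # num_hidden_layers
N_HEADS = 32                  # num_attention_heads
N_KV_HEADS = 4                # num_key_value_heads (grouped-query attention)
HEAD_DIM = HIDDEN // N_HEADS  # 64
R = 8                         # LORA_R

q_proj = lora_params(HIDDEN, N_HEADS * HEAD_DIM, R)     # 2048 -> 2048
v_proj = lora_params(HIDDEN, N_KV_HEADS * HEAD_DIM, R)  # 2048 -> 256
trainable = N_LAYERS * (q_proj + v_proj)
print(f"trainable LoRA params: {trainable:,}")
```

Under these assumptions that comes to about 1.1 million trainable weights, roughly 0.1% of the 1.1 billion in the frozen model. The v_proj adapters are smaller than the q_proj ones because grouped-query attention gives v_proj only 4 heads' worth of outputs.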

Training used:

- Batch Size: 128, with micro-batches of 4 and 32 gradient accumulation steps to fit my GPU.

- Learning Rate (3e-4): Warmed up over the first 100 of 300 training steps for stable convergence.

- Max Sequence Length: 64, based on EDA.
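Here is a quick sketch of what these numbers imply, assuming a single GPU and the Trainer's default "linear" learning-rate schedule (the post doesn't set lr_scheduler_type, so I'm assuming the default). effective_batch and linear_warmup_lr are illustrative helpers that mirror the linear-warmup-then-linear-decay rule; they are not the Transformers scheduler code itself.

```python
def effective_batch(micro_batch: int, grad_accum: int, num_devices: int = 1) -> int:
    """Number of examples that contribute to each optimizer step."""
    return micro_batch * grad_accum * num_devices


def linear_warmup_lr(step: int, peak_lr: float, warmup: int, total: int) -> float:
    """Linear warmup from 0 to peak_lr, then linear decay back to 0 at `total`."""
    if step < warmup:
        return peak_lr * step / max(1, warmup)
    return peak_lr * max(0.0, (total - step) / max(1, total - warmup))


batch = effective_batch(micro_batch=4, grad_accum=32)  # MICRO_BATCH_SIZE, GRADIENT_ACCUMULATION_STEPS
samples_seen = batch * 300                             # examples processed over TRAIN_STEPS

print("effective batch:", batch)
print("examples seen:", samples_seen)
for s in (0, 50, 100, 200, 300):
    print(f"step {s:3d}: lr = {linear_warmup_lr(s, 3e-4, warmup=100, total=300):.2e}")
```

With these settings the first third of training is warmup, and 300 optimizer steps at 128 examples each means 38,400 examples seen. If the training split holds at most 6 × 572 = 3,432 balanced tweets, that is more than eleven passes over it.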

I used the Trainer class from Transformers with mixed precision (fp16):

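```python
from transformers import (
    AutoTokenizer,
    DataCollatorForLanguageModeling,
    Trainer,
    TrainingArguments,
)


def setup_training_args(output_dir):
    return TrainingArguments(
        output_dir=output_dir,
        per_device_train_batch_size=MICRO_BATCH_SIZE,
        gradient_accumulation_steps=GRADIENT_ACCUMULATION_STEPS,
        warmup_steps=WARMUP_STEPS,
        max_steps=TRAIN_STEPS,
        learning_rate=LEARNING_RATE,
        fp16=True,  # mixed-precision training
        logging_steps=LOGGING_STEPS,
        evaluation_strategy="steps",  # newer Transformers releases rename this to eval_strategy
        eval_steps=EVAL_STEPS,
        save_strategy="steps",
        save_steps=SAVE_STEPS,  # must be a multiple of eval_steps for load_best_model_at_end
        save_total_limit=3,
        load_best_model_at_end=True,
        optim="adamw_torch",
    )


tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
# Llama-style tokenizers often ship without a pad token; reuse EOS so batches can be padded
if tokenizer.pad_token is None:
    tokenizer.pad_token = tokenizer.eos_token

model = prepare_model(MODEL_NAME)
training_args = setup_training_args(OUTPUT_DIR)

# Causal-LM collator: mlm=False copies input_ids into labels and masks padding with -100
data_collator = DataCollatorForLanguageModeling(
    tokenizer=tokenizer,
    mlm=False,
    pad_to_multiple_of=8,
)

# train_dataset and val_dataset are the tokenized splits built earlier in train.py (not shown)
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=val_dataset,
    data_collator=data_collator,
)
trainer.train()
model.save_pretrained(OUTPUT_DIR)  # for a PEFT model this writes the LoRA adapter weights
```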

The fine-tuned model was saved to ./model.

How It Performs

I compared the base and fine-tuned models in compare_performance.ipynb. Here’s what I found:

Fine-tuned model confusion matrix.

| Metric | Base Model | Fine-Tuned Model | Improvement (pp) |
|---|---|---|---|
| Accuracy | 0.00% | 37.34% | +37.34 |
| F1 (Weighted) | 0.00% | 48.27% | +48.27 |
| F1 (Macro) | 0.00% | 39.56% | +39.56 |
| Precision (Weighted) | 0.00% | 72.56% | +72.56 |
| Recall (Weighted) | 0.00% | 37.34% | +37.34 |

- Base Model: Out of the box, it predicted everything as "unknown," scoring zeros across the board.

- Fine-Tuned Model: Reached 37.34% accuracy and 72.56% weighted precision. The gap between precision and recall suggests that when the model commits to an emotion it is usually right, but it still misses many tweets.
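One plausible reading of that precision/recall gap: if generations that don't contain a valid emotion word are mapped to "unknown" (as the base model's results suggest), those outputs count against recall but never against the six classes' precision. Below is a minimal pure-Python sketch of that scoring, assuming a simple first-matching-word parser; parse_label and weighted_scores are hypothetical helpers, and compare_performance.ipynb may compute its metrics differently (for example with scikit-learn).

```python
from collections import Counter
from typing import Dict, List

LABELS = ["sadness", "joy", "love", "anger", "fear", "surprise"]


def parse_label(generated: str) -> str:
    """Return the first known emotion word in the model's output, else 'unknown'."""
    text = generated.strip().lower().replace(",", " ").replace(".", " ")
    for word in text.split():
        if word in LABELS:
            return word
    return "unknown"


def weighted_scores(y_true: List[str], y_pred: List[str]) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall, and F1 over the true labels."""
    support = Counter(y_true)
    predicted = Counter(y_pred)
    n = len(y_true)
    acc = sum(yt == yp for yt, yp in zip(y_true, y_pred)) / n

    prec = rec = f1 = 0.0
    for label, count in support.items():
        tp = sum(yt == yp == label for yt, yp in zip(y_true, y_pred))
        p_c = tp / predicted[label] if predicted[label] else 0.0  # "unknown" never lands here
        r_c = tp / count                                          # "unknown" counts as a miss
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        w = count / n  # weight each class by its share of the true labels
        prec, rec, f1 = prec + w * p_c, rec + w * r_c, f1 + w * f_c
    return {"accuracy": acc, "precision": prec, "recall": rec, "f1": f1}


y_true = ["joy", "joy", "sadness", "sadness"]
y_pred = [parse_label(t) for t in ["Joy", "I'm not sure", "sadness.", "hmm"]]
print(weighted_scores(y_true, y_pred))
```

In this toy case two hits and two "unknown" outputs give perfect precision but only 0.5 recall. Weighted recall also always equals accuracy (each class's recall weighted by its share of the data sums to total hits over total examples), which is why those two rows match in the table.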

What’s Next

This project was a blast, and it showed that TinyLlama can learn emotion classification with LoRA’s efficiency. At 37.34% accuracy, though, there’s plenty of room to grow:

- Tweak Hyperparameters: I’d love to use Weights & Biases sweeps to tune these settings.

- Boost Data: Generating synthetic samples could help avoid tossing out data.

- Track Experiments: Adding logging would make results more reproducible.

- Try New Models: Comparing LoRA with other fine-tuning methods or models could be fun.

Check out the repo, give it a spin, and let me know how it goes!
