Supercharging API Rate Limiting with AIMD - A Deep Dive into Modern System Design


Abstract

APIs have become the lifeblood of modern digital ecosystems. Ensuring scalability, fairness, and resilience is no longer optional—it’s fundamental. Traditional rate limiting techniques offer a static shield against abuse and overload, but they can fall short when faced with dynamic, ever-shifting workloads. Inspired by the adaptive system design strategies from Alex Xu’s acclaimed System Design Interview series, this article explores a powerful, nuanced alternative: building adaptive rate limiters using AIMD (Additive Increase, Multiplicative Decrease). We delve into how AIMD works, why Redis is an ideal backend, and provide a full C# implementation to empower developers building the next generation of cloud-native APIs.

The Static Rate Limiter Problem: Why Conventional Solutions Break Down

Today’s APIs operate in a world where user demand and system capacity fluctuate wildly. Conventional fixed-threshold rate limiters—think "1000 requests per hour per user"—leave us trapped between two unsatisfactory extremes: set limits too low and frustrate legitimate users; set them too high and risk service instability.

Alex Xu emphasizes in System Design Interview that scalable systems must adapt fluidly to real-time load while safeguarding fairness and reliability. Although Xu doesn’t explicitly name AIMD, the adaptive, feedback-driven mindset he advocates resonates strongly with its principles.

Understanding AIMD: The Algorithm that Powers Both TCP and APIs

AIMD—famously used in TCP congestion control—offers a sophisticated, time-tested control mechanism. Here’s how it applies to API rate limiting:

- Additive Increase: Gradually increase the user’s allowed request rate during stable, healthy conditions.

- Multiplicative Decrease: Aggressively reduce the allowed rate when the system detects stress signals such as errors or high latency.

The result is a feedback loop: the allowed rate climbs slowly while the system is healthy and drops sharply on overload, so the limiter keeps probing for available capacity instead of assuming a fixed value.
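To make the two rules concrete, here is a minimal sketch of a single AIMD update written as a pure function, with no Redis involved. The class name `AimdStep` and the parameter values in the usage note are illustrative assumptions, not part of any library.

```csharp
using System;
using System.Collections.Generic;

public static class AimdStep
{
    // One AIMD update: add a fixed step when healthy,
    // multiply by a factor below 1 when overloaded, and clamp to [minRate, maxRate].
    public static int Next(int currentRate, bool overloaded,
                           int minRate, int maxRate, int increaseStep, double decreaseFactor)
    {
        if (overloaded)
            return Math.Max(minRate, (int)(currentRate * decreaseFactor));
        return Math.Min(maxRate, currentRate + increaseStep);
    }

    // Applies a sequence of overload signals and records the rate after each one.
    public static List<int> Trace(int startRate, IEnumerable<bool> overloadSignals,
                                  int minRate, int maxRate, int increaseStep, double decreaseFactor)
    {
        var rates = new List<int>();
        int rate = startRate;
        foreach (bool overloaded in overloadSignals)
        {
            rate = Next(rate, overloaded, minRate, maxRate, increaseStep, decreaseFactor);
            rates.Add(rate);
        }
        return rates;
    }
}
```

For example, starting at 100 with a step of 10 and a decrease factor of 0.5, the signals healthy, healthy, healthy, overloaded, healthy yield the rates 110, 120, 130, 65, 75: three small steps up, one halving, and then the climb resumes.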

Redis as the Backbone: Why Fast Distributed State Matters

Xu advocates using fast, reliable distributed stores for managing shared state in scalable systems. Redis fits this role well: it typically answers requests with sub-millisecond latency, provides atomic counters, and supports Lua scripting for atomic multi-step operations. Keeping the limiter state in Redis gives a fleet of API servers one consistent view of each client's limit and avoids the problem of in-memory counters that diverge across instances.
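As a sketch of what Lua scripting makes possible here, the helper below performs the read, adjust, and write of a per-key rate inside one script, so concurrent API servers cannot interleave between the read and the write. The class name `AtomicAimdScript`, the argument order, and the TTL parameter are assumptions made for illustration; `ScriptEvaluateAsync` is the StackExchange.Redis call for running a script, and running the example requires that package and a reachable Redis server.

```csharp
using StackExchange.Redis;
using System.Threading.Tasks;

public static class AtomicAimdScript
{
    // KEYS[1] = rate key
    // ARGV    = minRate, maxRate, increaseStep, decreaseFactor, overloaded ("1" or "0"), ttlSeconds
    public const string Lua = @"
local minRate = tonumber(ARGV[1])
local maxRate = tonumber(ARGV[2])
local step    = tonumber(ARGV[3])
local factor  = tonumber(ARGV[4])
-- A missing key yields false from GET, so fall back to the minimum rate.
local current = tonumber(redis.call('GET', KEYS[1])) or minRate
local newRate
if ARGV[5] == '1' then
  newRate = math.max(minRate, math.floor(current * factor))
else
  newRate = math.min(maxRate, current + step)
end
redis.call('SET', KEYS[1], newRate, 'EX', ARGV[6])
return newRate";

    // Runs the script against one key and returns the new rate.
    public static async Task<int> UpdateAsync(
        IDatabase db, string redisKey, bool overloadDetected,
        int minRate, int maxRate, int increaseStep, double decreaseFactor, int ttlSeconds)
    {
        RedisResult result = await db.ScriptEvaluateAsync(
            Lua,
            new RedisKey[] { redisKey },
            new RedisValue[]
            {
                minRate, maxRate, increaseStep, decreaseFactor,
                overloadDetected ? 1 : 0, ttlSeconds
            });
        return (int)result;
    }
}
```

Because the script touches a single key, it should also work unchanged on a clustered deployment, where all keys used by one script must live in the same hash slot.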

Building an AIMD Rate Limiter in C#: Practical Implementation

Let’s bring theory into practice with a concrete example:

```csharp
using StackExchange.Redis;
using System;
using System.Threading.Tasks;

public class AimdRateLimiter
{
    private readonly IDatabase _redis;
    private readonly string _prefix;
    private readonly int _minRate;
    private readonly int _maxRate;
    private readonly int _increaseStep;
    private readonly double _decreaseFactor;

    public AimdRateLimiter(IConnectionMultiplexer connection, string prefix, int minRate, int maxRate, int increaseStep, double decreaseFactor)
    {
        _redis = connection.GetDatabase();
        _prefix = prefix;
        _minRate = minRate;
        _maxRate = maxRate;
        _increaseStep = increaseStep;
        _decreaseFactor = decreaseFactor;
    }

    // Adjusts and returns the allowed rate for `key` based on the current overload signal.
    public async Task<int> GetAllowedRateAsync(string key, bool overloadDetected)
    {
        string redisKey = $"{_prefix}:{key}";

        // A missing key converts to 0, so new or expired keys start at the minimum rate.
        int currentRate = (int)(await _redis.StringGetAsync(redisKey));
        if (currentRate == 0) currentRate = _minRate;

        int newRate;
        if (overloadDetected)
        {
            // Multiplicative decrease, never below the floor.
            newRate = Math.Max(_minRate, (int)(currentRate * _decreaseFactor));
        }
        else
        {
            // Additive increase, never above the ceiling.
            newRate = Math.Min(_maxRate, currentRate + _increaseStep);
        }

        // The GET and SET are separate round trips, so concurrent callers can race on the same key.
        // The 5-minute expiry resets idle keys to the minimum rate.
        await _redis.StringSetAsync(redisKey, newRate, TimeSpan.FromMinutes(5));
        return newRate;
    }
}
```

Putting AIMD to Work: Real-World Application and Benefits

Imagine a SaaS API handling 10,000 concurrent users. With an AIMD-based limiter, each user's limit rises gradually while the backend is healthy and is cut quickly when congestion is detected. Operators can feed real-time metrics such as CPU saturation, memory pressure, or error rates into the overload detection logic, so that rate adjustments track backend health.

This echoes Xu’s emphasis on observability and transparency: rate limiting should be data-informed, adjustable, and visible to developers and operators alike.
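One possible way to derive the overload signal from such metrics is a small sliding window over recent request outcomes. The sketch below is an assumption about how detection might look, not a prescribed design: the `OverloadDetector` name, the window size, and both thresholds are hypothetical, and it tracks only error rate and average latency.

```csharp
using System;
using System.Collections.Generic;

// Keeps the last N request outcomes and flags overload when the error rate
// or the average latency in that window exceeds a threshold.
// Not thread-safe: callers must synchronize access if it is shared.
public sealed class OverloadDetector
{
    private readonly int _windowSize;
    private readonly double _maxErrorRate;
    private readonly double _maxAvgLatencyMs;
    private readonly Queue<(bool IsError, double LatencyMs)> _samples =
        new Queue<(bool IsError, double LatencyMs)>();
    private int _errors;
    private double _latencySum;

    public OverloadDetector(int windowSize, double maxErrorRate, double maxAvgLatencyMs)
    {
        if (windowSize <= 0) throw new ArgumentOutOfRangeException(nameof(windowSize));
        _windowSize = windowSize;
        _maxErrorRate = maxErrorRate;
        _maxAvgLatencyMs = maxAvgLatencyMs;
    }

    // Adds one request outcome and evicts the oldest once the window is full.
    public void Record(bool isError, double latencyMs)
    {
        _samples.Enqueue((isError, latencyMs));
        if (isError) _errors++;
        _latencySum += latencyMs;

        if (_samples.Count > _windowSize)
        {
            var oldest = _samples.Dequeue();
            if (oldest.IsError) _errors--;
            _latencySum -= oldest.LatencyMs;
        }
    }

    // True when either threshold is exceeded; an empty window counts as healthy.
    public bool IsOverloaded()
    {
        if (_samples.Count == 0) return false;
        double errorRate = (double)_errors / _samples.Count;
        double avgLatencyMs = _latencySum / _samples.Count;
        return errorRate > _maxErrorRate || avgLatencyMs > _maxAvgLatencyMs;
    }
}
```

The boolean returned by `IsOverloaded()` could then be passed as the `overloadDetected` argument of `GetAllowedRateAsync`. Signals such as CPU saturation or memory pressure would need their own inputs, which this sketch leaves out.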

Pros and Cons of AIMD for API Rate Limiting

Pros:

- Adaptive to real-time conditions: Dynamically balances demand and system capacity.

- Fair resource sharing: Multiplicative decrease cuts high rates by more in absolute terms, which pushes competing clients toward similar shares of capacity.

- Proven and mature: Battle-tested in TCP congestion control with decades of successful deployment.

- Smooth ramp-up: Cautious increases avoid sudden load spikes.

- Fast recovery from overload: Sharp decrease prevents prolonged instability.

Cons:

- Requires accurate overload detection: False positives can unfairly throttle users.

- Slight complexity: More sophisticated than fixed or token bucket limiters.

- Tuning sensitivity: Selecting optimal increase and decrease parameters is non-trivial.

- Possible oscillation: In highly variable systems, rates can fluctuate noticeably.
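One way to soften the impact of false positives and short-lived spikes, offered here as an assumption rather than as part of AIMD itself, is to require several consecutive overload observations before triggering a decrease. The `ConsecutiveOverloadFilter` name and the threshold are hypothetical.

```csharp
using System;

// Reports overload only after a run of consecutive raw overload observations.
// Not thread-safe: intended for a single caller or external synchronization.
public sealed class ConsecutiveOverloadFilter
{
    private readonly int _required;
    private int _streak;

    public ConsecutiveOverloadFilter(int requiredConsecutive)
    {
        if (requiredConsecutive <= 0)
            throw new ArgumentOutOfRangeException(nameof(requiredConsecutive));
        _required = requiredConsecutive;
    }

    // Returns true once the streak reaches the threshold, then resets it,
    // so sustained overload triggers one decrease per `requiredConsecutive` observations.
    public bool Observe(bool rawOverload)
    {
        _streak = rawOverload ? _streak + 1 : 0;
        if (_streak >= _required)
        {
            _streak = 0;
            return true;
        }
        return false;
    }
}
```

The trade-off is slower reaction: a higher threshold filters more noise but delays the decrease when the overload is real, so the value would need tuning alongside the increase step and decrease factor.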

Why AIMD Is the Future of Fair and Resilient Rate Limiting

AIMD elevates rate limiting from a rigid enforcement tool to a dynamic control loop. For modern API architects facing volatile workloads, complex microservices ecosystems, and unpredictable user behavior, AIMD provides a smarter alternative that balances caution and responsiveness.

In the words of Alex Xu: "Design is not just about availability or scale—it’s also about control." AIMD offers precisely that: intelligent, adaptive control—essential for modern, cloud-native system design.

References:

- Alex Xu, System Design Interview – An Insider’s Guide, Volumes 1 & 2, 2020–2022.

- V. Jacobson, Congestion Avoidance and Control, ACM SIGCOMM, 1988.

- Redis Documentation.

![[The AI Show Episode 146]: Rise of “AI-First” Companies, AI Job Disruption, GPT-4o Update Gets Rolled Back, How Big Consulting Firms Use AI, and Meta AI App](https://www.marketingaiinstitute.com/hubfs/ep%20146%20cover.png)

![[DEALS] The Premium Python Programming PCEP Certification Prep Bundle (67% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

.jpg?#)

-Mafia-The-Old-Country---The-Initiation-Trailer-00-00-54.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

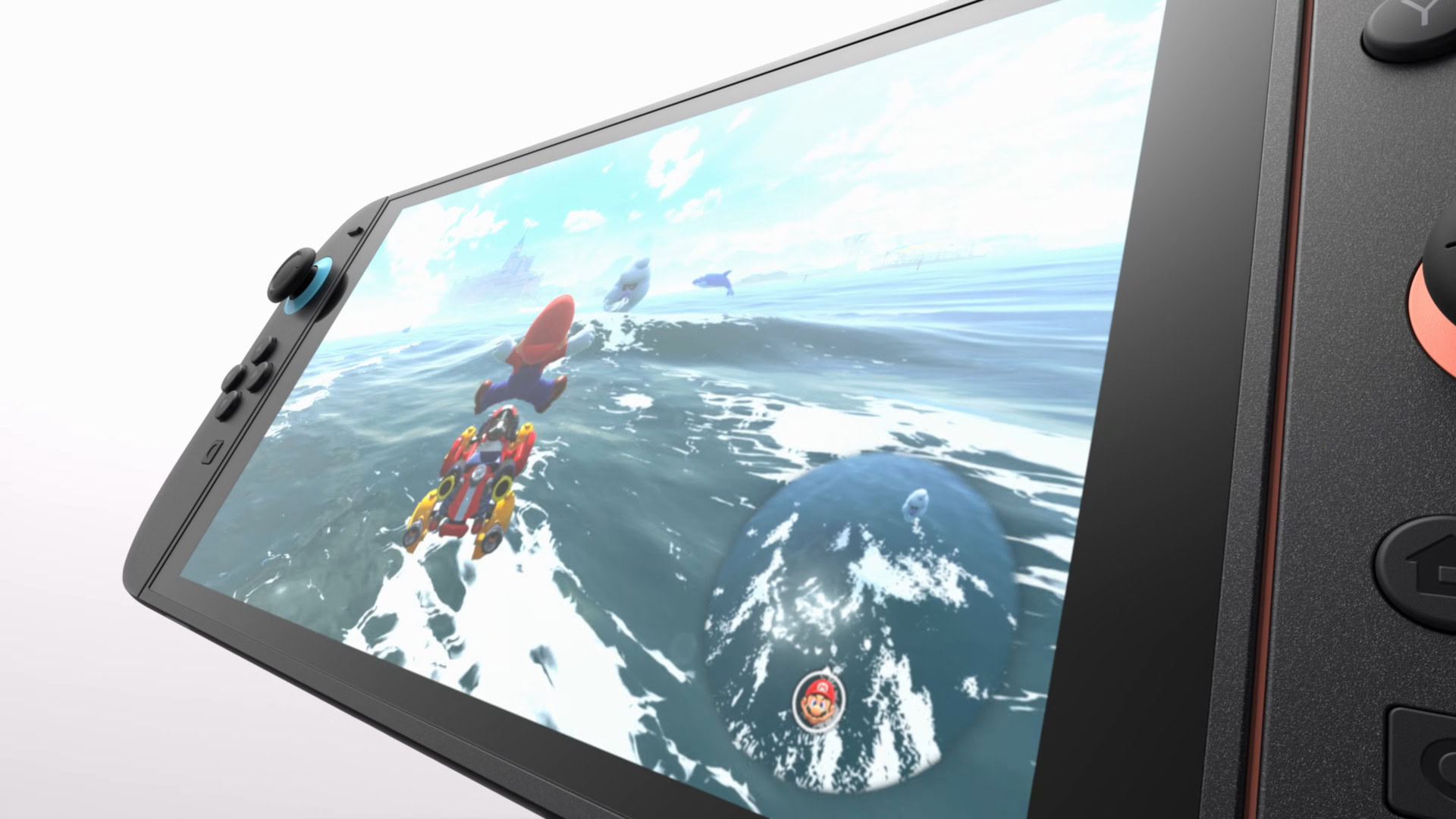

-Nintendo-Switch-2---Reveal-Trailer-00-01-52.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

_Aleksey_Funtap_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

_Sergey_Tarasov_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Apple Shares Official Trailer for 'Stick' Starring Owen Wilson [Video]](https://www.iclarified.com/images/news/97264/97264/97264-640.jpg)

![Beats Studio Pro Wireless Headphones Now Just $169.95 - Save 51%! [Deal]](https://www.iclarified.com/images/news/97258/97258/97258-640.jpg)