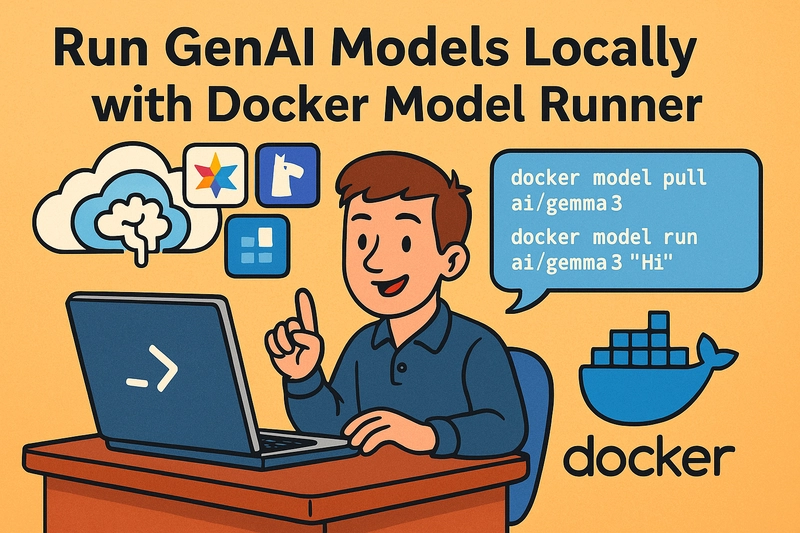

Run GenAI Models Locally with Docker Model Runner

This guide shows you how to run Google's Gemma 3 or a similar LLM locally using Docker Model Runner, a new feature introduced today in Docker Desktop 4.40 for Macs with Apple silicon. The models currently available include:

- Gemma 3 from Google, which is built on the same research and technology as Gemini 2.0

- Llama 3.2 from Meta

- DeepSeek R1 Distill Llama, which is not the DeepSeek model itself; it's a Llama model trained on data generated by DeepSeek-R1

- Phi-4 from Microsoft

- and more (you can see the list of available models here)

Introduction

There are multiple use cases for running an LLM locally, such as:

- Privacy: You don't want to send your data to a cloud provider. It might be your company's policy not to send data to third-party services.

- Internet Connection: You might not have a stable internet connection, or you might be in a location where the internet is restricted.

- Development: You might be developing a product that uses an LLM, and you want to test it locally before deploying it to the cloud.

Either way, you can use the newly available Docker Model Runner rather easily:

docker model pull ai/gemma3

docker model run ai/gemma3 "Hi"

This pulls the Gemma 3 model from Docker Hub and runs it with the prompt "Hi". You can replace the model name with any of the available models, or use a different prompt:

docker model run ai/gemma3 "Write me a Hello World program in Java"
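To send several one-shot prompts in a row, the same command can be wrapped in a small loop. The following is a minimal sketch, not part of Docker's tooling: run_prompts and the DOCKER override variable are hypothetical names introduced here so the loop can be dry-run with echo.

```shell
# DOCKER is a hypothetical override; set DOCKER=echo to print the commands instead of running them.
DOCKER="${DOCKER:-docker}"

# run_prompts MODEL PROMPT... : run each prompt as a separate one-shot call.
run_prompts() {
  local model="$1"; shift
  local prompt
  for prompt in "$@"; do
    printf '>>> %s\n' "$prompt"             # show which prompt is being answered
    "$DOCKER" model run "$model" "$prompt"
  done
}

# Usage: run_prompts ai/gemma3 "Hi" "Write me a Hello World program in Java"
```

Each call is independent, so the model sees every prompt without any context from the ones before it.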

To enter interactive mode and send multiple prompts, run the following command:

docker model run -it ai/gemma3

In interactive mode, you can enter multiple prompts and see the model's responses. It's similar to a chatbot, except that it doesn't remember previous prompts. To fix that, we will run a web-based chatbot later in this guide. But first, let's make sure you have a working Docker Model Runner.

Installation

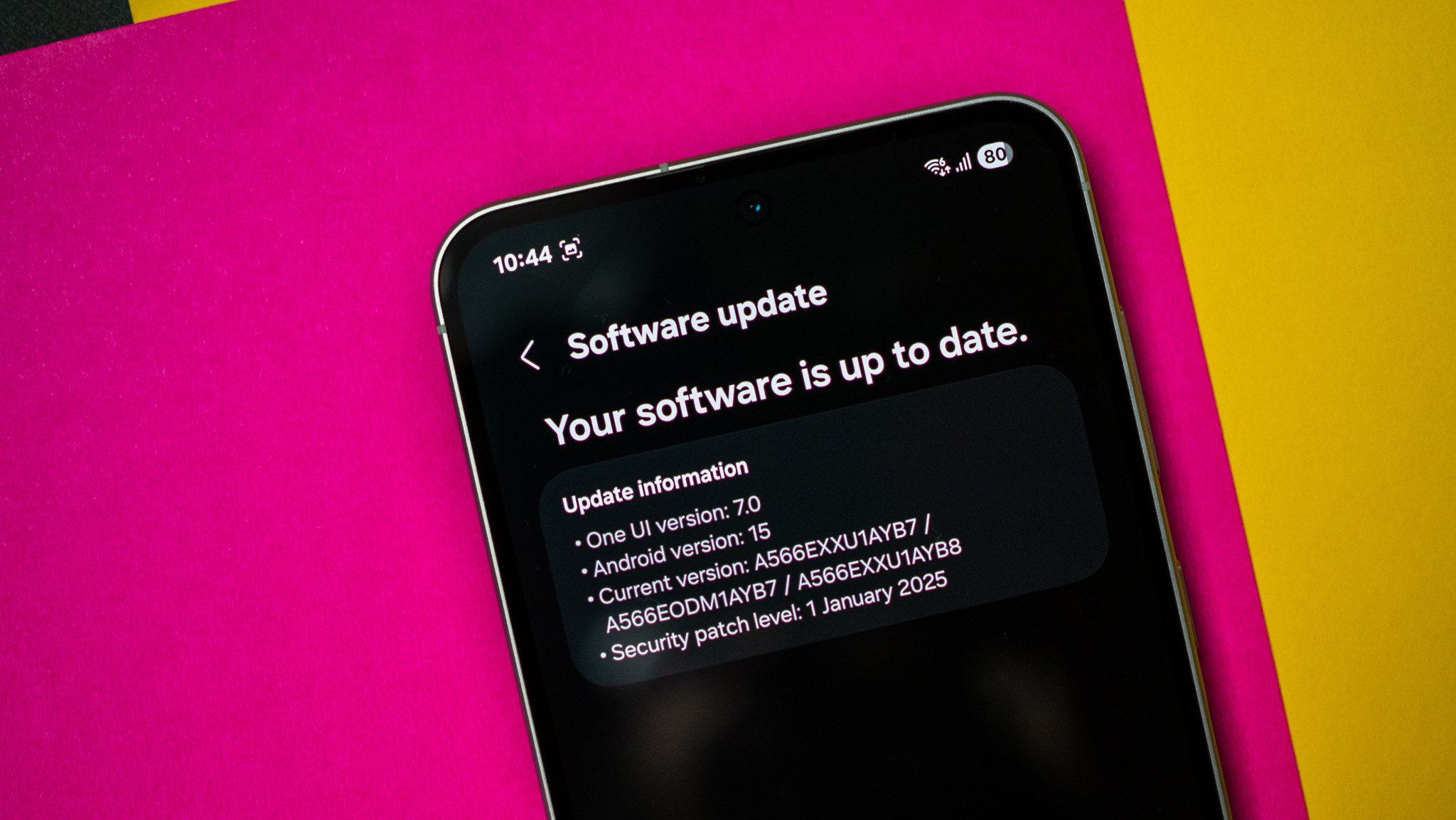

To get access to the Docker Model Runner, you need to install Docker Desktop 4.40 or later. You can download it from the official Docker website. Also make sure the beta feature is enabled in the Docker Desktop settings (it should be enabled by default).

Run the following command to check if the Docker Model Runner is available:

docker model --help

If the docker model command is not available, creating a symbolic link might fix it:

ln -s /Applications/Docker.app/Contents/Resources/cli-plugins/docker-model ~/.docker/cli-plugins/docker-model
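The link command fails if ~/.docker/cli-plugins does not exist yet or if an old link is already in place. A slightly more defensive sketch, assuming the same default paths as above; link_docker_model_plugin is a hypothetical helper name, not something Docker ships:

```shell
# link_docker_model_plugin [SRC] [DEST_DIR]
# Defaults are the paths from the ln command above; pass other paths if your install differs.
link_docker_model_plugin() {
  local src="${1:-/Applications/Docker.app/Contents/Resources/cli-plugins/docker-model}"
  local dest_dir="${2:-$HOME/.docker/cli-plugins}"

  # ln -s cannot create the link if the plugin directory is missing, so create it first.
  mkdir -p "$dest_dir"

  # -f replaces an existing link; -n treats an existing link as a file rather than following it.
  ln -sfn "$src" "$dest_dir/docker-model"
}

# Usage: link_docker_model_plugin && docker model --help
```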

If the help command works, you can see that the following commands are available:

Docker Model Runner

Commands:

inspect Display detailed information on one model

list List the available models that can be run with the Docker Model Runner

pull Download a model

rm Remove a model downloaded from Docker Hub

run Run a model with the Docker Model Runner

status Check if the Docker Model Runner is running

version Show the Docker Model Runner version

We have already used the pull and run commands.
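The read-only subcommands from the list can be chained into a quick health check. This is a minimal sketch under the assumption that each subcommand exits non-zero on failure; check_model and the DOCKER override are hypothetical names used only for illustration.

```shell
# DOCKER is a hypothetical override; set DOCKER=echo to dry-run the function.
DOCKER="${DOCKER:-docker}"

# check_model [MODEL] : confirm the runner is up, list models, then inspect one of them.
check_model() {
  local model="${1:-ai/gemma3}"
  "$DOCKER" model status || return 1     # is the Docker Model Runner running?
  "$DOCKER" model list || return 1       # which models are available to run?
  "$DOCKER" model inspect "$model"       # detailed information on one model
}

# Usage: check_model ai/gemma3
```

When a model is no longer needed, docker model rm ai/gemma3 removes it again.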

To run an application locally against your model, you need to access the model programmatically. The Docker Model Runner exposes a REST API that you can use to interact with the model. So, let's check it out first.

Accessing the Runner Over the Network

If a model is running, you can access it from within a container using Docker's internal DNS resolution:

http://model-runner.docker.internal/

Let's try it with an Ubuntu image:

docker run -it --rm ubuntu bash -c "apt update && apt install -y curl && curl http://model-runner.docker.internal"

The output is something similar to this:

Docker Model Runner

The service is running.

In the Docker Desktop settings, you can also enable host-side TCP support, which means you can access the model from your host machine through a port. If you enable it, the default port is 12434.
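With host-side TCP support enabled, a prompt can be sent from the host with curl. The sketch below rests on two assumptions: that the runner serves an OpenAI-style chat/completions route, and that it sits under the /engines/llama.cpp/v1/ base path used in the chatbot configuration in this guide. chat_payload and ask_model are hypothetical helper names.

```shell
# Assumption: host-side TCP support is enabled on the default port 12434.
BASE_URL="${BASE_URL:-http://localhost:12434/engines/llama.cpp/v1}"

# chat_payload MODEL PROMPT : build a single-message request body.
# No JSON escaping is done, so keep quotes and backslashes out of the prompt.
chat_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' "$1" "$2"
}

# ask_model MODEL PROMPT : POST the body to the runner and print the raw JSON response.
ask_model() {
  curl -sS "$BASE_URL/chat/completions" \
    -H "Content-Type: application/json" \
    -d "$(chat_payload "$1" "$2")"
}

# Usage: ask_model ai/gemma3 "Hi"
```

From inside a container, the same request should work with BASE_URL set to http://model-runner.docker.internal/engines/llama.cpp/v1 instead.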

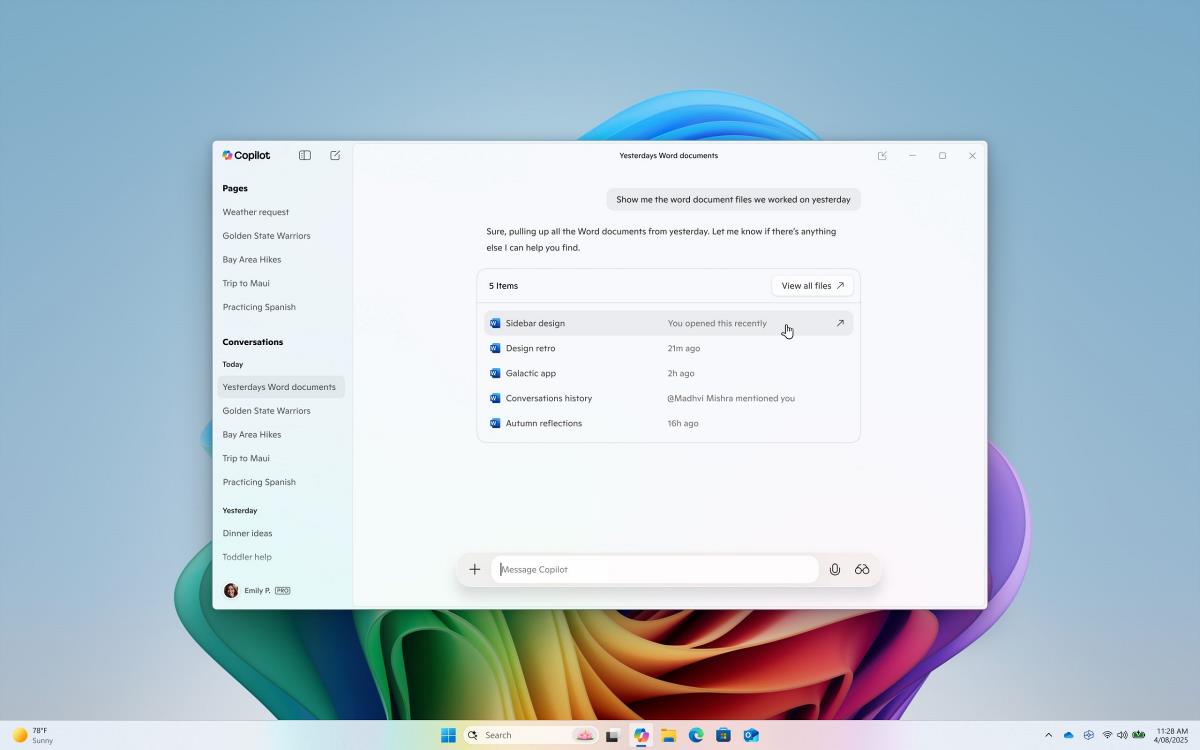

Run a Chatbot

To run an example chatbot, let's clone a repository:

git clone https://github.com/dockersamples/genai-app-demo

cd genai-app-demo

Edit the file backend.env and make it match the following content:

BASE_URL: http://model-runner.docker.internal/engines/llama.cpp/v1/

MODEL: ai/gemma3

API_KEY: ${API_KEY:-ollama}

Then, start the application using Docker Compose:

docker compose up -d

Now you can access the frontend at http://localhost:3000. You can enter a prompt and see the response from the model.
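Chat frontends typically give a model its memory by resending the earlier turns with every request. How this demo app does it internally is not covered here; the following is only a sketch of the general idea, assuming an OpenAI-style messages array. HISTORY, add_message, and conversation_payload are hypothetical names.

```shell
# Comma-separated message objects for the conversation so far.
# No JSON escaping is done, so avoid quotes and backslashes in the text.
HISTORY=""

# add_message ROLE CONTENT : append one turn (role is "user" or "assistant").
add_message() {
  local msg
  msg="$(printf '{"role":"%s","content":"%s"}' "$1" "$2")"
  HISTORY="${HISTORY:+$HISTORY,}$msg"
}

# conversation_payload MODEL : build a request body that carries every turn so far.
conversation_payload() {
  printf '{"model":"%s","messages":[%s]}' "$1" "$HISTORY"
}

add_message user "My name is Ada."
add_message assistant "Nice to meet you, Ada."
add_message user "What is my name?"
conversation_payload ai/gemma3   # prints one body containing all three turns
```

Because the last request includes the first two turns, the model has what it needs to answer the follow-up question.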

Conclusion

The Docker Model Runner represents a significant step forward in democratizing access to powerful Large Language Models. By leveraging Docker Desktop 4.40 and its newly introduced features, users can now effortlessly run models like Gemma 3, Llama 3.2, and DeepSeek R1 locally, addressing critical needs around privacy, internet connectivity, and development workflows.

I let the model running locally on my machine write the previous paragraph by feeding it the rest of my article. And the response was bloody fast!

If you have any questions or need help with running a model locally, feel free to reach out to me on:

- Twitter: @MohammadAliEN

- BlueSky: @aerabi.com

- LinkedIn: @aerabi
