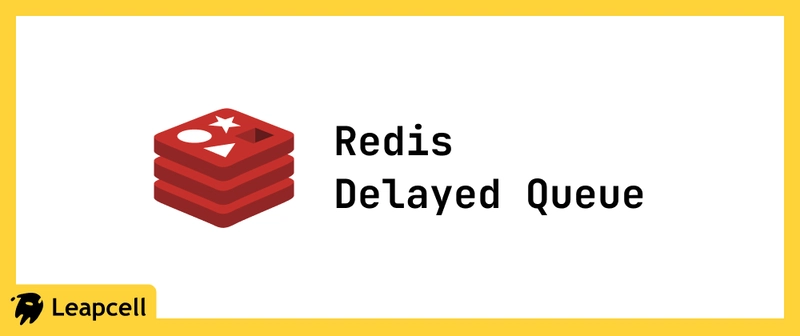

Redis Delayed Queue: Explained Once and for All

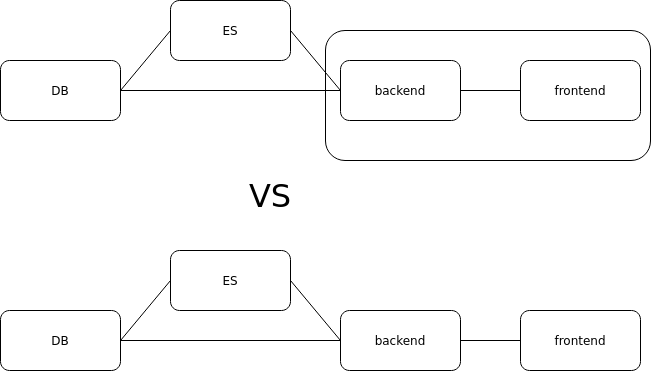


A delayed queue is a message queue whose messages are consumed not immediately but only after a specified delay. Where is this useful in practice?

Practical Scenarios

- When an order payment fails, periodically remind the user.

- Under heavy concurrent user load, defer sending an email to the user by 2 minutes.

Using Redis to Implement a Basic Message Queue

With dedicated message queue middleware such as Kafka and RabbitMQ, a series of fairly involved setup steps is required before messages can be consumed.

For example, in RabbitMQ you must create an Exchange before sending messages, then create a Queue, bind the Queue to the Exchange with routing rules, specify a routing key when sending each message, and set the message headers.

Even when a queue has only a single consumer, we still have to go through this whole process.

For a queue with only one consumer group, Redis makes things much simpler. Redis is not a dedicated message queue, though, and it lacks advanced features such as message acknowledgment (ack). If you have strict reliability requirements for messages, Redis may not be suitable.

Basic Implementation of an Asynchronous Message Queue

Redis’s list data structure is commonly used for asynchronous message queues. You can use rpush or lpush to enqueue items and use lpop or rpop to dequeue.

```
> rpush queue Leapcell_1 Leapcell_2 Leapcell_3
(integer) 3
> lpop queue
"Leapcell_1"
> llen queue
(integer) 2
```

Problem 1: What If the Queue Is Empty?

The client fetches messages using the pop operation and processes them. After processing, it fetches the next message and continues processing. This cycle repeats—that’s the lifecycle of a queue consumer.

However, if the queue is empty, the client falls into an endless loop of pop calls that keep returning nothing. This busy polling is wasteful: it drives up the client's CPU usage and also raises Redis QPS. If dozens of clients poll like this, Redis may see a noticeable increase in slow queries.

The usual fix is to sleep, for example for 1 second, whenever a pop returns nothing. This reduces CPU usage on the client and lowers Redis QPS as well.
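A minimal Java sketch of the sleep-on-empty consumer loop, assuming the pop operation is passed in as a `Supplier<String>` that returns `null` when the queue is empty (with Jedis, `() -> jedis.lpop("queue")` behaves that way). `SleepingPoller` and its parameters are hypothetical names; `maxMessages` exists only so the loop can stop in a test, whereas a real consumer would run until shut down.

```java
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical helper: a polling consumer that sleeps when the queue is empty.
final class SleepingPoller {

    private SleepingPoller() {}

    /**
     * Pops and handles messages until maxMessages have been handled or the thread
     * is interrupted. Returns the number of messages handled.
     *
     * @param pop        pops one message, or returns null when the queue is empty
     * @param handler    processes one message
     * @param idleMillis how long to sleep after an empty pop (1000 in the text)
     */
    static int drain(Supplier<String> pop, Consumer<String> handler,
                     long idleMillis, int maxMessages) {
        int handled = 0;
        while (handled < maxMessages) {
            String msg = pop.get();
            if (msg == null) {
                // Empty queue: back off instead of spinning, which saves client CPU
                // and stops empty pops from inflating Redis QPS.
                try {
                    Thread.sleep(idleMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // preserve the interrupt flag
                    break;
                }
                continue;
            }
            handler.accept(msg);
            handled++;
        }
        return handled;
    }
}
```

The sleep interval is the trade-off here: a longer sleep means fewer wasted calls but a longer wait for messages that arrive mid-sleep.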

Problem 2: Queue Latency

Sleeping solves that problem but adds latency: with a single consumer, a newly arrived message can wait up to 1 second before it is picked up. With multiple consumers the delay shrinks somewhat, because their sleep periods are staggered.

Is there a way to significantly reduce this latency?

Yes—by using blpop/brpop.

The b prefix stands for blocking, i.e., blocking reads.

When the queue is empty, a blocking read puts the waiting call to sleep and wakes it the moment data arrives, which brings message latency down to nearly zero. Replacing lpop/rpop with blpop/brpop therefore solves the latency problem above.
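The same consumer rewritten around a blocking pop, as a sketch. It assumes a hypothetical `BlockingPop` function that takes a timeout in seconds and returns the `[key, value]` pair, or `null` (or an empty list) on timeout; with Jedis, `timeout -> jedis.blpop(timeout, "queue")` should fit this shape. `BlockingConsumer` and `maxIdleRounds` are illustrative names, and `maxIdleRounds` only lets the loop end in a test. There is no client-side sleep: all waiting happens inside the blocking call.

```java
import java.util.List;
import java.util.function.Consumer;

// Hypothetical abstraction over a blocking list pop such as BLPOP.
@FunctionalInterface
interface BlockingPop {
    /** Returns [key, value], or null/empty if nothing arrived within timeoutSeconds. */
    List<String> pop(int timeoutSeconds);
}

final class BlockingConsumer {

    private BlockingConsumer() {}

    /** Handles up to maxMessages messages, stopping after maxIdleRounds timeouts in a row. */
    static int run(BlockingPop pop, Consumer<String> handler,
                   int timeoutSeconds, int maxMessages, int maxIdleRounds) {
        int handled = 0;
        int idleRounds = 0;
        while (handled < maxMessages && idleRounds < maxIdleRounds) {
            // The server parks this call until data arrives or the timeout expires,
            // so the client neither sleeps nor busy-polls.
            List<String> reply = pop.pop(timeoutSeconds);
            if (reply == null || reply.size() < 2) {
                idleRounds++; // timed out with no data
                continue;
            }
            idleRounds = 0;
            handler.accept(reply.get(1)); // index 0 is the list key, index 1 the value
            handled++;
        }
        return handled;
    }
}
```

Using a finite timeout rather than blocking forever keeps the loop in control, so it can check for shutdown between waits.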

Problem 3: Idle Connections Automatically Disconnect

There’s another issue that needs addressing—idle connections.

If a thread stays blocked for too long, its Redis client connection sits idle, and servers (or network components in between) commonly close idle connections to free resources. When that happens, blpop/brpop throws an exception.

Therefore, when writing the client-side consumer logic, be sure to catch exceptions and implement retry logic.
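A sketch of that retry logic, under stated assumptions: the consumer loop is wrapped in a `Runnable` that opens its own connection and throws a `RuntimeException` when the connection drops (Jedis reports this as `JedisConnectionException`, which is unchecked). The capped exponential backoff and the `maxFailures` limit are illustrative choices rather than anything Redis requires, and `ResilientLoop` is a hypothetical name.

```java
// Hypothetical supervisor: re-runs a consumer loop after it fails, backing off between attempts.
final class ResilientLoop {

    private ResilientLoop() {}

    /**
     * Runs body; each time it throws a RuntimeException (for example, because an idle
     * connection was closed), waits and runs it again. A normal return from body counts
     * as a clean stop. Gives up after maxFailures failures. Returns the failure count.
     */
    static int run(Runnable body, long initialBackoffMillis, long maxBackoffMillis, int maxFailures) {
        long backoff = initialBackoffMillis;
        int failures = 0;
        while (failures < maxFailures) {
            try {
                body.run(); // e.g. open a fresh connection, then loop on blpop/brpop
                return failures;
            } catch (RuntimeException e) {
                failures++;
                System.err.println("consumer failed, retrying in " + backoff + " ms: " + e);
                try {
                    Thread.sleep(backoff);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    break;
                }
                backoff = Math.min(backoff * 2, maxBackoffMillis); // exponential, capped
            }
        }
        return failures;
    }
}
```

Doubling the wait keeps a flapping server from being hammered with reconnects, while the cap bounds how long recovery can take once the server is back.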

Handling Distributed Lock Conflicts

What if the client fails to acquire a distributed lock while processing a request?

Typically, there are three strategies to handle lock acquisition failure:

- Directly throw an exception and notify the user to retry later.

- Sleep for a while before retrying.

- Move the request to a delayed queue and retry later.

Directly Throwing a Specific Type of Exception

This approach works well for user-initiated requests. When the user sees an error dialog, they’ll usually read the message and click "Retry," which naturally creates a delay. For better user experience, the frontend code can take over this retry delay instead of relying on the user. Essentially, this strategy abandons the current request and leaves it up to the user to decide whether to reinitiate it.
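A minimal sketch of this first strategy. It assumes the distributed lock is exposed as a `BooleanSupplier` that makes one non-blocking acquire attempt plus a `Runnable` that releases it; `LockConflictException`, `LockFailFast`, `withLockOrThrow`, and `callWithRetry` are hypothetical names. The server side fails fast with a dedicated exception type, and the caller, playing the role the frontend plays in the text, owns the delay between retries.

```java
import java.util.function.BooleanSupplier;
import java.util.function.Supplier;

// Hypothetical exception type meaning "resource busy, try again later".
class LockConflictException extends RuntimeException {
    LockConflictException(String message) { super(message); }
}

final class LockFailFast {

    private LockFailFast() {}

    /** Server side: runs work only if the lock is acquired; otherwise fails fast. */
    static <T> T withLockOrThrow(BooleanSupplier tryLock, Runnable unlock, Supplier<T> work) {
        if (!tryLock.getAsBoolean()) {
            throw new LockConflictException("resource is busy, please retry later");
        }
        try {
            return work.get();
        } finally {
            unlock.run();
        }
    }

    /** Caller side: retries only on LockConflictException, waiting delayMillis between attempts. */
    static <T> T callWithRetry(Supplier<T> call, int maxAttempts, long delayMillis) {
        for (int attempt = 1; ; attempt++) {
            try {
                return call.get();
            } catch (LockConflictException e) {
                if (attempt >= maxAttempts) {
                    throw e; // give up and surface the error to the user
                }
                try {
                    Thread.sleep(delayMillis); // the delay a user would add by clicking "Retry"
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    throw e;
                }
            }
        }
    }
}
```

Because only `LockConflictException` triggers a retry, other failures still propagate immediately instead of being retried blindly.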

Sleep

Sleeping blocks the current message-processing thread and delays every message queued behind it. If conflicts happen frequently or the queue holds many messages, sleep may not be a good fit. And if the lock cannot be acquired because its key is deadlocked (never released), the thread gets stuck entirely and no further messages are processed.

Delayed Queue

This strategy is more suitable for asynchronous message processing. You throw the conflicting request into a different queue to process it later, avoiding immediate contention.
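A sketch of the third strategy, assuming the delayed queue is the zset-based one from the next section, where a message's score is its due time. On a lock conflict the request is rescheduled at "now + delay" instead of blocking the thread. `DelaySink`, `LockOrDefer`, and the injected clock are hypothetical; a Jedis-backed sink might call `jedis.zadd(key, dueAtMillis, payload)`.

```java
import java.util.function.BooleanSupplier;
import java.util.function.Consumer;
import java.util.function.LongSupplier;

// Hypothetical sink for deferred requests; a zset-backed version would use the due time as the score.
@FunctionalInterface
interface DelaySink {
    void schedule(String payload, long dueAtMillis);
}

final class LockOrDefer {

    private LockOrDefer() {}

    /**
     * Processes the request if the lock can be taken right away; otherwise pushes it to
     * the delayed queue to be retried after retryDelayMillis. Returns true if processed now.
     */
    static boolean handle(String request, BooleanSupplier tryLock, Runnable unlock,
                          Consumer<String> process, DelaySink delayQueue,
                          LongSupplier clock, long retryDelayMillis) {
        if (!tryLock.getAsBoolean()) {
            // Do not block this thread: hand the request off and move on to the next message.
            delayQueue.schedule(request, clock.getAsLong() + retryDelayMillis);
            return false;
        }
        try {
            process.accept(request);
            return true;
        } finally {
            unlock.run();
        }
    }
}
```

Injecting the clock keeps the scheduling arithmetic testable; in production it would simply be `System::currentTimeMillis`.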

Implementing a Delayed Queue

We can use Redis's zset data structure, using a timestamp (the time a message becomes due) as each element's score so that elements are sorted by due time. Producers keep adding messages with zadd key score1 value1 .... Consumers use zrangebyscore to query all tasks whose score is at or below the current time, then loop through them and execute them one by one. Alternatively, zrangebyscore key min max withscores limit 0 1 fetches only the earliest due task for consumption.

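```java
import java.util.Collection;
import java.util.UUID;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import redis.clients.jedis.Jedis;

public class RedisDelayQueue {

    private static final Logger log = LoggerFactory.getLogger(RedisDelayQueue.class);

    // A Jedis instance is not thread-safe. Here the main thread finishes with it before the
    // consumer thread starts; multi-threaded consumers should each take a connection from a JedisPool.
    private final Jedis jedis;

    public RedisDelayQueue(Jedis jedis) {
        this.jedis = jedis;
    }

    public void redisDelayQueueTest() {
        String key = "delay_queue";

        // In real applications, it is recommended to use a business ID and a randomly generated unique ID as the value.
        // The unique ID ensures message uniqueness, and the business ID avoids carrying too much data in the value.
        String orderId1 = UUID.randomUUID().toString();
        jedis.zadd(key, System.currentTimeMillis() + 5000, orderId1); // score = due time (now + 5 s)

        String orderId2 = UUID.randomUUID().toString();
        jedis.zadd(key, System.currentTimeMillis() + 5000, orderId2);

        new Thread(() -> {
            while (!Thread.currentThread().isInterrupted()) {
                // Get only the earliest due item (non-destructive read): scores in [0, now], offset 0, count 1.
                // Jedis 3.x returns a Set here and Jedis 4.x+ a List, so Collection covers both.
                Collection<String> resultList =
                        jedis.zrangeByScore(key, 0, System.currentTimeMillis(), 0, 1);

                if (resultList.isEmpty()) {
                    try {
                        Thread.sleep(1000); // nothing is due yet
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        break;
                    }
                    continue;
                }

                String orderId = resultList.iterator().next();
                // Remove the fetched item; only the caller whose ZREM succeeds processes it.
                if (jedis.zrem(key, orderId) > 0) {
                    log.info("orderId = {}", orderId);
                    try {
                        handleMsg(orderId);
                    } catch (RuntimeException e) {
                        // One faulty task must not kill the consumer loop.
                        log.error("failed to handle {}", orderId, e);
                    }
                }
            }
        }).start();
    }

    public void handleMsg(String msg) {
        System.out.println(msg);
    }
}
```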

The implementation above also works when several consumer threads run it concurrently, each with its own connection. Suppose there are two threads, T1 and T2, and possibly more. Only one of them ends up handling a given message:

- T1, T2, and the other threads call zrangebyscore and all retrieve message A.

- T1 issues zrem to delete message A. Redis executes commands one at a time, so the zrem calls from T2 and the others run only after T1's has completed.

- T1 deletes message A successfully and processes it.

- T2 and the others then try to delete message A but fail, because it has already been removed, so they give up on it.
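The claim step in this sequence can be modeled locally. The sketch below is an in-memory stand-in, not Redis: `ConcurrentSkipListSet.remove` plays the role of `ZREM`, since both atomically remove an element and report whether this particular caller removed it. `ClaimRace` is a hypothetical name; it starts several threads that race for the same message and counts how many of them win.

```java
import java.util.concurrent.ConcurrentSkipListSet;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// In-memory model of the "read, then claim with ZREM" pattern; no Redis involved.
final class ClaimRace {

    private ClaimRace() {}

    /** Starts `threads` workers that all try to claim `message`; returns how many succeeded. */
    static int race(String message, int threads) {
        ConcurrentSkipListSet<String> delayQueue = new ConcurrentSkipListSet<>();
        delayQueue.add(message);

        AtomicInteger winners = new AtomicInteger();
        CountDownLatch start = new CountDownLatch(1);
        CountDownLatch done = new CountDownLatch(threads);

        for (int i = 0; i < threads; i++) {
            new Thread(() -> {
                try {
                    start.await();
                    // A worker that still sees the message (like ZRANGEBYSCORE returning it)...
                    if (delayQueue.contains(message)
                            // ...tries to claim it; only one atomic remove can succeed (like ZREM returning 1).
                            && delayQueue.remove(message)) {
                        winners.incrementAndGet(); // the winner would call handleMsg here
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    done.countDown();
                }
            }).start();
        }
        start.countDown(); // release all workers at once
        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return winners.get();
    }
}
```

However many threads race, the count should always be 1, which is exactly the property the zrem check in the consumer loop relies on.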

Also, be sure to add exception handling to handleMsg, so that a single faulty task doesn’t cause the whole processing loop to crash.

Further Optimization

In the algorithm above, the same task may be fetched by several processes, yet only one of them succeeds in deleting it with zrem; the others fetched it in vain, which is wasteful. To improve this, use a Lua script that combines zrangebyscore and zrem into a single atomic operation on the server side. That way, processes competing for the same task no longer make wasted fetches.

Use Lua Script for Further Optimization

The Lua script looks for a due message (one whose score is at or below the current time), removes it, and returns it if the removal succeeded. Otherwise, it returns an empty string:

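```java
import java.util.Collections;

// Atomically fetches and removes the earliest due member; returns '' if nothing is due.
String luaScript = "local resultArray = redis.call('zrangebyscore', KEYS[1], 0, ARGV[1], 'limit', 0, 1)\n" +
        "if #resultArray > 0 then\n" +
        "  if redis.call('zrem', KEYS[1], resultArray[1]) > 0 then\n" +
        "    return resultArray[1]\n" +
        "  else\n" +
        "    return ''\n" +
        "  end\n" +
        "else\n" +
        "  return ''\n" +
        "end";

// jedis and key are as in the previous example; Jedis signature: eval(String script, List<String> keys, List<String> args)
Object result = jedis.eval(luaScript,
        Collections.singletonList(key),
        Collections.singletonList(String.valueOf(System.currentTimeMillis())));
String orderId = (String) result; // an empty string means no message is due yet
```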

Advantages of Redis-Based Delayed Queues

Redis offers the following advantages when used to implement delayed queues:

- Redis zset provides high-performance score-based sorting.

- Redis operates in memory, making it extremely fast.

- Redis supports clustering. When there are many messages, a cluster can improve message processing throughput and availability.

- Redis supports persistence. After a failure, data can be recovered from AOF or RDB files, which improves reliability.

Disadvantages of Redis-Based Delayed Queues

However, Redis-based delayed queues also have some limitations:

- Message persistence and reliability are still a concern. While Redis supports persistence, it's not as reliable as a dedicated MQ.

- No retry mechanism – If an exception occurs during message processing, Redis doesn’t provide a built-in retry mechanism. You must implement this yourself, including managing retry counts.

- No ACK mechanism – For example, if a client retrieves and deletes a message, but crashes during processing, the message will be lost. In contrast, message queues (MQ) require an acknowledgment to confirm successful processing before removing a message.
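Because retries have to be built by hand, here is one possible policy, sketched under assumptions: the failure count travels with the message (for example, encoded in the zset member), a failed message is re-added with a later score, and messages that exhaust their attempts go to a dead-letter list. The doubling delay, the cap, and the `RetryPolicy` name are illustrative choices, not part of Redis.

```java
import java.util.OptionalLong;

// Hypothetical retry policy for a zset-based delayed queue.
final class RetryPolicy {

    private final int maxAttempts;
    private final long baseDelayMillis;
    private final long maxDelayMillis;

    RetryPolicy(int maxAttempts, long baseDelayMillis, long maxDelayMillis) {
        this.maxAttempts = maxAttempts;
        this.baseDelayMillis = baseDelayMillis;
        this.maxDelayMillis = maxDelayMillis;
    }

    /**
     * Given how many attempts have failed so far (1 after the first failure) and the current
     * time, returns the score (due time) to re-add the message with, or empty if it should
     * go to a dead-letter list instead.
     */
    OptionalLong nextDueAt(int failedAttempts, long nowMillis) {
        if (failedAttempts >= maxAttempts) {
            return OptionalLong.empty(); // out of retries: park it for manual inspection
        }
        // base, 2*base, 4*base, ... (shift bounded to avoid overflow), then capped at maxDelayMillis
        int shift = Math.min(Math.max(failedAttempts - 1, 0), 30);
        long delay = baseDelayMillis << shift;
        return OptionalLong.of(nowMillis + Math.min(delay, maxDelayMillis));
    }
}
```

With `new RetryPolicy(3, 1000, 60000)`, the first failure reschedules the message 1 s later, the second 2 s later, and the third sends it to the dead-letter list.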

If message reliability is critical, it’s recommended to use a dedicated MQ instead.

Implementing Delayed Queues with Redisson

Redisson's distributed delayed queue, RDelayedQueue, works on top of an RQueue: each element is held back for its specified delay and then transferred into the destination queue. This can be used to implement message delivery strategies with increasing delays, such as incremental or exponential backoff.

```java
// Assumption: redisson is an existing RedissonClient instance
RQueue<String> destinationQueue = ...
RDelayedQueue<String> delayedQueue = redisson.getDelayedQueue(destinationQueue);

// Move "msg1" into destinationQueue after 10 seconds
delayedQueue.offer("msg1", 10, TimeUnit.SECONDS);

// Move "msg2" into destinationQueue after 1 minute
delayedQueue.offer("msg2", 1, TimeUnit.MINUTES);
```

Destroy the delayed queue object once it is no longer needed. Skipping destroy() is acceptable only when the Redisson client itself is being shut down.

```java
RDelayedQueue<String> delayedQueue = ...
delayedQueue.destroy();
```

Isn’t it convenient?

We are Leapcell, your top choice for hosting backend projects.

Leapcell is the Next-Gen Serverless Platform for Web Hosting, Async Tasks, and Redis:

Multi-Language Support

- Develop with Node.js, Python, Go, or Rust.

Deploy unlimited projects for free

- pay only for usage — no requests, no charges.

Unbeatable Cost Efficiency

- Pay-as-you-go with no idle charges.

- Example: $25 supports 6.94M requests at a 60ms average response time.

Streamlined Developer Experience

- Intuitive UI for effortless setup.

- Fully automated CI/CD pipelines and GitOps integration.

- Real-time metrics and logging for actionable insights.

Effortless Scalability and High Performance

- Auto-scaling to handle high concurrency with ease.

- Zero operational overhead — just focus on building.

Explore more in the Documentation!

Follow us on X: @LeapcellHQ
